
Explore network plugins for Kubernetes: CNI explained

With Container Network Interface plugins, IT teams can create and deploy network options for diverse Kubernetes environments. Learn how CNI works and compare top network plugins.

Extensibility and optimization are essential to any enterprise platform. The ability to tailor a platform to specific application or business needs without making permanent changes creates opportunities for innovation and enhancement while prolonging the platform's life.

Kubernetes versions 1.25 and later apply this principle to networking through the Container Network Interface (CNI), which lets them work with varied network technologies and topologies. Kubernetes does not implement pod networking itself. It requires a network plugin to provide pod connectivity and implement the Kubernetes network model.

Take a closer look at how CNI works with Kubernetes and compare popular network plugins currently available for Kubernetes, including Calico, Flannel, Weave Net, Cilium and Multus.

What is Container Network Interface?

Container technology uses virtual instances to compose, operate and scale highly modular enterprise workloads. Because containers rely on intercommunication over enterprise and cloud networks, enhancing and optimizing network management can dramatically improve container and application performance.

The CNI specification -- version 1.0.0 at time of publication -- is a formal document that details a vendor- and technology-agnostic networking approach for Linux application containers. With the CNI plugin model, IT admins can create and deploy a wide range of network options for their containers, enabling them to use Kubernetes in diverse networking environments.

The CNI specification defines five aspects of a container networking environment:

- the format used to define a network configuration, including the network name, the CNI version and the list of plugins to run along with their settings;

- the protocol that container runtimes, such as containerd and CRI-O, use to invoke network plugins and pass them parameters;

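- the procedure a runtime follows to execute the plugins in a configuration, running them in order when a container is added and in reverse order when it is deleted;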

- the procedure for plugins to delegate functionality, such as IP address management (IPAM), to other plugins; and

- the data type definitions that enable plugins to return results or pass data to the container runtime.
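To make the configuration format concrete, the following minimal sketch builds a CNI network configuration list in Python and prints it as JSON. It assumes the project's reference plugins: `bridge` as the main plugin, `host-local` as the delegated IPAM plugin (an example of one plugin handing a task to another) and `portmap` as a chained plugin. The network name, bridge name `cni0` and subnet are illustrative assumptions, not required values.

```python
import json


def bridge_conflist(name, subnet):
    """Build a CNI network configuration list ("conflist") as a Python dict.

    The bridge name "cni0" and the caller-supplied subnet are illustrative.
    """
    return {
        "cniVersion": "1.0.0",
        "name": name,
        "plugins": [
            {
                # Main plugin: creates a Linux bridge and a veth pair per container.
                "type": "bridge",
                "bridge": "cni0",
                "isGateway": True,
                "ipMasq": True,
                # Delegated IPAM plugin: hands out addresses from the subnet.
                "ipam": {
                    "type": "host-local",
                    "ranges": [[{"subnet": subnet}]],
                    "routes": [{"dst": "0.0.0.0/0"}],
                },
            },
            {
                # Chained plugin: applies port mappings the runtime passes in.
                "type": "portmap",
                "capabilities": {"portMappings": True},
            },
        ],
    }


if __name__ == "__main__":
    # Runtimes such as containerd commonly read files like this from /etc/cni/net.d/.
    print(json.dumps(bridge_conflist("example-net", "10.22.0.0/16"), indent=2))
```

The plugins listed under `plugins` run in order on ADD, and each later plugin receives the previous plugin's result.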

A pod or container has no usable network interface when it is first created. A CNI plugin inserts an interface, often one end of a virtual Ethernet (veth) pair, into the container's network namespace and connects it to the host. The plugin also assigns IP addresses, configures routes and performs other tasks so that the container can communicate on the network.
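The runtime side of this exchange is small. Under the CNI specification, a runtime executes the plugin binary, passes parameters such as the command, container ID, network namespace path and interface name in environment variables, writes the plugin's JSON configuration to standard input and reads a JSON result from standard output. The sketch below shows that ADD call in Python. It is a simplified illustration, not how containerd or CRI-O are implemented (they use the `libcni` library and also handle conflists, `prevResult` and DEL); the plugin path `/opt/cni/bin` is a common location but an assumption here.

```python
import json
import os
import subprocess


def cni_env(command, container_id, netns_path, ifname, plugin_dirs):
    """Environment variables the CNI spec defines for a plugin invocation."""
    env = dict(os.environ)
    env.update({
        "CNI_COMMAND": command,           # ADD, DEL, CHECK or VERSION
        "CNI_CONTAINERID": container_id,
        "CNI_NETNS": netns_path,          # e.g. /var/run/netns/<name>
        "CNI_IFNAME": ifname,             # interface to create inside the namespace
        "CNI_PATH": os.pathsep.join(plugin_dirs),  # where plugin binaries live
        # CNI_ARGS (optional extra arguments) is omitted in this sketch.
    })
    return env


def cni_add(plugin_binary, net_config, container_id, netns_path,
            ifname="eth0", plugin_dirs=("/opt/cni/bin",)):
    """Run one plugin's ADD command and return its parsed JSON result.

    net_config is a single plugin's config, including cniVersion, name and type.
    """
    proc = subprocess.run(
        [plugin_binary],
        input=json.dumps(net_config).encode(),
        env=cni_env("ADD", container_id, netns_path, ifname, plugin_dirs),
        capture_output=True,
        check=True,  # a failing plugin exits non-zero
    )
    # On success the result typically lists "interfaces", "ips" and "routes".
    return json.loads(proc.stdout)
```

Actually calling `cni_add` requires root privileges, an installed plugin binary and an existing network namespace (for example, one created with `ip netns add`).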

This process is surprisingly complex. Cluster networking for containers and Kubernetes must handle four distinct types of communication: pod-to-pod, pod-to-service, external-to-service and tightly coupled container-to-container.

Because Kubernetes shares machines among applications, it must prevent two applications from claiming the same ports, and coordinating port assignments across many systems is difficult. Kubernetes sidesteps this by giving every pod its own IP address. Rather than implement that network model internally, it delegates the work to plugins, which reduces the burden on Kubernetes itself.
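Kubernetes avoids port coordination by giving each pod its own IP address, so a listening socket is identified by a distinct (IP, port) pair. The toy Python model below illustrates why that removes conflicts. The workload names and addresses are hypothetical, and the function is an illustration of the reasoning, not part of any Kubernetes API.

```python
def find_port_conflicts(bindings):
    """Return (ip, port) pairs that more than one workload tries to bind.

    bindings maps a workload name to the (ip, port) it listens on.
    """
    seen, conflicts = set(), set()
    for endpoint in bindings.values():
        if endpoint in seen:
            conflicts.add(endpoint)
        seen.add(endpoint)
    return conflicts


# Shared host IP: two web servers both want port 80 on the same address.
shared = {"web-a": ("192.168.1.10", 80), "web-b": ("192.168.1.10", 80)}
# IP per pod: each pod has its own address, so both can keep port 80.
per_pod = {"web-a": ("10.244.1.5", 80), "web-b": ("10.244.1.6", 80)}

print(find_port_conflicts(shared))   # {('192.168.1.10', 80)}
print(find_port_conflicts(per_pod))  # set()
```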

CNI is not a Kubernetes plugin, but rather the specification that defines how plugins should communicate and interoperate with the container runtime. CNI plugins can be created and developed in any manner, and they often reflect the standards of development, testing and delivery found in other software projects. However, plugins must ultimately adhere to the CNI specification to be used with Kubernetes in a working container environment.

How does CNI relate to Kubernetes?

CNI is not native to Kubernetes; it is a separate Cloud Native Computing Foundation project. Developers using the CNI standard can create network plugins that work with a variety of container runtimes. CNI networks can use an encapsulated (overlay) model, such as Virtual Extensible LAN (VXLAN), or an unencapsulated model that routes pod traffic natively, often distributing routes with Border Gateway Protocol (BGP).
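One practical difference between the two models is packet overhead. VXLAN wraps each pod packet in an outer IPv4 header (20 bytes), a UDP header (8 bytes) and a VXLAN header (8 bytes), and the encapsulated frame carries its own inner Ethernet header (14 bytes). Those 50 bytes come out of the underlay's MTU. The small Python calculation below assumes an IPv4 underlay with a 1,500-byte MTU; IP-in-IP, another overlay option, adds a single 20-byte IPv4 header.

```python
# Header bytes added per packet by common encapsulation modes (IPv4 underlay).
VXLAN_OVERHEAD = 20 + 8 + 8 + 14  # outer IPv4 + UDP + VXLAN + inner Ethernet = 50
IPIP_OVERHEAD = 20                # one extra IPv4 header


def pod_mtu(underlay_mtu, overhead=0):
    """Largest pod MTU that still fits in the underlay after encapsulation."""
    return underlay_mtu - overhead


print(pod_mtu(1500, VXLAN_OVERHEAD))  # 1450
print(pod_mtu(1500, IPIP_OVERHEAD))   # 1480
print(pod_mtu(1500))                  # 1500: unencapsulated routing adds no header
```

This is why VXLAN-based pod networks commonly run with a 1,450-byte MTU on a standard 1,500-byte network.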

Kubernetes is just one of the container platforms and runtimes that use the CNI specification. Others include the following:

- rkt (since discontinued)

- CRI-O

- OpenShift

- Cloud Foundry

- Apache Mesos

- Amazon Elastic Container Service

- Singularity

- OpenSVC

Dozens of third-party plugins adhere to the CNI standard, including Calico, Cilium, Romana, Silk and Linen. The CNI project page on GitHub maintains a comprehensive list of CNI-compatible projects.

Benefits and drawbacks of CNI plugins

The following are some of the benefits of using a CNI plugin architecture:

Software extensibility. With CNI plugins, developers don't need to build software for every possible deployment situation or environment. Instead, businesses can simply choose the plugin that best fits their requirements.

Avoiding lock-in. Ideally, a plugin approach prevents vendor lock-in and enables businesses to use plugins created by anyone.

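Easy changes. When deployment needs or environments change, businesses can adapt the platform by installing different CNI plugins rather than modifying Kubernetes itself, although migrating a running cluster to a new plugin still requires careful planning.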

However, CNI plugins are not perfect, and any plugin-based platform can also present potential problems. Businesses should consider these tradeoffs before deploying any plugin-dependent platform:

Unexpected bugs. Platform developers don't always test or validate plugins, which can lead to flaws such as bugs, poor performance and security problems. Any testing or validation of a software platform should account for its intended plugins, including re-validating the platform for stability and performance when a plugin is updated or replaced.

More moving parts. Admins need to follow updates and patches not only for platforms such as Kubernetes, but also for any plugins, which can complicate software deployment and management.

Changing standards. Plugin standards such as CNI are not permanent, and revisions to a standard can require changes to plugins. This, in turn, might demand new or updated plugins that add complexity and labor for the business. Likewise, there are no guarantees of backward compatibility or that vital plugins will be updated promptly to meet new standards. All of this can be disruptive for businesses.

Compare Kubernetes CNI plugins

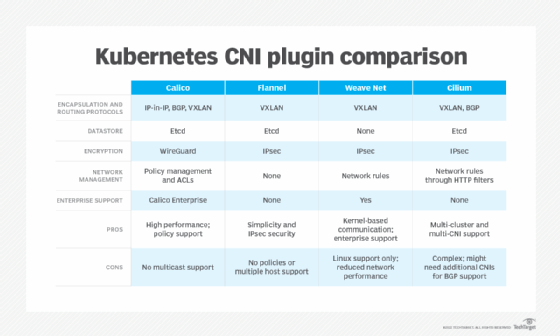

There are numerous CNI plugins for Kubernetes. Each performs similar tasks and is typically deployed as a DaemonSet that runs an agent on every node, but plugins differ in their design and in their approach to encapsulation, routing, datastores, encryption and support. Organizations should weigh each of these factors when choosing a Kubernetes CNI plugin.

Calico

Calico is a popular open source CNI plugin designed for flexibility, network performance, advanced network administration, and connection visibility between pods and hosts.

Calico can route pod traffic without encapsulation, using BGP to share routes across the underlay network, or it can encapsulate traffic in an IP-in-IP or VXLAN overlay. Unencapsulated BGP routing avoids per-packet encapsulation overhead, which can improve container network performance. Calico can also encrypt pod traffic between nodes with WireGuard.
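In unencapsulated mode, each node advertises the pod address blocks it owns over BGP, and other nodes forward packets for those blocks straight to that node's IP address. The Python sketch below models the resulting routing decision as a longest-prefix match. The node and pod addresses are hypothetical; the /26 blocks mirror Calico's default IPv4 block size. It is a simplified model of a routing table, not Calico code.

```python
import ipaddress

# Routes a node might learn over BGP: each remote node advertises the pod
# blocks it owns. All addresses here are illustrative assumptions.
ROUTES = {
    ipaddress.ip_network("10.244.0.0/26"): "192.168.0.10",
    ipaddress.ip_network("10.244.0.64/26"): "192.168.0.11",
    ipaddress.ip_network("10.244.0.128/26"): "192.168.0.12",
    ipaddress.ip_network("0.0.0.0/0"): "192.168.0.1",  # default gateway
}


def next_hop(dst):
    """Pick the most specific (longest-prefix) route that contains dst."""
    addr = ipaddress.ip_address(dst)
    matches = [net for net in ROUTES if addr in net]
    best = max(matches, key=lambda net: net.prefixlen)
    return ROUTES[best]


print(next_hop("10.244.0.70"))  # 192.168.0.11
print(next_hop("8.8.8.8"))      # 192.168.0.1
```

Because the packet is forwarded as-is to the owning node, no encapsulation header is added along the way.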

For administration, tracing and debugging, Calico provides network policy management and access control lists (ACLs). Its network policies use allow/deny rules that admins can create as needed and apply to pod ingress and egress traffic. Calico offers enterprise support through Calico Enterprise.
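Allow/deny matching is easiest to see as an ordered rule list in which the first matching rule decides and unmatched traffic is dropped. The Python sketch below models that idea for ingress traffic to a pod selected by a policy. The `Rule` class and `evaluate_ingress` function are hypothetical and far simpler than Calico's policy engine, which adds label selectors, ordering across policies and further rule actions.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass
class Rule:
    action: str                 # "Allow" or "Deny"
    source: str                 # source CIDR, e.g. "10.0.0.0/8"
    port: Optional[int] = None  # destination port; None matches any port

    def matches(self, src, port):
        return ip_address(src) in ip_network(self.source) and (
            self.port is None or self.port == port
        )


def evaluate_ingress(rules, src, port):
    """Return the action of the first matching rule; deny if none match."""
    for rule in rules:
        if rule.matches(src, port):
            return rule.action
    return "Deny"  # traffic not explicitly allowed is dropped


rules = [
    Rule("Deny", "10.0.5.0/24"),            # block one subnet entirely
    Rule("Allow", "10.0.0.0/8", port=443),  # then allow HTTPS from the rest of 10/8
]

print(evaluate_ingress(rules, "10.0.5.7", 443))  # Deny
print(evaluate_ingress(rules, "10.0.9.7", 443))  # Allow
print(evaluate_ingress(rules, "10.0.9.7", 22))   # Deny
```

Rule order matters: swapping the two rules would let 10.0.5.7 reach port 443.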

Flannel

Flannel is a mature and stable open source CNI plugin designed around an overlay network model based on VXLAN and suitable for most Kubernetes use cases.

Flannel creates and manages subnets with a single binary agent, flanneld, that runs on each node. It assigns each Kubernetes cluster node its own subnet from a cluster-wide address range, and pods on that node receive internal IP addresses from that subnet. Flannel stores these host mappings and other configuration items in etcd or through the Kubernetes API. Encryption is supported through IPsec.
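The per-node subnet scheme amounts to carving a large pod address range into equal slices and leasing one slice to each node. The Python sketch below shows that arithmetic with the standard `ipaddress` module. The 10.244.0.0/16 range with a /24 per node is a commonly used Flannel setup but is an assumption here, and `allocate_node_subnets` is a hypothetical helper, not Flannel's code, which also persists and renews leases.

```python
import ipaddress


def allocate_node_subnets(cluster_cidr, nodes, subnet_len=24):
    """Lease one subnet of the cluster-wide pod CIDR to each node.

    Node names are assumed to be unique.
    """
    subnets = ipaddress.ip_network(cluster_cidr).subnets(new_prefix=subnet_len)
    leases = dict(zip(nodes, subnets))
    if len(leases) < len(nodes):
        raise ValueError("cluster CIDR is too small for the number of nodes")
    return leases


leases = allocate_node_subnets("10.244.0.0/16", ["node-a", "node-b", "node-c"])
for node, subnet in leases.items():
    print(node, subnet)
# node-a 10.244.0.0/24
# node-b 10.244.1.0/24
# node-c 10.244.2.0/24
```

With a /16 range and /24 slices, the scheme supports up to 256 nodes, each with room for roughly 250 pod addresses.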

Flannel is a good choice for IT staff new to the Kubernetes CNI landscape who want a straightforward way to network their Kubernetes clusters. However, Flannel does not support network policies, needs a separate flanneld agent on each host rather than managing multiple hosts from a single daemon, and does not offer enterprise support.

Weave Net

Weave Net, a product of Weaveworks, offers CNI plugin installation and configuration within Kubernetes clusters.

Weave creates a mesh overlay network that connects all cluster nodes. Pods on the same host communicate directly through a local bridge. Between hosts, Weave's fast datapath uses the Linux kernel's Open vSwitch datapath module to move VXLAN-encapsulated packets; when fast datapath is not available, Weave falls back to a slower user-space mode called sleeve.

The Weave plugin handles other network functions -- such as fault tolerance, load balancing and name resolution -- through a Weave DNS server. Weave uses IPsec for encryption and VXLAN as the standard protocol for encapsulation and routing.

Unlike many other CNI plugins, Weave does not use etcd. Instead, it stores network configuration settings in its own database file on each node and shares that state among Weave peers. The plugin supports network policies through a specialized weave-npc container, which it installs and configures by default.

Cilium

Cilium is an open source CNI plugin known for high scalability and security that is installed as a daemon on each node of a Kubernetes cluster.

Cilium uses VXLAN to form an overlay network and extended Berkeley Packet Filter (eBPF) to manage network connectivity and application rules. It supports both IPv4 and IPv6 addressing and can use BGP to advertise routes for unencapsulated routing. Cilium can connect multiple Kubernetes clusters and, like Multus, offers multi-CNI capabilities by working alongside other CNI plugins.

Cilium enforces network policies at the IP and port level and can also apply application-layer rules, such as filtering HTTP requests by method and path. Policies are written in YAML or JSON and can govern both incoming and outgoing traffic.
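An HTTP-aware rule allows a request only if it matches an expected method and path, which Cilium's policy documentation describes as regular expressions. The Python sketch below models that matching logic with `re.fullmatch`. `HttpRule` and `http_allowed` are hypothetical names, Python's regex dialect stands in for the one Cilium uses, and real enforcement happens in Cilium's datapath and proxy rather than in application code.

```python
import re
from dataclasses import dataclass


@dataclass
class HttpRule:
    method: str  # regular expression for the HTTP method, e.g. "GET"
    path: str    # regular expression for the request path, e.g. "/public/.*"


def http_allowed(rules, method, path):
    """Allow a request only if some rule matches both its method and whole path."""
    return any(
        re.fullmatch(rule.method, method) and re.fullmatch(rule.path, path)
        for rule in rules
    )


rules = [HttpRule("GET", "/public/.*"), HttpRule("POST", "/api/v1/orders")]

print(http_allowed(rules, "GET", "/public/index.html"))  # True
print(http_allowed(rules, "DELETE", "/api/v1/orders"))   # False
print(http_allowed(rules, "GET", "/admin"))              # False
```

Matching on method and path is what separates this kind of rule from a plain port rule, which would allow or block all HTTP traffic to the port alike.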

Multus

Multus is a CNI plugin designed to support multiple network interfaces to pods.

By default, each Kubernetes pod has only one network interface and a loopback. Multus acts as an umbrella or meta-plugin that can call other CNI plugins, enabling businesses to deploy pods with multiple network interfaces.
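The meta-plugin idea can be sketched as a loop that calls the cluster's default network plugin first and then one delegate per additional network, giving each new interface its own name. The Python below is a hypothetical model of that flow: `attach_networks` and `fake_plugin` are illustrative stand-ins for real CNI invocations, the network names are made up, and the `eth0`, `net1`, `net2` naming mirrors what Multus typically assigns. A real meta-plugin would also remove already-created interfaces if a later delegate fails.

```python
def attach_networks(default_net, extra_nets, call_plugin):
    """Call the default network first, then each additional network in order.

    call_plugin(network, ifname) stands in for invoking a delegate CNI plugin
    and returns a result describing the interface it created.
    """
    results = [call_plugin(default_net, "eth0")]  # cluster network, as usual
    for index, net in enumerate(extra_nets, start=1):
        results.append(call_plugin(net, f"net{index}"))  # net1, net2, ...
    return results


def fake_plugin(network, ifname):
    # Hypothetical delegate: records which network backs which interface.
    return {"network": network, "interface": ifname}


for r in attach_networks("flannel", ["macvlan-storage", "sriov-data"], fake_plugin):
    print(r["interface"], "->", r["network"])
# eth0 -> flannel
# net1 -> macvlan-storage
# net2 -> sriov-data
```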

Multus is useful in several settings. In traffic splitting, it can keep different traffic types on separate networks so that quality of service can be managed carefully. It can also enhance performance for traffic types that benefit from hardware acceleration, such as single-root I/O virtualization (SR-IOV), and improve security in multi-tenant networks that require careful isolation.

The future of CNI

Because the current 1.0.0 CNI specification meets a wide range of container network configuration needs, CNI is not expected to advance rapidly in the near future. However, the CNI standard might evolve to provide greater autonomy and more dynamic responses to changing network conditions.

For example, future CNI versions might allow for dynamic updates to existing network configurations or enable dynamic policy updates in response to security demands, such as firewall rules, or network performance requirements.