
Configure advanced VM settings in vSphere 6.7

Configuring advanced VM settings is no easy task. Some common questions admins ask include where to place VM swap files and how many sockets and cores they should use.

By configuring advanced VM settings in vSphere 6.7, admins can optimize workloads and improve performance and resource utilization. vSphere administrators can configure advanced VM settings in several ways, such as changing the VM swap file location, choosing the number of sockets and cores, and using multiple storage controllers.

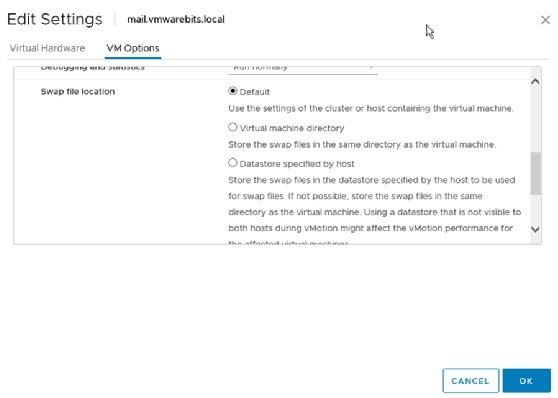

The VM advanced settings in vSphere 6.7 include an option to change the swap file location. If admins do not choose a location, the system stores the swap file in the VM's home directory, alongside the .vmx, .nvram and other VM files.

Some admins might wonder why the VM directory is offered as an explicit choice when it is already the default location. The reason is that the cluster and the host each have their own swap file setting, and the VM-level option lets admins override those settings for this particular VM. Before choosing a different swap file location for a VM, admins should therefore check how the cluster and hosts are configured.

Location options for VM swap file storage

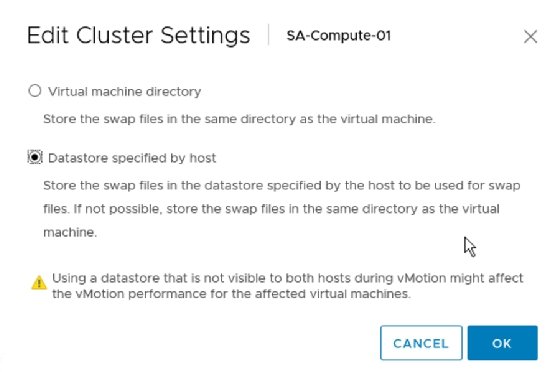

If admins' hosts are in a cluster, as most hosts are, they inherit the cluster's swap file location setting, which by default stores the swap file in the VM's home directory. Admins can instead configure the cluster to place swap files in a data store specified by the host. However, admins must then configure that data store on every host in the cluster.

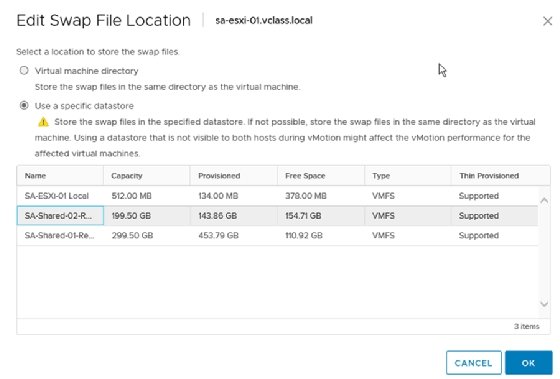

At the host level, the default is also to store the swap file with the VM, but admins can select a specific data store for swap file storage. If admins select a data store that is not visible to all hosts, vSphere warns that this can affect vMotion performance when VMs migrate between those hosts.
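The way the VM, cluster and host settings interact can be modeled in a few lines. The sketch below is a simplified illustration, not a vSphere API: the policy labels, the `Host` and `VM` classes, and `effective_swap_location` are hypothetical names. It also assumes that when the host-specified data store is missing or too small, the swap file falls back to the VM's home directory, and that the VM has no memory reservation, so the swap file equals its configured memory.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical labels for the swap file placement choices described above.
DEFAULT = "default"                # VM level only: inherit the cluster/host setting
VM_DIRECTORY = "vm_directory"      # store the swap file with the VM's files
HOST_DATASTORE = "host_datastore"  # use the data store configured on the host


@dataclass
class Host:
    name: str
    swap_datastore: Optional[str] = None  # data store chosen for swap files, if any
    free_gb: float = 0.0                  # free space on that data store


@dataclass
class VM:
    name: str
    home_datastore: str
    memory_gb: float  # assumes no memory reservation
    swap_policy: str = DEFAULT


def effective_swap_location(vm: VM, host: Host, cluster_policy: str) -> str:
    """Simplified model of where a VM's swap file ends up on a given host."""
    # A VM-level choice overrides the cluster-wide policy.
    policy = cluster_policy if vm.swap_policy == DEFAULT else vm.swap_policy
    if policy == HOST_DATASTORE and host.swap_datastore is not None:
        # Use the host's data store only if it can hold the whole swap file.
        if host.free_gb >= vm.memory_gb:
            return host.swap_datastore
    # Otherwise (assumed fallback) the swap file stays in the VM's home directory.
    return vm.home_datastore


host = Host("esx01", swap_datastore="local-esx01", free_gb=200.0)
vm = VM("app01", home_datastore="san-lun1", memory_gb=16.0)
print(effective_swap_location(vm, host, HOST_DATASTORE))
```

Keeping the override check separate from the space check mirrors the article's point: a VM-level setting only changes which policy applies, while the host still has to provide a usable data store.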

This warning appears because the destination host must first create a new swap file on its own data store before the migrated VM can run there. Admins can still use a host's local data store for the swap file and migrate VMs with vMotion, as long as the VM data files are on a shared data store.

Admins can also choose to store the swap file elsewhere to save capacity. If their hosts have unused local disks with enough space for the swap files, moving the swap files there saves precious disk space on the storage area network (SAN).

For example, if admins have 12 hosts running 100 VMs with a total of 1,600 GB of configured memory and no memory reservations, moving the swap files to local disks frees 1.6 TB on the SAN. That might not be much, but if admins are low on disk space, it can buy them some time.
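The arithmetic behind this example is simple, but it is worth checking against the local disks before moving anything. The sketch below assumes a hypothetical inventory of 100 VMs averaging 16 GB each (matching the 1,600 GB total above) and no memory reservations, so each swap file is as large as the VM's configured memory. The even per-host split is also an assumption.

```python
# Hypothetical inventory: 100 VMs averaging 16 GB of configured memory,
# i.e. the 1,600 GB total from the example above.
vm_memory_gb = [16] * 100
HOSTS = 12


def san_swap_savings_gb(memory_gb: list[int]) -> int:
    """Swap space that moves off the SAN when every swap file goes to local disk.

    Assumes no memory reservations, so each swap file is as large as the
    VM's configured memory.
    """
    return sum(memory_gb)


total_gb = san_swap_savings_gb(vm_memory_gb)
# Even spread across hosts; real placement is uneven and HA failover
# moves VMs between hosts, so local disks need headroom beyond this.
per_host_gb = total_gb / HOSTS
print(f"SAN space freed: {total_gb} GB ({total_gb / 1000:.1f} TB)")
print(f"Local swap space needed per host: about {per_host_gb:.0f} GB")
```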

However, admins should not buy hard disks specifically for swap file storage, because it is better to add more storage to their SAN. The approach pays off because hosts very often come with a local hard disk that goes unused.

Admins might also want to place the swap file on a different data store when they have purchased expensive high-end storage and do not want to waste that space on swap files.

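In some cases, admins also might not want swap files on a replicated data store, because the swap file holds only transient data that ESXi recreates when the VM powers on, so replicating it wastes bandwidth and capacity. In that case, admins should keep the swap files on a nonreplicated data store and place only the VM's data on a replicated data store.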

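A completely different approach to reducing swap file storage is to reserve VM memory. ESXi does not need swap space for reserved memory, so the swap file shrinks by the size of the reservation, and a VM with a full memory reservation needs no swap space at all. This can reduce consumed storage space enormously.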
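A minimal sketch of the sizing rule, assuming the swap file (.vswp) size is simply the configured memory minus the memory reservation. The function name `vswp_size_gb` is hypothetical, and the 16 GB VM is an illustrative value.

```python
def vswp_size_gb(configured_gb: float, reservation_gb: float = 0.0) -> float:
    """Size of a VM's .vswp file: configured memory minus the memory reservation."""
    if not 0.0 <= reservation_gb <= configured_gb:
        raise ValueError("reservation must be between 0 and the configured memory")
    return configured_gb - reservation_gb


for reservation in (0.0, 8.0, 16.0):
    print(f"16 GB VM, {reservation:.0f} GB reserved -> "
          f"{vswp_size_gb(16.0, reservation):.0f} GB swap file")

# Fleet view: reserving half the memory of 100 such VMs halves their swap footprint.
print(sum(vswp_size_gb(16.0, 8.0) for _ in range(100)))  # 800.0
```

The trade-off is that reserved memory is guaranteed to the VM and unavailable to other VMs on the host, so admins exchange host memory flexibility for storage savings.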

Choosing sockets and cores

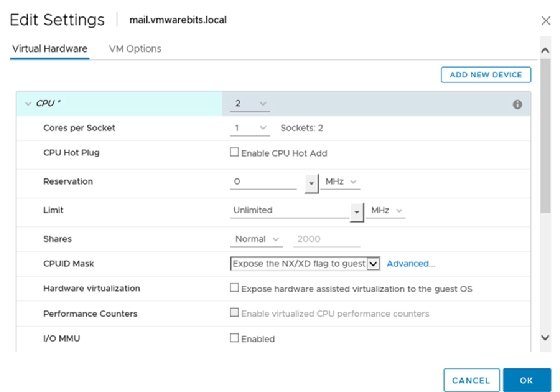

The right number of sockets and cores depends on the physical hardware and on the number of virtual processors the VM needs. For VMs with up to four or even eight vCPUs and a modest amount of RAM, however, the split between sockets and cores does not meaningfully affect performance.

What counts as too much RAM depends on the non-uniform memory access (NUMA) architecture of the servers. If a two-socket server has 256 GB of RAM and the memory is correctly distributed across the dual in-line memory module (DIMM) slots, each CPU directly addresses 128 GB of RAM.

Access to memory attached to the other CPU goes across the interconnect between the sockets and is less efficient. As long as the VM fits within a single NUMA node, such as a four-vCPU VM with 64 GB of RAM, it doesn't matter whether admins select four sockets with one core each or one socket with four cores.
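A quick way to reason about this is to check whether a VM fits in one NUMA node. The sketch below assumes one NUMA node per physical socket, evenly populated DIMMs and no counting of hyperthreads. The 12-core CPUs and the `Server` and `fits_numa_node` names are hypothetical; the 256 GB two-socket server matches the example above.

```python
from dataclasses import dataclass


@dataclass
class Server:
    sockets: int
    cores_per_socket: int
    ram_gb: int

    @property
    def node_ram_gb(self) -> float:
        # Assumes one NUMA node per socket and DIMMs populated evenly.
        return self.ram_gb / self.sockets


def fits_numa_node(server: Server, vcpus: int, vm_ram_gb: float) -> bool:
    """True if the VM's vCPUs and memory both fit inside a single NUMA node."""
    return vcpus <= server.cores_per_socket and vm_ram_gb <= server.node_ram_gb


host = Server(sockets=2, cores_per_socket=12, ram_gb=256)
print(host.node_ram_gb)              # 128.0
print(fits_numa_node(host, 4, 64))   # True: socket/core layout does not matter
print(fits_numa_node(host, 4, 192))  # False: memory spans both nodes
print(fits_numa_node(host, 16, 64))  # False: more vCPUs than cores per node
```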

Socket selection can matter if an application in the VM uses a socket-based licensing model. In that case, it makes a difference whether admins expose one socket or four sockets to the VM. The choice of sockets and cores also matters for VMs that are too large to fit into a single NUMA node.
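The socket count the guest sees follows from the vCPU count divided by the cores per socket. The sketch below shows that relationship and emits the `.vmx` keys `numvcpus` and `cpuid.coresPerSocket`, which we understand vSphere uses to store this topology; verify them against a VM's own .vmx file before relying on them. The `vcpu_topology` helper and the one-license-per-socket model are hypothetical.

```python
def vcpu_topology(num_vcpus: int, cores_per_socket: int) -> tuple[int, list[str]]:
    """Return the virtual socket count and the matching .vmx lines."""
    if cores_per_socket < 1 or num_vcpus % cores_per_socket != 0:
        raise ValueError("vCPU count must be a multiple of cores per socket")
    sockets = num_vcpus // cores_per_socket
    vmx_lines = [
        f'numvcpus = "{num_vcpus}"',
        f'cpuid.coresPerSocket = "{cores_per_socket}"',
    ]
    return sockets, vmx_lines


# The same 4-vCPU VM presented two ways. With a hypothetical per-socket
# license, the first layout needs four licenses and the second needs one.
for cps in (1, 4):
    sockets, lines = vcpu_topology(4, cps)
    print(f"{sockets} socket(s) x {cps} core(s):", "; ".join(lines))
```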

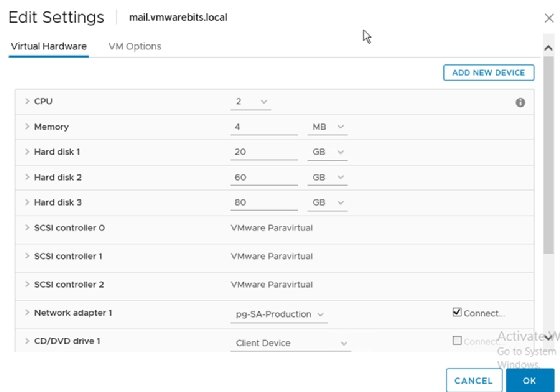

Multiple disks with several storage controllers

When admins discuss VM performance optimization, their attention often goes to CPU and memory. CPU and memory are the most expensive resources and admins should use them as efficiently as possible, but the storage I/O channel also offers potential performance gains.

When a VM uses a single disk and does not generate much I/O, admins gain little by adding disks and controllers. However, for an application server, file server or database server, spreading data across multiple virtual disks can improve performance.

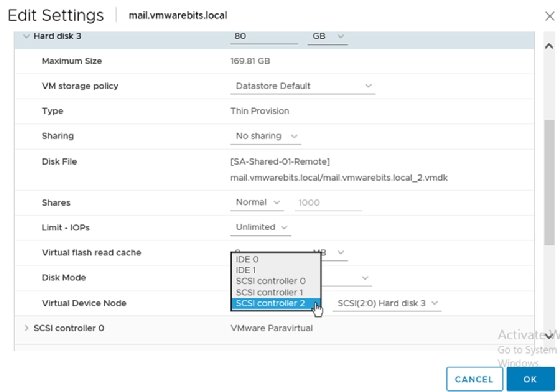

If admins put data and transaction logs on separate partitions, they will most likely use a separate virtual disk for each partition. Admins can also attach those disks to separate storage controllers.

To do so, admins bind each disk to its own storage controller. The benefit of this setup is that the guest OS uses a separate storage I/O queue per controller for reads and writes to and from each disk. This provides a slight improvement when each disk is under heavy load.
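Planning the layout amounts to spreading disks across controllers before stacking them on one. The sketch below assigns disks round-robin and returns `scsiX:Y` device nodes. It assumes four SCSI controllers per VM, unit numbers 0 to 15 per controller with unit 7 reserved for the controller itself, and that the first disk is the OS disk. The `assign_disks` helper and the disk names are hypothetical.

```python
SCSI_CONTROLLERS = 4  # assumed maximum number of SCSI controllers per VM
RESERVED_UNIT = 7     # assumed reserved for the controller on each bus
MAX_UNIT = 15         # assumed highest unit number per controller


def assign_disks(disks: list[str]) -> dict[str, str]:
    """Spread disks round-robin across controllers, returning scsiX:Y device nodes."""
    next_unit = [0] * SCSI_CONTROLLERS
    layout = {}
    for i, disk in enumerate(disks):
        bus = i % SCSI_CONTROLLERS
        unit = next_unit[bus]
        if unit == RESERVED_UNIT:
            unit += 1  # skip the controller's own unit number
        if unit > MAX_UNIT:
            raise ValueError(f"no free unit on controller scsi{bus}")
        layout[disk] = f"scsi{bus}:{unit}"
        next_unit[bus] = unit + 1
    return layout


# OS, data and log disks each land on their own controller (and I/O queue).
print(assign_disks(["os.vmdk", "data.vmdk", "logs.vmdk"]))
```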

To find out whether this setup improves performance, admins should first monitor their application's throughput and the CPU usage on the ESXi host for a week or two to establish a baseline. After changing the configuration, admins should repeat the same measurements to determine whether, and by how much, storage I/O performance has improved.
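Once both measurement periods are collected, comparing their averages is enough for a first verdict. The sketch below computes the percentage change between the mean of a baseline series and the mean of a post-change series. The sample numbers are made-up input for illustration only, and `percent_change` is a hypothetical helper; real data would come from whatever monitoring tool admins already use.

```python
from statistics import mean


def percent_change(before: list[float], after: list[float]) -> float:
    """Percentage change between the means of two measurement series."""
    baseline = mean(before)
    return (mean(after) - baseline) / baseline * 100


# Made-up sample input: daily averages before and after moving the
# data and log disks to separate controllers.
throughput_mb_s = {"before": [210, 205, 220, 215, 208, 212, 218],
                   "after": [230, 226, 241, 235, 229, 233, 238]}
host_cpu_pct = {"before": [41, 39, 44, 42, 40, 43, 41],
                "after": [42, 40, 45, 43, 41, 44, 42]}

for name, series in (("Throughput", throughput_mb_s), ("Host CPU", host_cpu_pct)):
    print(f"{name}: {percent_change(series['before'], series['after']):+.1f}%")
```

Tracking host CPU alongside throughput shows whether an I/O gain came at the cost of extra CPU load.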