An overview of Apache Airflow for workflow management

This introductory look at Apache Airflow walks through some of the basics of the workflow management tool -- from installation steps to its various GUI options.

Apache Airflow is an open source workflow management tool that IT teams can use to streamline data processing and DevOps automation pipelines.

Based on Python and backed by a SQLite database by default, Airflow lets admins manage workflows programmatically and monitor scheduled jobs. In this Apache Airflow overview, we cover the tool's installation, integrations, core concepts and key commands. Then, we'll create a sample task with Python and tour Airflow's GUI.

Apache Airflow core concepts and installation

Apache Airflow defines its workflows as code. Workers in Airflow run the tasks in a workflow, and a series of tasks is called a pipeline. Airflow models each workflow as a Directed Acyclic Graph (DAG), and a DAG Run is an individual execution of a DAG. Pools cap the number of concurrent tasks to prevent system overload.
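To make the DAG idea concrete, here is a minimal sketch in plain Python. It uses the standard library's graphlib module (Python 3.9 or later) rather than Airflow itself, and the task names are hypothetical. It only illustrates how a directed acyclic graph yields a valid run order and why cycles are not allowed.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical task names; each key maps to the set of tasks it depends on.
dependencies = {
    "fetch_file": set(),
    "validate_file": {"fetch_file"},
    "copy_to_staging": {"fetch_file"},
    "notify": {"validate_file", "copy_to_staging"},
}

# static_order() yields every task only after all of its upstream tasks.
run_order = list(TopologicalSorter(dependencies).static_order())
print(run_order)


def has_cycle(deps):
    """Return True if the dependency mapping contains a cycle."""
    try:
        TopologicalSorter(deps).prepare()
    except CycleError:
        return True
    return False


print(has_cycle(dependencies))
print(has_cycle({"a": {"b"}, "b": {"a"}}))
```

The first task in the order is always the one with no upstream dependencies, and a mapping in which two tasks wait on each other is rejected because no run order could satisfy it.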

With Apache Airflow, there is no tar file or .deb package to download and install as there would be with other tools like Salt, for example.

Instead, users first install the OS dependencies with the system package manager, such as build-essential packages, which include compilers, .so files and header files. Then, they install a single Python package with the pip Python package installer. Consult the Airflow documentation for the complete list of dependencies.

Airflow dependencies vary, but the superset includes:

sudo apt-get install -y --no-install-recommends \
    freetds-bin \
    krb5-user \
    ldap-utils \
    libffi6 \
    libsasl2-2 \
    libsasl2-modules \
    libssl1.1 \
    locales \
    lsb-release \
    sasl2-bin \
    sqlite3 \
    unixodbc

Begin the installation procedure with the following command:

pip install apache-airflow

Next, initialize the SQLite database, which contains job metadata:

airflow initdb

Then, start Airflow:

airflow webserver -p 8080

Finally, open the web server at localhost:8080.

Don't run workflows from the web server, as it's intended for monitoring and tuning only. Instead, plug Python and Jinja templates into boilerplate Airflow Python code, and run those tasks from the command line. Jinja templates are embedded in Python code, but Jinja is a separate templating language, not Python.

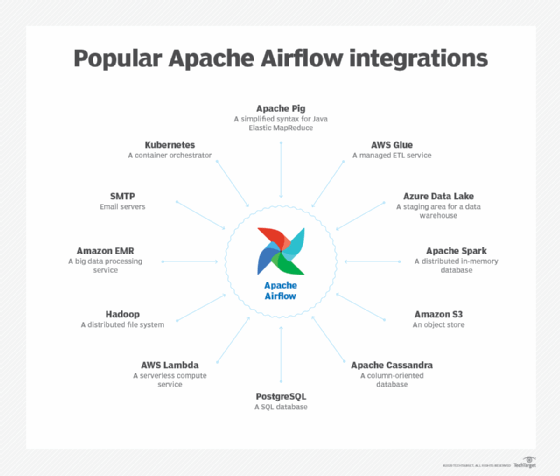

Users can install additional packages as well, and Airflow offers a variety of possible integrations. For example, run the command pip install apache-airflow[hdfs] to work with the Hadoop Distributed File System.

Airflow integrations

Airflow works with bash shell commands, as well as a wide array of other tools. For example, some of Airflow's integrations include Kubernetes, AWS Lambda and PostgreSQL. More integrations are noted in the image below.

Command line

Airflow runs from a command line.

The major commands potential users need to know include:

- airflow run to run a task;

- airflow test to debug a task;

- airflow backfill to run part of a DAG -- not the whole thing -- based on date;

- airflow webserver to start the GUI; and

- airflow show_dag to show tasks and their dependencies.
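As a rough illustration of what "based on date" means for a backfill, the sketch below lists the run dates that fall inside a date window. This is not Airflow's implementation. It assumes a daily schedule and treats both ends of the window as inclusive, and the function name daily_run_dates is made up for this example.

```python
from datetime import date, timedelta


def daily_run_dates(start, end):
    """Return each date from start to end, inclusive, one per daily run."""
    days = (end - start).days
    return [start + timedelta(days=offset) for offset in range(days + 1)]


# Hypothetical backfill window: the first five days of January 2020.
dates = daily_run_dates(date(2020, 1, 1), date(2020, 1, 5))
print([d.isoformat() for d in dates])
```

Only the runs inside the window are produced, which is the sense in which a backfill covers part of a DAG's history rather than all of it.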

Users define tasks in Python. While they don't need much Python programming knowledge to use Airflow -- because they can copy templates -- they do need a little. For example, users must know how to use datetime and timedelta objects.
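For readers new to these two types, here is a small sketch using only the Python standard library. The dictionary keys follow the pattern used in Airflow's tutorial for default task arguments, but the values and the failed_at timestamp are invented for illustration.

```python
from datetime import datetime, timedelta

# Keys mirror the style of Airflow's tutorial; the values are made up.
default_args = {
    "owner": "airflow",
    "start_date": datetime(2020, 1, 1),   # a fixed point in time
    "retries": 1,
    "retry_delay": timedelta(minutes=5),  # a duration, not a point in time
}

# Adding a timedelta to a datetime produces a new datetime.
failed_at = datetime(2020, 1, 1, 12, 0)   # hypothetical failure time
retry_at = failed_at + default_args["retry_delay"]
print(retry_at)
```

A datetime marks when something happens, while a timedelta expresses how long to wait. Most scheduling settings are one or the other.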

Users who know a lot of Python can extend Airflow objects to write their own modules.

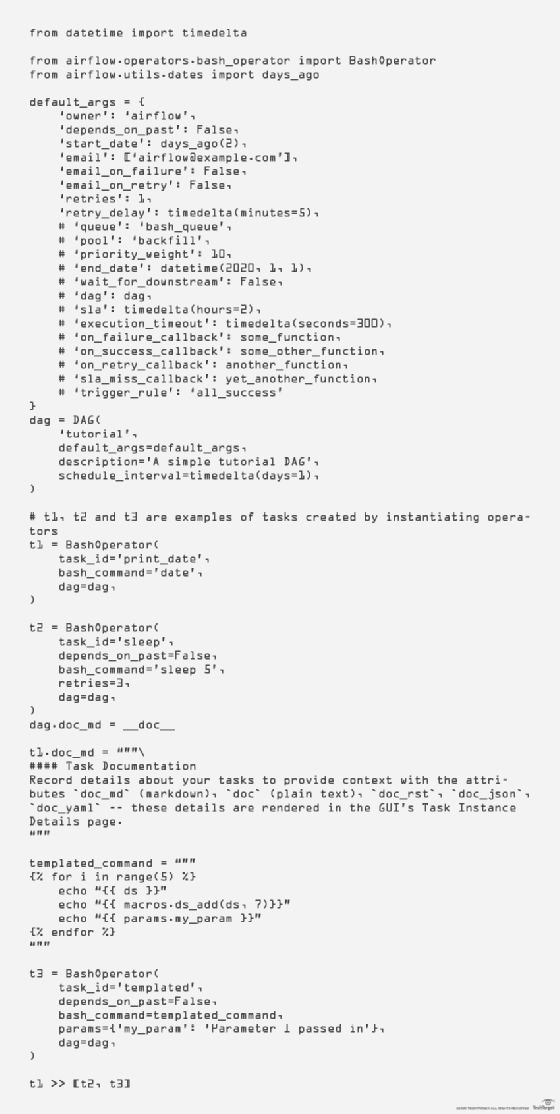

Sample Airflow task

Below is a pared-down version of the example Python task demonstrated in the Apache Airflow documentation, with sections of code explained throughout.

This example defines three tasks, with the second and third dependent on the first. Dependencies are intrinsic to DevOps, because they enable certain jobs to run -- or not run -- depending on the outcomes of other jobs.

This enables IT admins to chain several DevOps steps, each dependent on the one before. Bash commands handle simple and complex tasks alike, such as copying files, starting a server or transferring files. For example, this script might copy a file to an AWS Glue staging area, but only if the previous step, such as retrieving that file from another system, completes successfully.

Arguments to the DAG constructor are shown in Figure 2. This example uses the BashOperator, as it runs a simple bash command. All operators are extensions of the BaseOperator class. In addition, the other major APIs include BaseSensorOperator, which listens for events coming from an integration -- for example, AWS Glue -- and Transfers, which ship data between systems.

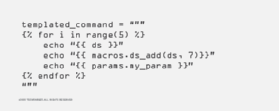

The above code uses Jinja, a popular templating language for Python. Jinja passes macros and parameters into BashOperator and other Python objects via compact syntax.

For example, templated_command is a complete Jinja template. It's fed into the BashOperator object. As shown in Figure 3, this loops five times and echoes the parameter value params.my_param, which is passed in from the t3 BashOperator object.
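The sketch below renders a simplified version of such a template with the Jinja2 library directly, outside of Airflow, to show what the loop produces. It assumes Jinja2 is installed. The ds value and the parameter text are stand-ins for what Airflow would supply at run time, and the template omits the date macros used in the full tutorial example.

```python
from jinja2 import Template  # third-party library that Airflow builds on

# Simplified template: loop five times and echo two values each time.
templated_command = """
{% for i in range(5) %}
echo "{{ ds }}"
echo "{{ params.my_param }}"
{% endfor %}
"""

rendered = Template(templated_command).render(
    ds="2020-01-01",                               # stand-in for the run date
    params={"my_param": "Parameter I passed in"},  # stand-in parameter value
)
print(rendered)
```

The rendered text is ordinary shell input. Jinja has already expanded the loop and substituted the values before any bash command runs.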

This final section in Figure 4, t1 >> [t2, t3], tells Airflow not to run tasks 2 and 3 until task 1 has completed successfully.
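In Airflow, a >> b marks b as downstream of a, so b waits for a. The toy class below is not Airflow's code. It is a minimal sketch, with a made-up Task class, of how Python operator overloading can record that relationship for a single task or a list of tasks.

```python
class Task:
    """Toy stand-in for an Airflow task; not Airflow's actual class."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.downstream = []  # tasks that must wait for this one

    def __rshift__(self, other):
        # Accept a single task or a list of tasks on the right-hand side.
        targets = other if isinstance(other, list) else [other]
        for target in targets:
            self.downstream.append(target)
        return other


t1, t2, t3 = Task("t1"), Task("t2"), Task("t3")
t1 >> [t2, t3]
print([t.task_id for t in t1.downstream])  # ['t2', 't3']
```

Reading the arrow left to right gives the run order: whatever sits on the left runs first, and everything on the right waits for it.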

The section of code in Figure 5 -- taken from the segment of Figure 1 that precedes the t3 commands -- creates markdown to write documentation that will display in the GUI.

Markdown is the syntax used by GitHub and other systems to create links, bulleted lists and boldface type. Here, it produces a display with lots of relevant task information.

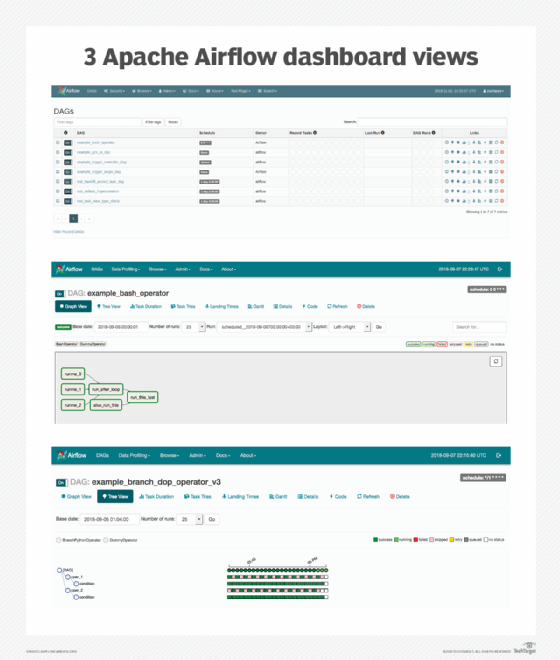

Apache Airflow's GUI

Start the GUI from the command line with the command airflow webserver. Airflow also offers a REST interface, which is in beta at time of publication. Use the GUI for monitoring and fine tuning.

Each DAG is available in a list format on the DAGs screen of the GUI. But users have other view options as well, such as Tree View, which displays DAG runs across time -- for instance, as they run, shut down and wait. Remember that each DAG has a start time. Another view option is the Graph View, which reflects the fact that a DAG is a computer science concept: a graph whose edges have a direction and never form a cycle. The graph in Figure 6 shows jobs, their dependencies and their current state.

Other screens include:

- Variable View, which shows certain variables, like credentials;

- Gantt Chart, which shows task duration and overlap;

- Task Duration, which shows a timeline of task duration;

- Code View, which shows the Python code of each pipeline job; and

- Task Instance Context Menu, which shows task metadata.