
A breakdown of container runtimes for Kubernetes and Docker

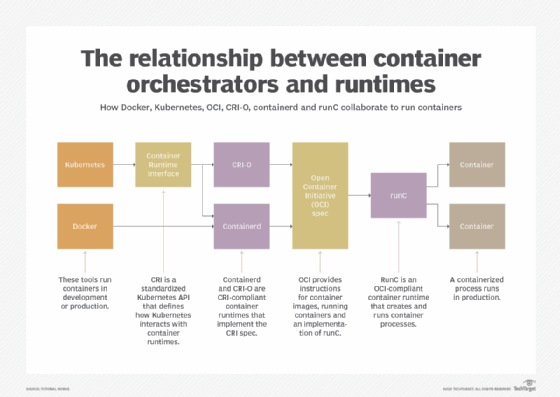

For a container ecosystem to work, it needs a container runtime. Brush up on Kubernetes and Docker containers, then compare runtime options such as runC, CRI-O and containerd.

Containers are agile and lightweight virtual entities that revolutionized software design and workload management. But containers have dependencies and require a container runtime -- often part of a broader container engine -- capable of unpacking a container image file and translating that file into a running process on a computer.

There are several container runtimes available today, and the choice of runtime is often governed by the choice of container engine. But the decision can have important consequences for the enterprise.

Let's take a closer look at the roles and relationships of containers, engines and runtimes, and consider some of the implications involved.

What are containers, container engines and container runtimes?

A container is an isolated, lightweight environment for running an application, and it is often compared to a virtual machine (VM). As with VMs, multiple containers can exist on the same physical computer. This improves the computer's resource utilization and saves money, because more computing work can be performed on fewer servers. Unlike VMs, however, which each run a complete guest OS on virtualized hardware, containers share the underlying host OS kernel. This enables containers to be far smaller and faster to start, and to exist in greater numbers on a computer than traditional VMs.

Containers require a container engine. A container engine is a general software platform that supports container use. Typical container engines include Docker, CRI-O, rkt and LXD. The engine takes user input, interacts with a container orchestrator, pulls the container image from a registry (usually hosted in a public or private cloud service) and prepares storage to run the container. Finally, it hands the container off to a container runtime, which starts the container and manages its execution.
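The engine's sequence of steps can be sketched as a toy Python model. EngineRun and run_container are hypothetical names, and each step only records what a real engine such as Docker or CRI-O would do at that point; nothing is actually pulled or started.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class EngineRun:
    """Hypothetical record of the steps an engine performs for one request."""
    image: str
    runtime: str
    steps: List[str] = field(default_factory=list)


def run_container(image: str, runtime: str = "runc") -> EngineRun:
    """Toy model of a container engine's workflow; each step is only recorded."""
    run = EngineRun(image=image, runtime=runtime)
    # 1. Take user input, e.g. a CLI command or a request from an orchestrator.
    run.steps.append(f"accept request for {image}")
    # 2. Pull the image from a registry if it is not already cached locally.
    run.steps.append(f"pull {image}")
    # 3. Unpack the image layers and prepare storage for the container.
    run.steps.append("prepare storage")
    # 4. Hand off to the low-level runtime, which creates and starts the process.
    run.steps.append(f"hand off to {runtime}")
    return run


if __name__ == "__main__":
    for step in run_container("docker.io/library/nginx:latest").steps:
        print(step)
```

The runtime parameter is the only thing that changes between engines configured for runC or another OCI runtime, which mirrors the clean handoff point described above.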

A container runtime is a low-level component of a container engine that sets up the container's root filesystem and works with the OS kernel to start and support the containerization process. On Linux, the runtime creates the container's namespaces and cgroups and applies security policies, such as SELinux labels on Red Hat Enterprise Linux or AppArmor profiles on distributions such as Ubuntu. The most common runtime is runC, but other container runtimes include crun, railcar and Kata Containers.
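To make the runtime's job more concrete, the following sketch builds a trimmed-down config.json, the file an OCI-compliant runtime such as runC reads before it starts a container. The field names (ociVersion, process, root, linux.namespaces, linux.resources, process.apparmorProfile) come from the OCI runtime specification; the helper name, the sample values and the optional AppArmor profile are assumptions for illustration, and a config generated by a real engine contains many more fields, such as mounts for /proc and /dev.

```python
import json
from typing import List, Optional


def minimal_oci_config(args: List[str], memory_limit_bytes: int,
                       apparmor_profile: Optional[str] = None) -> dict:
    """Build a trimmed-down Linux config.json (OCI runtime spec) as a dict.

    Hypothetical helper: field names follow the OCI runtime specification,
    but the values are illustrative only.
    """
    process = {
        "user": {"uid": 0, "gid": 0},
        "args": args,
        "cwd": "/",
        "env": ["PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"],
    }
    if apparmor_profile:
        # Only meaningful on hosts with AppArmor enabled (e.g. Ubuntu).
        process["apparmorProfile"] = apparmor_profile
    return {
        "ociVersion": "1.0.2",
        "process": process,
        # Unpacked image filesystem, relative to the bundle directory.
        "root": {"path": "rootfs", "readonly": True},
        "linux": {
            # Kernel namespaces the runtime creates to isolate the process.
            "namespaces": [{"type": t} for t in ("pid", "network", "ipc", "uts", "mount")],
            # Limit the runtime writes into the container's memory cgroup.
            "resources": {"memory": {"limit": memory_limit_bytes}},
        },
    }


if __name__ == "__main__":
    print(json.dumps(minimal_oci_config(["sh"], 64 * 1024 * 1024), indent=2))
```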

Standardization is an important part of container technology. Standard container image formats and metadata let the industry build images and tools that are more refined, secure and interoperable. Standards also streamline the way container images are mounted and run as containers, which leads to more reliable container runtime tools.

Although there are several container image formats, the Open Container Initiative (OCI) specifications have emerged as the widely accepted standard for container images and runtimes. The runC runtime, which Docker donated to the OCI, is the reference implementation of the OCI runtime specification, and engines such as Docker and CRI-O can both use it. This shared foundation enables greater container compatibility across container engines.
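Because runC and crun both implement the OCI runtime specification and expose a similar command line, the same bundle (a directory holding config.json and a rootfs folder) can be handed to either one. The sketch below lays out a bundle and builds, without executing, the run --bundle command for each runtime. write_bundle and run_command are hypothetical helper names; in practice runc spec generates a default config.json, and rootfs must contain the unpacked image filesystem before a container can actually start.

```python
import json
import shlex
import tempfile
from pathlib import Path
from typing import List


def write_bundle(bundle_dir: Path, config: dict) -> Path:
    """Lay out an OCI bundle: config.json next to an (empty) rootfs/ directory."""
    (bundle_dir / "rootfs").mkdir(parents=True, exist_ok=True)
    (bundle_dir / "config.json").write_text(json.dumps(config, indent=2))
    return bundle_dir


def run_command(runtime: str, bundle_dir: Path, container_id: str) -> List[str]:
    """Build, but do not execute, the command that starts a container from a bundle.

    runc and crun both accept "run --bundle <dir> <id>", so the bundle is reusable.
    """
    return [runtime, "run", "--bundle", str(bundle_dir), container_id]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        bundle = write_bundle(Path(tmp) / "demo", {"ociVersion": "1.0.2"})
        for rt in ("runc", "crun"):
            print(shlex.join(run_command(rt, bundle, "demo")))
```

Only the runtime name differs between the two commands; the bundle itself is untouched, which is the practical payoff of a shared runtime standard.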

Containers depend heavily on orchestration, relying on tools such as Kubernetes to automate and manage containerized applications and environments in the local data center as well as in the cloud. This means Kubernetes must work with a variety of container runtimes, especially when creating and managing pods and clusters. The Kubernetes Container Runtime Interface (CRI) provides an API that connects Kubernetes to container runtimes.
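The CRI itself is a gRPC API, but the order in which the kubelet calls it for a new pod can be sketched in plain Python. FakeCriRuntime is a hypothetical in-memory stand-in for a CRI implementation such as containerd or CRI-O. Its methods are named after real CRI RPCs (RunPodSandbox, PullImage, CreateContainer, StartContainer), but their simplified signatures are assumptions, not the real request and response messages, and the flow ignores image pull policies.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class FakeCriRuntime:
    """Hypothetical in-memory stand-in for a CRI implementation (containerd, CRI-O).

    Method names mirror CRI RPCs; the simplified signatures are assumptions.
    """
    calls: List[str] = field(default_factory=list)
    next_id: int = 0

    def _new_id(self, prefix: str) -> str:
        self.next_id += 1
        return f"{prefix}-{self.next_id}"

    def pull_image(self, image: str) -> None:  # ImageService.PullImage
        self.calls.append(f"PullImage {image}")

    def run_pod_sandbox(self, pod: str) -> str:  # RuntimeService.RunPodSandbox
        self.calls.append(f"RunPodSandbox {pod}")
        return self._new_id("sandbox")

    def create_container(self, sandbox_id: str, image: str) -> str:  # RuntimeService.CreateContainer
        self.calls.append(f"CreateContainer {image} in {sandbox_id}")
        return self._new_id("ctr")

    def start_container(self, container_id: str) -> None:  # RuntimeService.StartContainer
        self.calls.append(f"StartContainer {container_id}")


def start_pod(cri: FakeCriRuntime, pod: str, images: List[str]) -> List[str]:
    """Simplified kubelet flow: create the pod sandbox, then pull, create and start each container."""
    sandbox_id = cri.run_pod_sandbox(pod)
    container_ids = []
    for image in images:
        cri.pull_image(image)
        container_id = cri.create_container(sandbox_id, image)
        cri.start_container(container_id)
        container_ids.append(container_id)
    return container_ids


if __name__ == "__main__":
    cri = FakeCriRuntime()
    start_pod(cri, "web", ["nginx:1.25"])
    print("\n".join(cri.calls))
```

Because Kubernetes only depends on this interface, any daemon that answers these calls can sit underneath it.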

CRI-O is an implementation of CRI that enables Kubernetes to use any OCI-compatible runtime, rather than using Docker as the runtime for Kubernetes. CRI-O acts as a lightweight container engine for Kubernetes and supports runC and Kata Containers as container runtimes for Kubernetes pods, though any OCI-compliant runtime should work.

The relationship between engines, runtimes and standardized interfaces is illustrated in Figure 1.

Docker and container runtimes

Running containers in the Docker environment requires four fundamental components: the Docker engine/interface, the underlying daemon, the runtime and the container itself.

- Docker. The container engine sits at the very top of the container stack. A platform such as Docker installs on a server or development computer and includes all the tools and utilities needed to build and run containers. Docker provides a comprehensive platform for developers and systems administrators, including DevOps teams. A key part of the container engine is the UI. Docker provides a command-line interface, the docker CLI, that users wield to build images, push images to and pull images from container registries, and then start and manage containers.

- Daemon. The Docker engine doesn't manage containers directly. Instead, it relies on a separate high-level daemon called containerd, which ships with Docker and which Docker originally developed before donating it to the Cloud Native Computing Foundation. Containerd manages the container environment and oversees execution. It also implements the Kubernetes CRI through a built-in plugin, so Kubernetes can use containerd directly to drive low-level runtimes such as runC and Kata Containers.

- Runtime. The container runtime is the low-level component that creates and runs containers. Docker currently uses runC, the most popular runtime, which implements the OCI runtime specification for how containers are configured and executed. Because Docker relies on that standard interface, other OCI-compliant runtimes should also work.

- Containers. Finally, the containers are created and managed at the bottom of the container stack.
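Because Docker hands containers to the runtime through the OCI interface, an additional runtime can be registered with the Docker daemon. A minimal sketch, assuming a host where crun is installed at /usr/bin/crun: the helper below merges a runtimes entry into the dict form of /etc/docker/daemon.json without mutating the original. The runtimes key is a real dockerd setting; add_runtime is a hypothetical helper, and the name and path are host-specific assumptions.

```python
import copy
import json


def add_runtime(daemon_config: dict, name: str, path: str) -> dict:
    """Return a copy of a daemon.json dict with one more OCI runtime registered.

    "runtimes" is a dockerd setting; the name and path are host-specific assumptions.
    """
    updated = copy.deepcopy(daemon_config)
    # Create the "runtimes" map if absent, then add or replace this runtime's entry.
    updated.setdefault("runtimes", {})[name] = {"path": path}
    return updated


if __name__ == "__main__":
    existing = {"log-driver": "json-file"}
    print(json.dumps(add_runtime(existing, "crun", "/usr/bin/crun"), indent=2))
```

After the merged file is written and the daemon restarted, an individual container can select the runtime with docker run --runtime=crun. Merging rather than overwriting keeps any existing daemon settings intact.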

Kubernetes and container runtimes

Running containers in a Kubernetes environment bears similarity to the Docker environment and involves four major elements: the orchestration platform and interface, the daemon or high-level runtime, the low-level runtime and the container itself.

- Orchestration. Kubernetes is an open source platform IT teams use to orchestrate and manage containerized workloads and services. Kubernetes handles a range of orchestration tasks, including discovery and load balancing, container storage orchestration, automatic container rollouts and rollbacks, container distribution, security and container resilience processes, also called self-healing. Although Kubernetes is not a container engine in the same vein as Docker, it must be able to create, run and stop containers to build and maintain resilient clusters. Kubernetes handles this critical interaction with various runtime components through the CRI API.

- Daemon. Kubernetes interacts with container daemons through the CRI API. Because CRI is an established standard, any daemon that adheres to it should interoperate with Kubernetes. Kubernetes can therefore use containerd through CRI, but it might be easier to use CRI-O, a lightweight daemon built around CRI and designed specifically for Kubernetes. CRI-O offers fewer features and capabilities than containerd, but it can add components from other projects to flesh out its functionality.

- Runtime. CRI-O must interact with a low-level container runtime. Because CRI-O is OCI-compliant, it should interoperate with any OCI-compliant container runtime. CRI-O uses the common runC runtime by default but can use other runtimes such as crun and Kata Containers. Some runtimes might require plugins, or shims, to achieve full interoperability, so it's important to test and validate any stack to ensure adequate stability and performance.

- Containers. As with Docker, the containers orchestrated by Kubernetes are managed at the bottom of the container stack.
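On the Kubernetes side, a RuntimeClass object is the usual way to let individual pods choose among the runtimes CRI-O has configured. The sketch below builds the manifests as Python dicts; each can be saved as a JSON file for kubectl apply -f. It assumes CRI-O on every node defines a runtime handler named kata (a [crio.runtime.runtimes.kata] table); the handler, class, pod and image names are illustrative.

```python
import json


def runtime_class(name: str, handler: str) -> dict:
    """RuntimeClass manifest; handler must name a runtime configured in CRI-O."""
    return {
        "apiVersion": "node.k8s.io/v1",
        "kind": "RuntimeClass",
        "metadata": {"name": name},
        "handler": handler,
    }


def pod_with_runtime_class(pod_name: str, runtime_class_name: str, image: str) -> dict:
    """Minimal Pod manifest that requests a specific RuntimeClass."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            # Pods without this field fall back to CRI-O's default runtime (often runC).
            "runtimeClassName": runtime_class_name,
            "containers": [{"name": "app", "image": image}],
        },
    }


if __name__ == "__main__":
    # Assumes CRI-O on each node defines a runtime handler named "kata".
    manifests = [runtime_class("kata", "kata"),
                 pod_with_runtime_class("sandboxed-app", "kata", "nginx:1.25")]
    for manifest in manifests:
        print(json.dumps(manifest, indent=2))
```

This keeps runtime choice per workload: most pods can stay on the default runtime while a few opt into stronger isolation.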

Cloud Foundry container runtime

Kubernetes has quickly emerged as a standard platform for containerized application deployment automation, scaling and management. But Kubernetes alone might require more direct administrative control and oversight than some organizations can provide -- especially for cloud-based containerized applications. Cloud Foundry (CF) developed BOSH as an open source release engineering tool that assists deployment, lifecycle management and monitoring of distributed systems in the cloud.

Cloud Foundry combined Kubernetes and BOSH to create the CF container runtime, a platform that deploys and manages highly available Kubernetes clusters on a cloud platform from end to end. The addition of BOSH provides several benefits to containerized environments, including:

- high availability and multiple availability zone support;

- health monitoring for multiple master/etcd/worker nodes and VM healing;

- efficient scaling of instances within a cluster; and

- management of rolling upgrades for Kubernetes clusters.
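The rolling-upgrade behavior can be illustrated with a simplified model of BOSH's update strategy, in which a few canary instances are updated first and the rest follow in batches no larger than max_in_flight. The function below only computes the batches. It is a hypothetical helper that treats the canaries as a single batch and omits the health checks and watch times BOSH applies between batches.

```python
from typing import List


def rolling_update_batches(instances: List[str], canaries: int,
                           max_in_flight: int) -> List[List[str]]:
    """Split instances into update batches: canaries first, then max_in_flight at a time.

    Simplified model: health checks and watch times between batches are omitted.
    """
    if canaries < 0 or max_in_flight < 1:
        raise ValueError("canaries must be >= 0 and max_in_flight >= 1")
    batches = []
    # Canaries go first; in a real rollout a failing canary halts the update.
    canary_batch = instances[:canaries]
    if canary_batch:
        batches.append(canary_batch)
    remaining = instances[canaries:]
    # The rest are updated in groups no larger than max_in_flight.
    for start in range(0, len(remaining), max_in_flight):
        batches.append(remaining[start:start + max_in_flight])
    return batches


if __name__ == "__main__":
    workers = [f"worker/{i}" for i in range(5)]
    for batch in rolling_update_batches(workers, canaries=1, max_in_flight=2):
        print(batch)
```

Keeping max_in_flight small limits how much cluster capacity is offline at any moment, at the cost of a longer upgrade.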

Thus, the CF container runtime is not a low-level runtime like runC or crun, but rather a high-level platform designed to streamline complex Kubernetes clusters. It brings consistency and repeatability to cluster deployments and delivers a single uniform means to provision and manage Kubernetes clusters.

Other container runtimes

Developers and systems administrators might encounter other container runtimes including:

- crun. A fast and lightweight OCI-compliant container runtime written entirely in C.

- Kata Containers. An OCI-compliant runtime that runs containers inside lightweight VMs for stronger isolation. It can run in nested virtualization environments, though container startup time can be a little slower.

- gVisor. An OCI-compliant runtime with a faster startup time than Kata Containers. It intercepts system calls between the container and the host kernel and services them in its own user-space kernel, which implements only a subset of Linux system calls.

- IBM's Nabla Containers. A runtime that doesn't run standard Linux container images out of the box and uses only nine system calls for maximum security.
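The idea behind restricting a container to a handful of system calls can be illustrated with a default-deny seccomp allowlist, written in the JSON profile format Docker accepts through --security-opt seccomp=<file>. This is only a sketch of the principle. It is not how gVisor or Nabla Containers are implemented, the allowed names are arbitrary examples rather than Nabla's actual list, and is_allowed is a hypothetical helper that ignores seccomp's argument filters and architecture fields.

```python
from typing import Iterable


def allowlist_profile(allowed: Iterable[str]) -> dict:
    """Default-deny seccomp profile in Docker's JSON profile format."""
    return {
        # Any system call not matched below fails with an error.
        "defaultAction": "SCMP_ACT_ERRNO",
        "syscalls": [{"names": sorted(set(allowed)), "action": "SCMP_ACT_ALLOW"}],
    }


def is_allowed(profile: dict, syscall: str) -> bool:
    """Evaluate the profile for one system call name (ignores argument filters)."""
    for rule in profile["syscalls"]:
        if syscall in rule["names"]:
            return rule["action"] == "SCMP_ACT_ALLOW"
    return profile["defaultAction"] == "SCMP_ACT_ALLOW"


if __name__ == "__main__":
    profile = allowlist_profile(["read", "write", "exit_group"])
    for name in ("read", "openat"):
        print(name, is_allowed(profile, name))
```

A profile this small would break most ordinary container images, because even a shell needs many more system calls than it allows.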

Most container runtimes are OCI-compliant, which enables them to run the same container image files without altering or recompiling them. Nevertheless, it's important to test and validate any runtime with critical workloads to ensure adequate performance, stability and interoperability.