Service mesh upstarts challenge Istio, Linkerd

Istio seemed destined for Kubernetes-like dominance in the service mesh market, but recent open source governance drama created an opening for competition from networking vendors.

As service mesh moves toward the enterprise mainstream, new choices have emerged that claim improved integration with existing networks and ease multi-cloud management.

Service mesh is a network architecture that has become popular alongside containers and Kubernetes, as it offers finer-grained centralized management of complex network connections between microservices than traditional methods. Service meshes distribute network policies through clustered environments via a distributed data plane and provide a control plane that centrally configures and maintains them.
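
To make the control plane/data plane split concrete, here is a minimal Python sketch. It is an illustration under simplifying assumptions, not how any particular mesh is built. The `ControlPlane`, `SidecarProxy` and `Policy` names are hypothetical, and real meshes push configuration to proxies such as Envoy over network APIs rather than through in-process method calls.

```python
from dataclasses import dataclass, field

# Hypothetical names for illustration only; not any real service mesh API.


@dataclass
class Policy:
    """A network policy: may `source` call `destination`?"""
    source: str
    destination: str
    allow: bool


@dataclass
class SidecarProxy:
    """Data plane: one proxy beside each service instance."""
    service: str
    rules: dict = field(default_factory=dict)

    def apply(self, policies):
        # Keep only rules for traffic arriving at this proxy's service;
        # a later policy for the same caller overrides an earlier one.
        self.rules = {
            p.source: p.allow for p in policies if p.destination == self.service
        }

    def accepts(self, caller):
        # Deny by default when no rule mentions the caller.
        return self.rules.get(caller, False)


class ControlPlane:
    """Control plane: the one place operators define policy."""

    def __init__(self):
        self.policies = []
        self.proxies = []

    def register(self, proxy):
        self.proxies.append(proxy)
        proxy.apply(self.policies)  # new proxies start with current policy

    def set_policy(self, policy):
        self.policies.append(policy)
        # Distribute the full policy set to every proxy in the mesh.
        for proxy in self.proxies:
            proxy.apply(self.policies)


mesh = ControlPlane()
orders = SidecarProxy("orders")
mesh.register(orders)
mesh.set_policy(Policy("frontend", "orders", allow=True))
print(orders.accepts("frontend"))  # True
print(orders.accepts("billing"))   # False
```

The design point is that operators change policy in one place, and every proxy picks up the change without per-service reconfiguration.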

The Linkerd 1 service mesh was first to market in 2016. Then Istio, which was first to focus on Kubernetes networking, mostly dominated early enterprise conversations about the technology after its first release from Google and IBM in 2017. Linkerd soon followed with its own service mesh for Kubernetes, Linkerd 2, released in 2018, but so far it has not supported the Envoy open source proxy widely considered the industry standard. Istio prompted an outcry when Google declined to donate it to an open source foundation in July, and it underwent some architectural upheavals with version 1.5 in March.

These events created an opportunity for the field of competitors to expand in the months since. Fresh service mesh alternatives surfaced this year, including an open source service mesh project for Kubernetes launched by Microsoft in August and, within the last month, 1.0 releases of service meshes from Kong and F5 Nginx.

"Worlds are colliding," said Brad Casemore, an analyst at IDC, of the Kong and F5 Nginx forays into service mesh.

Specifically, he was referring to the worlds of API gateways, Kong's traditional specialty, and of network load balancers and application delivery controllers (ADCs), the traditional domain of F5. These areas intersect in ingress controllers, which manage connections from external networks into Kubernetes clusters, Casemore said.

"Some observers perceive service mesh as an evolution of API gateways, but ingress controllers demand more of the robust networking and security functionality that is typically associated with enterprise-grade load balancers and ADCs," he said. "API gateway vendors are now endeavoring to position themselves as networking vendors, albeit at the application layer."

Nginx, Kuma sync service mesh with existing networks

Integration with existing network resources is the main difference between earlier service meshes and newer offerings such as Kuma and F5's Nginx Service Mesh, which reached a beta 1.0 release in mid-September. Both can connect virtual machines and containers in the same mesh, while Istio, Linkerd and Microsoft's Open Service Mesh remain focused on Kubernetes.

Nginx Service Mesh, slated for general availability in early 2021, integrates automatically with F5 load balancers and Nginx ingress controllers. F5 positions it as a lightweight alternative to Aspen Mesh, its own Istio-based product from an F5 incubation project, which the vendor described in a press release as better suited to high-scale Kubernetes environments.

"All of a sudden, Istio's not a lock, and there may be room to position more service meshes that are easier to manage and in line with investments customers have already made in networks, bare metal [servers] and virtual machines," Casemore said. "There's more choice in service mesh, and room for alternatives meant for smaller environments."

Kuma integrates with Kong's open source ingress controller, and the paid version of Kuma, Kong Mesh, can be managed alongside Kong's API gateway and ingress controller through Kong Konnect, a new SaaS management product the company launched this week.

"You need an entry point to the mesh, so you do need some sort of gateway, regardless of what you choose," said Austin Cawley-Edwards, director of data platform at FinTech Studios, a financial analytics company based in New York, which uses Kuma and Kong's ingress controller.

"We had already had some negative experience with the API gateway from AWS -- it was difficult to update both by hand and with automation," Cawley-Edwards said in a presentation at the Kong Summit virtual event this week. "Kong's native Kubernetes ingress controller made integrating with our existing cluster and Helm deployments [easier], and Kong has given us the external control we needed to expose external services securely."

Kuma seeks niche in multi-mesh management

API management vendor Kong first expanded into service mesh last September with its Kuma project, which it donated to the Cloud Native Computing Foundation (CNCF) in June. Kuma reached a stable 1.0 version this week after security and performance improvements by community maintainers.

While Nginx Service Mesh is based in part on the open source Nginx web proxy, it is not an open source project. Kuma, like Linkerd, is governed by the CNCF, while Istio famously is not. Unlike Linkerd, however, Kuma is built on the Envoy proxy, as Istio is.

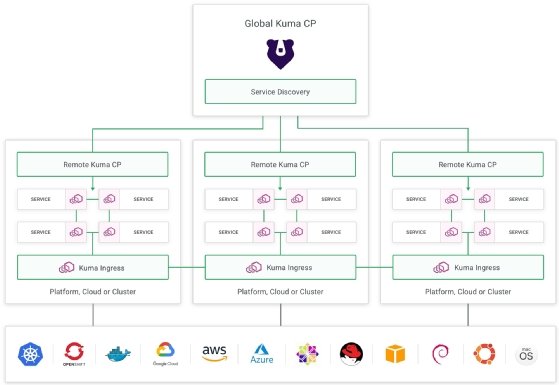

Kuma also takes a novel architectural approach to managing multiple meshes in multiple cloud availability zones, data center locations and Kubernetes clusters. It can manage multiple sub-meshes from a central location without requiring the deployment of separate Kubernetes clusters or tying meshes to Kubernetes namespaces, as Istio does.

Linkerd also avoids those requirements with its multi-cluster support in version 2.8, released in June, through an approach it calls service mirroring. This loosely couples the control planes for multiple clusters, on the basis that a centralized control plane can create performance and availability issues. Kuma, however, centralizes management of multiple meshes through a set of federated remote control planes that Kong officials said dodge the performance and availability problems cited by Linkerd.

"The global and remote control plane separation has been engineered specifically to avoid having a single point of failure," said Marco Palladino, CTO and co-founder at Kong. "The data plane proxies in Kuma always talk to the remote control plane in charge of the region where they are being provisioned … if the global control plane is down, the connectivity among services will keep working, [and] the remote ones could fail, too, and the data plane connectivity would still work [through] data plane proxies."
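
The failure behavior Palladino describes can be sketched in a few lines of Python. This is a simplified, hypothetical model rather than Kuma's actual implementation or API: `GlobalControlPlane`, `RemoteControlPlane` and `DataPlaneProxy` are invented names, and the key assumption is that each proxy keeps the last configuration it received from its zone's remote control plane.

```python
# Hypothetical model for illustration; not Kuma's actual implementation or API.


class DataPlaneProxy:
    """Routes traffic using the last configuration it was sent."""

    def __init__(self, name):
        self.name = name
        self.routes = {}

    def receive(self, routes):
        self.routes = dict(routes)  # cache a copy locally

    def route(self, service):
        # Works from the local cache, with no control plane call.
        return self.routes.get(service)


class RemoteControlPlane:
    """Per-zone control plane; the only one its proxies talk to."""

    def __init__(self, zone):
        self.zone = zone
        self.up = True
        self.proxies = []

    def sync(self, routes):
        if not self.up:
            return  # a downed zone simply stops receiving updates
        for proxy in self.proxies:
            proxy.receive(routes)


class GlobalControlPlane:
    """Holds mesh-wide config and fans it out to the remote control planes."""

    def __init__(self):
        self.up = True
        self.remotes = []
        self.routes = {}

    def publish(self, service, address):
        if not self.up:
            raise RuntimeError("global control plane down: config changes pause")
        self.routes[service] = address
        for remote in self.remotes:
            remote.sync(self.routes)


global_cp = GlobalControlPlane()
us_east = RemoteControlPlane("us-east")
sidecar = DataPlaneProxy("orders-sidecar")
us_east.proxies.append(sidecar)
global_cp.remotes.append(us_east)
global_cp.publish("payments", "10.1.0.7:8080")

# Take down both control planes; the proxy keeps routing from its cache.
global_cp.up = False
us_east.up = False
print(sidecar.route("payments"))  # 10.1.0.7:8080
```

In this model, an outage stops the ability to push new configuration, not existing service-to-service connectivity, which is the trade-off Palladino describes.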

These differences have caught the attention of a Kong customer that signed on as a Kong Mesh early adopter and plans to remotely manage on-premises infrastructure for clients, including those that still use VM-based infrastructure rather than containers.

"I see [Kong Mesh] as a way to extend myself to clients that want to keep data on-premises," said Charles Boicey, chief integration officer and co-founder at healthcare analytics company Clearsense in Jacksonville, Fla. "I don't have to do upgrades and maintenance for 10 different clients in person -- all the improvements that I make within the Clearsense environment can carry through to [customers'] environments."