What is hardware-assisted virtualization?

Hardware-assisted virtualization is the use of a computer's physical components to support the software that creates and manages virtual machines (VMs). Virtualization is an idea that traces its roots back to legacy mainframe system designs of the 1960s.

Some early IBM mainframes ran the Control Program/Conversational Monitor System (CP/CMS) operating system, which could divide the mainframe's available computing resources into isolated environments capable of running enterprise workloads.

With the arrival of the x86-based personal computer in the 1980s, client-server computing gained popularity, and high-volume distributed computing replaced mainframes in some organizations. The concept of virtualization was largely reduced to partitioning hard drives into logical segments, because there was little need for many small, distributed computers to share the limited resources each one possessed.

Over the years, computing power grew far faster than the resource demands of software. CPU clock speeds jumped from megahertz to hundreds of megahertz to gigahertz, and memory capacities rose from hundreds of kilobytes to gigabytes, all within a few decades. At the same time, the number of servers and other computers operating within many enterprises swelled.

The growing need for virtualization

By the close of the 20th century, enterprise computing faced enormous growth challenges from power, cooling and data center space constraints. Around the same time, system engineers realized that modern servers used only a small percentage of their available computing resources. For example, a typical physical server running an enterprise application might use only 5% to 15% of its available resources, leaving the rest idle.

This sparked interest in reviving virtualization technology for the x86 architecture. Designers theorized that a virtualized computer could host multiple workloads, reducing the total number of servers in the environment along with the power, cooling and space those servers required. This concept, later known as server consolidation, was the foundation of many early enterprise virtualization initiatives.
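
The consolidation argument is easy to quantify. The Python sketch below estimates how many hosts could absorb a set of lightly loaded servers. It assumes, hypothetically, that each workload's average load can be expressed as a fraction of one server's capacity, that the new hosts are the same size as the old servers, and that hosts are filled only to a 75% ceiling to leave headroom. The 40-server, 10%-utilization estate is an illustrative input within the utilization range mentioned above, not measured data, and `hosts_needed` is a hypothetical helper.

```python
import math

def hosts_needed(utilizations: list[float], target_ceiling: float = 0.75) -> int:
    """Estimate how many identical hosts are needed to consolidate workloads.

    Each entry is one workload's average load as a fraction of a single
    server's capacity (0.10 means 10%). Hosts are filled only up to
    `target_ceiling` so that some headroom remains for load spikes.
    """
    if not 0 < target_ceiling <= 1:
        raise ValueError("target_ceiling must be in (0, 1]")
    total_demand = sum(utilizations)  # aggregate load, in "servers' worth"
    return math.ceil(total_demand / target_ceiling)

# Hypothetical estate: 40 physical servers, each averaging 10% utilization.
estate = [0.10] * 40
print(hosts_needed(estate))  # -> 6
```

Because the sketch treats load as perfectly divisible and ignores memory, storage and peak demand, its result is a lower bound rather than a capacity plan. Still, it shows why moving dozens of mostly idle servers onto a handful of hosts was so attractive.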

The mid-2000s bring expanded hardware support for virtualization

Early software-based virtualization was held back by the system overhead imposed by binary translation, page-table shadowing and emulation, which limited virtualization efficiency and VM performance. A typical system could host only a few VMs, and few applications performed well, if at all, in those software-based VMs.

The solution to this problem initially came in the form of hardware-assisted virtualization, which added critical virtualization functions to the processor as fast, efficient new instructions. This eventually proved to be a far better approach than software-only virtualization.
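
At a conceptual level, the new instructions let guest code run directly on the CPU until it attempts something sensitive. At that point, the processor performs a "VM exit" that hands control to the hypervisor, which handles the event and re-enters the guest. On Intel VT-x, guests are entered with the VMLAUNCH and VMRESUME instructions, and on AMD-V with VMRUN. The Python sketch below is a toy simulation of that run loop, not real hypervisor code: the `Guest` class, the operation names and the exit reasons are all hypothetical simplifications.

```python
from dataclasses import dataclass, field

# Hypothetical operations that force a VM exit in this toy model.
SENSITIVE = {"cpuid", "io_out", "hlt"}

@dataclass
class Guest:
    program: list[tuple[str, int]]  # (operation, operand) pairs
    pc: int = 0                     # guest program counter
    acc: int = 0                    # a single guest register
    console: list[int] = field(default_factory=list)  # output of a virtual device

def run_until_exit(guest: Guest) -> str:
    """Toy 'VM entry': run guest ops directly until one of them forces a VM exit."""
    while guest.pc < len(guest.program):
        op, arg = guest.program[guest.pc]
        if op in SENSITIVE:
            return op                # real hardware would perform a VM exit here
        if op == "add":
            guest.acc += arg         # ordinary work never involves the hypervisor
        guest.pc += 1
    return "hlt"                     # running off the end is treated as a halt

def vmm_run_loop(guest: Guest) -> int:
    """Toy hypervisor loop: enter the guest, handle each exit, resume. Returns the exit count."""
    exits = 0
    while True:
        reason = run_until_exit(guest)
        exits += 1
        if reason == "hlt":
            return exits
        if reason == "cpuid":
            guest.acc = 0x1234               # hypervisor supplies a virtualized CPUID answer
        elif reason == "io_out":
            guest.console.append(guest.acc)  # emulate a virtual output device
        guest.pc += 1                        # step past the handled instruction, then re-enter

g = Guest([("add", 2), ("add", 3), ("io_out", 0), ("cpuid", 0), ("io_out", 0), ("hlt", 0)])
print(vmm_run_loop(g), g.console)  # -> 4 [5, 4660]
```

The two `add` operations never involve the hypervisor; only the sensitive operations and the final halt cause exits. In real systems, reducing how often exits happen and how much each one costs is a large part of virtualization performance work.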

Both Intel and AMD developed hardware-assisted virtualization extensions (Intel VT-x and AMD-V). The first x86 CPUs with these extensions appeared around 2005 with late-model Intel Pentium 4 processors (models 662 and 672), and in 2006 with AMD Athlon 64, Athlon 64 X2 and Athlon 64 FX processors. In the following years, Intel and AMD further expanded the hardware virtualization capabilities of their processors and associated chipsets.
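
On x86, software can check for these extensions with the CPUID instruction: Intel VT-x is reported as the VMX bit (CPUID leaf 1, ECX bit 5) and AMD-V as the SVM bit (leaf 0x80000001, ECX bit 2). Linux exposes the same capabilities as the `vmx` and `svm` flags in `/proc/cpuinfo`. The Python sketch below assumes a Linux host and parses that file rather than executing CPUID directly; the function names are hypothetical. A missing flag does not always mean the CPU lacks the feature, because an outer hypervisor may hide it from a guest and, depending on the kernel, a firmware setting that disables it may also suppress the flag.

```python
from pathlib import Path
from typing import Optional

def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Collect tokens from every 'flags'-style line, e.g. 'flags' and 'vmx flags'."""
    flags: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip().endswith("flags"):
            flags.update(value.split())
    return flags

def virtualization_extension(flags: set[str]) -> Optional[str]:
    """Name the hardware virtualization extension advertised by the CPU flags, if any."""
    if "vmx" in flags:
        return "Intel VT-x"  # CPUID leaf 1, ECX bit 5
    if "svm" in flags:
        return "AMD-V"       # CPUID leaf 0x80000001, ECX bit 2
    return None

if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")  # present on Linux only
    if cpuinfo.exists():
        print(virtualization_extension(cpu_flags(cpuinfo.read_text())) or "none detected")
    else:
        print("/proc/cpuinfo not found; this sketch assumes Linux")
```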

For example, Intel processors added support for extended page tables (EPT) around 2008, and an unrestricted guest mode appeared around 2010, enabling vCPUs to run in real mode. Hardware support for nested virtualization (running a VM within another VM) appeared around 2013. Virtualization support in other system devices, such as graphics and networking hardware, has also become mainstream. Today, only a small number of purpose-built processors, such as some Intel Atom variants, might lack hardware-assisted virtualization.
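
Extended page tables add a second, hardware-walked stage of address translation. The guest OS maps guest-virtual addresses to what it believes are physical addresses, and the EPT, controlled by the hypervisor, maps those guest-physical addresses to real host-physical memory. (AMD's equivalent is called Nested Page Tables.) Without this feature, hypervisors had to maintain shadow page tables in software. The Python sketch below models the two stages with single-level dictionaries and 4 KiB pages; the function names and page numbers are hypothetical.

```python
PAGE_SIZE = 4096  # assume 4 KiB pages throughout

class PageFault(Exception):
    """Raised when an address has no mapping at one of the two stages."""

def translate(table: dict[int, int], addr: int, stage: str) -> int:
    """Single-level toy page-table lookup: page number -> frame number."""
    page, offset = divmod(addr, PAGE_SIZE)
    if page not in table:
        raise PageFault(f"{stage}: no mapping for page {page:#x}")
    return table[page] * PAGE_SIZE + offset

def gva_to_hpa(gva: int, guest_pt: dict[int, int], ept: dict[int, int]) -> int:
    """Two-stage translation: guest virtual -> guest physical -> host physical."""
    gpa = translate(guest_pt, gva, "guest page table")  # maintained by the guest OS
    return translate(ept, gpa, "EPT")                    # maintained by the hypervisor

guest_pt = {0x10: 0x2}  # guest-virtual page 0x10 -> guest-physical page 0x2
ept = {0x2: 0x7F}       # guest-physical page 0x2 -> host-physical page 0x7F
print(hex(gva_to_hpa(0x10123, guest_pt, ept)))  # -> 0x7f123
```

Real hardware walks multi-level tables, and every guest page-table entry it reads must itself be translated through the EPT, so a TLB miss can cost many more memory accesses than on bare metal. The dictionaries here show only the two stages.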

Intel and AMD aren't the only chip makers to offer hardware-assisted virtualization. The ARM architecture also defines virtualization extensions, which ARM-based chip makers support. However, because Intel and AMD dominate the server and PC processor markets, discussions of hardware-assisted virtualization typically focus on those two vendors to the exclusion of others.

Hardware-assisted virtualization vs. full virtualization and paravirtualization

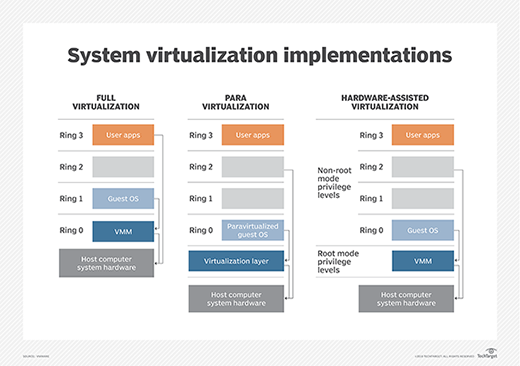

Before hardware-assisted virtualization was introduced and broadly adopted, virtualization was accomplished in software using two techniques: full virtualization and paravirtualization. Since the advent of hardware-assisted virtualization, neither approach is widely used on its own.

The main approach to software-based virtualization was full virtualization, in which the virtual machine monitor (VMM) used binary translation to intercept privileged instructions issued by the guest OS and emulate those functions in software, giving each guest OS the illusion of direct hardware access. But this approach imposed significant system overhead, which limited the number of VMs a system could practically support, and the emulation didn't meet the compatibility or performance needs of every application.

Not every workload would run well, if at all, in a VM under software-based full virtualization. Products built on this software-only approach, such as Microsoft Virtual PC, are now considered obsolete, and VirtualBox, which once offered a software-only mode, now requires hardware-assisted virtualization.
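
The binary-translation approach can be modeled in a few lines. Before guest code runs, the VMM scans it and rewrites privileged instructions into calls to its own emulation routines, which update a virtual CPU's state instead of the real hardware. This Python sketch is a deliberately simplified toy: the instruction names `cli`, `sti` and `mov_cr3` are borrowed from x86 for flavor, but the translator, the `VirtualCPU` fields and the block format are hypothetical.

```python
from dataclasses import dataclass

PRIVILEGED = {"cli", "sti", "mov_cr3"}  # toy subset of x86 privileged instructions

@dataclass
class VirtualCPU:
    interrupts_enabled: bool = True
    cr3: int = 0       # guest's view of its page-table base register
    emulated: int = 0  # count of instructions handled in software

def translate_block(block):
    """Rewrite privileged instructions into ('vmm', ...) emulation calls."""
    return [("vmm", op, arg) if op in PRIVILEGED else ("native", op, arg)
            for op, arg in block]

def emulate(vcpu: VirtualCPU, op: str, arg: int) -> None:
    """Apply a privileged instruction to the virtual CPU, never the real one."""
    vcpu.emulated += 1
    if op == "cli":
        vcpu.interrupts_enabled = False
    elif op == "sti":
        vcpu.interrupts_enabled = True
    elif op == "mov_cr3":
        vcpu.cr3 = arg  # a real VMM would also switch to or rebuild shadow page tables

def run(block, vcpu: VirtualCPU) -> int:
    """Translate a block, then execute it; returns the guest's accumulator."""
    acc = 0
    for kind, op, arg in translate_block(block):
        if kind == "vmm":
            emulate(vcpu, op, arg)
        elif op == "add":
            acc += arg  # unprivileged work runs unchanged
    return acc

vcpu = VirtualCPU()
total = run([("cli", 0), ("add", 5), ("mov_cr3", 0x9000), ("add", 1), ("sti", 0)], vcpu)
print(total, vcpu.emulated, hex(vcpu.cr3))  # -> 6 3 0x9000
```

Three of the five instructions take the slower emulation path. That per-instruction cost, plus the cost of translating the code in the first place, is the kind of overhead that limited how many VMs a software-only system could run.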

The alternative to full virtualization is paravirtualization. In this software-based model, the hypervisor provides an API offering virtualization functions, and the guest OS in each VM makes calls to that API (often called hypercalls) to use the hypervisor's virtualization features. The problem is that OSes such as Windows didn't natively support such APIs. Paravirtualization required extensive modifications to the guest OS, which were difficult to make, particularly for closed-source OSes.
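
The hypercall contract can be sketched as follows. Instead of executing a privileged instruction and relying on the hypervisor to intercept it, the modified guest kernel asks the hypervisor directly, and it can batch several requests into one call to reduce costly guest-to-hypervisor transitions. The `Hypervisor` and `ParavirtGuestKernel` classes and the `update_mappings` hypercall are hypothetical illustrations in Python, not the interface of Xen or any other real hypervisor.

```python
class Hypervisor:
    """Toy hypervisor exposing a hypercall API to paravirtualized guests."""

    def __init__(self) -> None:
        self.page_tables: dict[int, dict[int, int]] = {}  # per-guest mappings
        self.transitions = 0  # guest-to-hypervisor switches, the expensive part

    def hypercall(self, guest_id: int, name: str, *args):
        """Single entry point: dispatch a named hypercall to its handler."""
        self.transitions += 1
        handler = getattr(self, f"_hc_{name}", None)
        if handler is None:
            raise ValueError(f"unknown hypercall {name!r}")
        return handler(guest_id, *args)

    def _hc_update_mappings(self, guest_id: int, updates: list[tuple[int, int]]) -> int:
        # The hypervisor validates and applies the guest's requested updates.
        table = self.page_tables.setdefault(guest_id, {})
        for vpage, ppage in updates:
            if ppage < 0:
                raise ValueError("invalid physical page")
            table[vpage] = ppage
        return len(updates)

class ParavirtGuestKernel:
    """A guest OS modified to call the hypervisor instead of touching hardware."""

    def __init__(self, hv: Hypervisor, guest_id: int) -> None:
        self.hv, self.guest_id = hv, guest_id

    def map_pages(self, updates: list[tuple[int, int]]) -> int:
        # One hypercall carries the whole batch of page-table changes.
        return self.hv.hypercall(self.guest_id, "update_mappings", updates)

hv = Hypervisor()
kernel = ParavirtGuestKernel(hv, guest_id=1)
kernel.map_pages([(0, 10), (1, 11), (2, 12)])
print(hv.transitions, hv.page_tables[1])  # -> 1 {0: 10, 1: 11, 2: 12}
```

The catch is visible in the code: `ParavirtGuestKernel` had to be written against this API, whereas an unmodified OS has no idea such calls exist.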

Linux kernels 2.6.23 and later still support paravirtualization through an interface called pv-ops. An alternative interface, VMware's Virtual Machine Interface (VMI), has since been deprecated and removed from VMware products and from the Linux kernel (as of version 2.6.37) in favor of more efficient hardware-assisted virtualization.
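
pv-ops works by routing a set of sensitive low-level kernel operations through a table of function pointers that is filled in at boot. On bare metal the entries point to native implementations, and under a supported hypervisor they point to hypervisor-aware versions, so a single kernel binary can run either way. The Python sketch below mimics that indirection. The `PvOps` fields, the `running_under_hypervisor` flag and both sets of implementations are hypothetical stand-ins, not the kernel's actual paravirt structures.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class PvOps:
    """Toy table of function pointers for sensitive operations."""
    irq_disable: Callable[[], str]
    write_cr3: Callable[[int], str]

# Native versions: stand-ins for executing the privileged instruction directly.
NATIVE_OPS = PvOps(
    irq_disable=lambda: "cli",
    write_cr3=lambda base: f"mov cr3, {base:#x}",
)

# Paravirtual versions: stand-ins for asking the hypervisor to do the work.
HYPERVISOR_OPS = PvOps(
    irq_disable=lambda: "pv: mask virtual interrupts",
    write_cr3=lambda base: f"pv: ask hypervisor to load page table {base:#x}",
)

def select_ops(running_under_hypervisor: bool) -> PvOps:
    """Chosen once at boot; the rest of the kernel only calls through the table."""
    return HYPERVISOR_OPS if running_under_hypervisor else NATIVE_OPS

def context_switch(ops: PvOps, next_page_table: int) -> list[str]:
    # Generic kernel code: identical regardless of which table was selected.
    return [ops.irq_disable(), ops.write_cr3(next_page_table)]

print(context_switch(select_ops(False), 0x5000))
print(context_switch(select_ops(True), 0x5000))
```

Because the selection happens once at boot, the same kernel image can run on bare metal or as a paravirtualized guest. The real kernel additionally patches call sites so that the native path costs little more than the original instruction.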