What is change failure rate (CFR)?

Change failure rate (CFR) is a software development performance metric that measures the percentage of deployments to production that required remediation after release. A lower CFR indicates that fewer problems escaped the development and testing phases of the work cycle.

Software teams use development methodologies such as Agile and DevOps to build products through frequent and continuous cycles of iteration. Each new software build brings changes such as new features and functionality, performance enhancements, and fixes for better reliability.

Not all changes the software team attempts are successful. A change can introduce new software defects, called bugs, that testing did not detect. A rewritten piece of the software can cause incompatibilities with dependencies within the product. A release might work correctly but fail to meet customer experience expectations. These situations require more software engineering time to assess and address. A change failure is any incident that requires remediation, such as a patch, a code rollback or a hotfix.

The CFR is the proportion or percentage of releases to production that are associated with failure incidents.

The CFR metric is promoted by the DevOps Research and Assessment (DORA) group. Organizations might use CFR in conjunction with the other DORA metrics: deployment frequency, lead time for changes, and mean time to restore. Used together with these and other relevant metrics, CFR can provide insight into the efficiency and effectiveness of an organization's software development practices.

How to calculate the change failure rate

CFR is calculated by dividing the number of deployments that resulted in a change failure over a given period by the total number of deployments over the same period. This produces a ratio from 0 to 1, which CFR users generally multiply by 100 to express as a percentage.
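
Expressed as a formula (the symbol names are chosen here for illustration), where N_failed is the number of deployments in the period that resulted in a change failure and N_deploy is the total number of deployments in that period:

```latex
\mathrm{CFR} = \frac{N_{\mathrm{failed}}}{N_{\mathrm{deploy}}} \times 100\%,
\qquad 0 \le N_{\mathrm{failed}} \le N_{\mathrm{deploy}}
```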

Example CFR calculation. A DevOps team releases 30 changes to production in a month. Ten of those changes required deliberate remediation once released to production. The CFR ratio is 10/30, or approximately 0.33. The team tracks CFR as a percentage, so it calculates (10/30) x 100 to get approximately 33%.
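
As a minimal sketch of the arithmetic above, the following Python function computes CFR as a percentage from two counts for a single reporting period. The function name and its input checks are illustrative choices, not part of any standard tool.

```python
def change_failure_rate(failed_deployments: int, total_deployments: int) -> float:
    """Return CFR as a percentage (0 to 100) for one reporting period."""
    if total_deployments <= 0:
        raise ValueError("total_deployments must be positive")
    if not 0 <= failed_deployments <= total_deployments:
        raise ValueError("failed_deployments must be between 0 and total_deployments")
    ratio = failed_deployments / total_deployments  # value in the range 0 to 1
    return ratio * 100  # convert the ratio to a percentage


# The worked example: 10 of 30 deployments needed remediation.
cfr = change_failure_rate(10, 30)
print(f"CFR: {cfr:.1f}%")  # CFR: 33.3%
```

Rejecting a period with zero deployments avoids a division by zero and reflects the point that a period with nothing deployed has no meaningful CFR.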

CFR accuracy. The key to successful CFR tracking is proper data gathering. The math is simple, but the adage "garbage in, garbage out" applies. Development teams must count the total number of deployments and then track and associate incidents with each of those deployments fairly and consistently.

Data gathering is subject to errors and oversights that can potentially skew the results. Common CFR errors include the following:

- Delivery counted as deployment. Builds that are delivered but not deployed should not be counted by this performance metric. A build might not be deployed for many reasons; for example, the business might add a new feature requirement. Something that was never deployed cannot fail in deployment.

- Including external incidents. Incidents such as network outages, hardware failures, or faults in a database server or other supporting services unrelated to the build should not be counted as change failures.

- Inconsistent or unrealistic incident scope. Incidents can include a range of issues, such as performance or stability degradation, rather than only outright failures. Teams should use application performance monitoring and other relevant tools to recognize non-catastrophic incidents, then apply a consistent definition of which incidents count toward the CFR calculation.
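
The three counting rules above can be applied in code before dividing. The sketch below assumes a hypothetical record format: each build notes whether it reached production and which incidents followed it, and each incident is flagged as caused by the change (or not) and as requiring remediation (or not). Using "required remediation" as the scope test follows the definition of a change failure given earlier, but each team should settle on its own scope rule.

```python
from dataclasses import dataclass, field


@dataclass
class Incident:
    # Hypothetical fields, not taken from any specific tool.
    caused_by_change: bool      # False for external causes (network, hardware, database outages)
    required_remediation: bool  # True if a patch, rollback or hotfix was needed


@dataclass
class Build:
    build_id: str
    deployed_to_production: bool  # False if the build was delivered but never deployed
    incidents: list[Incident] = field(default_factory=list)


def is_change_failure(build: Build) -> bool:
    """A deployed build fails if any change-related incident needed remediation."""
    return any(i.caused_by_change and i.required_remediation for i in build.incidents)


def cfr_from_builds(builds: list[Build]) -> float:
    """Return CFR as a percentage after applying the counting rules."""
    # Rule 1: only builds that actually reached production count as deployments.
    deployed = [b for b in builds if b.deployed_to_production]
    if not deployed:
        raise ValueError("no production deployments in this period")
    # Rules 2 and 3: skip external incidents and incidents outside the agreed scope.
    failed = sum(1 for b in deployed if is_change_failure(b))
    return failed / len(deployed) * 100


builds = [
    Build("b1", True),
    Build("b2", True, [Incident(caused_by_change=True, required_remediation=True)]),
    Build("b3", True, [Incident(caused_by_change=False, required_remediation=True)]),  # network outage
    Build("b4", False),  # delivered but not deployed, so excluded
]
print(f"CFR: {cfr_from_builds(builds):.1f}%")  # CFR: 33.3%
```

Only b2 counts as a failure, and b4 drops out of the denominator, so the result is 1 of 3 deployments rather than 2 of 4.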

How to use CFR

Once a team has a CFR figure, business and software engineering project leaders can evaluate and interpret it. Changes to the production application and IT environment demand development and testing time as well as other employee resources. Each change failure means the business must spend additional time fixing the problems that change introduced.

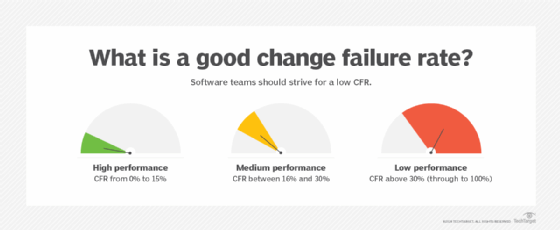

Low and falling CFR figures are desirable, while high CFR figures and rising CFR trends are undesirable. Generally, a low CFR is from 0% to 15%. A rate in this range indicates high software development and delivery performance. A CFR above 15% and up to 30% indicates medium performance. Higher CFRs, above 30%, represent low performance that teams should work to improve.
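
A minimal sketch of how a team might label a CFR figure with the bands above and check whether it is rising or falling. Treating 15% and 30% as the inclusive upper edges of the high and medium bands is an assumption about how to handle the band boundaries; the function names and monthly values are hypothetical.

```python
def cfr_performance_tier(cfr_percent: float) -> str:
    """Map a CFR percentage to a performance band (band edges assumed inclusive)."""
    if not 0 <= cfr_percent <= 100:
        raise ValueError("CFR must be between 0 and 100 percent")
    if cfr_percent <= 15:
        return "high"
    if cfr_percent <= 30:
        return "medium"
    return "low"


def cfr_trend(history: list[float]) -> str:
    """Compare the most recent period's CFR with the period before it."""
    if len(history) < 2:
        return "not enough data"
    previous, latest = history[-2], history[-1]
    if latest < previous:
        return "falling"
    if latest > previous:
        return "rising"
    return "flat"


monthly_cfr = [33.3, 24.0, 12.5]  # illustrative values, not real data
print(cfr_performance_tier(monthly_cfr[-1]), cfr_trend(monthly_cfr))  # high falling
```

Comparing only the last two periods is deliberately simple; a team with noisy monthly figures might prefer a moving average before judging the trend.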

Software development organizations should consider tracking CFR as a metric if they want to increase delivery velocity and reduce lead time, delivering more builds faster. Organizations early in their DevOps journey typically have plenty of opportunity to improve DevOps code quality, testing and workflows. These companies can gain insights into DevOps maturity by tracking CFR.

CFR is also valuable in larger and more-established software organizations where there are many projects and an assortment of teams, including a mix of in-house teams, contractors and offshore providers. The difference of just a few percentage points can translate to significant time and cost for large, complex software businesses.

The problem with 0 CFR

The theoretical ideal for CFR is zero -- no failures when a build deploys to production. Although businesses strive to reduce CFR, they're unlikely to reach 0 CFR. Change failures are like the defects that cause them: virtually inevitable. Developers make mistakes, and testing tools and strategies are not perfect. Seeking to achieve and maintain 0 CFR would prove costlier and more problematic for the business than managing a realistic CFR range.

Do not demand 0 CFR. Instead, establish an acceptable rate of change failures that the business can achieve and maintain as a benchmark. CFR levels of 15% or less typically indicate an effective and efficient development operation. If CFR stays at or below that benchmark, the business is spending an acceptable amount of resources on failure remediation. If CFR rises above it, the business has an objective basis to investigate and correct the excessive failure rate.

Performance metrics related to CFR

CFR is rarely used alone and is frequently tracked alongside the other DORA metrics: deployment frequency, lead time for changes and mean time to restore service. A mix of performance metrics can bring useful perspective to software development efficiency. Here is a brief explanation of how each of the other DORA metrics interacts with CFR:

- Deployment frequency. DF is a measure of development velocity that tracks how often the business releases new builds to production. Lowering CFR while increasing DF can indicate that the team is improving its development efficiency and implementing more effective workflows. It can translate to lower development costs or more productivity for the same cost. By contrast, increasing DF with an increasing CFR might mean inadequate testing or poor code quality in the rush to production.

- Lead time. LT measures how long the business takes to go from a code commit to a deployment. Lowering CFR while decreasing LT also suggests better development efficiency and workflows, because each build takes less time to complete. By contrast, decreasing LT with a rising CFR can indicate problems in tooling and workflows.

- Mean time to restore. MTTR measures remediation time: the time from incident detection to restored normal service. MTTR is tangential to CFR; it reflects how quickly the team recovers from failures rather than how often they occur. A falling MTTR indicates growing team expertise in incident management.
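
Lead time and MTTR both reduce to the same calculation, an average over start and end timestamps. The sketch below shows that shared calculation and encodes the DF-versus-CFR reading described above as a rough rule. The function names, sample timestamps and reading texts are hypothetical, and deployment frequency is assumed to be measured as deployments per period.

```python
from datetime import datetime, timedelta
from statistics import mean


def mean_duration(intervals: list[tuple[datetime, datetime]]) -> timedelta:
    """Average length of (start, end) intervals.

    Lead time: start = code commit, end = production deployment.
    MTTR: start = incident detected, end = service restored.
    """
    if not intervals:
        raise ValueError("no intervals to average")
    return timedelta(seconds=mean((end - start).total_seconds() for start, end in intervals))


def read_df_and_cfr(df_before: float, df_after: float,
                    cfr_before: float, cfr_after: float) -> str:
    """Rough reading of how deployment frequency and CFR moved between two periods."""
    if df_after > df_before and cfr_after < cfr_before:
        return "faster and fewer failures: workflows likely improving"
    if df_after > df_before and cfr_after > cfr_before:
        return "faster but more failures: check testing and code quality"
    return "no clear signal: review lead time and MTTR as well"


# Illustrative incidents, restored after 90 and 30 minutes respectively.
incidents = [
    (datetime(2024, 5, 1, 9, 0), datetime(2024, 5, 1, 10, 30)),
    (datetime(2024, 5, 8, 14, 0), datetime(2024, 5, 8, 14, 30)),
]
print("MTTR:", mean_duration(incidents))  # MTTR: 1:00:00
print(read_df_and_cfr(20, 30, 33.3, 20.0))  # faster and fewer failures: workflows likely improving
```

A rule this coarse is only a prompt for investigation; the same numbers should still be read in context with lead time and MTTR.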

Reducing change failure rate

Software development organizations have numerous strategies to reduce CFR:

- Work small and fast. Iterative software development paradigms, such as DevOps, favor small and frequent changes. Keeping each cycle short and limited in scope reduces the amount of change in each release, clarifies testing objectives, and makes problems easier to track and remediate. If you change only one part and the build breaks, it's easy to know where the problem is.

- Improve code quality. Use tools and policies to manage the ways that software is designed and built. Code checkers can operate automatically to identify style, syntax and other quality problems, often within the IDE and compiler. Addressing code quality issues is commonly part of a change management process.

- Invest in testing. Testing should be appropriate to the changes in the build. When testing is inadequate or does not properly examine the code changes, there is no way to validate the integrity of those changes. Incomplete testing lets undiscovered bugs reach production.

- Improve bug reporting. Use tools and practices to track deployment failures. Gather information about the type of each failure and its implications. This context can aid in remediation and offer insights about root causes. Understanding why failures happened can inform better coding and testing in future releases.

- Use code reviews. Automation has its limits. Have staff members perform in-depth code reviews, and don't merge pull requests without a satisfactory review. Pulling code, making changes and then merging those changes without adequate review could introduce errors that lead to deployment failures.
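
Recording a category for each failure, as suggested under bug reporting, makes it possible to see which root causes recur most often. The sketch below assumes a hypothetical failure log of (deployment ID, category) pairs; the IDs and categories are invented examples, not output from any real tool.

```python
from collections import Counter

# Hypothetical failure log recorded as each change failure was remediated.
failure_log = [
    ("deploy-101", "untested code path"),
    ("deploy-104", "dependency incompatibility"),
    ("deploy-109", "untested code path"),
    ("deploy-112", "configuration error"),
    ("deploy-115", "untested code path"),
]


def top_failure_causes(log: list[tuple[str, str]], n: int = 3) -> list[tuple[str, int]]:
    """Return the n most common failure categories with their counts."""
    return Counter(category for _, category in log).most_common(n)


for category, count in top_failure_causes(failure_log):
    print(f"{category}: {count}")
```

In this invented log, untested code paths dominate, which would point the team toward the testing practices above rather than, say, dependency management.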

Tools to manage change failure rate

DevOps toolchains often include the capability to track the software development and delivery metrics that organizations use to measure CFR. Software lifecycle tools that address CFR include the following:

- DX, a platform for tracking developer and software team productivity.

- Hatica, a project management and analytics tool for development teams.

- Jit Open ASPM Platform, which tracks automated security testing during development.

- LaunchDarkly, a release management tool that uses feature flags to deploy changes.

- Metridev, a metrics tracking platform for development teams and leadership.

- Opsera Unified Insights, a tool for tracking metrics through the CI/CD toolchain.

- Swarmia, a productivity tracking tool for software teams with reporting.

- Waydev, an analytics tool to track development initiatives.

History of change failure rate

The history of CFR is closely tied to the emergence of DORA. DORA started as a company that sought to correlate DevOps practices and outcomes with business success.

The DORA team identified four metrics that correlate closely with organizational performance: DF, change LT, CFR and MTTR. In addition, the DORA team found that trunk-based development approaches were prevalent among high-performing teams. Trunk-based development is a version control practice in which developers merge small, frequent updates to a core trunk or main branch.

DORA published its first findings in the "2014 State of DevOps Report" and has since released annual reports and other guides.

Google acquired DORA in December 2018. DORA continues to operate as a research business dedicated to understanding software delivery and business performance.