
What's the role of an application load balancer vs. API gateway?

Application load balancers and API gateways both manage network traffic. Learn the differences between them and how to use them together in a modern IT deployment.

Load balancers and API gateways both handle network traffic, but the services function and support enterprise networks differently.

The conversation around network traffic management shouldn't focus exclusively on application load balancers vs. API gateways: Organizations can use the two together, but one doesn't require the other. For example, an API gateway connects microservices, while load balancers distribute traffic across multiple instances of the same microservice component as it scales out.

The choice of application load balancer (ALB) vs. API gateway should depend on the business need and use case. When application access is the goal, an ALB is typically the right choice. When an API is used to connect a client application with business data and back-end systems, an API gateway is the appropriate addition.

What is an application load balancer?

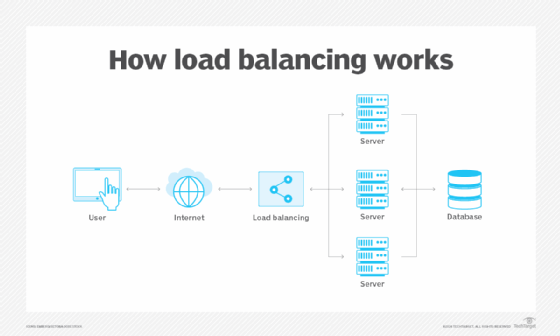

An ALB distributes incoming network traffic across two or more servers. An enterprise network sees high volumes of traffic, and an application that runs on a single server can lack sufficient network or compute power to handle all the traffic requests it receives.

To meet that demand, an enterprise runs two or more application instances concurrently -- often on two or more physical servers. This gives the application the capacity to handle all of the traffic. The redundancy also helps ensure high availability (HA): If one server fails, the load balancer redirects traffic to the remaining instances on other servers. Load balancers decide which server receives each request using algorithms such as the following:

- Round robin algorithm. Distributes traffic evenly by sending each new request to the next server in turn. For example, in a two-server configuration, this algorithm assigns roughly 50% of traffic to each server. A weighted round robin algorithm shifts that balance according to each server's capacity or other preferences.

- Least connections algorithm. Directs traffic to the server with the fewest active connections, which is useful when an environment's servers vary in capabilities.

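- IP hash algorithm. Directs network traffic to servers based on a hash of the request's source IP address, so a given client consistently reaches the same server. Routing by origin can also help in IT environments in which servers reside in different geographic regions, although sending traffic to the closest physical server for the lowest application latency generally depends on geolocation or latency-based rules rather than on the hash itself.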

- Adaptive algorithm. Allows traffic distribution to be adjusted dynamically based on the status of each server.
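The selection algorithms listed above can be sketched in a few lines of Python. This is a minimal illustration rather than how any particular load balancer implements them: the function names and the `app-1`/`app-2` server names are hypothetical, and IP hash is assumed here to mean hashing the client's source address to choose a server.

```python
import hashlib
import itertools


def round_robin(servers):
    """Yield servers in a repeating cycle so each gets an equal share."""
    return itertools.cycle(servers)


def weighted_round_robin(weights):
    """Yield servers in proportion to integer weights, e.g. {"app-1": 3, "app-2": 1}."""
    expanded = [name for name, weight in weights.items() for _ in range(weight)]
    return itertools.cycle(expanded)


def least_connections(active):
    """Pick the server with the fewest active connections."""
    return min(active, key=active.get)


def ip_hash(client_ip, servers):
    """Map a client IP to a server so the same client lands on the same server."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


servers = ["app-1", "app-2"]  # hypothetical server names

rr = round_robin(servers)
print([next(rr) for _ in range(4)])

wrr = weighted_round_robin({"app-1": 3, "app-2": 1})
print([next(wrr) for _ in range(4)])

print(least_connections({"app-1": 12, "app-2": 4}))
print(ip_hash("203.0.113.7", servers))
```

An adaptive algorithm would feed live server status, such as response times or CPU load, into a selection function like `least_connections` instead of a static count.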

An organization can deploy a load balancer as a dedicated physical device or as software that runs on virtual servers.

Application load balancer functions

A typical application load balancer can offer a wide array of features intended to handle traffic and log important behaviors. Common ALB features include the following:

- Traffic support. An ALB can support applications using HTTP, HTTPS and HTTP/2, as well as WebSockets and Secure WebSockets. Look for IPv4 and IPv6 support.

- SSL termination. Normally an encrypted request remains encrypted all the way to the target, but SSL termination allows the load balancer to decrypt the request before forwarding that traffic to the target. This can aid performance for some applications because the targets no longer spend compute power on decryption.

- Session affinity. Load balancers are traditionally session-agnostic, routing any request to any target -- essentially supporting stateless application behavior. Session affinity (aka sticky sessions) routes requests from the same client to the same target, allowing some level of stateful behavior.

- Authentication support. Load balancers often include support for certificate management functions such as multiple certificates and certificate binding (provisioning an SSL/TLS certificate to a load balancer).

- Request tracing. Tracing features can be enabled to track HTTP requests between clients and targets or other services on the network. This can help with security and troubleshooting.

- Access logs. Logs can record all requests sent to the load balancer for later analysis and troubleshooting as needed.

- Group support. Load balancers can include group support to help organize and restrict the traffic allowed to and from the ALB. Such features are important for network security as well as application performance management.

- Service integrations. Load balancers often integrate with other network services -- especially cloud-based load balancers. For example, a load balancer designed for Amazon Web Services is likely to provide integrations for AWS services such as CloudWatch, Web Application Firewall (WAF), AWS Certificate Manager and CloudTrail.
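Session affinity from the feature list above is easier to picture with a small example. The sketch below assumes the client presents a session identifier (for instance, from a cookie); the `StickyBalancer` class and target names are hypothetical and do not reflect any vendor's implementation.

```python
import itertools


class StickyBalancer:
    """Round robin for new clients; known sessions stay on their first target."""

    def __init__(self, targets):
        self._targets = list(targets)
        self._cycle = itertools.cycle(self._targets)
        self._sessions = {}  # session id -> pinned target

    def route(self, session_id=None):
        # Without a session id the request is treated as stateless.
        if session_id is None:
            return next(self._cycle)
        # The first request for a session pins it to the next target in rotation.
        if session_id not in self._sessions:
            self._sessions[session_id] = next(self._cycle)
        return self._sessions[session_id]


balancer = StickyBalancer(["target-1", "target-2"])
print(balancer.route("session-abc"))  # pinned on first use
print(balancer.route())               # stateless request, next in rotation
print(balancer.route("session-abc"))  # same target as the first call
```

The pinning table is the tradeoff: it enables stateful behavior, but a session is tied to one target for as long as the entry exists.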

Application load balancer benefits and drawbacks

Load balancers are commonplace in enterprise applications, and they carry an assortment of tradeoffs that application architects and IT staff should consider. The benefits of an ALB are straightforward:

- Superior application availability. A single application instance poses a single point of failure. Using a load balancer to direct traffic across multiple application instances helps to ensure overall application availability. When a failure is detected in one server, the ALB can redirect traffic to other server instances, keeping the application available to users.

- Application scaling. A load balancer is key to running multiple application instances (servers), which is vital when an application needs to be scaled beyond the compute and network capacity of a single instance. Application scaling almost always involves the use of load balancers.

- Better performance. The ability to direct traffic between multiple instances helps to ensure that no single server is overburdened with excessive traffic. Multiple load balanced instances can bring better network and application performance -- yielding better business outcomes.

- Better UX. Users expect HA and responsiveness from enterprise applications. Consequently, the availability and performance benefits of load balancers typically translate into better customer satisfaction and a superior UX.

- Improved security. Many modern load balancers include security features and protocols that can monitor and mitigate malicious activity, block DDoS attacks and support powerful data encryption/decryption capabilities to protect application data against loss or theft.
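The availability benefit described above comes down to skipping servers that are known to be down. The following sketch assumes some external health check calls `mark_down` and `mark_up`; the class and method names are hypothetical.

```python
class FailoverBalancer:
    """Round robin that skips servers currently marked unhealthy."""

    def __init__(self, servers):
        self._servers = list(servers)
        self._healthy = set(self._servers)
        self._next = 0

    def mark_down(self, server):
        # Called by a (hypothetical) health check when a server stops responding.
        self._healthy.discard(server)

    def mark_up(self, server):
        # Called when the server passes health checks again.
        if server in self._servers:
            self._healthy.add(server)

    def pick(self):
        # Try each server at most once per call, starting at the rotation point.
        for _ in range(len(self._servers)):
            candidate = self._servers[self._next]
            self._next = (self._next + 1) % len(self._servers)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy servers available")


lb = FailoverBalancer(["app-1", "app-2", "app-3"])
lb.mark_down("app-2")
print([lb.pick() for _ in range(4)])  # app-2 is skipped while it is down
```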

Despite the important benefits application load balancers provide, there are several drawbacks that can impact the use of ALBs in enterprise application environments:

- Algorithm selection. Distance matters in networks, and the load balancing algorithm must be selected with care. For example, some algorithms are intended for local applications, and geographic distances and network disruptions can pose challenges for them.

- Single point of failure. While the ALB is intended to improve application availability and performance, the ALB itself can become a single point of failure. Application architects designing a deployment environment for HA applications may need to implement an HA load balancing scheme to prevent the load balancer from being a single point of failure. Similarly, some features such as session affinity can create a persistent relationship between a client and a target that itself becomes a potential single point of failure.

- New traffic needs. Not all traffic is created equal, and load balancers might not easily accommodate new and emerging types of data traffic. For example, an ALB designed to handle common file access tasks might not perform as well with more demanding data types such as IoT device data. In these cases, existing load balancers may need to be updated or replaced with newer models intended to support more demanding traffic needs.

- The impact of algorithms. The ALB must process and make decisions about every piece of traffic that arrives. This introduces inevitable latency, which can have a small but meaningful impact on application responsiveness and performance. A poorly matched algorithm or an underpowered load balancer can unintentionally reduce application performance.

What is an API gateway?

An API gateway is an organizer and translator that connects various -- often unrelated -- pieces of software.

An API gateway also manages network traffic, but in a different way than a load balancer. Today's software relies increasingly on APIs to integrate disparate components of an application and enable those components to communicate. An API gateway takes API requests from a client -- which is the software that makes an API call -- and sorts out the destination applications or services necessary to handle those calls. API gateways also manage all the translations and protocols between different pieces of software.

API gateways are often associated with microservices application design and deployment. Enterprises are designing and building modern applications as a series of independent services, rather than a single monolithic application. Those services communicate via APIs; the API gateway ensures that those services -- and the many API calls exchanged between those services -- interoperate properly in an overall deployment.
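As a rough illustration of how a gateway sorts out the destination service for each call, the sketch below maps request path prefixes to upstream services. The route table, the internal hostnames and the decision to strip the prefix before forwarding are all assumptions made for the example.

```python
# Hypothetical route table: public path prefix -> internal service base URL.
ROUTES = {
    "/orders": "http://orders.internal:8080",
    "/users": "http://users.internal:8080",
}


def resolve(path, routes=ROUTES):
    """Return the upstream URL for a request path, or None if no route matches."""
    # Check longer prefixes first so more specific routes win.
    for prefix in sorted(routes, key=len, reverse=True):
        if path == prefix or path.startswith(prefix + "/"):
            # Strip the public prefix and forward the remainder of the path.
            return routes[prefix] + path[len(prefix):]
    return None


print(resolve("/orders/42"))
print(resolve("/payments"))
```

A real gateway would layer protocol translation, authentication and response handling on top of this lookup.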

Organizations implement gateways as a service, and most frequently deploy them as a software instance on a VM.

API gateway functions

While an API facilitates communication and exposes enterprise applications and data, the API gateway provides several broad managerial and gatekeeping functions:

- Traffic management features. This is the heart of any API gateway, which can be a critical gatekeeper needed to manage countless API client requests. Traffic management features can include rate limiting, routing, load balancing, error handling, application or service health checks, request responses and even support for A/B or other testing schemes.

- Security features. Security is vital to ensure that API requests come from clients that are authorized to access the applications, services and data exposed by the API. The API gateway typically handles the authentication, authorization, encryption and access controls -- such as role-based access control -- needed to keep enterprise data secure. Some API gateways may also include features such as a WAF and protection against DoS attacks.

- Monitoring features. Since the API gateway handles all the API client requests and responses, it's the perfect place to implement monitoring and observability features including request tracing and activity logging, along with desirable metrics and KPIs. This provides detailed insights into the organization's API system, how it's used and any problems that might arise.

- Scalability features. An API gateway is typically implemented as a service or application, but the traffic handling capabilities of the service are finite. The API gateway should support running parallel instances to handle more requests, as well as redundancy for better API gateway service availability.
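Rate limiting, one of the traffic management features listed above, is commonly built on a token bucket. The sketch below is one possible approach, not a description of any specific gateway; the `TokenBucket` class, its parameters and the injectable `clock` are assumptions chosen to keep the example small and testable.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self._clock = clock
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self):
        now = self._clock()
        # Add the tokens earned since the last call, capped at the bucket size.
        elapsed = now - self._last
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        self._last = now
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False  # Caller would typically respond with HTTP 429.


bucket = TokenBucket(rate=5, capacity=10)
print(bucket.allow())
```

A gateway would usually keep one bucket per client or API key so that a single noisy client cannot exhaust the shared limit.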

API gateway benefits and drawbacks

Although an API gateway is not a required component of an API system, a modern API gateway is often deemed essential because of the benefits that it provides:

- Streamlined API operation. Rather than passing an API call to an API directly, the API gateway serves as the common gatekeeper for all API exchanges between a client application and the business. This central service provides a single means of monitoring and controlling all API traffic and managing call volumes to maintain reliable and responsive client application performance.

- Flexible operation. The presence of a common API gateway allows business and technology leaders to tailor policies and rule sets that can govern API access, responses and security in close alignment with business and compliance requirements. When business needs change, it's a simple matter to adjust policies and rule sets at one central point within the API gateway.

- Observability and insight. The monitoring and logging capabilities provided by an API gateway are critical for gaining insight into the behaviors and performance of the API and back-end systems involved. This is essential to maintain security, track usage to justify scaling or upgrades and troubleshoot problems as they emerge.
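The idea of governing access from one central rule set can be sketched as a small policy table that is checked for every call. The rules, roles and paths below are hypothetical, and the first-match, default-deny behavior is a design assumption rather than a standard.

```python
from fnmatch import fnmatchcase

# Hypothetical rule set: (HTTP method, path pattern, roles allowed).
POLICIES = [
    ("GET", "/orders/*", {"customer", "support", "admin"}),
    ("POST", "/orders/*", {"customer", "admin"}),
    ("*", "/admin/*", {"admin"}),
]


def is_allowed(method, path, roles, policies=POLICIES):
    """Return True if the first matching rule grants any of the caller's roles."""
    for rule_method, pattern, allowed in policies:
        if rule_method in ("*", method) and fnmatchcase(path, pattern):
            return bool(allowed & set(roles))
    return False  # Default deny when no rule matches.


print(is_allowed("GET", "/orders/42", ["support"]))
print(is_allowed("POST", "/orders/42", ["support"]))
```

Because every request passes through the same table, a change in business or compliance requirements means editing `POLICIES` in one place.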

While the benefits of API gateways have solidified their role in modern API systems, API gateways can also pose several challenges that IT and business leaders should consider:

- Single point of failure. As with load balancers, an API gateway is typically implemented as a single service located directly in the traffic path between clients and local services. A fault within the API gateway, such as a failed API gateway server or service, can effectively render the organization's entire API inaccessible -- causing severe disruption to the business and UX. An API gateway may need to be architected or implemented for HA operation and scalability.

- Security vulnerabilities. The API gateway is a central and trusted service within the organization's network. Implementing appropriate security within the API gateway is critical because any compromise can potentially impact every service or API call/response. To limit that exposure, organizations often deploy separate API gateways for internal and external APIs.

- Complexity. Although API gateways are not extremely complex services, the policies and rules implemented for the gateway can be complex, carry challenging dependencies and vary with each API version. Consequently, the API gateway and policy management should be an integral part of the API lifecycle management process. Good API design standards and schema can help to ease API gateway management complexity.

Stephen J. Bigelow, senior technology editor at TechTarget, has more than 30 years of technical writing experience in the PC and technology industry.