What are the benefits of an MLOps framework?

While maintaining machine learning models throughout their lifecycles can be challenging, implementing an MLOps framework can enhance collaboration, efficiency and model quality.

The current age of machine learning presents new challenges in model development, training, deployment and management. To address these difficulties, organizations need a systematic way to maintain ML models throughout their lifecycles.

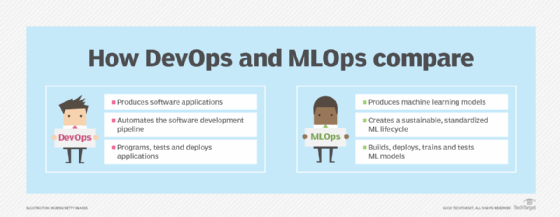

Software development has long embraced iterative and Agile workflows such as DevOps. By blending software development and IT operations, DevOps can eliminate traditional organizational silos, strengthen collaboration and improve software quality.

Business and technology leaders are now adapting DevOps principles to the ML lifecycle, adopting cultures and practices that integrate model development with deployment and infrastructure management. This has led to the emergence of machine learning operations (MLOps). When implemented correctly, an MLOps framework can yield many benefits for organizations' ML and AI initiatives.

What is MLOps?

MLOps is a set of practices intended to streamline the development, testing and deployment of machine learning models.

Machine learning projects share many similarities with conventional software development. Applications that rely on ML models often involve extensive code that teams must develop, test, deploy and manage. This makes DevOps principles and Agile development paradigms a natural starting point for ML models.

However, there are important differences between ML models and traditional software applications. Traditional apps focus on application behavior, such as user interactions, whereas ML models are built explicitly to process vast quantities of quality data to generate outputs.

In addition, ML models are often integrated with data science, data visualization and data analytics platforms. Unlike traditional apps, ML models also require training, validation and periodic retraining. These differences demand careful adaptations of DevOps practices for MLOps.

Much of the MLOps pipeline is based on the recurring cycle of coding, testing and deployment found in DevOps. The principal differences between MLOps and DevOps include two additional tasks at the beginning of the MLOps loop:

- Model creation. Data scientists and ML engineers design the relationships and algorithms that define an ML model.

- Data collection and preparation. Data, which is necessary for training and operating the model, can be obtained from various sources and must be cleaned and curated prior to use. Different data sets are used for training and production.

Another key difference between DevOps and MLOps is time. Although both fields use an iterative and cyclical approach, DevOps is typically decoupled from time; a successful DevOps release doesn't depend on subsequent releases.

MLOps, in contrast, employs short, consistent cycles that incorporate frequent model updates and retraining. This approach helps prevent troublesome ML model drift, where changes in data patterns over time reduce the model's predictive accuracy.

Risks like model drift underscore the importance of monitoring in MLOps. In DevOps, monitoring usually focuses on measuring app performance. MLOps teams track performance, too, but they also watch for risks such as model drift to ensure output accuracy.
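One common way to watch for drift is to compare the distribution of a feature in recent production data against the data the model was trained on. The sketch below uses the population stability index (PSI) for that comparison. The function name, the bin count and the 0.2 alert threshold are illustrative assumptions, not part of any specific MLOps tool.

```python
import math
from typing import Sequence


def population_stability_index(
    reference: Sequence[float], current: Sequence[float], bins: int = 10
) -> float:
    """Compare two samples of one feature; larger values mean a bigger shift."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def bucket_shares(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the range
            counts[idx] += 1
        # A small floor keeps the logarithm defined for empty buckets.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_shares = bucket_shares(reference)
    cur_shares = bucket_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_shares, cur_shares))


training_sample = [i / 100 for i in range(100)]          # values from 0.00 to 0.99
shifted_sample = [0.5 + i / 200 for i in range(100)]     # values from 0.50 to 0.995

psi_same = population_stability_index(training_sample, training_sample)
psi_shifted = population_stability_index(training_sample, shifted_sample)

DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb cutoff; tune per project
drift_detected = psi_shifted > DRIFT_THRESHOLD
```

A check like this would typically run on a schedule against each important input feature, with an alert or retraining job triggered when the threshold is crossed.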

Key MLOps practices

An MLOps framework is governed by a series of practices and principles affecting data, modeling and coding. Common practices include the following.

Version control

While versioning is common in software development and deployment, MLOps emphasizes the use of version control throughout the full iterative loop. For example, version control is applied to data sets, metadata and feature stores in the data preparation stage. Training data is typically versioned, and the model's algorithms and associated codebase are tightly version controlled to ensure that the right data is used for the right model. This control helps with model governance and ensures more predictable and reproducible outcomes -- a central tenet of model explainability.
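As a rough illustration of tying "the right data" to "the right model," the sketch below fingerprints a data set with a content hash and stores it in a manifest next to the code version and training parameters. The function names, manifest fields and example values are hypothetical. Dedicated data versioning tools handle this at scale, but the underlying idea is similar.

```python
import hashlib
import json


def fingerprint(rows: list) -> str:
    """Return a SHA-256 digest that changes whenever any row changes."""
    digest = hashlib.sha256()
    for row in rows:
        # sort_keys makes the serialization independent of key order
        digest.update(json.dumps(row, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


def build_manifest(rows: list, code_version: str, params: dict) -> dict:
    """Record what a training run used so the run can be reproduced later."""
    return {
        "data_sha256": fingerprint(rows),
        "row_count": len(rows),
        "code_version": code_version,
        "params": params,
    }


# Hypothetical training data and settings, for illustration only.
training_rows = [
    {"age": 34, "income": 52000, "label": 1},
    {"age": 51, "income": 87000, "label": 0},
]
manifest = build_manifest(training_rows, code_version="abc1234", params={"max_depth": 4})

# Changing a single value produces a different fingerprint.
edited_rows = [dict(training_rows[0], income=52001), training_rows[1]]
```

Storing the manifest alongside the trained model lets a team confirm later which data and code produced a given model version.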

Automation

Automation is crucial in workflow creation and management throughout the MLOps lifecycle. It is key to data set transformations, such as data normalization and other data processing tasks, as well as to training and parameter selection. Automation also speeds up the deployment of trained and tested models to production with minimal human error.
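A minimal sketch of this idea chains a normalization step and a parameter selection step into one repeatable function, so the same procedure runs identically every time. The toy threshold "model," the function names and the sample data are assumptions made for illustration. A real pipeline would also select parameters on a held-out validation set instead of the training data.

```python
def min_max_normalize(values: list) -> list:
    """Scale values into the range 0 to 1."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid division by zero for constant columns
    return [(v - lo) / span for v in values]


def accuracy(threshold: float, features: list, labels: list) -> float:
    """Share of rows where 'feature >= threshold' matches the label."""
    hits = sum((x >= threshold) == bool(y) for x, y in zip(features, labels))
    return hits / len(labels)


def run_pipeline(raw_features: list, labels: list, candidate_thresholds: list) -> dict:
    """Normalize the data, then pick the best-scoring candidate parameter."""
    features = min_max_normalize(raw_features)
    best = max(candidate_thresholds, key=lambda t: accuracy(t, features, labels))
    return {"threshold": best, "accuracy": accuracy(best, features, labels)}


result = run_pipeline(
    raw_features=[10, 20, 30, 40, 50, 60],
    labels=[0, 0, 0, 1, 1, 1],
    candidate_thresholds=[0.25, 0.5, 0.75],
)
# result == {"threshold": 0.5, "accuracy": 1.0}
```

Because every step lives in code, the whole sequence can be triggered by a scheduler or a CI job with no manual handoffs.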

Testing

Code testing is vital for ML models, and MLOps extends testing to data preparation and model operation. Testing during the data preparation phase checks that data is complete and accurate, meets quality standards, and shows no detectable bias. Testing during the training phase verifies output accuracy and proper integration with other tools or AI platforms. Ongoing testing after deployment lets the business monitor the model for accuracy and drift.
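Data preparation tests can be as simple as a function that scans a data set and reports problems before training starts. The sketch below checks for missing fields and for badly underrepresented label values, which is one rough signal of imbalance. The field names, the 10% minimum share and the function name are hypothetical choices, and real bias testing goes well beyond a label count.

```python
def validate_rows(rows: list, required_fields: list, min_class_share: float = 0.1) -> list:
    """Return a list of human-readable data problems; an empty list means pass."""
    problems = []

    # Completeness: every required field must be present and not None.
    for i, row in enumerate(rows):
        for field in required_fields:
            if row.get(field) is None:
                problems.append(f"row {i}: missing {field}")

    # Balance: flag label values that make up too small a share of the data.
    labels = [row["label"] for row in rows if row.get("label") is not None]
    for value in sorted(set(labels)):
        share = labels.count(value) / len(labels)
        if share < min_class_share:
            problems.append(f"label {value!r}: only {share:.0%} of rows")

    return problems


clean = [{"age": 30, "label": 0}, {"age": 41, "label": 1}]
incomplete = [{"age": 30, "label": 0}, {"age": None, "label": 1}]

clean_report = validate_rows(clean, ["age", "label"])
incomplete_report = validate_rows(incomplete, ["age", "label"])
```

A pipeline could refuse to start training whenever the returned list is not empty.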

Deployment

MLOps workflows and automations focus strongly on deployment processes. Deployment in MLOps often extends to data sets and their availability, ensuring that quality data is available to the ML model and its broader AI platform. Deployment also retains its traditional importance in code or model deployment through technologies such as containers, APIs and cloud deployment targets for rapid scalability.
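To make the API part of deployment concrete, the sketch below shows a framework-free request handler that accepts a JSON body, calls a model and returns a JSON response tagged with the model version. The `predict` stand-in, the `MODEL_VERSION` value and the payload shape are all hypothetical. In practice, a handler like this would sit behind a web framework and run inside a container.

```python
import json

MODEL_VERSION = "example-0.1"  # hypothetical version label


def predict(features: list) -> float:
    """Stand-in for a trained model: returns the mean of the inputs."""
    return sum(features) / len(features)


def handle_request(body: str) -> str:
    """Parse a JSON request, run the model and return a JSON response."""
    try:
        payload = json.loads(body)
        features = [float(x) for x in payload["features"]]
        if not features:
            raise ValueError("features must not be empty")
    except (KeyError, TypeError, ValueError) as exc:
        # Malformed input gets an error response instead of crashing the service.
        return json.dumps({"error": str(exc)})
    return json.dumps(
        {"prediction": predict(features), "model_version": MODEL_VERSION}
    )


ok_response = json.loads(handle_request('{"features": [1, 2, 3]}'))
bad_response = json.loads(handle_request("not json"))
```

Returning the model version with each prediction makes it easier to trace an output back to the model that produced it.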

Monitoring

Monitoring is routine in app deployment, but MLOps emphasizes ongoing infrastructure and ML model monitoring to oversee model performance, output accuracy and model drift. Teams can remediate performance issues with traditional practices like scaling, and resolve output issues through periodic retraining or feature modifications.
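One simple way to monitor output accuracy is to track how often recent predictions matched the outcomes that were later observed, then flag the model for retraining when that rate drops. The sketch below keeps a rolling window for this purpose. The class name, window size and accuracy floor are illustrative assumptions.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over the most recent predictions."""

    def __init__(self, window: int = 100, min_accuracy: float = 0.9):
        self.outcomes = deque(maxlen=window)  # oldest results drop off automatically
        self.min_accuracy = min_accuracy

    def record(self, prediction, actual) -> None:
        self.outcomes.append(prediction == actual)

    def accuracy(self):
        if not self.outcomes:
            return None  # nothing recorded yet
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self) -> bool:
        # Wait for a full window so a few early misses do not raise an alarm.
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.min_accuracy


monitor = AccuracyMonitor(window=4, min_accuracy=0.75)
for prediction, actual in [(1, 1), (0, 0), (1, 1), (0, 0)]:
    monitor.record(prediction, actual)
healthy = monitor.needs_retraining()   # False: all four predictions were correct

for prediction, actual in [(1, 0), (0, 1)]:
    monitor.record(prediction, actual)
degraded = monitor.needs_retraining()  # True: window accuracy fell to 0.5
```

The retraining flag could feed an alerting system or kick off an automated retraining job.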

Benefits of MLOps

MLOps practices are gaining traction as ML and AI platforms proliferate across industries. When implemented properly, an MLOps framework can yield numerous business benefits:

- Faster deployments. MLOps practices, such as end-to-end automation and testing, accelerate the development and deployment of ML products. This provides a competitive advantage by speeding time to market and enabling faster model updates and revisions as data changes.

- Improved collaboration. MLOps requires strong communication and collaboration. It is most effective when silos are eliminated, enabling ML project teams to work seamlessly from data collection through deployment and monitoring.

- Higher-quality models. Successful MLOps environments produce higher-quality ML models with superior algorithmic architectures, more efficient development and deployment phases, and better accuracy and effectiveness over time.

- Enhanced efficiency. As with any well-defined workflow or process, MLOps streamlines and automates many routine and repetitive tasks involved in ML model creation. This lets professionals focus on model development and business innovation.

Risks of MLOps

Despite the benefits, MLOps can pose several challenges that businesses should consider before embarking on an MLOps initiative. Common risks include the following:

- Limited flexibility. Establishing a workflow imposes guardrails and restrictions on ML projects. MLOps often works best for organizations supporting multiple mature projects with frequent iterative cycles where automation and internal practices can be codified. Experimental or infrequent ML projects might benefit less from MLOps.

- Risk of errors. Automation can provide powerful benefits, but automating the ML lifecycle can also easily propagate mistakes or oversights in existing workflows, especially in data management and model training. This creates significant possibilities for errors that can affect ML models.

- Cost and complexity. Implementing a major workflow such as MLOps requires tools, training and time. Teams can face a long learning curve, and the effort can increase the cost and complexity of ML projects.

Stephen J. Bigelow, senior technology editor at TechTarget, has more than 20 years of technical writing experience in the PC and technology industry.