
Is your business ready for the EU AI Act?

The EU AI Act provides businesses with a rubric for AI compliance. Businesses must understand the landmark act to align their practices with upcoming AI regulations.

The European Union's Artificial Intelligence Act was approved by EU lawmakers and takes effect in 2024. This legislation aims to protect citizens' health, safety and fundamental rights against potential harms caused by AI systems.

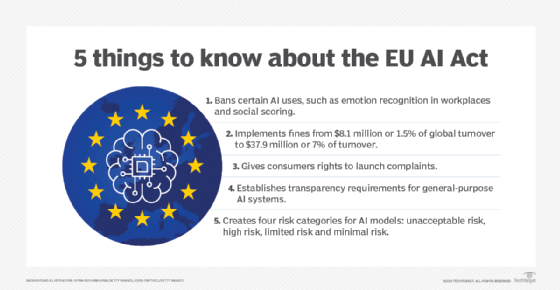

The comprehensive EU AI Act creates a tiered risk categorization system, with obligations that vary by tier and stiff penalties for noncompliance. For businesses looking to use the Act as a framework for improved AI compliance strategies, it's essential to understand its key aspects and implement best practices.

Who does the EU AI Act apply to?

The EU AI Act applies to any AI system within the EU that is on the market, in service or in use. In other words, the Act covers both AI providers (the companies selling AI systems) and AI deployers (the organizations using those systems).

The regulation applies to different types of AI systems, including machine learning, deep learning and generative AI. Exceptions are carved out for AI systems used for military and national security, as well as for open source AI systems -- excluding large generative AI systems or foundation models -- and AI used for scientific research. Importantly, the Act applies not just to new systems but to applications already in use.

Key provisions of the EU AI Act

The AI Act adopts a risk-based approach to AI, categorizing potential risks into four tiers: unacceptable, high, limited and minimal. Compliance requirements are most stringent for unacceptable and high-risk AI systems, which therefore require more attention from businesses and organizations hoping to align with the Act's requirements.

Examples of unacceptable AI systems include social scoring and emotion manipulation AI. Creating any new AI system that falls into this category is prohibited, and existing ones must be removed from the market within six months of the Act taking effect.

Examples of high-risk AI systems include those used in employment, education, essential services, critical infrastructure, law enforcement and the judiciary. These systems must be registered in a public database, and their creators must demonstrate that they don't pose significant risks. This process involves satisfying requirements related to risk management, data governance, documentation, monitoring and human oversight, as well as meeting technical standards for security, accuracy and robustness.
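The requirement areas listed above lend themselves to a simple gap check. The following is a minimal sketch, not an official checklist: the area names are taken from the paragraph above, while the constant `HIGH_RISK_REQUIREMENT_AREAS`, the function `missing_areas` and the sample input are hypothetical names chosen for illustration.

```python
# Requirement areas for high-risk systems, as named in the article.
# This tuple is an illustrative assumption, not an official list from the Act.
HIGH_RISK_REQUIREMENT_AREAS = (
    "risk management",
    "data governance",
    "documentation",
    "monitoring",
    "human oversight",
    "security",
    "accuracy",
    "robustness",
)


def missing_areas(evidenced: set[str]) -> list[str]:
    """Return the requirement areas that have no supporting evidence yet."""
    unknown = evidenced - set(HIGH_RISK_REQUIREMENT_AREAS)
    if unknown:
        # Catch typos early so a misspelled area is not silently ignored.
        raise ValueError(f"Unrecognized requirement areas: {sorted(unknown)}")
    return [area for area in HIGH_RISK_REQUIREMENT_AREAS if area not in evidenced]


# Hypothetical example: a team has evidence for three areas so far.
gaps = missing_areas({"risk management", "documentation", "human oversight"})
print(gaps)
```

A list like this is only a starting point; the detailed obligations behind each area come from the Act and its accompanying guidance.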

These detailed requirements for high-risk AI systems are still being finalized, with the EU expected to make more specific guidance and timelines for compliance available in the coming months. Businesses developing or using high-risk AI systems should stay informed about these developments and prepare for implementation as soon as the final requirements are published.

Like the EU's data privacy regulation, the GDPR, the AI Act imposes strict penalties for noncompliance. For the most serious violations, companies can face fines of up to 35 million euros or 7% of their global annual revenue, whichever is higher. Lower penalty tiers apply to other types of violations under the Act.
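The "whichever is higher" rule is easy to work through numerically. The sketch below is illustrative only: the function name `max_fine_eur` and the sample revenue figures are hypothetical, and the default values (35 million euros and 7%) are assumed to reflect the Act's top penalty tier.

```python
def max_fine_eur(global_annual_revenue_eur: int,
                 fixed_amount_eur: int = 35_000_000,
                 revenue_percent: int = 7) -> float:
    """Upper bound of the fine: the higher of a fixed amount and a revenue share.

    The defaults are assumptions based on the top penalty tier; lower tiers
    would use smaller values for both parameters.
    """
    revenue_based = global_annual_revenue_eur * revenue_percent / 100
    return max(fixed_amount_eur, revenue_based)


# Hypothetical companies with 100 million and 2 billion euros in annual revenue.
smaller_company = max_fine_eur(100_000_000)
larger_company = max_fine_eur(2_000_000_000)
print(smaller_company, larger_company)
```

Under these assumed figures, the fixed amount sets the ceiling for companies with up to 500 million euros in global annual revenue, and the 7% share takes over above that point.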

Enterprise roadmap: 10 steps to compliance

The EU AI Act is the first of many possible upcoming AI regulations. There is still time to achieve compliance with the AI Act, and companies can get a head start by following a series of holistic steps. This approach can help companies avoid penalties, ensure the distribution of their AI products and services, and confidently serve customers in the EU and elsewhere.

1. Strengthen AI governance

As AI laws proliferate, AI governance will shift from a nice-to-have to a must-have for businesses. Use the EU AI Act as a framework to enhance existing governance practices.

2. Create an AI inventory

Catalog all existing and planned AI systems, identifying the organization's role as either an AI provider or an AI deployer. Most end-user organizations are AI deployers, whereas AI vendors are typically AI providers. Assess each system's risk category and outline the corresponding obligations.
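An inventory of this kind can start as a small structured record per system. The following is a minimal sketch under stated assumptions: the class names, field names and the three sample entries are hypothetical, and the risk tiers assigned to the samples are illustrative rather than legal classifications.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    PROVIDER = "provider"
    DEPLOYER = "deployer"


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass(frozen=True)
class AISystem:
    name: str
    role: Role           # the organization's role for this system
    risk_tier: RiskTier  # assessed risk category
    status: str          # "existing" or "planned"


# Hypothetical inventory entries, for illustration only.
inventory = [
    AISystem("resume-screening", Role.DEPLOYER, RiskTier.HIGH, "existing"),
    AISystem("support-chatbot", Role.DEPLOYER, RiskTier.LIMITED, "existing"),
    AISystem("spam-filter", Role.PROVIDER, RiskTier.MINIMAL, "planned"),
]


def systems_needing_attention(systems: list[AISystem]) -> list[AISystem]:
    """Return systems in the two most stringent tiers, sorted by name."""
    urgent = {RiskTier.UNACCEPTABLE, RiskTier.HIGH}
    return sorted((s for s in systems if s.risk_tier in urgent),
                  key=lambda s: s.name)


# Count systems per tier to summarize the inventory.
tier_counts = Counter(s.risk_tier.value for s in inventory)
print([s.name for s in systems_needing_attention(inventory)], dict(tier_counts))
```

Recording the role and risk tier together makes it straightforward to attach the corresponding obligations to each entry later.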

3. Update AI procurement practices

When selecting AI platforms and products, end-user organizations should ask potential vendors about their approach to and roadmap for AI Act compliance. Revise procurement guidelines to include compliance requirements as a necessary criterion in requests for information and requests for proposals.

4. Train cross-functional teams on AI compliance

Conduct awareness and training workshops for both technical and nontechnical teams, including legal, risk, HR and operations. These workshops should cover the obligations the AI Act imposes throughout the AI lifecycle, including data quality, data provenance, bias monitoring, adverse impact and errors, documentation, human oversight, and post-deployment monitoring.

5. Establish internal AI audits

In finance, internal audit teams are responsible for assessing an organization's operations, internal controls, risk management and governance. Similarly, when it comes to AI compliance, create an independent AI audit function to assess the organization's AI lifecycle practices.

6. Adapt risk management frameworks for AI

Extend and adapt existing enterprise risk assessment and management frameworks to encompass AI-specific risks. A holistic AI risk management framework makes it simpler to comply with new regulations as they become applicable, including the EU AI Act as well as the other global, federal, state and local-level regulations on the horizon.

7. Implement data governance

Ensure proper governance for the training data used in general-purpose models, such as large language models or foundation models. The training data must be documented and in compliance with data privacy laws like GDPR and the EU's copyright and data scraping rules.

8. Increase AI transparency

AI and generative AI systems are often described as black-box tools, but regulators prefer transparent systems. To increase transparency and interpretability, explore and develop capabilities in explainable AI techniques and tools.

9. Incorporate AI into compliance workflows

Use AI tools to automate compliance tasks, such as tracking regulatory requirements and obligations applicable to your organization. Possible AI applications in compliance include monitoring internal processes; maintaining an inventory of AI systems; and generating metrics, documents and summaries.

10. Proactively engage with regulators

Some of the details about AI Act compliance and implementation are still being finalized, and changes could occur in the months and years to come. For any unclear or gray areas, contact the European AI Office to clarify expectations and requirements.

Kashyap Kompella is an industry analyst, author, educator and AI advisor to leading companies and startups across the U.S., Europe and the Asia-Pacific region. Currently, he is the CEO of RPA2AI Research, a global technology industry analyst firm.