Getty Images/iStockphoto

How to source AI infrastructure components

Rent, buy or repurpose AI infrastructure? The right choice depends on an organization's planned AI projects, budget, data privacy needs and technical personnel resources.

AI requires infrastructure -- and not just any infrastructure. Training and running AI models often relies on special infrastructure resources, such as servers equipped with GPUs, which enable parallel computation for AI workloads that require massive compute resources.

Unfortunately, AI-friendly infrastructure isn't always easy to source. Due to ongoing GPU shortages, acquiring the right hardware can be challenging, not to mention expensive. And although it's possible to use cloud-based infrastructure for AI workloads, that approach comes with its own set of challenges.

Businesses have several options for building out or accessing the infrastructure necessary for AI workloads. To start, organizations need to determine their key infrastructure requirements for AI, then evaluate the pros and cons of the various approaches to obtaining that infrastructure.

Infrastructure requirements for AI

Exact infrastructure needs vary depending on the specifics of each AI workload. But in general, any organization looking to deploy its own AI model or application needs the following:

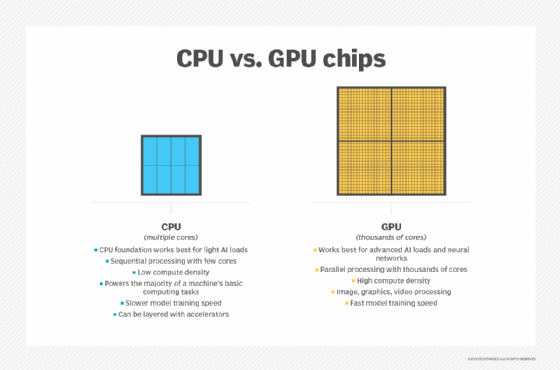

- Compute resources, which are critical for AI model training as well as analytics. It's sometimes possible to use standard CPUs for machine learning workloads, but GPU-enabled hardware is often better for use cases requiring extreme amounts of computing power.

- RAM, which provides short-term storage during model training and data processing.

- Persistent storage resources, which store training data.

4 strategies for sourcing AI infrastructure

How can organizations go about acquiring the infrastructure that AI demands? Compare the following viable approaches.

1. Purchase new hardware

The most straightforward option is to go out and buy new servers optimized for AI workloads. This approach enables businesses to acquire exactly the right hardware for their use cases.

The obvious drawback is that purchasing AI infrastructure outright can be very expensive. A single GPU-enabled server can cost tens of thousands of dollars, and large-scale AI deployments could require dozens or even hundreds of such servers.

Organizations choosing this option also need to set up and maintain the servers in house, increasing the operational burden on their IT teams. So, for organizations without budget to burn or spare personnel resources to devote to managing new AI servers, the "buy" approach to AI infrastructure might not be the right choice.

2. Repurpose existing servers

Instead of buying brand-new servers, it's also possible to repurpose existing servers for AI workloads. This is an especially good option for businesses with spare servers on hand -- for example, a company that has recently migrated workloads to the public cloud and no longer needs all the on-premises servers it owns.

The challenge here is that not every server can support the unique infrastructure needs of AI workloads. Not all servers include interfaces that enable IT staff to install GPUs, for example.

Organizations looking to repurpose existing servers will also need to purchase any additional hardware components -- such as GPUs -- that their AI workloads demand, meaning that this strategy isn't revenue neutral. But overall, it's still more cost-effective than purchasing totally new AI infrastructure.

3. Use GPU as a service

Organizations whose main AI infrastructure requirement is GPUs should consider a GPU-as-a-service offering.

This option gives the business access to GPU-enabled servers hosted in the cloud. It's the same idea as renting virtual servers on a traditional public cloud IaaS platform. But in the case of GPU as a service, the rented servers contain GPUs.

This is a good approach for organizations that only need access to GPUs on a temporary basis -- for example, to train AI models. It also offers the flexibility to pick and choose among different types of GPU-enabled server configurations.

The main challenge of GPU as a service is that it requires migrating AI workloads to the GPU-enabled infrastructure, which involves some effort. Organizations using rented servers to process user data might also run into data governance and privacy issues, as this exposes potentially sensitive information to a third-party platform.

4. Use GPU over IP

Another way to acquire GPU-enabled infrastructure for AI without setting it up in house is to use a GPU-over-IP service.

This approach uses the network to connect AI workloads hosted on one server to GPU resources within a different server, even if the server that hosts the workloads does not have a GPU. Consequently, the organization doesn't have to migrate workloads or expose data directly to third-party infrastructure. Everything remains on servers that the business controls, with the exception of parallel computation, which is enabled by GPUs in remote servers.

GPU over IP is a relatively new idea, and taking advantage of it requires finding GPU-enabled servers with capacity to spare. But it offers some convenience and data privacy advantages over the more traditional GPU-as-a-service approach.

Questions to ask when choosing an AI infrastructure

When navigating the options described above, consider the following questions to choose the right AI infrastructure approach.

- What's your budget? The more money that is available to invest in AI infrastructure, the more feasible it is to acquire and manage servers for AI workloads.

- How long will you need the infrastructure? For one-time model training, it makes more sense to opt for GPU as a service or GPU over IP. But organizations requiring AI infrastructure on an ongoing basis might find it worthwhile to build their own infrastructure.

- How sensitive is your data? Organizations that can't risk exposing data to third parties should build in-house AI infrastructure or explore GPU-over-IP options.

- What are your personnel resources? Businesses planning to build their own AI infrastructure will need staff skilled in AI infrastructure management. Using GPU over IP might also require some specialized skills. By comparison, GPU as a service requires the least management and maintenance effort because engineers can simply spin up AI infrastructure resources in the cloud.