How to build an MLOps pipeline

Machine learning initiatives involve multiple complex workflows and tasks. A standardized pipeline can streamline this process and maximize the benefits of an MLOps approach.

Machine learning operations -- or MLOps -- is a complex process involving numerous discrete workflows, including AI model training, testing, deployment and management. Performing all these tasks efficiently can be challenging, especially for organizations adopting machine learning at scale.

MLOps pipelines help solve this problem. With an MLOps pipeline, organizations can automate and integrate MLOps workflows, making them easier to manage and scale. To set up a pipeline, organizations must first understand how to implement each core process within their MLOps initiative. These processes can then be integrated to form one cohesive pipeline.

What is an MLOps pipeline?

An MLOps pipeline is a set of integrated tools that automate core workflows within the machine learning lifecycle. The core components of an MLOps pipeline include the following:

- Design and planning involves defining the organization's goals in adopting an MLOps framework and determining what data and models are needed.

- Data preparation ensures that data is properly formatted and meets data quality standards for training a machine learning model.

- Model tuning involves adjusting hyperparameters, the settings that control how a model is trained, to optimize performance.

- Model training is the process of exposing an AI model to data and teaching it to identify relevant patterns.

- Model testing evaluates the trained model's behavior and performance before deployment to ensure that it functions as expected.

- Model inference applies the trained and tested model to a real-world environment.

- Model monitoring checks a deployed model over time, identifying problems that arise during inferencing and flagging degraded performance.

Teams can perform each of these processes separately. However, doing so makes it difficult to implement an efficient, scalable MLOps strategy because it requires manual data transfers between processes. Performing steps separately also makes it more difficult for ML and data teams to ensure repeatability and consistency.

An MLOps pipeline integrates and automates these core MLOps processes. This not only saves time and resources, but also improves consistency, as repeated instances of processes are performed the same way each time.

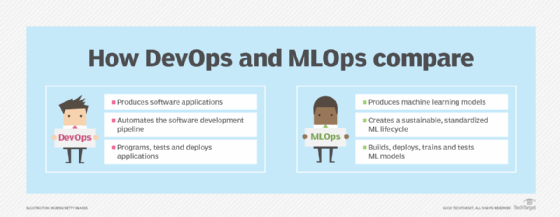

MLOps pipelines are analogous to pipelines in other contexts. For example, DevOps pipelines automate and integrate the processes involved in software delivery, and data pipelines do the same for the steps required to prepare and process data.

Create an MLOps pipeline

To build an MLOps pipeline, first implement tools that automate each of the core MLOps processes described above. Next, integrate those tools so that they connect to form an efficient pipeline.

Here's an overview of how to set up each stage of an MLOps pipeline.

Design and planning

In the design and planning phase, teams experiment with various approaches to completing a specific task or goal. Jupyter notebooks are a popular tool at this stage -- and throughout the MLOps lifecycle -- for interactive coding and testing. Data scientists and ML engineers use Jupyter notebooks to work iteratively through different ideas, documenting tests and sharing findings until the team can decide on an ultimate strategy.

Data preparation

Data preparation involves ensuring that the data used in AI training is as high-quality as possible. At this stage, teams use open source tools such as OpenRefine to clean and transform data. Typical tasks include handling missing values, normalizing numeric values and encoding categorical variables.
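As an illustration of those three tasks, the following is a minimal sketch that uses only the Python standard library. The `age` and `plan` fields, the sample records and the `prepare` function are hypothetical; a real pipeline would more likely rely on a data preparation tool or library.

```python
from statistics import mean

def prepare(rows):
    """Impute missing ages, scale ages to [0, 1] and one-hot encode the plan."""
    # Fill missing numeric values with the mean of the observed values.
    observed = [r["age"] for r in rows if r["age"] is not None]
    fill = mean(observed)
    ages = [r["age"] if r["age"] is not None else fill for r in rows]

    # Min-max normalization to the range [0, 1].
    lo, hi = min(ages), max(ages)
    span = (hi - lo) or 1.0  # avoid dividing by zero when all values are equal
    scaled = [(a - lo) / span for a in ages]

    # One-hot encode the categorical column using a stable category order.
    categories = sorted({r["plan"] for r in rows})
    return [
        [s] + [1.0 if r["plan"] == c else 0.0 for c in categories]
        for s, r in zip(scaled, rows)
    ]

# Hypothetical raw records with one missing value.
raw = [
    {"age": 20, "plan": "basic"},
    {"age": None, "plan": "pro"},
    {"age": 40, "plan": "basic"},
]
features = prepare(raw)
print(features)
```

Each output row holds the scaled age followed by one column per plan category, which is the kind of purely numeric input a training step typically expects.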

Model tuning

Model tuning involves adjusting the parameters that control the model training process. These parameters, known as hyperparameters, are configuration values set before training begins, such as the learning rate, batch size or number of training epochs. They govern how a model learns from training data.

The ability to tune models prior to each training run is important. In some cases, model tuning is as simple as changing configuration values, so specialized tools aren't necessary. In other cases, teams might use hyperparameter optimization frameworks such as Ray Tune or Optuna to automate this process. It's common for ML teams to retrain models with tweaked parameters across multiple iterations, refining the model based on the performance metrics of previous runs.
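The retrain-and-compare loop described above can be sketched as a small grid search. This example does not use Ray Tune or Optuna; it is a hand-rolled stand-in with a toy one-weight model, and the data, the `search_space` values and the `train_and_score` function are all assumptions made for illustration.

```python
from itertools import product

def train_and_score(learning_rate, epochs, train, valid):
    """Fit y = w * x by gradient descent and return the validation mean squared error."""
    w = 0.0
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in train) / len(train)
        w -= learning_rate * grad
    return sum((w * x - y) ** 2 for x, y in valid) / len(valid)

# Hypothetical data following y = 2x, split into training and validation sets.
train = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
valid = [(4.0, 8.0), (5.0, 10.0)]

# Hyperparameter values to try; every combination gets its own training run.
search_space = {"learning_rate": [0.001, 0.01, 0.1], "epochs": [10, 50]}

results = []
for lr, epochs in product(search_space["learning_rate"], search_space["epochs"]):
    loss = train_and_score(lr, epochs, train, valid)
    results.append(({"learning_rate": lr, "epochs": epochs}, loss))

# Keep the configuration with the lowest validation error.
best_params, best_loss = min(results, key=lambda item: item[1])
print(best_params, best_loss)
```

Optimization frameworks automate the same idea but usually choose the next configuration more intelligently than an exhaustive grid does.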

Model training

Model training is the process of feeding data into the model so that it can look for and learn relevant patterns. This is typically done by training the model on a cleaned and curated data set using tools such as TensorFlow and PyTorch, two popular open source frameworks for model training.
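To make the idea of learning patterns from data concrete, here is a minimal training loop written in plain Python rather than TensorFlow or PyTorch. It fits a one-feature logistic regression by gradient descent. The `hours` and `passed` data set and the default hyperparameter values are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(samples, labels, learning_rate=0.5, epochs=1000):
    """Learn a weight and a bias for one-feature logistic regression."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(samples, labels):
            error = sigmoid(w * x + b) - y  # prediction minus label
            grad_w += error * x
            grad_b += error
        # Move the parameters a small step against the average gradient.
        w -= learning_rate * grad_w / n
        b -= learning_rate * grad_b / n
    return w, b

# Hypothetical data set: hours studied and whether the exam was passed.
hours = [0.5, 1.0, 1.5, 3.0, 3.5, 4.0]
passed = [0, 0, 0, 1, 1, 1]

w, b = train_logistic(hours, passed)
predictions = [1 if sigmoid(w * x + b) >= 0.5 else 0 for x in hours]
print(predictions)
```

Frameworks such as TensorFlow and PyTorch handle the gradient computation and parameter updates automatically, but the loop they run follows this same pattern.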

Model testing

Model testing is important for validating that trained models work as expected. This stage involves testing the model using a validation data set separate from that used for training, and identifying potential problems, such as overfitting. TensorFlow and similar tools have built-in functionality to assist with model testing by automatically evaluating model behavior using metrics such as accuracy and precision.
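The sketch below shows how accuracy and precision can be computed by hand, and how a gap between training and validation accuracy can hint at overfitting. The labels, the predictions and the `MAX_GAP` threshold are hypothetical values chosen for illustration.

```python
def accuracy(y_true, y_pred):
    """Share of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def precision(y_true, y_pred):
    """Share of predicted positives that are actually positive."""
    predicted_positive = sum(p == 1 for p in y_pred)
    true_positive = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    return true_positive / predicted_positive if predicted_positive else 0.0

# Hypothetical labels and model predictions for each data set.
train_true = [1, 0, 1, 1, 0, 0, 1, 0]
train_pred = [1, 0, 1, 1, 0, 0, 1, 0]
valid_true = [1, 0, 1, 0, 1, 0, 0, 1]
valid_pred = [1, 1, 1, 0, 0, 0, 1, 1]

train_acc = accuracy(train_true, train_pred)
valid_acc = accuracy(valid_true, valid_pred)
valid_prec = precision(valid_true, valid_pred)

# A model that scores far better on training data than on unseen data
# may have memorized the training set. The threshold is an assumption.
MAX_GAP = 0.1
overfitting_suspected = (train_acc - valid_acc) > MAX_GAP

print(train_acc, valid_acc, valid_prec, overfitting_suspected)
```

In a pipeline, a check like this can act as a gate that stops a poorly generalizing model from moving on to deployment.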

Model inference

Once a model has been trained and tested, MLOps engineers deploy it into a real-world environment where it can perform inferencing: making decisions based on the patterns it identified during training. TensorFlow, among other tools, provides "serving" capabilities that facilitate model deployment into production environments. These capabilities ensure that models can process incoming real-time data to make predictions and decisions after deployment.
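As a rough sketch of what happens inside a serving layer, the example below loads exported model parameters and answers a JSON prediction request. It is not TensorFlow's serving API. The artifact format, the `hours` feature and the function names are assumptions made for illustration.

```python
import json
import math

# Hypothetical model artifact, as a training job might export it.
MODEL_ARTIFACT = '{"weight": 1.8, "bias": -4.0, "threshold": 0.5}'

def load_model(artifact):
    """Parse the exported parameters into a dictionary."""
    return json.loads(artifact)

def predict(model, request_body):
    """Score one JSON request and return a JSON response."""
    features = json.loads(request_body)
    z = model["weight"] * features["hours"] + model["bias"]
    score = 1.0 / (1.0 + math.exp(-z))
    return json.dumps({
        "score": round(score, 3),
        "label": int(score >= model["threshold"]),
    })

model = load_model(MODEL_ARTIFACT)
response = predict(model, '{"hours": 3.0}')
print(response)
```

A production serving tool adds the parts omitted here, such as a network endpoint, request batching and model versioning.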

Model monitoring

Monitoring models on an ongoing basis involves tracking the output they generate and identifying issues like problematic output or drift. Model drift refers to a decline in a model's accuracy over time, caused by shifts in how the model is used or in the types of input it receives.

Model monitoring is a complex task, and the best way to perform it depends on several factors -- for example, how the model is deployed and the types of input and output it handles. A variety of tools can support model monitoring, including general-purpose open source tools such as Prometheus, which collects metrics, and Grafana, which visualizes them.
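One simple way to flag input drift is to compare recent input values against a baseline captured at training time. The sketch below measures how far the mean of live data has moved, expressed in baseline standard deviations. This is a basic mean-shift check rather than a full statistical drift test, and the data and `THRESHOLD` value are hypothetical.

```python
from statistics import mean, stdev

def drift_score(baseline, live):
    """Shift of the live mean from the baseline mean, in baseline standard deviations."""
    return abs(mean(live) - mean(baseline)) / stdev(baseline)

# Hypothetical feature values seen during training and in production.
baseline = [10.0, 11.0, 9.0, 10.5, 9.5, 10.0]
stable = [10.2, 9.8, 10.1, 9.9]
shifted = [13.0, 12.5, 13.5, 12.8]

THRESHOLD = 3.0  # assumed alerting threshold; tune it for each feature

for name, window in [("stable", stable), ("shifted", shifted)]:
    score = drift_score(baseline, window)
    status = "DRIFT" if score > THRESHOLD else "ok"
    print(f"{name}: score={score:.2f} {status}")
```

A score like this could be exported as a metric to a monitoring system, which would then handle alerting and dashboards.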

Unify MLOps tools into a pipeline

Teams can consider several strategies for uniting these tools into an efficient pipeline.

One approach is to integrate tools manually. For example, teams can write custom scripts that move data between different stages of the MLOps pipeline, such as data preparation and model training. Tools like Jupyter notebooks are beneficial here because they can execute code that moves data across these MLOps phases.

However, the manual approach can be inflexible and labor-intensive. Organizations seeking a more automated MLOps pipeline should consider using a platform that provides built-in integrations. For example, Kubeflow -- a common open source tool for setting up an MLOps pipeline -- can deploy a variety of popular MLOps tools as part of a unified pipeline on top of Kubernetes. In addition, cloud vendors offer proprietary cloud-based MLOps pipelines and platforms, such as Amazon SageMaker and Vertex AI Pipelines on Google Cloud.

Chris Tozzi is a freelance writer, research adviser, and professor of IT and society who has previously worked as a journalist and Linux systems administrator.