
Does using DeepSeek create security risks?

The Chinese AI chatbot, despite its efficiency and customizability, raises serious concerns about data privacy, censorship and security vulnerabilities for business users.

Two years after ChatGPT's launch, a Chinese startup introduced a major rival to OpenAI's technology: DeepSeek.

The DeepSeek chatbot quickly gained traction in early 2025. Shortly after its Jan. 10 release, it overtook ChatGPT as the most downloaded free app on Apple's iOS App Store in the U.S. market. A week later, it reached the same milestone on the Google Play Store for Android.

However, DeepSeek's rapid rise in popularity has sparked serious privacy and security concerns, particularly for business users who might input sensitive data into the chatbot. It's essential to understand these risks before using this new generative AI tool.

What is DeepSeek?

DeepSeek, developed by a Chinese AI startup of the same name, is a recent entrant to the crowded generative AI chatbot market. But its open source nature and the ability to access it entirely for free set it apart from competitors like OpenAI's ChatGPT, Google's Gemini and Anthropic's Claude. To get started with DeepSeek's web application, users need only an email address or phone number to register for a free account.

What makes DeepSeek truly noteworthy, however, isn't its affordability but its efficiency. Whereas other leading chatbots were trained on supercomputers with as many as 16,000 GPUs, DeepSeek achieved comparable results with only around 2,000, The New York Times reported. That roughly eightfold reduction in hardware makes training dramatically cheaper.

Functionally, DeepSeek shares similarities with ChatGPT; it's an AI-based chatbot that can answer questions and solve logical or coding problems. DeepSeek offers two main models: DeepSeek-R1, a reasoning model similar to OpenAI's o1 or o3, and DeepSeek-V3, a general conversational model similar to OpenAI's GPT-4o or GPT-4. However, DeepSeek distinguishes itself through its performance in technical and mathematical domains, whereas ChatGPT delivers natural, context-aware responses across a wider range of topics.

DeepSeek web app vs. local hosting

Users can download DeepSeek's models and host them locally (the company publishes its code on GitHub and its model weights on Hugging Face), or they can access the models through DeepSeek's web and mobile applications. When using the web or mobile app, users interact with models running on servers managed by DeepSeek. This means that DeepSeek's data-sharing policies apply, as do any centralized security measures the company has implemented.

In contrast, developers who locally host or fine-tune DeepSeek models deploy them directly onto their own infrastructure. As a result, their data isn't shared with DeepSeek, but responsibility for application security and data privacy disclosures is transferred to the downstream developer.
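For teams that self-host, one simple safeguard is to make client code refuse to send prompts anywhere except the local machine, so sensitive input can't drift to a remote service through a misconfigured URL. The following is a minimal sketch, assuming the model is served locally by Ollama, whose HTTP API listens on port 11434 by default and accepts POST requests at `/api/generate`. The model tag `deepseek-r1` and the helper names `is_local_endpoint` and `ask_local_model` are illustrative assumptions, not DeepSeek tooling.

```python
import ipaddress
import json
import urllib.request
from urllib.parse import urlparse

# Assumption: a self-hosted model served by Ollama on its default port.
LOCAL_ENDPOINT = "http://localhost:11434/api/generate"


def is_local_endpoint(url: str) -> bool:
    """Return True only if the URL's host is the loopback interface."""
    host = urlparse(url).hostname  # already lowercased, IPv6 brackets removed
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Any other hostname could resolve to a remote server, so reject it.
        return False


def ask_local_model(prompt: str, url: str = LOCAL_ENDPOINT,
                    model: str = "deepseek-r1") -> str:
    """Send a prompt to a self-hosted model, refusing non-local endpoints."""
    if not is_local_endpoint(url):
        raise ValueError(f"refusing to send prompt to non-local endpoint: {url}")
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        # With streaming disabled, Ollama returns one JSON object.
        return json.loads(resp.read())["response"]
```

The check fails closed: any hostname other than `localhost` or a loopback IP address is rejected rather than resolved. A team serving the model from a separate internal host would need to widen the allowlist deliberately, which keeps that decision visible in code review.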

Security implications of using DeepSeek

As a China-based organization, DeepSeek operates under strict censorship and data regulations. This raises concerns about user data being potentially shared with the Chinese government. In addition, DeepSeek's heavily censored and monitored environment poses privacy risks, especially for users outside China who might not fully understand the implications of using an AI tool closely tied to government oversight.

Privacy issues

According to DeepSeek's privacy policy, the company collects the following user data when users access the model via the web app UI:

- Account information.

- User input.

- Communication data when a user contacts DeepSeek.

- Device and network details.

- Log information.

- Location data.

- Cookies.

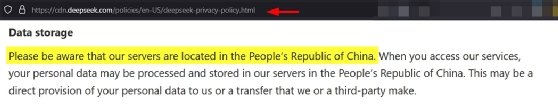

Critically, this information is stored on servers located in the People's Republic of China and can be shared with government entities.

Open source structure

DeepSeek is open source, which provides numerous advantages. For example, developers and startups can locally host and fine-tune prebuilt DeepSeek models inexpensively instead of training their own from scratch -- a process that can otherwise cost millions of dollars.

However, this open source approach also introduces serious risks concerning content generation:

- Harmful content. Because DeepSeek's model weights are freely available, developers can fine-tune them to strip out built-in safety mechanisms designed to prevent dangerous outputs. The resulting variants could generate malicious code or respond to high-risk prompts like "how to make a bomb."

- Disinformation. Because developers can easily modify DeepSeek models and deploy custom variants, malicious actors can use them to generate disinformation and spread it across social media platforms at scale.

Large language models from Western companies are typically designed to refuse requests for malicious or dangerous content. Although users sometimes trick models like ChatGPT and Claude into producing harmful outputs, a practice known as jailbreaking, doing so is often quite difficult because of the extensive guardrails these companies have developed. Moreover, because proprietary models don't expose their weights or code, their safety mechanisms are harder for outsiders to inspect and remove.

DeepSeek, on the other hand, seems comparatively more vulnerable to jailbreaking, even before fine-tuning. This could lead to scenarios where modified DeepSeek variants generate dangerous content, such as ransomware code or tools to automate cyberattack operations, including command-and-control mechanisms and evasion techniques.

Data storage risks

DeepSeek's centralized data storage poses privacy and security risks for international users. Unlike many Western-developed AI tools, which typically distribute data across global networks of servers, DeepSeek stores user data on servers in mainland China.

This data storage location means that interactions with DeepSeek's web application fall under Chinese legal jurisdiction. Consequently, when users from the U.S. or Europe engage with DeepSeek, their data becomes subject to Chinese laws and regulations, potentially exposing sensitive personal or corporate information entered into DeepSeek to oversight by Chinese authorities. Note that data storage concerns don't apply to users who run DeepSeek models locally on their own servers and hardware.

DeepSeek's data storage practices have already raised serious concerns among Western countries. For instance, Italy banned access to the DeepSeek application after the company failed to address concerns raised by the Italian data protection authority about its privacy policy. Similarly, in the U.S., a bipartisan Senate bill aims to prohibit the use of DeepSeek on federal government devices and networks.

Protection against AI hallucinations

According to research by Lasso Security, the current implementation of DeepSeek fails to adequately protect against AI hallucinations -- instances where an LLM fabricates false or misleading information while presenting it as factual. These errors often occur when models encounter ambiguous queries, attempt to respond to prompts without sufficient training data or are pushed to produce answers on topics beyond their knowledge boundaries.

The consequences of hallucinations can be serious, including the following:

- Distribution of misinformation to end users.

- Faulty business decisions based on fabricated data.

- Exposure of intellectual property.

- Regulatory compliance violations when hallucinated outputs conflict with established standards.

Encryption and security flaws

Security researchers at Wiz found a publicly accessible DeepSeek database, built on ClickHouse and requiring no authentication, that allowed unauthorized access and manipulation. The exposure reportedly included more than a million log entries containing conversation histories, secret keys, back-end infrastructure details and other privileged information.

The database exposure wasn't DeepSeek's only security lapse. A separate audit of DeepSeek's iOS app by mobile security firm NowSecure uncovered serious security and privacy vulnerabilities:

- Unencrypted data transmission. The app transmits user data over the internet without proper encryption protocols, making it vulnerable to interception attacks.

- Weak, hardcoded encryption keys. The DeepSeek app uses the deprecated Triple DES encryption algorithm with embedded static encryption keys, violating fundamental security engineering best practices.

- Insecure data storage. Sensitive account information such as usernames, passwords and encryption keys is stored in an insecure manner, increasing the risk of credential theft.

- Excessive data collection. The app harvests superfluous device telemetry beyond functional requirements, enabling user deanonymization through device fingerprinting.

- Data storage location. All user interactions and associated metadata are reportedly stored on China-based infrastructure, where Chinese government entities can potentially access them.
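Device fingerprinting works because attributes that are individually common become nearly unique in combination. The sketch below illustrates the idea with hypothetical field names and values, not data from NowSecure's report: hashing a fixed set of telemetry fields yields an identifier that stays the same even when the account changes, which is what lets a service link separate sessions to one device.

```python
import hashlib

# Hypothetical telemetry fields; none of these names come from the audit.
FINGERPRINT_FIELDS = ("device_model", "os_version", "language",
                      "timezone", "screen", "carrier")


def device_fingerprint(telemetry: dict) -> str:
    """Derive a stable identifier from device telemetry, ignoring account data."""
    # A fixed field order means identical inputs always hash identically.
    canonical = "|".join(f"{k}={telemetry.get(k, '')}" for k in FINGERPRINT_FIELDS)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


# Two sessions from the same (hypothetical) device under different accounts.
session_a = {"account": "alice@example.com", "device_model": "PhoneModel-X",
             "os_version": "18.2", "language": "en-US",
             "timezone": "America/New_York", "screen": "1179x2556",
             "carrier": "ExampleTel"}
session_b = dict(session_a, account="anon123@example.com")

print(device_fingerprint(session_a) == device_fingerprint(session_b))  # True
```

Switching accounts does nothing to break the link, because the account field never enters the hash; only changing the device's own attributes would.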

DeepSeek vs. ChatGPT vs. Gemini security

DeepSeek's security posture differs dramatically from that of its major rivals, including Gemini and ChatGPT, particularly in data privacy and regulatory oversight.

The following are a few critical points of comparison:

- Data jurisdiction. DeepSeek stores all user data in China, where it falls under Chinese legal jurisdiction. In contrast, Gemini and ChatGPT operate under Western privacy regulations such as GDPR and CCPA, which provide stronger user privacy protections than Chinese data laws.

- Guardrail implementation. Gemini and ChatGPT use strict, difficult-to-bypass safeguards to prevent users from generating harmful content. DeepSeek's open source nature enables easier modification of safety measures through fine-tuning.

- Data storage. OpenAI and Google use distributed server architectures with regional data sovereignty options, letting EU users, for example, store their data within the EU. DeepSeek, by contrast, centrally stores all user data in China, with no regional isolation options.

- Transparency. Google and OpenAI publish periodic security white papers and allow third-party audits of their models. The same is not true of DeepSeek.

- Government oversight. DeepSeek operates under China's national security laws, which can require companies to cooperate with state authorities without disclosing that cooperation and without the authorities having to justify their requests. Gemini and ChatGPT operate under legal frameworks that typically require a court order or other legal process before a government can access specific user data.

Nihad A. Hassan is an independent cybersecurity consultant, expert in digital forensics and cyber open source intelligence, blogger, and book author. Hassan has been actively researching various areas of information security for more than 15 years and has developed numerous cybersecurity education courses and technical guides.