AI vs. machine learning vs. deep learning: Key differences

Artificial intelligence, machine learning and deep learning are terms often used interchangeably, but they aren't the same. Understand the differences and how each is used.

Organizations of all sizes use different types of AI to facilitate key functions. Distinguishing among AI technologies and understanding their architectures is essential to choosing AI to fit use cases.

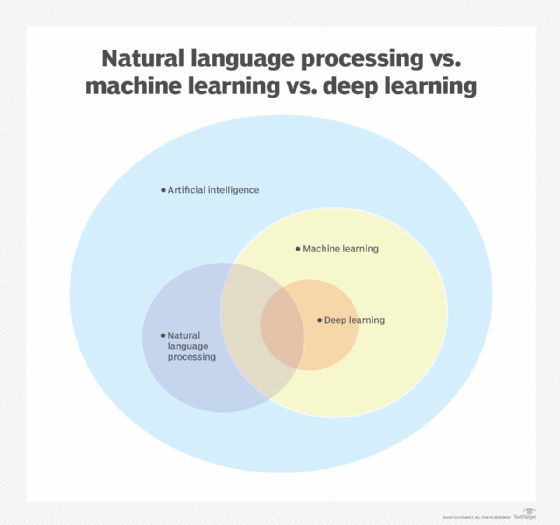

Artificial intelligence is any type of computer system that simulates human intelligence. AI is an umbrella term for all sorts of technologies, from rule-based systems to OpenAI's ChatGPT. Machine learning (ML) is a subset of AI that refers to systems that can learn from data. Deep learning is a type of machine learning that uses deep neural networks to enable systems to learn from extensive, complex data sets and simulate human cognition. Deep neural networks are complex, multilayer architectures.

This guide highlights the significant differences among AI, machine learning and deep learning, examines how each serves business use cases and explores each technology's types and techniques.

AI vs. machine learning vs. deep learning

AI, machine learning and deep learning each come with their own features and abilities to consider.

This table outlines their differences in definition, complexity, data handling, human intervention, speed, accuracy and autonomy.

| Category | Artificial intelligence | Machine learning | Deep learning |
| --- | --- | --- | --- |
| Definition | Encompasses any systems that can mimic human intelligence. | A subset of AI where systems learn from data without explicit programming. | A subset of machine learning that uses deep neural networks to model complex patterns. |
| Complexity | The complexity of an AI system depends on the implementation approach: simple, such as rule-based chatbots, or highly complex, as with self-driving cars. | ML systems are moderately complex, requiring intervention through feature engineering and structured data. For instance, data scientists manually identify and extract relevant features from raw data. | Deep learning has high complexity because of its multilayered neural network architecture. Deep learning systems automatically extract features from massive amounts of raw data through multiple processing layers. |
| Data handling | AI systems work with both structured and unstructured data and can handle various data types. For instance, rule-based AI works well with structured data, such as databases, customer records and numerical data sets. Generative AI applications process unstructured data, such as images, text documents and audio files. | ML systems work with structured and semistructured data -- such as spreadsheets and labeled images -- that was already processed and organized. Data quality is essential. Data scientists invest time in data preparation, cleaning and feature extraction to convert raw information into ML algorithm-friendly formats. | Deep learning can effectively handle unstructured data, such as images, audio and text, with little manual preprocessing. |
| Human intervention | Rule-based AI systems need manual coding, whereas other forms of AI systems learn from data. | Conventional ML typically requires data scientists to label data and select features. | Deep learning requires less human intervention because it automatically learns features from raw data. |
| Speed and accuracy | AI systems show varied speeds based on their design. For example, rule-based AI systems operate quickly since they follow predetermined logic paths without processing or learning from data. Learning-based AI systems start slower and become more efficient as they accumulate experience. | ML has a faster training curve than deep learning because the training data is labeled and organized. However, the tradeoff is that ML systems are less accurate with complex and unstructured data. | Deep learning has a slower training curve than general ML and needs enormous computational resources because of its complex neural network architecture. However, when trained on a massive data set, deep learning can reach high accuracy. |
| Autonomy | Autonomy levels depend on AI architecture. Rule-based AI systems have minimal autonomy because they operate within controlled parameters and are designed to execute predetermined responses. In contrast, advanced AI systems have high autonomy because they learn from interactions and adapt based on new experiences. | ML systems have moderate autonomy because they require human guidance during the learning process -- for example, needing labeled examples. | Deep learning is highly autonomous because it can learn from unstructured data with little human guidance. |

When to use AI, machine learning or deep learning

Choosing among AI, machine learning and deep learning depends on specific needs, data types and problem complexities. Below is a guide to help organizations decide which approach works best based on each technology's optimal use cases and limitations.

AI use cases and limitations

Rule-based AI is best used when tasks require decision-making without heavy data dependency. For example, a basic AI-powered chatbot -- such as a customer service chatbot -- can answer multiple-choice questions with preset answers.
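As a rough illustration of the preset-answer chatbot described above, the following Python sketch maps menu choices to fixed replies. The menu options, wording and the `reply` function are hypothetical and not taken from any real product; the point is only that nothing here is learned from data.

```python
# Hypothetical menu of preset answers; keys and wording are illustrative only.
PRESET_ANSWERS = {
    "1": "Our support line is open 9 a.m. to 5 p.m., Monday through Friday.",
    "2": "You can reset your password from the account settings page.",
    "3": "Orders usually ship within two business days.",
}

FALLBACK = "Sorry, I can only answer options 1, 2 or 3."


def reply(choice: str) -> str:
    """Return the preset answer for a menu choice, or a fixed fallback."""
    return PRESET_ANSWERS.get(choice.strip(), FALLBACK)


if __name__ == "__main__":
    for user_input in ["2", " 3 ", "What's the weather?"]:
        print(f"{user_input!r} -> {reply(user_input)}")
```

Any input outside the preset menu falls through to the same fallback message, which reflects the main limitation of this approach: the system cannot handle anything its authors did not anticipate.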

Rule-based AI also suits industries such as automotive manufacturing. Car factories commonly use robotic arms that execute preprogrammed movements for repetitive tasks. These machines are controlled using rule-based technology.

Alongside rule-based AI, other forms of AI, such as generative AI and LLMs, cover many complex uses:

- Chatbots. Simple rule-based AI chatbots follow scripted instructions, while advanced AI chatbots can learn from conversations and produce multimodal output.

- Game AI. AI systems can use predefined strategies to simulate gameplay. More advanced gaming systems use machine learning technologies to make moves and learn over time.

- Facial recognition. This technology analyzes facial features to verify human identities. It is widely used in modern smartphones, such as in Apple Face ID and Android Face Unlock.

- Real-time translation. AI-powered translation tools can instantly translate speech or text. For example, two people can use such a tool to translate between Chinese and English during a conversation.

- Predictive analytics. Online shopping platforms, such as Amazon, suggest products based on a customer's browsing history, previous purchases and preferences.

AI technologies come with limitations, which vary depending on the subcategory of AI. For one, LLMs famously hallucinate, meaning they produce incorrect or misleading output.

Due to their predefined decisions, rule-based AI systems are less likely to hallucinate. However, rule-based systems are limited to the tasks they are programmed to complete and cannot adapt to changing environments or learn over time.

Lastly, while designed to mimic human intelligence, AI systems still struggle with nuanced decision-making, abstract thought and understanding context.

Machine learning use cases and limitations

ML systems suit situations that require prediction from structured or semistructured data and tasks that require automated decision-making based on data patterns.

Use cases for ML systems include the following:

- Fraud detection. In banks and online payment systems, such as PayPal, ML systems can analyze user behavior patterns, transaction history and account patterns to flag suspicious activities in real time.

- Sales forecasting. In retail and e-commerce, ML systems can help predict sales based on past sales trends and seasonal patterns to optimize inventory accordingly.

- Spam filtering. When developing a spam filter tool, engineers can use emails to train an ML algorithm to learn common spam patterns and remove them automatically.
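To make the spam filtering use case more concrete, here is a minimal sketch of one common approach, a naive Bayes classifier with add-one smoothing, written with only the Python standard library. The six training emails and the function names `train` and `classify` are made up for illustration; production filters train on far larger data sets and use more sophisticated features.

```python
import math
from collections import Counter

# Tiny made-up training set; real filters train on many thousands of emails.
TRAINING_EMAILS = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("claim your free prize", "spam"),
    ("meeting agenda for monday", "ham"),
    ("please review the project report", "ham"),
    ("lunch on monday", "ham"),
]


def train(emails):
    """Count how often each word appears per label, and how many emails each label has."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in emails:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, label_counts


def classify(text, word_counts, label_counts):
    """Pick the label with the higher log-probability (naive Bayes, add-one smoothing)."""
    vocabulary = set(word_counts["spam"]) | set(word_counts["ham"])
    total_emails = sum(label_counts.values())
    scores = {}
    for label in ("spam", "ham"):
        total_words = sum(word_counts[label].values())
        score = math.log(label_counts[label] / total_emails)
        for word in text.lower().split():
            count = word_counts[label][word]
            score += math.log((count + 1) / (total_words + len(vocabulary)))
        scores[label] = score
    return max(scores, key=scores.get)


word_counts, label_counts = train(TRAINING_EMAILS)
print(classify("free prize now", word_counts, label_counts))
print(classify("project meeting monday", word_counts, label_counts))
```

The filter's behavior comes entirely from the labeled examples: changing the training emails changes what it treats as spam, with no change to the code.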

ML systems also come with several limitations to consider. For one, because data quality is a primary factor affecting ML performance, machine learning requires extensive human effort to label data, which consumes time and resources. ML systems are also not as effective with unstructured data, such as images, audio and free-form text, and they struggle when handling tasks not included adequately in training data sets.

Deep learning use cases and limitations

Deep learning can handle unstructured data, such as images, speech, video and natural language text. It also proves beneficial for tasks requiring high accuracy with minimal human intervention. Deep learning is an option for complex tasks that conventional ML models fail to solve.

Deep learning has many use cases, including the following:

- Self-driving cars. Deep learning enables AI models to process real-time video feeds and receive data from various sensors -- such as cameras and radar -- along with surrounding environmental information to help self-driving cars navigate roads.

- Medical diagnosis. Deep learning can help detect abnormalities in MRI scans, CT scans and X-rays, in some cases with accuracy that matches or exceeds that of human specialists.

- Voice assistants. Deep learning can help AI voice tools, such as Apple's Siri and Amazon's Alexa, understand natural human speech patterns and accents to provide accurate responses and execute commands, such as controlling smart home devices.

- Image recognition. Deep learning enables tools such as Google Photos to sort faces without manual input.

While deep learning is a powerful AI technology, it comes with limitations to consider. For one, it requires massive data sets and computing power, including GPUs or TPUs, to train models. Deep learning models also often act as black boxes, making their results harder to interpret and explain than those of conventional machine learning or rule-based AI.

Lastly, deep learning carries high development and operational costs because of its computational requirements and the specialized expertise it demands.

AI types and techniques

AI systems mimic human intelligence and cognitive abilities, such as problem-solving, learning and decision-making. AI uses prediction capabilities -- such as forecasting outcomes based on data -- and automation -- performing tasks automatically without human intervention -- to handle complex tasks.

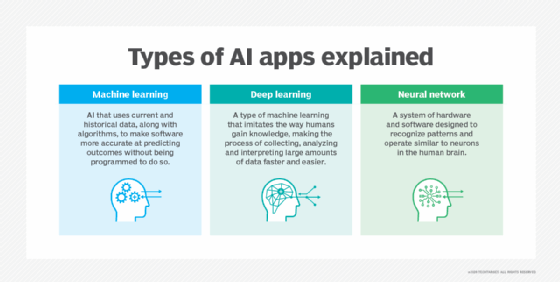

Different types of AI rely on varied AI techniques and processes, such as machine learning algorithms, deep learning models and natural language processing, which teaches computers how to understand human language.

Today's AI systems are built for specific tasks and cannot generalize or perform tasks outside the scope of their training. All current AI systems fall into this category, sometimes referred to as weak or narrow AI. In contrast, general or strong AI is a theoretical concept describing a future of artificial general intelligence (AGI), in which AI systems could perform any task a human could.

There are four main types of AI. The two prevalent types today are reactive AI and limited memory AI. Reactive AI systems are task-specific and rely on programming, not memory, to take actions. Examples of reactive AI are rule-based systems, such as simple chess programs and recommendation engines. In contrast, AI systems with limited memory -- such as generative AI and virtual assistants -- can learn from past experiences and continuously improve.

The other two types of AI are forward-looking possibilities: theory of mind AI and self-aware AI. Theory of mind AI refers to systems that understand human emotions, intentions and behaviors. Self-aware AI refers to conscious AI that can operate in real-world environments like a human would. Self-aware AI, like AGI, would come with many ethical and safety concerns.

Machine learning types and techniques

Machine learning is a subset of AI that enhances AI tools and systems with self-learning technology. For instance, machine learning enables computers to learn from a vast amount of data without programming every rule. Instead of writing a program saying, "If X happened, perform Y," ML engineers can supply the ML algorithm with many examples to learn from so it can make decisions on its own.
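The contrast between a hand-written "If X happened, perform Y" rule and a rule learned from examples can be sketched in a few lines of Python. The transaction amounts, labels, the 1,000 cutoff and the function names below are all hypothetical; the learning step is a deliberately simple one-feature threshold search, not a production algorithm.

```python
# Hand-written rule: a person picks the cutoff ("If X happened, perform Y").
def rule_based_flag(amount: float) -> bool:
    return amount > 1000  # hypothetical cutoff chosen by a programmer


# Learned rule: the cutoff is chosen from labeled examples instead.
# The (amount, is_suspicious) pairs are made up for illustration.
EXAMPLES = [
    (20, False), (75, False), (130, False), (310, False),
    (420, True), (560, True), (900, True), (1500, True),
]


def learn_threshold(examples):
    """Return the cutoff that misclassifies the fewest training examples."""
    amounts = sorted(amount for amount, _ in examples)
    # Candidate cutoffs: midpoints between neighboring amounts.
    candidates = [(a + b) / 2 for a, b in zip(amounts, amounts[1:])]

    def errors(cutoff):
        return sum((amount > cutoff) != label for amount, label in examples)

    return min(candidates, key=errors)


threshold = learn_threshold(EXAMPLES)
print("learned cutoff:", threshold)
print("hand-written rule flags 420:", rule_based_flag(420))
print("learned rule flags 420:", 420 > threshold)
```

With these examples, the hand-written rule misses the suspicious 420 transaction because its cutoff was guessed, while the learned cutoff is placed where the data separates the two groups.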

ML techniques span many processes and methods, such as data collection, labeling and processing; model optimization and tuning; managing compute resources; model training and retraining; and deep learning and neural networks.

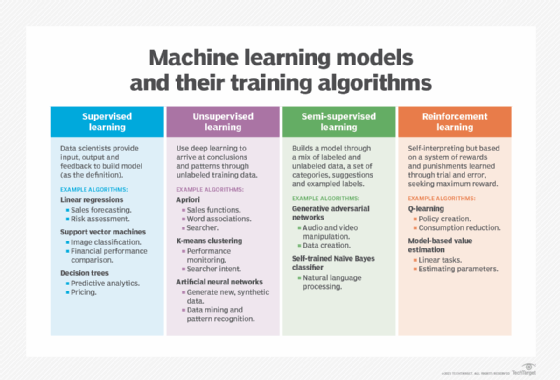

There are also four types of machine learning: supervised, unsupervised, semisupervised and reinforcement. In supervised learning, ML engineers label training data for models, whereas in unsupervised learning, models identify patterns in unlabeled data. Semisupervised learning involves both labeled and unlabeled data, and reinforcement learning models rely on performance feedback to learn over time.
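The difference between supervised and unsupervised learning can be shown on the same small data set. In this sketch, a nearest-centroid classifier uses human-provided labels, while a two-cluster k-means routine groups the same points with no labels at all. The measurements, label names and function names are assumptions made for illustration.

```python
# Made-up 1-D measurements (for example, item weights in kilograms).
POINTS = [1.0, 1.2, 0.8, 5.0, 5.3, 4.9]
# Labels are used only by the supervised model.
LABELS = ["small", "small", "small", "large", "large", "large"]


def mean(values):
    return sum(values) / len(values)


def fit_supervised(points, labels):
    """Nearest-centroid classifier: average the points that share each human-provided label."""
    return {
        label: mean([p for p, lab in zip(points, labels) if lab == label])
        for label in set(labels)
    }


def predict(centroids, point):
    """Assign the label whose centroid is closest to the point."""
    return min(centroids, key=lambda label: abs(point - centroids[label]))


def cluster_unsupervised(points, iterations=10):
    """Two-cluster k-means: group points without using any labels."""
    centers = [min(points), max(points)]  # simple deterministic starting guess
    groups = [[], []]
    for _ in range(iterations):
        groups = [[], []]
        for p in points:
            nearest = 0 if abs(p - centers[0]) <= abs(p - centers[1]) else 1
            groups[nearest].append(p)
        # Move each center to the mean of its group; keep it if the group is empty.
        centers = [mean(g) if g else c for g, c in zip(groups, centers)]
    return groups


centroids = fit_supervised(POINTS, LABELS)
print(predict(centroids, 4.0))
print(cluster_unsupervised(POINTS))
```

The supervised model can name its output ("small" or "large") because people supplied those names. The unsupervised routine finds the same two groups but has no names for them, which is why its results usually need human interpretation afterward.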

Deep learning types and techniques

Deep learning is a subset of machine learning that uses neural networks to simulate how decisions are made by human brains. Unlike other forms of machine learning, deep learning algorithms can learn directly from unstructured data -- such as images, audio and text -- which makes up most global data.

Deep learning techniques differ from machine learning in three main ways:

- Neural network depth. When conventional ML systems use neural networks at all, they are commonly shallow, comprising one to two hidden layers: the layers in between input and output that perform computations and feature extraction. In contrast, different types of deep learning models, such as convolutional or recurrent neural networks, generative adversarial networks and transformer models, use networks of three or more hidden layers -- sometimes hundreds -- to perform complex pattern recognition.

- Data dependency. ML systems rely on labeled data: human-categorized inputs, such as photos tagged "moon" when they contain the moon. In contrast, deep learning systems can use unsupervised and semisupervised learning by extracting features from raw, unlabeled data -- such as grouping similar untagged images.

- Human intervention. Machine learning requires feature engineering, as people must define what's essential in data sets. In contrast, deep learning automatically learns hierarchies of features, such as edges > shapes > objects in images.
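To show what a hidden layer does, the sketch below runs a forward pass through a tiny network with one hidden layer that computes XOR, a function no single weighted sum of the inputs can express. The weights are hand-picked for illustration, which is an assumption of this example; real networks learn their weights during training. By the definition above this network is shallow, and deep learning models stack many more of the same layer-plus-activation steps.

```python
def relu(values):
    """Activation function: keep positive values, replace negatives with zero."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum of the inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


# Hand-picked weights (an assumption for illustration; real networks learn them).
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]


def forward(x):
    """Input -> hidden layer (intermediate features) -> output."""
    hidden = relu(dense(x, HIDDEN_W, HIDDEN_B))
    return dense(hidden, OUTPUT_W, OUTPUT_B)[0]


for pair in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(pair, "->", forward(pair))
```

The two hidden units act as intermediate features ("at least one input is on" and "both inputs are on"), and the output layer combines them. This is a small-scale version of the feature hierarchy described above, where later layers build on what earlier layers extract.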

Nihad A. Hassan is an independent cybersecurity consultant, expert in digital forensics and cyber open source intelligence, blogger, and book author. Hassan has been actively researching various areas of information security for more than 15 years and has developed numerous cybersecurity education courses and technical guides.