10 AI and machine learning trends to watch in 2026

AI is reshaping virtually every aspect of business operations. Stay ahead of the curve by exploring these 10 emerging AI trends for 2026.

In just a few years, AI has transitioned from experimental development to full production use cases that encompass everything from retail sales and marketing to healthcare, manufacturing, finance, security, logistics and transportation. Even personal smart devices and applications now incorporate some level of AI functionality.

Businesses around the world are making large commitments to AI. A report from Grand View Research estimated the global AI market at U.S. $279 billion in 2024 and projected that it will reach nearly $3.5 trillion by 2033. Tech giants are pouring money into R&D, driving adoption across vertical industries.

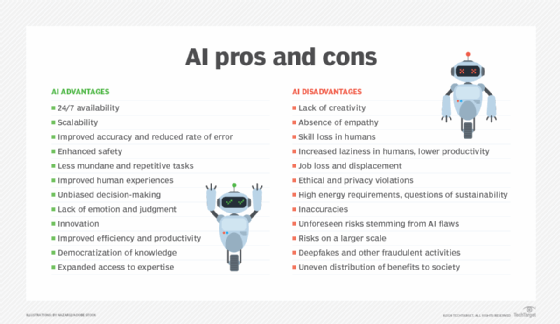

But AI's evolution is fraught with growing pains. The enormous potential for productivity gains is tempered by concerns over security, accuracy, performance, regulatory compliance and human pushback. The urgency to adopt AI can overwhelm an organization's capabilities, potentially putting the business and its customers at risk. Technology leaders, regulators and legislators are struggling to keep pace with the rapidly changing AI landscape.

As AI continues its march forward, 10 AI trends have emerged that are worth watching in 2026.

1. Agentic AI leads the pack

AI agents are autonomous software entities that carry out the work of agentic AI systems, with a focus on automation, reasoning and adaptation. Agentic AI can gather data, plan and act with a high degree of autonomy. Agentic systems process vast amounts of real-world data to make faster and more accurate business decisions, reduce manual errors and continuously optimize operations at a scale humans can't match.
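The gather, plan and act cycle behind agentic AI can be sketched as a simple control loop. The Python below is a minimal illustration under stated assumptions: the `Shipment` class, the hard-coded `BLOCKED_ROUTES` and `ALTERNATES` tables, and the `observe`, `plan` and `act` functions are all hypothetical stand-ins for the live data feeds, planning models and business systems a production agent would use.

```python
from dataclasses import dataclass


@dataclass
class Shipment:
    # Hypothetical record; a real agent would read this from a logistics system.
    shipment_id: str
    route: str


# Hypothetical world state: routes currently blocked by weather or traffic,
# and a fixed fallback for each route.
BLOCKED_ROUTES = {"I-80"}
ALTERNATES = {"I-80": "I-70", "I-70": "I-40"}


def observe(shipments):
    """Gather: find shipments whose current route is blocked."""
    return [s for s in shipments if s.route in BLOCKED_ROUTES]


def plan(affected):
    """Plan: pick an alternate route for each affected shipment, if one exists."""
    return [(s, ALTERNATES[s.route]) for s in affected if s.route in ALTERNATES]


def act(actions):
    """Act: apply each reroute and record what changed."""
    log = []
    for shipment, new_route in actions:
        log.append(f"{shipment.shipment_id}: {shipment.route} -> {new_route}")
        shipment.route = new_route
    return log


def run_agent(shipments, max_steps=5):
    """Repeat gather-plan-act until nothing needs changing or the step budget runs out."""
    history = []
    for _ in range(max_steps):
        actions = plan(observe(shipments))
        if not actions:
            break
        history.extend(act(actions))
    return history


if __name__ == "__main__":
    fleet = [Shipment("S1", "I-80"), Shipment("S2", "I-70")]
    print(run_agent(fleet))  # ['S1: I-80 -> I-70']
```

The `max_steps` budget is one simple guardrail: it bounds how many actions the agent can take on its own before the loop stops.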

The market for autonomous AI and agents will grow about 40% annually from $8.6 billion in 2025 to $263 billion in 2035, according to a report by consultancy Research Nester. In 2026, AI agents will continue to evolve from simple AI assistants to increasingly sophisticated virtual employees capable of creating, optimizing, and operating comprehensive end-to-end business workflows with minimal human direction. A logistics agent, for example, might reroute thousands of supply shipments in response to changing weather or traffic conditions, while a marketing agent could draft a campaign strategy, test variations, launch the best version and adjust marketing budgets in real time.
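Growth projections like this are usually expressed as a compound annual growth rate (CAGR): the constant yearly rate r that takes a starting value V0 to a final value Vn over n years, r = (Vn / V0)^(1/n) - 1. The short Python check below applies that standard formula to the Research Nester figures quoted above; it only verifies the arithmetic and says nothing about whether the forecast itself will hold.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: the constant yearly rate linking start to end."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Research Nester figures cited above: $8.6 billion (2025) to $263 billion (2035).
rate = cagr(8.6, 263.0, 2035 - 2025)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 40.8%
```

An implied rate of roughly 40.8% is consistent with the report's "about 40% annually."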

Agentic AI will continue to improve in performance and accuracy, offer highly tailored agents for specific industry verticals, known as vertical AI agents, and provide increasingly capable integrations that enable agents to access broader assortments of data sources, applications and systems.

2. Standardization comes to AI IQ measurements

Currently, the AI industry lacks a standard for benchmarking and comparing enterprise AI systems. Common benchmarks such as GLUE, SQuAD and RACE exist, but each captures only a narrow cross-section of AI capabilities, and their results can't be compared side by side.

In 2024, researchers at Simon Fraser University developed a comprehensive Machine Intelligence Quotient (MIQ) framework intended to assess the intelligence of autonomous vehicles. In 2026, MIQ will emerge as a standard comparison benchmark for AI systems. MIQ uses a composite scoring system that goes beyond traditional performance measures, combining metrics such as reasoning ability, accuracy, efficiency, explainability, adaptability, speed and ethical compliance into a single, unified score. As MIQ gains adoption, businesses will be able to rate and compare AI systems built in-house against those offered by competing AI providers.
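The published MIQ scoring method isn't reproduced here, so the Python below is only a hypothetical sketch of how a composite score can work in general: each metric named above is assumed to be normalized to the range 0 to 1, and the score is a weighted average. The metric keys, the `composite_score` function and the equal default weights are illustrative assumptions, not part of the MIQ framework.

```python
from typing import Dict, Optional

# Hypothetical metric names based on the categories listed above.
MIQ_METRICS = (
    "reasoning", "accuracy", "efficiency", "explainability",
    "adaptability", "speed", "ethical_compliance",
)


def composite_score(metrics: Dict[str, float],
                    weights: Optional[Dict[str, float]] = None) -> float:
    """Weighted average of normalized (0-1) metric scores.

    Illustrative only; this is not the published MIQ formula.
    """
    if weights is None:
        weights = {name: 1.0 for name in MIQ_METRICS}  # equal weighting by default
    missing = [name for name in weights if name not in metrics]
    if missing:
        raise KeyError(f"missing metrics: {missing}")
    for name in weights:
        if not 0.0 <= metrics[name] <= 1.0:
            raise ValueError(f"{name} must be normalized to [0, 1]")
    total_weight = sum(weights.values())
    return sum(weights[name] * metrics[name] for name in weights) / total_weight


# Made-up scores for a hypothetical system.
system_a = {
    "reasoning": 0.8, "accuracy": 0.9, "efficiency": 0.6, "explainability": 0.5,
    "adaptability": 0.7, "speed": 0.9, "ethical_compliance": 1.0,
}
print(round(composite_score(system_a), 3))  # 0.771
```

Changing the weights, for example emphasizing explainability for a regulated healthcare deployment, can change how systems rank, which is why a shared, published weighting scheme matters for side-by-side comparisons.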

Standardized benchmarks like MIQ will become essential for heavily regulated industries such as healthcare and finance, where accuracy, explainability and other factors are closely tied to regulatory compliance. Standardized benchmarks will also push AI vendors to optimize their applications, since businesses will compare benchmark results to inform AI procurement and deployment decisions.

3. AI inspires proactive governance

AI governance enables businesses to ensure their AI systems are transparent, explainable and free of bias. As AI in business shifts from optional to essential, the need for governance will become increasingly acute, and governance itself will move from reactive compliance that simply meets prevailing regulatory obligations to proactive governance that ensures responsible AI at the operational level.

So far, the push for AI governance and regulation has focused primarily on government and defense, where highly secure AI systems demand tight governance to protect sensitive data. In 2026, governance and regulatory pressure will expand to other highly regulated industries, such as finance, life sciences and healthcare, where privacy, ethics and bias mitigation are vital for compliance.

The need for proper AI governance, combined with the emergence of new AI regulations, is driving significant growth in the AI governance market. Grand View Research estimated that market at $308.3 million in 2025 and predicted it will surpass $1.42 billion by the end of the decade.

Although the U.S. hasn't enacted federal AI governance legislation, some states have passed their own AI laws, such as California's Bot Act and Illinois' HB 3773. The EU has enacted the Artificial Intelligence Act, which categorizes AI systems by risk level and imposes transparency, human oversight, data quality and fairness requirements on high-risk AI applications.

4. Multimodal AI enhances the human interface

Humans must be able to interact effectively with AI systems. Human-machine interaction can take many modes, including text, voice, images, video and sound, but typical single-mode AI systems accept only one type of input. The problem with single-mode input is that it loses context: human communication is often more subtle and complex than written words alone and can involve body language, vocal inflection and facial expressions.

Multimodal AI combines multiple input modes, enabling AI systems to better understand situations and context and to respond more accurately. For instance, rather than typing a text description, a user can share images or video and explain a situation to an AI model verbally. The result can be more natural and efficient AI interactions, a better UX and better outcomes.
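One common way to combine modes is late fusion: each modality is analyzed by its own model, and the predictions are merged afterward. The Python below is a minimal sketch of weighted late fusion, assuming each per-modality model already returns class probabilities; the `late_fusion` function, the labels, the probabilities and the equal weights are made-up illustrative values. Many production multimodal models instead fuse modalities earlier, inside a single network.

```python
from typing import Dict, Optional, Tuple


def late_fusion(modality_probs: Dict[str, Dict[str, float]],
                weights: Optional[Dict[str, float]] = None) -> Tuple[str, Dict[str, float]]:
    """Merge per-modality class probabilities with a weighted average."""
    if weights is None:
        weights = {modality: 1.0 for modality in modality_probs}
    labels = set()
    for probs in modality_probs.values():
        labels.update(probs)
    total = sum(weights[m] for m in modality_probs)
    fused = {
        label: sum(weights[m] * probs.get(label, 0.0)
                   for m, probs in modality_probs.items()) / total
        for label in labels
    }
    best = max(fused, key=fused.get)
    return best, fused


# Hypothetical support request: the typed text is ambiguous, the photo is not.
predictions = {
    "text": {"battery_issue": 0.6, "cracked_screen": 0.4},
    "image": {"battery_issue": 0.1, "cracked_screen": 0.9},
}
label, scores = late_fusion(predictions)
print(label)  # cracked_screen
```

Here the text model alone would pick `battery_issue`; adding the image shifts the fused answer to `cracked_screen`, a small example of the extra context that multimodal input can supply.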

The importance of multimodal AI can't be overlooked in 2026 and beyond. Multimodal AI systems are crucial to the widespread adoption of AI. The multimodal AI market is expected to grow from $1.6 billion in 2024 to $27 billion in 2034, led by machine learning (ML), natural language processing and computer vision, according to Global Market Insights.

5. AI boosts endpoint device capabilities

Centralized AI collects data and sends it across a network to a central location, where it's processed and analyzed. The resulting decisions are then sent back across the network to guide and execute actions. As AI workloads push network bandwidth, processing power and costs to their limits, centralized AI is becoming increasingly unsustainable.

Edge AI isn't a new idea, but it will gain new prominence in 2026. Like other edge computing technologies, edge AI aims to gather, process and analyze data where it's created, providing real-time performance with minimal network reliance and latency. Edge systems also consume far fewer network resources, because only processed data, such as AI outcomes, needs to be sent to centralized locations.
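A back-of-the-envelope comparison shows why sending results instead of raw data saves bandwidth. The Python below compares one camera streaming video to a central site around the clock with an edge device that sends only small event records. The 4 Mbit/s stream rate, the 200-byte event size and the one-event-per-second rate are assumed, illustrative values rather than measurements.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def daily_bytes_from_bitrate(bits_per_second: float) -> float:
    """Bytes sent per day by a continuous stream at the given bit rate."""
    return bits_per_second / 8 * SECONDS_PER_DAY


def daily_bytes_from_events(event_bytes: int, events_per_second: float) -> float:
    """Bytes sent per day when only discrete results are transmitted."""
    return event_bytes * events_per_second * SECONDS_PER_DAY


# Assumed values for illustration only.
centralized = daily_bytes_from_bitrate(4_000_000)  # raw 4 Mbit/s video stream
edge = daily_bytes_from_events(200, 1.0)           # one 200-byte detection record per second

print(f"Centralized: {centralized / 1e9:.1f} GB/day")  # Centralized: 43.2 GB/day
print(f"Edge:        {edge / 1e6:.1f} MB/day")         # Edge:        17.3 MB/day
print(f"Reduction:   {centralized / edge:,.0f}x")      # Reduction:   2,500x
```

The exact ratio depends entirely on the assumptions, but the pattern of far less traffic when only outcomes cross the network is what makes edge AI attractive on constrained or costly links.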

This push for edge AI is bringing low-power AI-enabled chips to endpoint devices, letting them perceive, reason and act locally while preserving data security and user privacy. Examples include the Hailo-10H edge AI accelerator, the Kinara Ara-2 AI processor and the NXP i.MX 95 applications processor family.

Such AI chips are embedded in robotics, autonomous vehicles, healthcare wearables and consumer devices such as smartphones. Many of these devices rely on TinyML, a field of machine learning focused on small, highly optimized ML models with minimal software footprints that run on ultra-low-power chips. Local AI also lowers power use, eases bandwidth demands, especially on costly services such as 5G, and reduces network latency, enabling the fast response times that real-time AI decision-making requires.
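One technique TinyML toolchains commonly use to shrink models is quantization: storing weights as 8-bit integers instead of 32-bit floats, which cuts weight storage by roughly four times. The Python below is a simplified sketch of symmetric per-tensor int8 quantization; the `quantize_int8` and `dequantize` helpers are hypothetical, not the implementation of any particular TinyML framework, and real toolchains add refinements such as per-channel scales and quantized activations.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map float weights to int8 values in [-127, 127] using one shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 values."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)  # [50, -127, 0, 100]
print(f"float32: {len(weights) * 4} bytes, int8: {len(q)} bytes")  # float32: 16 bytes, int8: 4 bytes
```

The int8 version also has to store the single `scale` value, and each restored weight can differ from the original by up to half a scale step, which is the accuracy cost traded for the smaller footprint.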

The edge AI market -- encompassing edge devices, traditional edge gateways and edge servers -- is projected to grow from $24 billion in 2024 to $357 billion by 2035, according to consultancy Roots Analysis.

6. Regulations emphasize geopatriation and sovereign AI

Technology is rapidly becoming a reflection of the volatility and uncertainty of modern global politics. Industries have long faced mounting regulation, such as GDPR and CCPA, tied to data geopatriation and data sovereignty: the requirement that workloads and data stay within local, regional or national borders.

AI systems are increasingly subject to similar geopatriation and sovereign AI requirements. These efforts aim to keep AI systems geographically contained under the oversight of the governing jurisdiction, so that AI data, infrastructure and security align with the national interest. As a result, sovereign clouds are emerging where sensitive data and AI workloads can be deployed. A 2024 Gartner forecast projected that the sovereign cloud IaaS market will reach $169 billion by 2028.

The U.S. doesn't regulate AI geopatriation or sovereign AI, but other jurisdictions are moving to regulate AI more broadly: the EU AI Act and Canada's proposed Artificial Intelligence and Data Act both aim to ensure the safety of AI systems.

7. AI demands greater energy efficiency and sustainability

A principal limiting factor on AI growth and adoption is the availability and cost of energy. AI training, iteration and the processing of user requests are pushing electricity supplies to critical levels, and these demands will only increase as AI systems emerge and evolve to meet the needs of production-level IT.

A 2025 International Energy Agency (IEA) report predicted that electricity demand from data centers worldwide will more than double to approximately 945 terawatt-hours (TWh) by 2030, with AI the largest driver. Electricity demand from AI-optimized data centers alone is projected to more than quadruple over the same period.

Expect a renewed emphasis on power and cooling efficiency in data centers in 2026 and beyond, especially AI-optimized data centers. This renewed attention to green computing must address energy-efficient hardware, energy sustainability and availability, multiple energy sources, power and cooling costs, government regulations, infrastructure resilience and national security. Investor expectations and brand differentiation belong on that list, too.

Tomorrow's IT leaders can't settle for knowledge of technology alone. IT roles will demand a comprehensive mastery of sustainable IT design, IT lifecycle optimization and carbon analytics to ensure that AI-enabled businesses can meet performance, cost and regulatory goals.

8. AI redefines the cybersecurity landscape

IT systems have become far too complex and interconnected to rely on traditional monitoring tools and reactive security methods. AI is rapidly becoming both a primary means of attack and a formidable means of defense.

Attackers increasingly use powerful AI to automate subtle and convincing attacks, including phishing, deepfakes, voice cloning and password cracking. These emerging threats can elude traditional security mechanisms and even fool trained professionals. To counter them, agentic AI tools can, for example, autonomously analyze networks and simulate sophisticated attacks without human intervention, identifying avenues of attack and potential weaknesses before they can be exploited.

AI will play a more prominent role in cybersecurity in 2026 and beyond. AI models can identify anomalies, automate alerts and respond to incidents before threats escalate. AI-driven cybersecurity will extend beyond zero trust and strong authentication, using security agents to provide a proactive defense that can autonomously search for, find and mitigate a wide range of threats. Such AI security platforms will also learn and adapt as threats like phishing, malware and data theft evolve.
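The anomaly detection mentioned above can be illustrated with a deliberately simple statistical baseline rather than a trained model: flag any observation that sits more than a set number of standard deviations from normal behavior. The Python below applies a z-score test to hypothetical counts of failed logins per minute; the `find_anomalies` function, the data and the threshold of 3 are illustrative assumptions, and production AI security tools use far richer models and signals.

```python
import statistics
from typing import List


def find_anomalies(baseline: List[float], observed: List[float],
                   threshold: float = 3.0) -> List[int]:
    """Return indexes of observed values more than `threshold` std devs from the baseline mean."""
    mean = statistics.fmean(baseline)
    stdev = statistics.pstdev(baseline)
    if stdev == 0:
        # Flat baseline: any deviation at all is unusual.
        return [i for i, x in enumerate(observed) if x != mean]
    return [i for i, x in enumerate(observed) if abs(x - mean) / stdev > threshold]


# Hypothetical failed-login counts per minute during normal operation.
normal_minutes = [10, 12, 11, 9, 10, 11, 12, 10, 9, 11]
# New observations; the third minute looks like a password-guessing burst.
latest_minutes = [11, 10, 48, 12]

print(find_anomalies(normal_minutes, latest_minutes))  # [2]
```

An automated alert would fire only for index 2, the 48-failure minute; the ordinary fluctuations stay well under the threshold.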

Another growing security trend for 2026 is confidential computing. This technology uses a hardware-based trusted execution environment (TEE) in the processor to isolate sensitive data while it's being processed. Data remains encrypted in memory and is decrypted only inside the protected environment, so it stays protected during processing as well as at rest and in transit. Cloud providers such as Microsoft, Google and Amazon offer confidential computing services, since trust is a critical requirement for processing sensitive data.

9. AI reshapes the workplace

AI systems have proven they can enhance business workflows, and this trend will accelerate in 2026 and beyond. AI management platforms use predictive analytics and automation to redesign and optimize workflows autonomously. HR systems can monitor employee performance and effectiveness, and an array of other AI systems can enhance scheduling, communication, collaboration, data collection and processing, along with other everyday business tasks.

When implemented and managed properly, AI systems can free employees from mundane and traditionally error-prone tasks, allowing them to focus on more important business needs.

However, AI acceptance in the workplace is currently mixed. While 85% of leaders and 78% of managers responding to consultancy BCG's "AI at Work 2025" survey use generative AI (GenAI) in their regular work, just 51% of frontline employees reported using GenAI in 2025, down slightly from the previous year.

AI confidence is rising and concerns are easing, but further workplace adoption will depend heavily on support from business leadership, tools well suited to the tasks at hand and thorough training. A well-considered reskilling path that smooths AI adoption and redeploys employees displaced by AI can also ease concerns and bolster adoption in 2026.

10. Businesses set to embrace 'invisible AI'

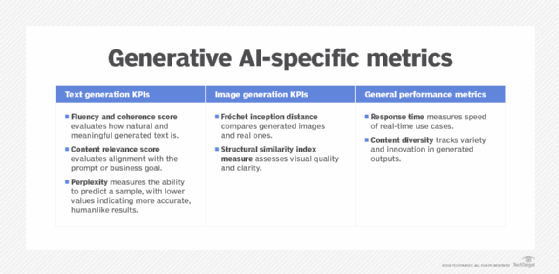

GenAI is empowering businesses of all sizes to create text, music, speech, video and 3D assets with little more than a prompt. GenAI content creation will become even faster and more dynamic, enabling users to create highly personalized content on demand. A McKinsey & Company report suggested that GenAI could reach average human performance on many tasks by the end of this decade. AI-generated content will also increasingly include synthetic data created for software development and testing, network security testing, medical research and other fields.

In 2026 and beyond, increasingly powerful GenAI will become less visible to everyday users as it's seamlessly integrated into a wide range of services and applications, a trend sometimes dubbed "invisible AI." Organizations will also seek new ways to monetize their AI investments through greater productivity, automation and new business models.

Stephen J. Bigelow, senior technology editor at TechTarget, has more than 30 years of technical writing experience in the PC and technology industry.