Equinix data centers offer Nvidia AI infrastructure

Equinix data centers will offer Nvidia DGX as a managed service. The platform is also available from public cloud providers AWS, Google Cloud and Microsoft Azure.

Data center provider Equinix has started offering customers the option of running generative AI applications on a fully managed private cloud infrastructure based on Nvidia software and hardware.

The service, launched this week, is the latest example of Equinix outfitting its data centers for customers who want to use their leased facilities to run generative AI models. In December, Equinix started rolling out liquid cooling systems across 100 data centers globally to dissipate the heat generated by the power needed to drive the intense processing of AI applications.

Equinix has partnered with Nvidia to provide managed AI infrastructure. Nvidia's platform is also available from public cloud providers AWS, Google Cloud and Microsoft Azure. However, the high demand for AI services and the scarcity of high-performing GPUs to run them have made the cost of using the cloud higher than many enterprises are willing to pay.

Equinix's public cloud alternative is the Nvidia DGX supercomputing infrastructure for building and running custom generative AI models. The service includes Nvidia DGX hardware containing eight H100 Tensor Core GPUs, 2 TB of system memory, networking and storage. The system comes with the Nvidia AI Enterprise software for system management.

Equinix will design, install and operate the Nvidia environment so that customers can generate the output they want from the AI models running on the systems, the company said. Organizations testing the service are in biopharma, financial services, software development, automotive and retail.

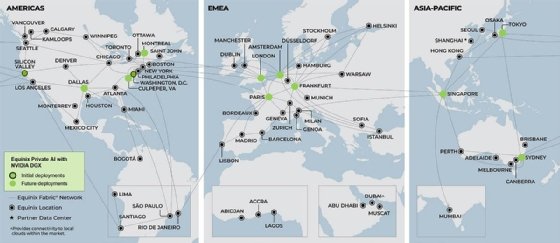

Equinix declined to name the customers or release pricing. It launched the managed service in data centers in Silicon Valley and northern Virginia and plans to roll out the offering to other regions in the United States, Europe and Asia later.

Many enterprises that tested generative AI models on public clouds last year want to use their knowledge to deploy them this year. In most cases, enterprises can accomplish their goals using data sets on models with as few as 7 billion parameters, Gartner analyst Chirag Dekate said. A model of that size is far smaller than OpenAI's general-purpose GPT-3, which has 175 billion parameters, and it requires a tiny fraction of the infrastructure.
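To put the 7 billion and 175 billion parameter figures in perspective, the following is a rough back-of-the-envelope sketch, not a sizing tool. It assumes each parameter is stored as a 16-bit value (2 bytes) and counts only the model weights, ignoring activations, caches and any training overhead. The function name `weights_gb` and the 2-byte assumption are illustrative and do not come from Equinix, Nvidia or Gartner.

```python
# Rough, weights-only memory estimate for a language model.
# Assumption (not from the article): parameters are stored in 16 bits.
BYTES_PER_PARAM = 2


def weights_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Approximate memory needed for model weights, in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


small = weights_gb(7e9)    # 7-billion-parameter model
large = weights_gb(175e9)  # 175-billion-parameter model
ratio = large / small      # how many times larger the big model's weights are

print(f"7B weights:   ~{small:.0f} GB")
print(f"175B weights: ~{large:.0f} GB")
print(f"Ratio:        {ratio:.0f}x")
```

Under these assumptions, the smaller model's weights need roughly one twenty-fifth of the memory of the larger model's, which is one reason a small model can run on far less hardware.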

Enterprises that prefer to deploy small language models in a colocation facility instead of in their data centers could consider the Equinix-Nvidia offering, which is "as close to a turnkey experience as possible," Dekate said.

Nvidia provides its DGX AI infrastructure to other data center providers, including Digital Realty, EdgeConneX and Flexential. However, none of the Nvidia partners in its DGX-Ready Data Center program can match cloud providers' single interface for adding services that sit above model infrastructure, such as security, data governance and indemnification, Dekate said.

Today, enterprises are willing to run models and build higher-level services on-premises because it's more cost-effective than the cloud. However, cloud prices should decline as competition intensifies and AI chip constraints ease, Dekate said. Nvidia competitors AMD and Intel are developing product lines, and cloud providers have started designing their own AI chips.

"As the supply-demand imbalance normalizes, you're likely going to see the center of gravity shift back to the clouds," Dekate said. "Colocation provider ecosystems are bare bones. Cloud providers are a comprehensive [software] stack."

However, the chip scarcity feeding high cloud prices won't end soon, Forrester Research analyst Naveen Chhabra said. "It's going to be beyond 2025."

For enterprises considering Nvidia DGX in an Equinix data center, Dekate recommends determining the cost of building out an Nvidia AI environment plus the price of the managed service and comparing it with a discounted cloud subscription. Cloud providers often agree to less than the list price based on the contract size.

Another consideration is how often the model will process new data to produce up-to-date results. Such a process, called inference, is more expensive in the cloud than in a private data center. However, if such occurrences are infrequent, then the cloud might be less expensive, Dekate said.
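The comparison Dekate describes can be sketched as a simple calculation. The example below is only an illustration under stated assumptions: every class, field and number is hypothetical, the figures are placeholder units rather than real prices (Equinix has not released pricing), and it assumes the private option's inference cost is already covered by the managed-service fee.

```python
from dataclasses import dataclass


@dataclass
class PrivateOption:
    """Hypothetical costs for a privately hosted, managed environment."""
    buildout_cost: float          # one-time cost of building the AI environment
    managed_fee_per_month: float  # recurring managed-service fee


@dataclass
class CloudOption:
    """Hypothetical costs for a discounted cloud subscription."""
    list_price_per_month: float
    discount: float               # negotiated fraction off list price, 0.0 to 1.0
    inference_cost_per_run: float


def private_total(opt: PrivateOption, months: int) -> float:
    # Assumption: inference runs add no per-run cost on the private side.
    return opt.buildout_cost + opt.managed_fee_per_month * months


def cloud_total(opt: CloudOption, months: int, runs_per_month: int) -> float:
    subscription = opt.list_price_per_month * (1 - opt.discount)
    inference = opt.inference_cost_per_run * runs_per_month
    return months * (subscription + inference)


def cheaper(private: PrivateOption, cloud: CloudOption,
            months: int, runs_per_month: int) -> str:
    """Return 'private' or 'cloud', whichever has the lower total cost."""
    p = private_total(private, months)
    c = cloud_total(cloud, months, runs_per_month)
    return "private" if p < c else "cloud"


# Placeholder units only, chosen to show how inference volume can flip the result.
private = PrivateOption(buildout_cost=300, managed_fee_per_month=10)
cloud = CloudOption(list_price_per_month=20, discount=0.25, inference_cost_per_run=0.01)

print(cheaper(private, cloud, months=36, runs_per_month=100))
print(cheaper(private, cloud, months=36, runs_per_month=1000))
```

With these placeholder inputs, the cloud comes out ahead when inference is infrequent and the private option comes out ahead when it is frequent. Real results depend entirely on actual quotes and contract terms.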

Antone Gonsalves is an editor at large for TechTarget Editorial, reporting on industry trends critical to enterprise tech buyers. He has worked in tech journalism for 25 years and is based in San Francisco.