Fossil's experience building an internal AI image generator

AI hallucinations are generally considered a bug of the technology to be worked out as it evolves. But uncanny and imaginative AI-generated output can sometimes be a benefit.

NEW YORK -- Generative AI's tendency to produce inaccurate output, or hallucinate, can risk reputational damage in applications like customer service chatbots. But for fashion brand Fossil, the sometimes-fanciful creations of image generators have turned out to aid designers' creativity rather than act as a bug.

Fossil has found generative AI useful in a range of areas, from market insights to copywriting to automatically adapting content for different advertising formats, said Damian Fernandez-Lamela, Fossil's global vice president of data science and analytics, in the presentation "Making GenAI Responsibly Fashionable" at the AI Summit New York 2023. On the creative side, Fossil has found value in generative AI as inspiration for design ideas.

Many recent conversations around enterprise generative AI have focused on chatbots based on LLMs. But Fossil fine-tuned an open source image generator on the company's own images, creating a custom tool used to generate product inspiration. Keeping the resulting tool internal let designers derive inspiration from AI output while minimizing costs, potential compliance issues and security risks.

Hallucinations as a feature, not a bug

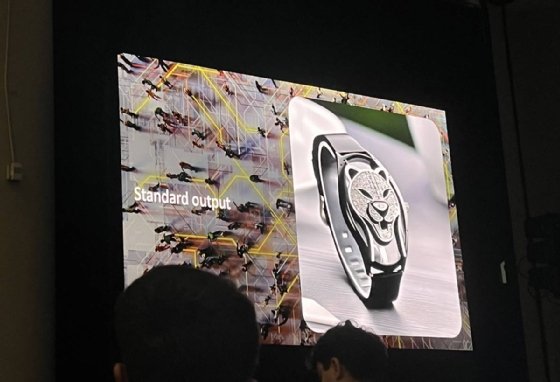

Generative AI makes errors, including some that are obvious to human observers. As an example, Fernandez-Lamela and co-presenter John Dubois, consumer data and analytics leader at professional services firm EY, discussed the output Fossil's image model produced when prompted for a watch design inspired by the movie Black Panther.

The design is visually striking, featuring a wristband embedded with a jeweled, stylized panther face. But upon closer examination, "you'll see there's something missing, right?" Dubois pointed out in the presentation. Namely, despite its stylistic appeal, the design offered no way to tell the time.

This is because generative AI is "really bad" at understanding watch components, despite strong overall performance in generating fashion product images, Fernandez-Lamela explained. For example, problems can arise if a designer prompts an image model to create an image of a watch using common industry jargon.

"There's not a clean way for a standard model to understand if a designer says, 'I need a watch with three subdials,'" Dubois told TechTarget Editorial in an interview, referring to the small dials that appear within the main area of a watch face. "What does that mean, and what typically goes in those subdials?"

Perhaps counterintuitively, one way to address this issue is to enable generative AI to be more creative -- even impractical -- rather than less. Inaccurate output is a common problem in generative AI, with worrying implications for many enterprise use cases. But in creative fields like fashion, hallucinations can actually be a benefit, as they can lead to unusual, striking designs that differ from what a human creator might produce.

Initially, Fossil's designers found the generative AI model's output unimaginative. But they were excited by the results once they were able to turn up the temperature -- a parameter used to control the randomness of an AI model's output. "Not surprisingly, because designers are creative people, they loved that option," Fernandez-Lamela said.
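Temperature is easiest to picture in the sampling step of a language model: the model's raw scores (logits) are divided by the temperature before a softmax, so a higher temperature flattens the probability distribution and gives less likely options a better chance of being picked. The sketch below shows only that generic mechanism, with invented design motifs and scores; it is not Fossil's code. Diffusion image models such as Stable Diffusion usually expose randomness through other settings, such as the random seed or guidance scale, and the source does not say which control Fossil's tool presented to designers as "temperature."

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample(options, logits, temperature, rng):
    """Draw one option according to the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(options, weights=probs, k=1)[0]


# Hypothetical motifs and model scores, invented for illustration only.
motifs = ["classic round dial", "two subdials", "panther-shaped case", "no visible dial"]
logits = [3.0, 2.0, 0.5, -1.0]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"temperature {t}: {[round(p, 3) for p in probs]}")

rng = random.Random(0)
print("high-temperature pick:", sample(motifs, logits, 2.0, rng))
```

At a low temperature nearly all probability lands on the top-scoring motif; raising it spreads probability toward unusual options such as a dial-less design, which is the kind of "hallucination" the designers welcomed.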

Rather than using generative AI to create product designs from start to finish, designers could use it for inspiration, idea generation and initial prototyping. Using generative AI for inspiration in internal design processes was also safer than deploying it in, for example, an ecommerce chatbot, where hallucinations could be reputationally catastrophic.

"In this particular use case, hallucinations were fine," Fernandez-Lamela said. "[They] were a feature that designers actually wanted."

This is one way in which handling generative AI differs from the norm in many other areas of software development, said Matt Barrington, Americas emerging technologies leader at EY. Typically, software development follows a deterministic pattern where developers design, build and test a piece of software. "If it doesn't work, it's a bug, and you go back and you fix it," he said.

But with generative AI models, in some cases, "the bug is actually the feature, like we were seeing when [Fossil designers] were tweaking the temperature," Barrington said. "So you actually have to think about these software patterns in a very different way because they're non-deterministic."

Using model output for inspiration rather than as a final product also had security and compliance benefits, in addition to safeguarding designers' jobs and retaining the element of human creativity. "We're not using the outcome of these models directly," Fernandez-Lamela pointed out, which reduces the risk of copyright infringement.

Building a customized internal model with fine-tuning

Fossil also addressed generative AI's limitations in understanding watch components by training a customized model on the company's own data in a process known as fine-tuning. In addition to improving accuracy, this approach had various privacy and security benefits.

Training its AI tool on industry-specific content helped Fossil ensure that the model's output would be relevant for internal users' needs. Fashion product pages are often particularly well suited as sources of training data, as they showcase reliably clean images organized into categories. "We had tens of thousands of labeled images that we were able to use to essentially get what we needed out of the model," Dubois said.
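Category-organized product images generally have to be paired with text captions before a text-to-image model can be fine-tuned on them, and turning existing labels into captions is one way to teach a model jargon such as "three subdials." The source does not describe Fossil's data pipeline, so the sketch below is a hypothetical illustration with invented catalog records. The `file_name` field follows the `metadata.jsonl` convention read by Hugging Face's ImageFolder dataset loader; the `text` caption column name is an assumption.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical catalog records; file names and attributes are invented.
CATALOG = [
    {"image": "watches/watch_0001.jpg", "category": "watch",
     "attributes": ["chronograph", "three subdials", "leather strap"]},
    {"image": "watches/watch_0002.jpg", "category": "watch",
     "attributes": ["minimalist", "two hands", "mesh bracelet"]},
    {"image": "bags/bag_0001.jpg", "category": "bag",
     "attributes": ["crossbody", "brown leather"]},
]


def caption_for(record):
    """Turn a product's category and attribute labels into a text caption."""
    return f"a {record['category']}, " + ", ".join(record["attributes"])


def write_metadata(records, out_path):
    """Write one JSON object per line pairing an image file with its caption."""
    lines = [json.dumps({"file_name": r["image"], "text": caption_for(r)}) for r in records]
    Path(out_path).write_text("\n".join(lines) + "\n", encoding="utf-8")
    return len(lines)


out = Path(tempfile.mkdtemp()) / "metadata.jsonl"
print(write_metadata(CATALOG, out), "captions written to", out)
print(out.read_text(encoding="utf-8"))
```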

This is one of the benefits of building on top of an open source model such as Stable Diffusion, which Fossil used, Dubois explained. Fine-tuning a foundation model on Fossil's data enabled the organization to create a customized, in-house tool while reducing compute and data requirements compared with building a model from scratch.

"You don't actually need that much training data because you're relying on the features that have been abstracted or that have been calculated by the foundational model," Dubois said. "With 500 -- maybe even less -- images, you can actually get it to understand a feature."
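Dubois's point about relying on features the foundation model has already computed can be illustrated with a deliberately tiny toy. In the sketch below, a fixed function stands in for a frozen pretrained encoder (a hypothetical stand-in, not Stable Diffusion), and only a small logistic-regression head is trained on 40 labeled examples. This technique, usually called linear probing, is simpler than the fine-tuning Fossil performed, but it shows why a small labeled set can be enough when useful features already exist; the same head trained on raw inputs alone cannot learn the task.

```python
import math
import random


def frozen_features(x, y):
    """Hypothetical stand-in for a frozen pretrained encoder: fixed, never trained."""
    return [x, y, x * x + y * y]


def raw_features(x, y):
    """Baseline without pretrained features: only the raw inputs."""
    return [x, y]


def make_data(n, rng):
    """Toy task: label a point 1 if it lies inside the circle x^2 + y^2 < 0.5."""
    points = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(n)]
    return [((x, y), 1 if x * x + y * y < 0.5 else 0) for x, y in points]


def sigmoid(z):
    z = max(-60.0, min(60.0, z))  # clamp to avoid overflow in math.exp
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data, featurize, epochs=3000, lr=0.5):
    """Fit only a logistic-regression head with batch gradient descent."""
    dim = len(featurize(0.0, 0.0))
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for (x, y), label in data:
            f = featurize(x, y)
            err = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) - label
            grad_w = [g + err * fi for g, fi in zip(grad_w, f)]
            grad_b += err
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(data)
    return w, b


def accuracy(data, featurize, w, b):
    hits = 0
    for (x, y), label in data:
        score = sum(wi * fi for wi, fi in zip(w, featurize(x, y))) + b
        hits += (1 if score > 0 else 0) == label
    return hits / len(data)


rng = random.Random(42)
train = make_data(40, rng)   # deliberately small labeled set
test = make_data(500, rng)

for name, featurize in (("frozen features", frozen_features), ("raw inputs", raw_features)):
    w, b = train_head(train, featurize)
    print(f"{name}: test accuracy {accuracy(test, featurize, w, b):.2f}")
```

With the frozen features, the decision boundary becomes a simple threshold on the third feature, which a few dozen examples can pin down; with raw inputs only, no straight line can separate the inner circle from the rest of the square.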

This means that enterprises can use popular LLMs and image generators, with their broader capabilities, as a jumping-off point for creating task-specific, in-house tools. Dubois compared it to higher education, where the material in a graduate course builds on the foundational knowledge acquired from elementary school through an undergraduate program.

"That's essentially what we're doing with these foundational models," he said. "We're giving it a higher-level education -- in this case, enterprise knowledge."

Mitigating generative AI risks and cost challenges

Introducing generative AI into Fossil's design process involved a months-long collaboration with lawyers to address the potential risks, Fernandez-Lamela said. "We didn't want our intellectual property to be exposed to the world, so we didn't want to use a public model," he said.

Instead, the organization opted for a private, localized option: a model trained on its own images and hosted in a private cloud environment on Google Cloud Platform. This had multiple benefits, including reduced vulnerability to cyber threats such as data poisoning and less risk of exposing data to third parties. "If you have a localized, privatized environment, then you don't have to worry about those data leakage issues," Barrington said.

Barrington added that, for many enterprises, fine-tuning and running a model in-house might not be worth the expense. But for Fossil's use case, it turned out to be more cost-effective over time than paying for access to an image-generation API, Dubois said.

"Rather than paying for API access to another vendor, [Fossil] could actually predict costs based on the GPUs needed for both training and for the real-time generation of images," Dubois said. "And we could actually calculate the type of GPU needed depending on the number of concurrent designers that were using the system."
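The capacity planning Dubois describes can be sketched as simple arithmetic: estimate peak demand from the number of concurrent designers, divide by each GPU type's throughput, and compare the resulting hourly bill with per-image API pricing. Every GPU name, throughput figure and price below is an invented placeholder, and the sketch ignores real-world costs such as engineering time, storage and idle capacity.

```python
import math
from dataclasses import dataclass


@dataclass
class GpuOption:
    name: str                 # hypothetical GPU tier label
    seconds_per_image: float  # assumed generation time for one image
    hourly_cost: float        # assumed price per GPU-hour (USD)


def gpus_needed(concurrent_designers, images_per_designer_per_hour, gpu):
    """GPUs required to keep up with peak demand (at least one stays provisioned)."""
    demand_per_hour = concurrent_designers * images_per_designer_per_hour
    capacity_per_gpu = 3600 / gpu.seconds_per_image
    return max(1, math.ceil(demand_per_hour / capacity_per_gpu))


def cheapest_option(options, concurrent_designers, images_per_designer_per_hour):
    """Return (gpu, count, hourly_cost) for the lowest-cost tier that meets demand."""
    best = None
    for gpu in options:
        n = gpus_needed(concurrent_designers, images_per_designer_per_hour, gpu)
        hourly = n * gpu.hourly_cost
        if best is None or hourly < best[2]:
            best = (gpu, n, hourly)
    return best


def monthly_self_hosted(hourly_serving_cost, hours_per_month, amortized_training_cost):
    """Serving cost for the hours GPUs are provisioned plus a share of training cost."""
    return hourly_serving_cost * hours_per_month + amortized_training_cost


def monthly_api(concurrent_designers, images_per_designer_per_hour, hours_per_month, price_per_image):
    """Pay-per-image cost if the same images were generated through a vendor API."""
    return concurrent_designers * images_per_designer_per_hour * hours_per_month * price_per_image


# Placeholder figures, invented for illustration only.
OPTIONS = [
    GpuOption("small-gpu", seconds_per_image=6.0, hourly_cost=1.0),
    GpuOption("large-gpu", seconds_per_image=1.0, hourly_cost=4.0),
]
HOURS_PER_MONTH = 160
IMAGES_PER_DESIGNER_PER_HOUR = 30

for designers in (20, 100):
    gpu, count, hourly = cheapest_option(OPTIONS, designers, IMAGES_PER_DESIGNER_PER_HOUR)
    self_hosted = monthly_self_hosted(hourly, HOURS_PER_MONTH, amortized_training_cost=500.0)
    api = monthly_api(designers, IMAGES_PER_DESIGNER_PER_HOUR, HOURS_PER_MONTH, price_per_image=0.02)
    print(f"{designers} designers: {count} x {gpu.name}, "
          f"self-hosted ${self_hosted:,.0f}/month vs API ${api:,.0f}/month")
```

With these placeholder numbers, the cheaper GPU tier wins for 20 designers, while the faster, pricier tier wins at 100, which mirrors the idea of choosing the GPU type according to the number of concurrent designers.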

Down the line, a streamlined internal model could also be easier to manage from a legal and risk perspective. "If you have hundreds of models serving, doing small things, you have to figure out a way to govern all of those," Dubois said. "How do you mitigate risk when it's a black box?"

Lev Craig covers AI and machine learning as the site editor for TechTarget Enterprise AI.