spainter_vfx - stock.adobe.com

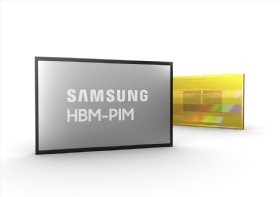

Samsung adds AI processor in memory to high-bandwidth memory

Samsung's new in-memory processing architecture brings compute capability to high-bandwidth memory to address data bottlenecks with AI and HPC workloads.

New processor-in-memory technology from Samsung takes aim at the bottlenecks that slow AI and high-performance computing workloads handling massive volumes of data.

Samsung developed its processor-in-memory (PIM) architecture to bring computing capability to high-bandwidth memory (HBM) chips. HBM packages, such as Samsung's Aquabolt, use stacks of interconnected dynamic RAM (DRAM) chips and a logic die to speed the movement of data in and out of memory, improve overall system performance and save power.

Most computing systems rely on separate processor and memory units and process data sequentially, with data continually moving back and forth between the two. That movement becomes a bottleneck as data volumes escalate with AI and high-performance computing (HPC) workloads. Samsung said its new HBM-PIM architecture integrates a programmable computing unit into each memory bank to enable parallel processing and minimize data movement. The result, the company claimed, is more than twice the system performance and a 70% reduction in energy consumption when the technology is applied to Samsung's latest HBM2 Aquabolt product.
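The data-movement argument can be illustrated with a toy model. The Python sketch below is not Samsung's design, whose details the company has yet to release. It only counts how many values would cross the memory bus when a sum is computed on the processor versus when each bank reduces its own data first. The `Bank` class, the function names, the 16-bank layout and the data sizes are all hypothetical, chosen purely for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Bank:
    """Hypothetical stand-in for one memory bank holding a slice of the data."""
    values: List[int]


def host_side_sum(banks: List[Bank]) -> Tuple[int, int]:
    """Conventional path: every value crosses the bus to the processor."""
    total = 0
    words_moved = 0
    for bank in banks:
        for value in bank.values:
            words_moved += 1  # one value shipped from memory to the processor
            total += value
    return total, words_moved


def in_bank_sum(banks: List[Bank]) -> Tuple[int, int]:
    """PIM-style path: each bank sums locally and ships one partial result."""
    partials = [sum(bank.values) for bank in banks]  # work done "inside" each bank
    return sum(partials), len(partials)  # only the partials cross the bus


# Assumed layout: 16 banks with 1,000 values each.
banks = [Bank(list(range(i * 1000, (i + 1) * 1000))) for i in range(16)]

host_total, host_moved = host_side_sum(banks)
pim_total, pim_moved = in_bank_sum(banks)

print(f"host-side: total={host_total}, values moved={host_moved}")
print(f"in-bank:   total={pim_total}, values moved={pim_moved}")
```

Both paths produce the same total, but the in-bank version moves one value per bank instead of every value in memory. The model ignores latency, energy and the cost of the in-bank logic, so it says nothing about the performance and energy figures Samsung claimed.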

Processor in memory was long-time research subject

"The idea of a PIM is not new. That's been a research topic for 20 some odd years," said Rick Stevens, associate laboratory director for computing, environment and life sciences at Argonne National Laboratory (ANL) in Lemont, Ill. "What Samsung is doing is bringing that idea into the high-bandwidth memory architecture. That's what's new."

ANL plans to test Samsung's HBM-PIM technology when it becomes available. The lab runs some of the largest HPC systems in the world for research projects, ranging from drug design and materials science to climate modeling and cosmology. Over the last five to 10 years, AI has factored into a larger percentage of the ANL workloads, according to Stevens.

Stevens, who is also a computer science professor at the University of Chicago, said executing certain operations and AI inferencing tasks in the HBM-PIM could provide a significant performance boost, because they would operate on the raw bandwidth of the memory rather than on the CPU's or GPU's bandwidth. He estimated that 20% to 30% of use cases could benefit and that, potentially, even whole applications could move into the PIM.

Integrating logic layer into memory

The basic challenge in high-performance computing over the last few decades has been increasing memory bandwidth and reducing memory access latency to keep up with the growth in compute performance, Stevens said. He noted many vendors -- including IBM, AMD and Micron Technology -- have also explored the concept of putting a logic layer into or closer to the memory to offload computational tasks or make memory access smarter.

"The hard part here isn't getting some logic integrated into the memory. That's relatively easy," Stevens said. "The hard part is designing precisely how it should work in a way that it can benefit many applications, and the amount of software that has to be changed is minimized."

The "beauty of HBM," Stevens said, is that users don't have to rewrite applications to gain the benefits. He said PIMs, on the other hand, could require changes unless there's a relatively easy way, such as a good set of libraries, to adapt applications to use the functionality.

Stevens said no vendor has solved the problem in a viable commercial product at a price that doesn't add much cost to the memory, and Samsung has yet to release precise details on how its HBM-PIM will work. He said a key for Samsung would be getting the computational capability into a mainstream memory product in such a way that it's invisible when it's not in use and doesn't change the behavior of the system when it is.

AI stands to benefit from processor in memory

"AI workloads have different access patterns of memory, and they have different low-level functions that become targets for execution in the PIM. That represents a new opportunity that wasn't there three or four years ago," Stevens said. "It may be that this is precisely the right time and the right kind of conditions for it to become commercially viable. Samsung has got a great position in the memory space. They are in an excellent position to push this into the broader marketplace."

Samsung presented a paper on its HBM-PIM last month at the virtual International Solid-State Circuits Conference. The company said the new technology is in testing inside AI accelerators with leading AI partners.

A Samsung spokesperson described the new HBM-PIM as a technology -- "not a product that is mass-produced and sold." He said it would be available through discussions with each potential customer, starting with the design of a proof of concept, followed by tailoring to meet specific data processing needs.

Jim Handy, general director and semiconductor analyst at Objective Analysis, said the HBM-PIM is a "lab prototype" that Samsung is showing as a way to address a problem "by bringing some of the logic that you'd expect to be in a processor, like a GPU, into the DRAM chips instead."

"They're going to go to places that could use a large volume of these things and say, 'What do you think? Should we make a whole bunch of these, or should we not?'" Handy said. "If enough people sign on that Samsung can sell tens or hundreds of millions of these things, then Samsung will turn it into a product. But if they don't find that large of a market, then it's probably not going to be worthwhile for them."

Handy said customers that would be most intrigued by Samsung's HBM-PIM include internet titans using AI to target advertising and autonomous vehicle manufacturers that face bottlenecks in their AI algorithms caused by the GPUs. He said the HBM-PIM could help them lower costs by enabling them to use less powerful processors.

Handy has seen other vendors target the same AI problem that Samsung is trying to address, including IBM, with a new hardware accelerator chip; Gyrfalcon Technology, with a chip that is based on magnetoresistive RAM, or MRAM; and GSI Technology, with an Associative Processing Unit. PIM vendors include Micron spinoff Natural Intelligence Semiconductor, Upmem and Venray Technology, Handy added. He said computational storage devices can also reduce the amount of data that needs to move around by enabling data processing on solid-state drives.