ekkasit919 - stock.adobe.com

Cornell Tech puts computer vision into practice on clothing

A team at Cornell Tech built a clothing recognition dataset, and recently turned to Samasource, which provides AI training data services, to annotate tens of thousands of images.

In the summer of 2019, a team from Cornell Tech finished an annotated fashion image dataset and created a challenge to develop an algorithm with the dataset to automatically annotate similar images.

The "iMaterialist-Fashion" dataset, posted to GitHub, consists of some 50,000 annotated images of clothing, along with a standardized taxonomy of dozens of apparel objects.

Moving towards clothing recognition

Cornell Tech, part of the technology, business, design and law campus of Cornell University, hosted its challenge on Kaggle and made it part of a workshop at the 2019 Conference on Computer Vision and Pattern Recognition in June. Competitors were asked to use that data to create an algorithm to automatically and accurately assign attribute labels and segmentations to fashion images -- a big step to ultimately use AI and computer vision for clothing recognition.

The competition saw over 240 teams submit thousands of entries, including many innovative algorithms. The competition, as these types of challenges do, lasted for only weeks. Cornell Tech's training data, however, represents months and months of work from the Cornell Tech team, as well as from Samasource, the AI training service provider that helped manually annotate the 50 thousand images used in the dataset.

"The aim of our work is to cultivate research connections between the computer vision and fashion communities through the creation of a high-quality dataset and associated open competitions, thereby advancing the state-of-the-art in fine-grained visual recognition for fashion and apparel," explained Menglin Jia, a PhD researcher at Cornell Tech who worked on the iMaterialist-Fashion dataset.

"Recent breakthroughs in the field of computer vision have given rise to increased interest in the visual analysis of fashion components," she continued. "Being able to recognize apparel products and associated attributes from pictures could enhance the shopping experience for consumers, and increase work efficiency for fashion professionals."

For the Cornell Tech team, which consists of students, professors and outside collaborators, including Google AI team lead Mikhail Sirotenko, the project represents a step forward in mapping out the visual aspects of the fashion world, Jia said.

That includes clothing recognition, which exists even at a consumer level – see Amazon's StyleSnap or Google's Lens -- but mostly, doesn't work nearly as accurately as retailers, designers and AI vendors hope it might someday.

Creating the dataset

To create the iMaterialist-Fashion dataset, the team had to develop a fashion taxonomy -- a hierarchical structure of fashion objects, as Jia explained. The taxonomy, according to the iMaterialist-Fashion GitHub page, contained "46 apparel objects (27 main apparel items and 19 apparel parts), and 92 related fine-grained attributes."

The team sent that, as well as detailed tutorials and a data pipeline, to Samasource. Samasource is a hybrid for-profit, nonprofit AI training services vendor that, using its SamaHub annotation and task management platform, employs low-income, non-technical people from around the world to do annotation, data sampling, quality assurance and other no-code or low-code work. According to the vendor, it pays employees local living wages.

"We definitely built a platform around annotation," Loic Juillard, vice president of engineering at Samasource, explained. "It's all about trying things and in small steps moving towards a goal."

Samasource trained their annotators based on the training materials from Cornell Tech and helped the team annotate about 50,000 street and runway fashion images.

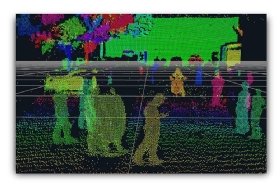

According to the GitHub page, about 10,000 images were annotated with both semantic segmentation and fine-grained attributes, and about 40,000 were annotated with apparel instance segmentation.

Used in image annotation, semantic segmentation labels each separate classes of objects (i.e. a person, a tree, a road) down to the pixel-level. Instance segmentation mostly does the same thing, except it doesn't just label by class; it identifies individual entities within the same class (i.e. Bob, a maple tree, Main Street).

"Each week, Samasource sent us the finished annotations, and we randomly checked a portion of the annotations, and summarized any mistakes we found. Samasource corrected those mistakes and sent the revised annotations to us the following week," Jia said.

The entire annotation process with Samasource took around three months, she said, and the results were helpful.

"Based on our analysis, the annotators from Samasource were able to produce masks that closely follow the boundaries of garments and accessories, therefore, allowing resulting machine learning algorithms to produce precise segmentations as well," she said.

Perhaps in the future, the data will help contribute to more accurate clothing recognition algorithms.