The future scope of chatbots begins with addressing flaws

Chatbots are hot software in the enterprise, but to maintain longevity and relevance, developers need to take a look at the barriers to entry, interface options and NLP issues.

From gauging purchase intent to answering questions about IT issues, chatbots are on track to play a major role in the contemporary enterprise. Chatbots are fully functioning, semi-autonomous systems that can improve customer service experiences and response times.

But that doesn't mean their future in the enterprise is secure. For chatbots to withstand rapid technological shifts and become mainstays in the enterprise, developers need to examine the issues that have surfaced as implementation has increased.

The future scope of chatbots could include many benefits for enterprises, but experts say the technology will need to be nudged in the right direction for businesses to reap these benefits.

Removing barriers to entry

Currently, the chatbot market is growing at a rate of 24% annually and is projected to reach $1.25 billion by 2025, according to Grand View Research Inc. Beyond the next five years, however, the future of chatbots relies on widespread adoption of the technology; enterprise use has to go far beyond the industries where chatbots are already common (technology, finance, healthcare) and become universal. The first step toward universal adoption is removing the current barriers to entry for chatbot usage.

Andy Peart, chief marketing and strategy officer of Artificial Solutions, a multinational software company, sees training data as a major barrier to entry for enterprises seeking chatbots. This is especially true for more advanced chatbots that seek to understand intent and respond in human-mimicking natural language.

"Using machine learning to understand humans takes a staggering amount of data," Peart said.

"What comes naturally to us -- the relationships between words, phrases, sentences, synonyms, lexical entities, concepts, etc. -- must be learned by a machine. For enterprises that don't have a significant amount of relevant and categorized data readily available, this is a costly and time-consuming part of building conversational AI applications," he said.

The answer most analysts and experts turn to is democratizing data and focusing on smaller sets of cleaned training data that augment chatbot platforms and help develop natural language understanding. Increasing the cost-effectiveness of chatbots and removing the data barriers to entry are the next steps in developing the future scope of chatbots.

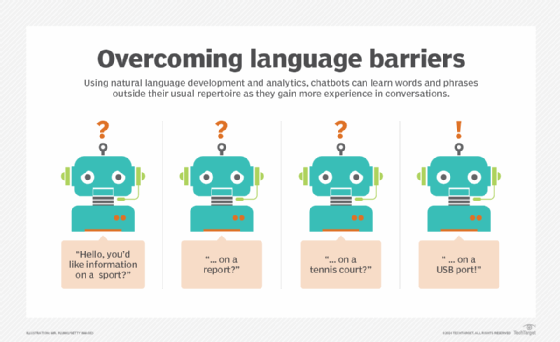

Linguistic and conversational ability must improve

Chatbots are terrible conversationalists. The anticipated benefits of chatbots often fall short due to their notoriously robotic language, inflexibility and difficulty in understanding the intent and nuance of language. Experts say that stifled customer interactions with chatbots are throttling the success of the technology.

"The art and practice of user experience in this area is really in its infancy. It's a complex and careful process that needs to be undertaken to ensure that you create a high-value, useful experience for people using your conversational interface," said Tim Deeson, co-founder and CEO of London-based chatbot development studio GreenShoot Labs.

User experience demands a consistent, clear and focused personality that mimics human interaction and puts users at ease. Chatbots should eventually be able to pass the Turing test, but in the meantime, they should at least be able to function as well-developed customer service assistants that have flexible and coherent natural language processing (NLP) skills.

"Machine learning systems have no consistent personality because the dialogue answers are all amalgamated text fragments from different sources," Peart said.

"However, the problem of a consistent personality is nevertheless dwarfed by the problem of semantics," he continued. "In a linguistic based conversational system, humans can ensure that questions with the same meaning receive the same answer. A machine learning system might well fail to recognize similar questions phrased in different ways, even within the same conversation."
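The point about questions with the same meaning receiving the same answer can be illustrated with a minimal sketch of a rule-based approach. This is not Artificial Solutions' method or any vendor's API; the synonym table, the intent name and the answer text are all hypothetical, chosen only to show how differently phrased questions can be normalized to one response.

```python
import re

# Hypothetical synonym table: each surface word maps to a canonical token.
SYNONYMS = {
    "reset": "reset", "change": "reset", "recover": "reset",
    "forgot": "reset", "forgotten": "reset",
    "password": "password", "passcode": "password",
}

# Hypothetical intents, each defined by the canonical tokens it requires.
INTENTS = {
    "reset_password": {"reset", "password"},
}

# Hypothetical canned answers, one per intent.
ANSWERS = {
    "reset_password": "Use the 'Forgot password' link on the sign-in page.",
}

FALLBACK = "Sorry, I did not understand. Could you rephrase?"


def canonical_tokens(text):
    """Lowercase the text, split it into words and keep known canonical tokens."""
    words = re.findall(r"[a-z']+", text.lower())
    return {SYNONYMS[w] for w in words if w in SYNONYMS}


def answer(text):
    """Return the answer for the first intent whose required tokens all appear."""
    tokens = canonical_tokens(text)
    for intent, required in INTENTS.items():
        if required <= tokens:
            return ANSWERS[intent]
    return FALLBACK
```

In this sketch, "How do I reset my password?" and "I forgot my passcode" resolve to the same intent and therefore the same answer, while an unrelated question falls through to the fallback. The trade-off is that every synonym and intent has to be curated by hand.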

Looking ahead, bots need to further develop their NLP and their ability to go off-script. In companies with many options, products or services, users will naturally be slow, forgetful and prone to interrupting. Chatbots will need to reflect the nuances of conversation, human memory and development in order to become a valid replacement for customer service agents who -- despite language barriers -- can exhibit patience, intelligence, understanding and flexibility.

Voice interface

If the future demands advanced chatbots that do more than use scripted, single-turn exchanges, then their method of interface will also have to advance. A voice interface can assist users with disabilities or those who are skeptical of technology, but it also requires another layer of NLP development.

While some experts such as Ramesh Hariharan, co-founder and CTO of LatentView Analytics, say that using a voice interface is foundational to the future success of chatbots, other developers argue for a separation between fully functioning chatbots and voice-based digital assistants.

Wai Wong, founder and CEO of Serviceaide, a California-based software company, advised developers to approach voice interface on a case-by-case basis. Rather than treating voice as the only future, developers should focus on working within device capabilities to boost user accessibility and flexibility. Chatbots can offer voice-to-text, voice-to-voice or text-to-voice interfaces, depending on customer need or brand decision.

"The important question is how a conversational platform interacts with the voice capability already existing: supplementing what is there or integrating into the on-device capability," Wong said.
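The case-by-case approach described above can be sketched as a small selection function. This is an illustrative assumption, not Serviceaide's implementation: the `Device` fields and the `prefers_voice` flag are hypothetical stand-ins for whatever capability and preference signals a real platform exposes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    """Hypothetical description of what the user's device can do."""
    has_microphone: bool
    has_speaker: bool


def choose_interface(device, prefers_voice):
    """Return (input_mode, output_mode), each either 'voice' or 'text'.

    Voice is used only where the device supports it and the user
    (or the brand's configuration) has asked for it.
    """
    input_mode = "voice" if device.has_microphone and prefers_voice else "text"
    output_mode = "voice" if device.has_speaker and prefers_voice else "text"
    return input_mode, output_mode
```

With these assumptions, a device that has a microphone but no speaker gets a voice-to-text interface, one with a speaker but no microphone gets text-to-voice, and any user who opts out of voice stays on text in both directions.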

Deeson and Peart both noted that voice interfaces will need to be finely developed in order for the tool to become a functional priority for the future scope of chatbots. Voice interface can be a useful chatbot tool, but it has to understand sensitive content and user context.

"Voice will get more and more sophisticated and become more effective -- it has a great use case in cars. But voice is just another tool and interface, and it won't replace everything," Deeson said.

Futurebots

While voice interface may be optional, chatbots have been in the enterprise long enough for developers and experts to begin identifying which elements of chatbots are mainstay requirements. NLP development, human-like conversational flexibility and 24/7 service are crucial to maintaining chatbots' longevity in enterprise settings. Chatbots are AI applications and, looking ahead, they need to keep up with AI trends, such as automated machine learning, easy system integration and developing intelligence.

Hariharan said that, above all else, chatbots need to have a basic foundation of natural language processing, learning and understanding. This extends to interpreting user intent, developing domain-specific language and improving their ability to adapt based on the specifics of any conversation. As the chatbot novelty wears off and users become more demanding of operational ability, adaptability will become increasingly important.