
Responsible AI vs. ethical AI: What's the difference?

Ethical AI establishes principles for AI development and use, while responsible AI ensures they're implemented in practice. Learn how the two differ and complement each other.

Responsible AI and ethical AI are closely related, and each offers distinct but overlapping principles for AI development and use. Successful organizations cannot have one without the other.

Responsible AI focuses on accountability, transparency and compliance with regulations, while ethical AI -- sometimes referred to as AI ethics -- emphasizes broader moral principles like fairness, privacy and societal impact. Recently, discussions about their importance have intensified, pushing organizations to consider the nuances and benefits of integrating both frameworks.

Responsible AI and ethical AI work hand in hand. The loftiest ethical AI ambitions can amount to nothing without practical implementation; likewise, responsible AI needs to be grounded in clear and purposeful ethical principles. And AI ethics concerns often inform the regulatory frameworks that responsible AI initiatives must adhere to, highlighting their mutual influence.

By combining both approaches, organizations can build and deploy AI systems in ways that are not only legally sound, but also aligned with human values and designed to minimize harm.

The need for ethical AI

Ethical AI refers to the values and moral expectations governing AI use. These principles can evolve and vary: What is acceptable today might not be tomorrow, and ethical standards can differ by culture and country. However, many ethical principles, such as fairness, transparency and avoidance of harm, tend to be consistent across regions and over time.

Hundreds, if not thousands, of organizations have expressed interest in ethical AI by developing ethical frameworks, an important first step. AI and automation technologies can fundamentally alter existing relationships and dynamics among stakeholders, possibly requiring an update to the social contract -- that implicit agreement on how society should function.

Ethical AI informs and drives these discussions, helping define the contours of an AI social contract by establishing what is and isn't acceptable. AI ethics frameworks often serve as a precursor to AI regulation, although some regulation is emerging alongside or even ahead of formal ethical frameworks.

This evolution requires input from multiple stakeholders, including consumers, citizens, activists, academics, researchers, employers, technologists, lawmakers and regulators. Existing power dynamics can also influence whose voices shape the ethical AI landscape, with certain groups having more influence than others.

Ethical AI vs. responsible AI

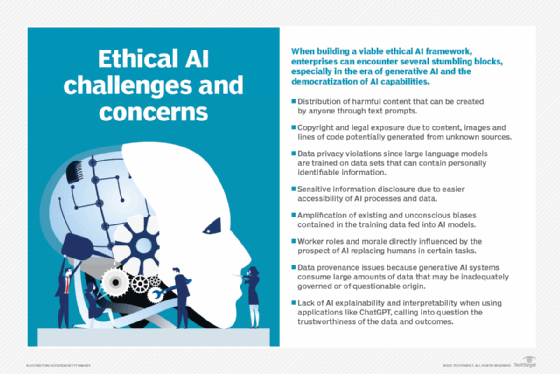

Ethical AI is aspirational, focusing on AI's long-term effects and societal impact. Numerous ethical concerns around AI have surfaced in recent years, especially following the rise of generative AI.

One important issue is machine learning bias, which occurs when AI systems produce biased, stereotyped or harmful outputs because of flawed or unrepresentative training data sets or problematic model designs. This bias is particularly dangerous in high-stakes use cases such as loan approvals and police surveillance, where biased output and decision-making can cause serious harm and perpetuate existing inequalities.
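One common way to make bias in a use case like loan approvals measurable is to compare outcome rates across groups. The sketch below is illustrative only: it assumes binary approve/deny decisions and a single group attribute, and the data and group labels are hypothetical. It computes per-group approval rates and the ratio of the lowest rate to the highest. The 0.8 threshold echoes the "four-fifths" rule of thumb from U.S. employment guidelines. It is a screening heuristic, not a complete fairness assessment or a legal test.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is True for an approval and False for a denial.
    """
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    return {g: approvals[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group approval rate to the highest (1.0 = parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group had any approvals, so rates do not differ
    return min(rates.values()) / highest


# Hypothetical loan decisions: (applicant group, approved?)
decisions = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'A': 0.75, 'B': 0.25}
print(round(ratio, 3))  # 0.333
if ratio < 0.8:  # four-fifths rule of thumb; a prompt for review, not a verdict
    print("Flag for review: approval rates differ substantially across groups")
```

A low ratio does not by itself prove unfair treatment, and a ratio near 1.0 does not rule it out. Metrics like this one are a starting point for the human review that responsible AI practices call for.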

Other ethical concerns include AI hallucinations, where systems generate false information, and generative AI deepfakes, which can be used to spread disinformation. The common thread of these ethical AI issues is that they all threaten basic human values, such as safety, dignity, equality and democracy.

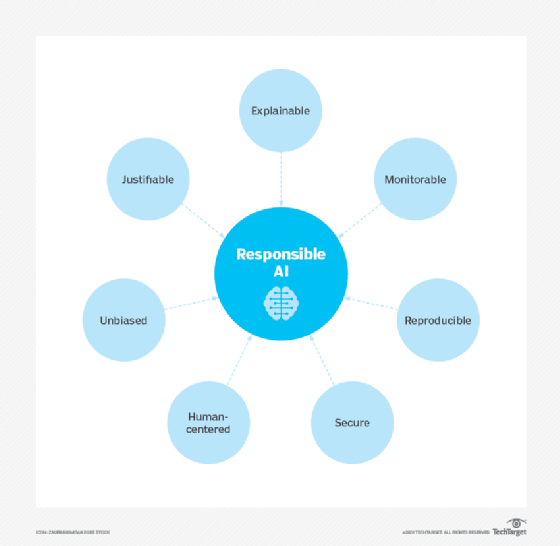

Responsible AI, in contrast, addresses both ethical concerns and business risks -- issues like data protection, security, transparency and regulatory compliance. It provides concrete ways to operationalize ethical AI aspirations as responsible AI practices for each phase of the AI lifecycle, from design and development to monitoring and usage.

The relationship between ethical AI and responsible AI is like the relationship between a company's vision and the operational playbooks used to achieve it. Ethical AI provides the high-level principles, while responsible AI shows how to implement those principles in practice throughout the AI lifecycle.

The challenges of putting principles into practice

Modern enterprises rely on codified business processes and practices. To be sure, there is room for human discretion, but standardized processes are the norm to ensure efficiency, consistency and scale. This applies to software development, including AI, where following standard methodologies and processes leads to many organizational benefits.

Although ethical AI is sometimes treated as a separate initiative focused on broader societal impacts, ethical principles are frequently included in responsible AI frameworks. To implement these principles, organizations must integrate them into existing processes, routines and development practices. This is often done through user-friendly checklists, standardized methodologies, reusable templates and evaluation guides. As a result, AI ethics considerations often appear as items within a comprehensive responsible AI checklist.
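To illustrate how a checklist can become part of a standard process rather than a document on the side, here is a minimal sketch of a checklist used as a deployment gate. The item names, owners and the `can_release` rule are hypothetical examples, not a prescribed standard. The idea is simply that required items block a release until someone signs them off.

```python
from dataclasses import dataclass


@dataclass
class ChecklistItem:
    """One hypothetical review item in a responsible AI checklist."""
    name: str
    owner: str            # function expected to sign off, e.g. "privacy"
    required: bool = True
    done: bool = False


def release_blockers(items):
    """Return the names of required items that are not yet completed."""
    return [item.name for item in items if item.required and not item.done]


def can_release(items):
    """Deployment gate: allow release only when no required items are open."""
    return not release_blockers(items)


checklist = [
    ChecklistItem("Bias evaluation on representative test data", "data science", done=True),
    ChecklistItem("Model documentation and known limitations", "engineering", done=True),
    ChecklistItem("Privacy impact review", "privacy"),
    ChecklistItem("User-facing disclosure drafted", "marketing", required=False),
]

print(can_release(checklist))       # False
print(release_blockers(checklist))  # ['Privacy impact review']
```

In practice, a gate like this would typically sit inside an existing CI/CD or model-approval workflow. That way the ethical review happens as a routine step instead of an optional one.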

Implementing responsible AI

While ethical AI is top of mind for many organizations, it is usually embedded into responsible AI practices. Organizations should focus on the following areas when implementing responsible AI:

- Transparency. Both technical and nontechnical measures can increase transparency. Explainable AI techniques can help make models more transparent, although not all complex AI systems can be fully explained. In addition to comprehensive technical documentation, transparency includes clearly communicating with users about system limitations, biases and appropriate use.

- Stakeholder involvement. Responsible AI requires input from multiple stakeholders in the organization. These might include technical teams, legal and compliance, quality assurance, risk management, privacy and security, data governance, procurement and vendor management. Some implementations might also call for insights from subject matter experts in areas such as finance, HR, operations and marketing.

- Documentation. A RACI matrix -- Responsible, Accountable, Consulted, Informed -- should outline the roles and responsibilities of each stakeholder throughout the AI lifecycle. To equip stakeholders to effectively contribute, organizations must create templates, checklists and other tools for each function involved.

- Regulation and compliance. Organizations must stay agile and up to date as AI regulations evolve globally. In addition to complying with the EU AI Act where it applies, businesses should pay attention to emerging regulatory frameworks in other regions, such as the U.S. and China, as well as relevant local or state regulations. Both internal and third-party audits can help organizations assess and validate their compliance.

- Third-party tools. Some organizations build their own AI systems, while others use third-party AI applications; many do a mix of both. Organizations must develop and enforce guidelines and requirements for AI procurement from external suppliers, specifying vendor obligations and system compliance.
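The RACI matrix described under Documentation can also be kept as structured data and checked automatically. In the minimal sketch below, the activities and role assignments are hypothetical, and the stakeholder names are borrowed from the list above. It assumes the common RACI convention that each activity has exactly one Accountable party and at least one Responsible party. Organizations may adopt different rules.

```python
# Hypothetical AI lifecycle activities mapped to stakeholder roles.
# R = Responsible, A = Accountable, C = Consulted, I = Informed.
RACI = {
    "Data sourcing": {"data governance": "A", "technical teams": "R",
                      "privacy and security": "C", "legal and compliance": "I"},
    "Model development": {"technical teams": "A", "quality assurance": "C",
                          "risk management": "I"},
    "Vendor selection": {"procurement": "A", "legal and compliance": "A",
                         "technical teams": "R"},
}

VALID_CODES = {"R", "A", "C", "I"}


def raci_problems(matrix):
    """List rule violations, assuming the common convention of exactly one
    Accountable and at least one Responsible party per activity."""
    problems = []
    for activity, roles in matrix.items():
        codes = list(roles.values())
        unknown = set(codes) - VALID_CODES
        if unknown:
            problems.append(f"{activity}: unknown codes {sorted(unknown)}")
        accountable = codes.count("A")
        if accountable != 1:
            problems.append(f"{activity}: {accountable} Accountable parties (expected 1)")
        if "R" not in codes:
            problems.append(f"{activity}: no Responsible party")
    return problems


for problem in raci_problems(RACI):
    print(problem)
```

Run against the sample matrix, the check reports that "Model development" has no Responsible party and "Vendor selection" has two Accountable parties. These are the kinds of gaps in ownership that a RACI matrix is meant to surface before a system reaches production.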

Kashyap Kompella is an industry analyst, author, educator and AI adviser to leading companies and startups across the U.S., Europe and the Asia-Pacific region. Currently, he is the CEO of RPA2AI Research, a global technology industry analyst firm.