Reinforcement learning and deep learning pairing pushes AI limits

The pairing of reinforcement learning and deep learning is enabling researchers to push the boundaries of what AI can do and could contribute to advanced applications.

Reinforcement learning and deep learning arose as separate disciplines within AI, but researchers are increasingly finding that pairing the two can deliver promising applications.

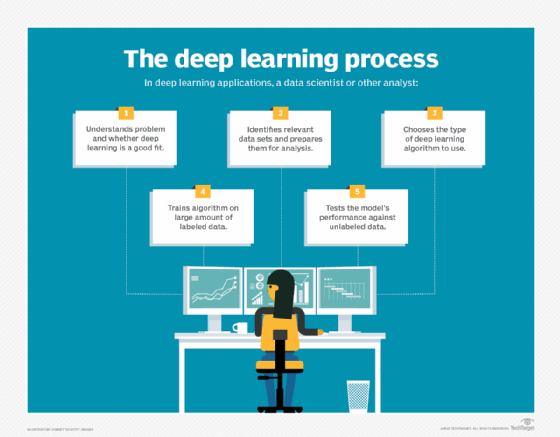

Deep learning has excelled at tasks like training classifiers for image and speech recognition. Reinforcement learning techniques have excelled at creating AI systems that improve through trial and error to produce game-playing bots and recommendation engines.

At the Re•Work Deep Reinforcement Learning Summit in San Francisco, researchers explored how the two approaches are being combined to craft more automated and optimized reinforcement learning algorithms.

"In the last six years, we've been really focusing on getting this combination of deep networks and reinforcement learning to be more stable, more reliable, more predictable," said Marc Bellemare, research scientist at Google Brain, in an interview.

A lot of his team's early work in reinforcement learning was focused on crafting the features used in algorithms for applications like playing video games or recommending medical treatments.

"Now, with deep networks, we can automate that process and basically allow the system to discover its features by itself, and that's proven really powerful," Bellemare said.

Atari video games have been a natural fit for much of this research because they are simple to set up and make it easy to measure the accuracy and performance of various approaches to developing and executing the algorithms. These environments are also easy to run inside larger reinforcement learning and deep learning infrastructure for developing the fundamentals. Bellemare said his team hopes to transfer these new approaches to real-world problems.

Google has created an open source framework called Dopamine for developing and testing deep reinforcement learning algorithms. Bellemare said the name pays homage to the dopamine molecule that appears to drive reinforcement learning in humans.

Reinforcement learning has been around for over 30 years, but real progress picked up over the last six years with the advent of deep learning. Bellemare estimated adding deep learning to reinforcement learning models has led to a threefold performance increase.

One key advance has been the development of techniques for distributional reinforcement learning. Rather than focusing only on the expected outcome of an algorithm's action, these techniques also try to predict the range and variety of possible outcomes.
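To make the distinction concrete, here is a minimal sketch of why the spread of outcomes can matter. It is not an implementation of any published distributional algorithm. The two actions, their payoffs and the function names are all hypothetical, chosen so that both actions have roughly the same average return but very different variability.

```python
import random
import statistics

def sample_return(action, rng):
    """Hypothetical one-step environment: both actions average about 1.0."""
    if action == "safe":
        return 1.0  # always the same reward
    # "risky" pays 10.0 roughly one time in ten, otherwise nothing
    return 10.0 if rng.random() < 0.1 else 0.0

def return_distribution(action, n_samples=10_000, seed=0):
    """Estimate an action's return distribution by Monte Carlo sampling."""
    rng = random.Random(seed)
    samples = [sample_return(action, rng) for _ in range(n_samples)]
    return {
        "mean": statistics.fmean(samples),
        "stdev": statistics.pstdev(samples),
        "samples": samples,
    }

safe = return_distribution("safe")
risky = return_distribution("risky")
print(f"safe : mean={safe['mean']:.2f} stdev={safe['stdev']:.2f}")
print(f"risky: mean={risky['mean']:.2f} stdev={risky['stdev']:.2f}")
```

A system that tracks only the average would see these two actions as nearly interchangeable, while one that models the whole distribution can tell them apart.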

Researchers are also seeing dramatic improvements in the ability to automate the development of reinforcement learning algorithms and the ability to scale up their development and execution across distributed computing clusters.

Enabling generalization

Overfitting is a common challenge in the development of more accurate reinforcement learning algorithms. As the algorithms become better at solving a given set of problems, like completing levels in a video game, they can sometimes become less accurate when confronted with new challenges, like playing new levels in that game.

To address this problem, Karl Cobbe, a research scientist at OpenAI, has been exploring different approaches for explicitly testing for generalization. His team's early work on training algorithms to play Sonic the Hedgehog showed strong results when the algorithm played levels similar to those it had trained on, but the algorithm fared poorly on different ones.

In contrast, a human would need only a couple of levels of a new video game to really understand what is going on, Cobbe said. So, the researchers developed a mock-up of Sonic, called CoinRun, which emulated many of the elements of gameplay but allowed new levels to be procedurally generated. A procedurally generated environment starts with a random seed and uses a decision execution framework to dynamically generate a new game level on which to train.
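The idea of seed-driven level generation can be sketched in a few lines. This is a toy illustration only and does not reflect how CoinRun itself builds levels. The `generate_level` function, the grid symbols and the obstacle probability are assumptions made up for this example.

```python
import random

def generate_level(seed, width=20, height=5):
    """Build a toy level layout from a seed (hypothetical, not CoinRun's generator)."""
    rng = random.Random(seed)  # private RNG, so the seed fully determines the level
    rows = [["." for _ in range(width)] for _ in range(height)]
    rows[-1] = ["#"] * width  # solid floor on the bottom row
    for x in range(2, width - 2):  # keep the start and goal columns clear
        if rng.random() < 0.2:
            obstacle_height = rng.randint(1, height - 2)
            for y in range(height - 1 - obstacle_height, height - 1):
                rows[y][x] = "#"
    rows[height - 2][0] = "A"  # agent start
    rows[height - 2][width - 1] = "C"  # coin at the far end
    return ["".join(row) for row in rows]

# The same seed always reproduces the same level; a new seed gives a new layout.
for line in generate_level(seed=7):
    print(line)
```

Because each level is fully determined by its seed, a practically unlimited supply of distinct training levels can be produced on demand, and any one of them can be regenerated later for debugging or evaluation.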

Cobbe observed that most reinforcement learning and deep learning researchers are not explicitly evaluating generalization, because many of the benchmarks encourage training and testing on the same set of environments.
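One way to picture an explicit generalization test is to keep the training and test levels strictly separate and compare scores on each. The sketch below assumes levels are identified by integer seeds. The function names and the stand-in "memorizing" agent are hypothetical and are not taken from OpenAI's evaluation code.

```python
import random
from statistics import fmean

def split_level_seeds(num_train, num_test, master_seed=0):
    """Draw disjoint sets of level seeds for training and for held-out testing."""
    rng = random.Random(master_seed)
    seeds = rng.sample(range(1_000_000), num_train + num_test)  # sample() never repeats
    return seeds[:num_train], seeds[num_train:]

def generalization_gap(score_fn, train_seeds, test_seeds):
    """Average score on training levels minus average score on unseen levels."""
    train_score = fmean(score_fn(seed) for seed in train_seeds)
    test_score = fmean(score_fn(seed) for seed in test_seeds)
    return train_score - test_score

train_seeds, test_seeds = split_level_seeds(num_train=100, num_test=100)

# Stand-in for a trained agent that has simply memorized its training levels:
# it scores 1.0 on levels it has seen and 0.2 on anything else.
memorized = set(train_seeds)

def memorizing_agent_score(seed):
    return 1.0 if seed in memorized else 0.2

gap = generalization_gap(memorizing_agent_score, train_seeds, test_seeds)
print(f"generalization gap: {gap:.2f}")
```

A benchmark that trains and tests on the same levels would report only the training score and never reveal the gap.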

The initial research paid off. Cobbe and his team found the algorithms trained on these procedurally generated levels worked better on new levels. Surprisingly, they found training did not slow down much, either, compared with algorithms trained on smaller sets of levels.

In the long run, Cobbe said he believes similar techniques could be adapted to procedurally generate data for other kinds of tasks, such as robot simulations or generating conversations, which could help in training bots.

Giving robots a sense of touch

Another area of interest lies in crafting deep reinforcement learning algorithms that can be trained on data from across different types of sensors. For example, Facebook researchers are working on giving robotic arms a sense of touch to improve robotic hand dexterity and precision.

"We have shown that grasping can be greatly improved by using tactile sensing compared to just using vision," said Roberto Calandra, research scientist at Facebook, in an interview.

The research takes advantage of new tactile sensors, called GelSight, developed at MIT. The real challenge is finding out how to craft algorithms that make the most of data from different sensory inputs in a way that makes sense. This is important for activities like picking strawberries, where the robot should apply the minimal force necessary so it doesn't crush the fruit.

Ultimately, this could lead to better algorithms in other domains.

"Our hope is that by investigating this sort of very difficult problem of how to understand multiple sensor modalities, we can develop algorithms that can then be used in different scenarios," Calandra said.

For example, a mobile app might use deep reinforcement learning algorithms that combine visual and audio data. Other apps in the home, office or hospital could use various types of IoT sensor data.

"This sort of research would allow you to find ways of using that data to understand what's going on and maybe make better decisions," Calandra said.