
More to machine learning platforms than meets the AI

To reach full analytics potential, machine learning platforms powered by AI must provide scalability, handle multiple models, integrate with data sources and be cloud-friendly.

Evidence is mounting that AI is moving out of the shadows of predominantly niche applications and into the mainstream spotlight. And as companies transition from proof-of-concept pilots and individual use cases to broader, enterprise-wide AI strategies, the machine learning platforms at the heart of many of those strategies often change as well.

"We're moving out of the phase of narrow AI into the beginnings of the phase of broader AI use," said Jamie Thomas, general manager of systems strategy and development at IBM.

For production-level projects, machine learning platforms need to scale, make it possible to compare and retrain models, and integrate with enterprise data systems and other technology infrastructure. More often than not, that means migrating to the cloud.

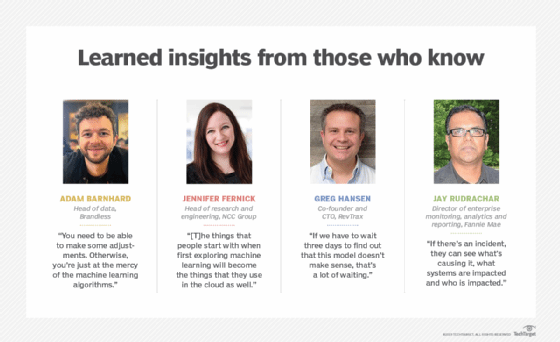

Companies can now store their data, run their applications and access extensive machine learning and AI tool sets and libraries in cloud systems. "All the main cloud computing platforms are working to provide [these capabilities]," said Jennifer Fernick, head of research and engineering at NCC Group, an IT security company.

Cloud providers are also making it easier to move small, on-premises projects -- often built with open source tools -- into cloud environments. "Google supports the use of TensorFlow natively in the cloud," Fernick noted. "That's because, often, the things that people start with when first exploring machine learning will become the things that they use in the cloud as well."

Machine learning the holistic way

Fannie Mae chose Tableau Software's platform for business analytics and data visualization and Splunk for its IT operations data lake and analytics. These are two very different types of machine learning projects, said Jay Rudrachar, Fannie Mae's director of enterprise monitoring, analytics and reporting.

On the business side, the company is looking at client data, mortgage assets, employment information and similar data points "to improve the underwriting engine," he explained. But for IT operations, the data is composed of log entries from networks, applications, security and other IT systems. "Our IT operations team is now getting everything in one place," he said. "If there's an incident, they can see what's causing it, what systems are impacted and who is impacted."

The company decided not to allow individual teams to choose the platforms that best suited their specific immediate projects. "We're dealing with multiple technology groups, multiple technology products," Rudrachar said. "But if we allow each [group] to build their own minimum viable solution, you will not see the overall end goal. It has to be a holistic approach."

If each individual problem required its own data set, collection of sensors and sampling frequency, it would be hard to correlate that data across the enterprise to find the root cause of problems. And some important data might be missing altogether. "Enterprise monitoring has to be a centralized function," he said.

One model is not enough

It's important to choose a machine learning platform that can handle multiple models at once so they can be compared with one another. Consumer products maker and online retailer Brandless Inc. began moving from custom-built Python notebooks running on on-premises machines to a scalable, cloud-based data science architecture using Amazon SageMaker, MLflow and Databricks.
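To make the idea of comparing models concrete, here is a minimal sketch in plain Python. It is not Brandless's pipeline and does not use SageMaker, MLflow or Databricks; the two toy "models," the holdout data and every function name are hypothetical, chosen only to show candidates being scored on the same data and the better one being selected.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical holdout data: (last category bought, category bought next).
HOLDOUT: List[Tuple[str, str]] = [
    ("soap", "shampoo"),
    ("soap", "shampoo"),
    ("coffee", "snacks"),
    ("coffee", "tea"),
    ("soap", "lotion"),
]

Model = Callable[[str], str]


def most_popular_model(last_category: str) -> str:
    """Candidate A (illustrative): always predict the same category."""
    return "shampoo"


def lookup_model(last_category: str) -> str:
    """Candidate B (illustrative): predict from a hand-written lookup table."""
    table = {"soap": "shampoo", "coffee": "snacks"}
    return table.get(last_category, "shampoo")


def accuracy(model: Model, data: List[Tuple[str, str]]) -> float:
    """Fraction of holdout rows where the model predicts the next category."""
    hits = sum(1 for last, nxt in data if model(last) == nxt)
    return hits / len(data)


def pick_best(
    candidates: Dict[str, Model], data: List[Tuple[str, str]]
) -> Tuple[str, Dict[str, float]]:
    """Score every candidate on the same data and return the top scorer."""
    scores = {name: accuracy(model, data) for name, model in candidates.items()}
    best = max(scores, key=lambda name: scores[name])
    return best, scores


best_name, scores = pick_best(
    {"most_popular": most_popular_model, "lookup": lookup_model}, HOLDOUT
)
print(best_name, scores)
```

In a real platform the candidates would be trained models and the metric would be whatever the business tracks, but the shape is the same: identical evaluation data, one score per model, and a recorded decision about which version to deploy.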

"Before, we had one model in place," said Adam Barnhard, head of data at Brandless. "Now, we can be training two different models in parallel and can push both of those models to MLflow and deploy into SageMaker. That's a really big win for us because we can try different versions."

Since the new machine learning platform entered production mode earlier this year, the e-commerce company has iterated through roughly 10 different models, Barnhard said, "and we've seen a 15% to 20% increase in performance metrics as a result of these different tests." Post-processing is also important. "You need to be able to make some adjustments," he added. "Otherwise, you're just at the mercy of the machine learning algorithms."

When customers purchase a single item from a specific line of household goods, for example, "we want to make sure that people get exposure to other products as well," he explained. "So we might want to manually add, say, the next most likely product category to their view. Right now, we have a filter at the end to bring those other categories in."
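A post-processing filter of the kind Barnhard describes could look something like the following sketch. It assumes the model produces a score per product category and that the "view" is a list of category names; the function name, the category names and the scores are all hypothetical, since the article does not describe the actual implementation.

```python
from typing import Dict, List


def add_next_category(
    view: List[str], category_scores: Dict[str, float], extra: int = 1
) -> List[str]:
    """Append the highest-scoring categories that are not already in the view."""
    ranked = sorted(category_scores, key=lambda c: category_scores[c], reverse=True)
    missing = [c for c in ranked if c not in view]
    return view + missing[:extra]


# Hypothetical model output for a customer who bought one cleaning product.
model_view = ["cleaning"]
scores = {"cleaning": 0.71, "kitchen": 0.14, "beauty": 0.09, "pantry": 0.06}

final_view = add_next_category(model_view, scores)
print(final_view)
```

The filter leaves the model's own ranking untouched and only appends to it, which keeps the manual adjustment easy to switch off when testing whether the override actually helps.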

The AI system continually monitors the different models to determine how well they're working for customers and whether manual override produces better results. The models are then retrained manually once a week. "We're looking to make [the training process] daily and fully automated," said Barnhard. The company is currently testing that, he noted.

Beware of exploding data sets

Machine learning platforms and AI systems are extremely data hungry. When they start adding advanced analytics, many companies find that their existing data processes do not scale up, or that expanding them takes too long or costs too much.

Software vendor RevTrax, which helps retailers like Men's Wearhouse run marketing promotions and offer coupons, discounts and other incentives, began offering its clients a way to personalize these promotions to individual customers. To gather insights on customer behavior patterns and buying habits, RevTrax first called on data already housed in its traditional analytics system.

"But we found that the data set explodes," said Greg Hansen, the company's co-founder and CTO. "The machines we had for day-to-day use weren't anywhere near big enough. Nowhere near close."

The company discovered that collecting individual data points is not enough. Machine learning also requires combinations of different pieces of data, which are created as part of the learning process and dramatically expand the amount of information being processed.
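A rough way to see why combined data grows so quickly is to count the combinations. The sketch below is an illustration only: the article does not say how RevTrax builds its combinations, so the figure of 100 base data points and the function name are assumptions.

```python
from math import comb


def combined_feature_count(base_features: int, max_order: int) -> int:
    """Count combinations of 1 up to max_order items drawn from base_features."""
    return sum(comb(base_features, k) for k in range(1, max_order + 1))


# Assumed example: 100 base data points, combined singly, in pairs and in triples.
for order in (1, 2, 3):
    print(order, combined_feature_count(100, order))
```

With 100 base data points, allowing pairs raises the count to 5,050 and allowing triples raises it to 166,750. If every possible subset were allowed, the count would be 2^100 - 1, which is where genuinely exponential growth comes from.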

"We're talking exponential growth," Hansen said. "If we have terabytes of data from transactions for the models, it will explode into petabytes of data."

RevTrax needed to get the data into a scalable cloud platform to provide enough memory for processing -- and not just any cloud. According to Hansen, the company ran into performance walls that slowed the process with Microsoft Azure, but not so with Amazon Web Services.

"On AWS," he said, "we just deployed much larger machines and kept a close eye to make sure they would get turned off, so we would only keep them on when we needed them to run."

As a result, the time to build a model was reduced from days to just hours. "If we have to wait three days to find out that this model doesn't make sense, that's a lot of waiting," Hansen said. "But if it's three hours, it's not the end of the world; we can do it two or three times a day."

Machine learning's many talents

Machine learning platforms have to let data scientists integrate with data sources and popular open source tools. They also need interfaces for nontechnical users and compatibility with a company's overall IT architecture. "There are some industry solutions that don't plug into your cloud platform or other vendor systems," said Anand Rao, global AI lead at PricewaterhouseCoopers. The platforms also must provide auditability and oversight, so companies can monitor them for compliance, bias and other potential problems, he said, adding, "We don't want black boxes."