More curiosity could help narrow AI tools handle broader uses

Today, engineers are developing AI tools primarily for individual applications, but programming a facsimile of curiosity into algorithms could help make them more general purpose.

Artificial intelligence has made significant strides in recent years, but it still has a major constraint: narrowness.

The inherent limitation of AI today is its specificity. You can get a narrow AI application to take orders at the McDonald's drive-thru or beat the world's best chess and Go players. You can build AI software that drives cars with fewer accidents than human drivers. You can also use AI for quality control in product assembly, for diagnosing cancer or for prequalifying mortgage applicants. However, the moment you change the task even slightly, the AI tool is at a loss as to how to respond.

To understand AI's specificity problem, it helps to look at the core principle of how AI works today. The problem is rooted in the conceptual approach of approximating human decision-making by combining sets of algorithms into a model that is then rewarded for good responses to environmental stimuli and punished for bad ones.
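The reward-and-punishment loop described above can be sketched in a few lines of Python. This is a minimal illustration that assumes a toy environment. The stimuli, the responses and the `make_env`, `train_agent` and `act` helpers are all hypothetical, and real systems use far richer models than a lookup table. The narrowness is easy to see: the agent only holds preferences for the stimuli it was trained on.

```python
from collections import defaultdict

# Hypothetical set of responses the model can choose from.
ACTIONS = ["left", "right", "stop"]


def make_env(correct):
    """Return a toy environment: +1 (reward) for the right response, -1 (punishment) otherwise."""
    def env(stimulus, action):
        return 1.0 if correct.get(stimulus) == action else -1.0
    return env


def train_agent(env, stimuli, episodes=50, lr=0.5):
    # scores[stimulus][action] is a learned preference; everything starts at 0.
    scores = defaultdict(lambda: {a: 0.0 for a in ACTIONS})
    for _ in range(episodes):
        for s in stimuli:
            prefs = scores[s]
            action = max(ACTIONS, key=prefs.get)  # pick the currently preferred response
            reward = env(s, action)               # +1 = rewarded, -1 = punished
            prefs[action] += lr * (reward - prefs[action])  # nudge preference toward the reward
    return scores


def act(scores, stimulus):
    """Respond with the highest-scoring action; unseen stimuli fall back to an arbitrary tie-break."""
    prefs = scores.get(stimulus, {a: 0.0 for a in ACTIONS})
    return max(ACTIONS, key=prefs.get)


correct = {"red_light": "stop", "green_arrow": "right", "left_arrow": "left"}
scores = train_agent(make_env(correct), list(correct))
for s in correct:
    print(s, "->", act(scores, s))
print("yellow_light" in scores)  # False: the agent never learned anything about it
```

Punished responses sink below zero, so the agent moves on to the next candidate until one is rewarded. Nothing it learns carries over to a stimulus outside its training set.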

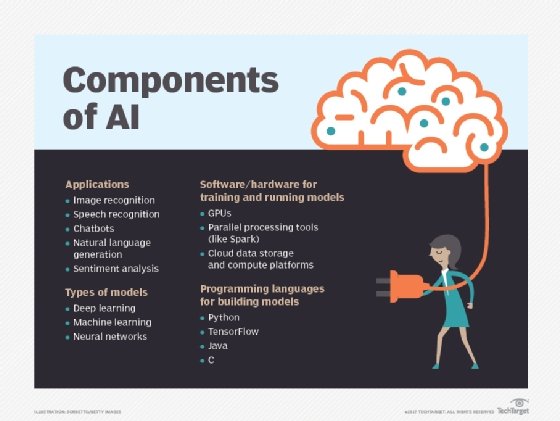

AI equation: Algorithms plus compute power

The narrow AI of today is based on sophisticated algorithms and incredible amounts of brute force compute power. In effect, today's AI is an accumulation of ever better algorithms, running on ever better hardware, that calculate their way out of narrowly defined challenges.

For example, when looking for a tumor on a CT image, an AI application sees combinations of individual pixels that have no meaning by themselves. However, different combinations of pixels in an image can be correlated with different probabilities that cancer is present.
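The pixel-correlation idea can be made concrete with a minimal logistic-regression sketch in Python. It assumes toy 2x2 "scans" with made-up intensities and labels. These are purely illustrative, not medical data. The helper names are also hypothetical, and real imaging models are deep networks trained on far larger sets of labeled scans. No single pixel carries meaning on its own, but the learned weights turn a combination of pixel values into a probability.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, pixels):
    """Turn a weighted combination of pixel intensities into a probability."""
    z = bias + sum(w * p for w, p in zip(weights, pixels))
    return sigmoid(z)


def fit_logistic(images, labels, epochs=500, lr=0.5):
    weights = [0.0] * len(images[0])
    bias = 0.0
    for _ in range(epochs):
        for pixels, y in zip(images, labels):
            # Gradient of the log-loss with respect to z is (prediction - label).
            err = predict(weights, bias, pixels) - y
            bias -= lr * err
            weights = [w - lr * err * p for w, p in zip(weights, pixels)]
    return weights, bias


# Toy 2x2 "scans" flattened to 4 pixel intensities (0 = dark, 1 = bright).
# Label 1 marks a hypothetical suspicious pattern: a bright pixel in position 1.
images = [
    [0.1, 0.9, 0.0, 0.1],
    [0.0, 0.8, 0.1, 0.0],
    [0.2, 1.0, 0.1, 0.1],
    [0.1, 0.0, 0.0, 0.1],
    [0.9, 0.1, 0.8, 0.0],
    [0.0, 0.1, 0.9, 0.2],
]
labels = [1, 1, 1, 0, 0, 0]

weights, bias = fit_logistic(images, labels)
print([round(w, 2) for w in weights], round(bias, 2))
print(round(predict(weights, bias, [0.0, 1.0, 0.1, 0.0]), 3))
```

The model only knows the one pattern its labels describe. Asked about anything else, it still returns a probability, but that number reflects nothing the model has learned.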

This is a fundamentally different approach from how a human doctor looks for cancer. Instead of relying on raw processing power, the doctor draws on her experience, skill and intuition to look for signs of cancer where they are most likely to occur. Ultimately, this can lead her to identify a few corner cases of cancer and, hopefully, save some lives by doing so.

On the other hand, the AI application relentlessly examines all of the available data, potentially yielding more thorough results than a human doctor. But the same AI program that can identify the rarest corner cases of lung cancer will not be able to diagnose bone cancer, or even a broken leg, because its training images were strictly focused on lung cancer.

Igniting the spark of curiosity in AI

Expert Go players are able to use sophisticated strategies and diversions when playing the board game, but they can't think through every obscure sequence of moves that might lead to a game-winning trap much later in the game. An AI program can explore far more of them.

In its 2016 match against human champion Lee Sedol, AlphaGo, a program from Google subsidiary DeepMind Technologies Ltd., deployed a long-range game plan that didn't produce any immediate, measurable gains on the board. DeepMind had incentivized the algorithm to explore unconventional strategies that increased its probability of gaining significant advantages later in the game.
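One standard way to reward exploration is an optimism bonus, which favors options the algorithm has tried less often. The Python sketch below applies UCB1, a classic multi-armed bandit rule, to hypothetical strategies with made-up win rates. AlphaGo itself used a related exploration bonus inside a Monte Carlo tree search guided by neural networks. This sketch illustrates the principle; it is not DeepMind's method, and `ucb1_pick` and `play` are hypothetical helper names.

```python
import math
import random


def ucb1_pick(counts, means, c=math.sqrt(2)):
    """Choose an option by UCB1: average reward plus a bonus for rarely tried options."""
    for i, n in enumerate(counts):
        if n == 0:
            return i  # try every option once before comparing
    total = sum(counts)
    return max(
        range(len(counts)),
        key=lambda i: means[i] + c * math.sqrt(math.log(total) / counts[i]),
    )


def play(win_rates, rounds=2000, seed=0):
    rng = random.Random(seed)
    counts = [0] * len(win_rates)
    means = [0.0] * len(win_rates)
    for _ in range(rounds):
        i = ucb1_pick(counts, means)
        reward = 1.0 if rng.random() < win_rates[i] else 0.0
        counts[i] += 1
        means[i] += (reward - means[i]) / counts[i]  # running average reward
    return counts, means


# Hypothetical strategies with hidden win rates; the agent only sees outcomes.
counts, means = play([0.2, 0.5, 0.8])
print(counts, [round(m, 2) for m in means])
```

The bonus shrinks as an option gets tried more often. Unconventional choices therefore get a fair hearing early on, while play gradually concentrates on whichever option actually wins most.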

Once AlphaGo had used this spark of curiosity to calculate large sets of permutations that would play out over many turns, it knew how to win games by exploiting the limitations of the human brain. It is exactly this combination that makes AI algorithms nearly unbeatable in many games: the ability to tap into a full repository of what has worked in past games, to explore strategies that are a bit out there, and then to mercilessly implement the ones best suited to the situation on the board.

DeepMind's approach with AlphaGo resembles the way Amazon Web Services' SageMaker machine learning platform automatically searches for the optimal set of hyper-parameters for a specific model and use case.

First, the AI software takes a series of different guesses and observes the predictive accuracy of each guess as the model training begins. It then combines successful configurations from different models into one winning set of hyper-parameters that can be expected to yield the best result for the specific human-defined task.
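The two-step process described above can be sketched as a random search followed by a recombination step: guess first, then combine what worked. This is a minimal illustration under stated assumptions. The search space, the `toy_accuracy` scoring function and the `tune` helper are hypothetical, and the sketch neither uses nor mimics the SageMaker API. SageMaker's automatic model tuning offers its own search strategies, such as Bayesian optimization and random search, rather than this exact recombination.

```python
import itertools
import math
import random

# Hypothetical search space; names and values are illustrative only.
SPACE = {
    "learning_rate": [0.001, 0.01, 0.1],
    "max_depth": [2, 4, 6, 8],
    "batch_size": [16, 32, 64],
}


def toy_accuracy(cfg):
    """Stand-in for validation accuracy after a training run (made-up formula)."""
    score = 0.95
    score -= 0.10 * abs(math.log10(cfg["learning_rate"]) + 2)  # best near 0.01
    score -= 0.02 * abs(cfg["max_depth"] - 6)                  # best near depth 6
    score -= 0.05 * abs(cfg["batch_size"] - 32) / 32           # best near 32
    return score


def tune(n_guesses=8, top_k=3, seed=1):
    rng = random.Random(seed)
    # Step 1: a series of random guesses, each scored by its (toy) accuracy.
    guesses = [{k: rng.choice(v) for k, v in SPACE.items()} for _ in range(n_guesses)]
    best_guesses = sorted(guesses, key=toy_accuracy, reverse=True)[:top_k]
    # Step 2: recombine values from the best guesses and keep the top combination.
    pools = {k: sorted({g[k] for g in best_guesses}) for k in SPACE}
    candidates = [dict(zip(pools, combo)) for combo in itertools.product(*pools.values())]
    winner = max(candidates, key=toy_accuracy)
    return best_guesses[0], winner


best_single, combined = tune()
print(best_single, round(toy_accuracy(best_single), 3))
print(combined, round(toy_accuracy(combined), 3))
```

The best single guess is always one of the recombined candidates, so the combined configuration scores at least as well as the best single guess.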

The path from narrow AI to general uses

This type of algorithmic curiosity is similar to our oncologist knowing where to start looking when evaluating a CT image for cancer. However, the auto-tuning of hyper-parameters and AlphaGo's auto-detection of human weaknesses still can't shake off the brute-force, iterative character of AI.

Drawing on evolutionary theory, the AlphaGo developers correctly assumed that some degree of variation is needed to come up with spectacular wins. However, these variations start out as random guesses, without the human intuition -- or bias -- accumulated through experience and training.
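The variation-plus-selection idea can be sketched as a simple evolutionary hill climber, which also shows how a bigger compute budget buys more variation. Everything here is an assumption for illustration. The `fitness` function, the step size and the `evolve` helper are hypothetical, and this is not how AlphaGo was trained. Each candidate is a blind random tweak with no intuition behind it, and only selection keeps the good ones.

```python
import random


def fitness(x):
    """Made-up objective with a single peak at x = 3.0."""
    return -(x - 3.0) ** 2


def evolve(budget, seed=42, step=0.5):
    rng = random.Random(seed)
    best = 0.0  # arbitrary starting point
    for _ in range(budget):
        candidate = best + rng.gauss(0.0, step)  # random variation, no intuition
        if fitness(candidate) > fitness(best):   # selection keeps only improvements
            best = candidate
    return best


# A larger budget stands in for cheaper compute: more variations can be tried.
for budget in (5, 50, 500):
    x = evolve(budget)
    print(budget, round(x, 3), round(fitness(x), 4))
```

Every run with the same seed replays the same random variations, so a larger budget can only match or improve the result. In this toy setting, cheaper compute simply means more guesses.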

The cheaper compute power becomes, the more variation can be introduced without a significant cost impact, which could help AI compensate for its lack of intuition. But can all of this lead to general artificial intelligence?

Conceptually, if we assume unlimited compute power and humans preparing a sufficiently large training set of text and multimedia data, a narrow AI application could jump from playing chess to playing Go, and from there to taking orders at your local burger joint. However, many potential AI use cases still lack the dependent variable, the labeled outcome the model is supposed to learn to predict. In many cases, humans have to provide it manually as part of the training process.

It doesn't even end there. How does a human train a self-driving car on whether it's OK to run over an animal in a certain situation, or whether it's better to apply the emergency brake when doing so could mean getting rear-ended by an 18-wheeler? The racist chatbot that Microsoft inadvertently deployed in 2016 is only one of many examples where humans can provide a problematic frame of reference for an AI tool.

These questions, which go beyond the purely technical aspects of AI, will continue to challenge the technology as developers try to push it past today's narrowly scoped applications.