freshidea - Fotolia

Machine learning limitations marked by data demands

Machine learning has impressive capabilities in the enterprise, but with high-data requirements and struggles with explainability, it remains unable to reach widespread use.

While machine learning has a variety of use cases and the capability of deep analysis it is not without limitations.

With large data requirements coupled with challenges in transparency and explainability, getting the most out of machine learning can be difficult for organizations to achieve. Understanding these realities of machine learning and establishing realistic expectations is the only way to put your organization in a stronger position.

High potential but high data demands

Data is the core of machine learning. The very nature of machine learning is to train an algorithm on clean and prepared sample data. Through this repeated process, it can learn from the data set and create and apply generalizations to data it has never seen before.

One of the more impressive feats of machine learning to date is represented by the remarkable performance and capability of OpenAI's GPT-3 model, which can generate surprisingly humanlike text output from just a small amount of starter text. While the results are noteworthy, the reality is that petabytes of data, millions of dollars of CPU and GPU power and many hours of training time went into creating the resulting model.

This quantity of data and computing is not available to the average machine learning model developer and highlights one of the major challenges with the current state of the art for machine learning: an extreme dependency on data.

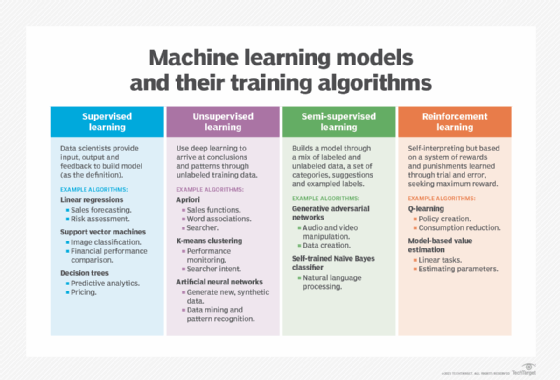

All forms of machine learning are heavily dependent on data, from supervised and unsupervised learning to even reinforcement learning that can teach itself across multiple iterations. But to accomplish many of the tasks of machine learning with acceptable levels of accuracy it requires copious amounts of data to train machine learning algorithms, especially the currently favored deep learning neural networks.

Deep learning neural networks consist of many layers of individual artificial neurons that are connected to subsequent layers with many connections per neuron. Each of these connections consist of weights and biases that must be determined by the machine learning algorithm over multiple iterations of training for the overall network to be adequately trained. The more layers, the more weights and biases that need to be calculated. These calculations take a significant amount of computing power, as well as data and time.

These data limitations aren't the same for many conversational applications such as chatbots or object detection and recognition systems, however. Organizations can either use these pretrained networks outright or simply retrain the networks using transfer learning approaches.

However, this transfer learning approach only works if the new training domain is somewhat similar to the existing network. It's possible to retrain an image recognition network that's already been trained on general images to be used for radiology images, but it's not possible to retrain a speech recognition network to do credit card fraud detection.

The data problems expand past the need for sufficient quantities of data. Organizations also have challenges in cleaning, preparing and labeling that data. Unfortunately, machines are limited to the extent in which they can prepare, collect and label data on their own without human labor and support.

Issues of interpretability and transparency

Trust is crucial when it comes to public embracement of AI and machine learning. The best way to build that trust is through results that are reliable, accurate and explainable. Not being able to understand how these systems get their results only fosters doubt.

The most popular forms of machine learning use deep learning neural networks, and as explained above, produce their results through complicated interconnections, sometimes numbering into the millions of parameters. Looking at the neural network provides very little visibility into understanding how specific inputs contribute to specific outputs.

These sophisticated neural networks are essentially black boxes, because we have no visibility or transparency as to how they work. For many use cases, this sort of lack of explainability is a significant problem. Machine learning systems used in critical systems such as in medical applications, autonomous vehicles or making important life-changing decisions can only be trusted if their results can be easily verified.

Without such easy verification, it becomes necessary to keep the human in the loop or find less accurate but more explainable machine-based approaches. While not all machine learning algorithms face the same lack of explainability, the current push to make greater use of deep learning systems or machine learning models from third parties will only become more pressing.

Challenges on applications of machine learning

Generally, intelligent systems remain out of the grasp of the present, so each machine learning model tends to be built with a narrow application window specific to the problem domain it's solving.

While machine learning has proved to be adept at handling numerous tasks, including performing image and object recognition, as well as identifying patterns and spotting anomalies, its use cases aren't applicable to every scenario. Machine learning systems work best when they are being applied to a task that a human would otherwise do. If the machine isn't being asked to be creative, have intuition or apply common sense, it can excel.

At the end of the day machines are still far from having human capabilities. As can be seen by the ongoing challenges with fully autonomous vehicles, machines are challenged in many situations that humans can handle easily. Machine learning is good at learning from explicit data, but they don't struggle with understanding the world and how it works. A machine learning system might be taught what a vase looks like, but it doesn't inherently understand that it holds water.

These common sense and intuition limitations are felt in applications where humans need to interact with a machine. Chatbots and voice assistants often fail when asked fairly common-sense questions. Autonomous systems have gaping blind spots when they don't recognize a potentially critical issue that a human can spot right away. Recognition systems can literally not spot the elephant in the room.

We don't yet have fully autonomous systems that we can fully trust. As such, AI and machine learning systems are being put to the best use in 'augmented intelligence' roles where the human is still in the loop. The power of machine learning is applied to help humans do their jobs and live their lives better, rather than replace them with something that performs those tasks inadequately.

Machine learning systems have exhibited significant capabilities over the past decade and are clearly here to stay. However, it's still too early to tell what the long-term impacts and adoption trends of machine learning will be, and as such, we must all take into account the limitations of machine learning so we don't over promise their capabilities and underdeliver on those promises.