yoshitaka272 - Fotolia

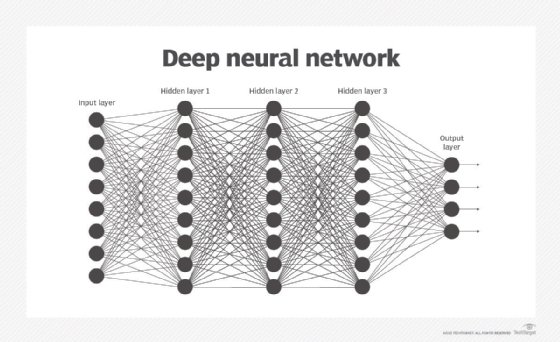

Limitations of neural networks grow clearer in business

AI often means neural networks, but intensive training requirements are prompting enterprises to look for alternatives to neural networks that are easier to implement.

The rise in prominence of AI today can be credited largely to improvements in one category of algorithm: the neural network. But experts say that the limitations of neural networks mean enterprises will need to embrace a fuller lineup of algorithms to advance AI.

"With neural networks, there's this huge complication," said David Barrett, founder and CEO of Expensify Inc. "You end up with trillions of dimensions. If you want to change something, you need to start entirely from scratch. The more we tried [neural networks], we still couldn't get them to work."

Neural network technology is seen as cutting-edge today, but the underlying algorithms are nothing new. They were proposed as theoretically possible decades ago.

What's new is that we now have the massive stores of data needed to train these algorithms and the robust compute power to process all this data in a reasonable period of time. As neural networks have moved from theoretical to practical, they've come to power some of the most advanced AI applications, like computer vision, language translation and self-driving cars.

Training requirements for neural networks are too high

But the problem, as Barrett and others see it, is that neural networks simply require too much brute force. For example, if you show the algorithm a billion example images containing a certain object, it will learn to classify that object in new images effectively. But that's a high bar for training, and meeting that requirement is sometimes impossible.

That was the case for Barrett and his team. At the 2018 Artificial Intelligence Conference in New York, he described how Expensify is using natural language processing to automate customer service for its expense reporting software. Neural networks weren't a good fit for Expensify because the San Francisco company didn't have the corpus of historical data necessary.

Expensify's customer inquiries are often esoteric, Barrett said. Even when customers' concerns map to common problems, their phrasing is unique and, therefore, hard to classify using a system that demands many training examples.

So, Barrett and his team developed their own approach. He didn't identify the specific types of algorithms their tool is based on, but he said it compares pieces of conversations to conversations that have proceeded successfully in the past. It doesn't need to classify queries as precisely as a neural network would, because it's focused more on moving the conversation along a path than on delivering the right response to a given query. This gives the bot a chance to ask clarifying questions that reduce ambiguity.
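Barrett did not name the algorithms, so the following is not Expensify's method. It is a minimal Python sketch of the general idea under stated assumptions: a new message is compared with snippets from past successful conversations using bag-of-words cosine similarity, and when no snippet is similar enough, the bot asks a clarifying question instead of forcing a classification. The snippets, the 0.4 threshold and `next_step` are hypothetical.

```python
from collections import Counter
import math

# Hypothetical illustration only; not Expensify's actual algorithm.
# Snippets from past conversations that ended successfully, each paired
# with the next step that moved that conversation forward.
PAST_SUCCESSES = [
    ("my receipt did not scan", "Could you tell me which app version you are using?"),
    ("how do i submit a report", "Open the report and tap Submit."),
    ("card charge is missing", "Which card and roughly what date was the charge?"),
]

CLARIFY = "Could you tell me a bit more about what you are trying to do?"


def vectorize(text):
    """Bag-of-words term counts (a deliberately simple stand-in for real features)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def next_step(message, threshold=0.4):
    """Return the next move from the most similar past conversation,
    or a clarifying question when nothing is similar enough."""
    query = vectorize(message)
    best_score, best_reply = max(
        (cosine(query, vectorize(snippet)), reply) for snippet, reply in PAST_SUCCESSES
    )
    if best_score < threshold:
        return CLARIFY
    return best_reply
```

For example, `next_step("the receipt did not scan properly")` returns the reply paired with the receipt snippet, while `next_step("hello there")` matches nothing and falls back to the clarifying question.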

"The challenge of AI is it's built to answer perfectly formed questions," Barrett said. "The challenge of the real world is different."

A 'broad church' of algorithms is needed in AI

Part of the reason for the enthusiasm around neural network technology is that many people are just finding out about it, said Zoubin Ghahramani, chief scientist at Uber. But for those who have known about and used it for years, the limitations of neural networks are well known.

That doesn't mean it's time for people to ignore neural networks, however. Instead, Ghahramani said it comes down to using the right tool for the right job. He described an approach to incorporating Bayesian inference into machine learning models. In Bayesian inference, the estimated probability of something occurring is updated as more evidence becomes available.
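To make the Bayesian-inference idea concrete, here is a minimal Python sketch of repeated Bayes-rule updates. The coin-flip setting, the two hypotheses and their probabilities, and the function names are illustrative assumptions, not Ghahramani's or Uber's approach.

```python
# Probability of heads under each hypothesis (illustrative numbers).
P_HEADS = {"fair": 0.5, "biased": 0.8}


def bayes_update(prior, likelihood):
    """Posterior P(H | E) is proportional to P(E | H) * P(H), normalized over all H."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}


def observe(prior, flip):
    """Update beliefs after seeing one flip, 'H' or 'T'."""
    likelihood = {h: P_HEADS[h] if flip == "H" else 1 - P_HEADS[h] for h in prior}
    return bayes_update(prior, likelihood)


# Start undecided, then fold in evidence one observation at a time.
belief = {"fair": 0.5, "biased": 0.5}
for flip in "HHT":
    belief = observe(belief, flip)
    print(flip, {h: round(p, 3) for h, p in belief.items()})
```

Each flip shifts belief toward whichever hypothesis explains it better: after two heads the biased hypothesis leads, and a tail pulls the two back close to even.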

"To have successful AI applications that solve challenging real-world problems, you have to have a broad church of methods," he said in a press conference. "You can't come in with one hammer trying to solve all problems."

Another alternative to neural network technology is deep reinforcement learning, which is optimized to achieve a goal over many steps by incentivizing effective steps and penalizing unfavorable steps. The AlphaGo program, which beat human champions at the game Go, used a combination of neural networks and deep reinforcement learning to learn the game.

Deep reinforcement learning algorithms essentially learn through trial and error, whereas neural networks learn from examples. This means deep reinforcement learning requires less labeled training data upfront.
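The trial-and-error loop can be illustrated with a minimal sketch. It uses plain tabular Q-learning rather than deep reinforcement learning: a lookup table stands in for the neural network that a system like AlphaGo would use, and the five-state corridor, reward values and hyperparameters are invented for illustration. No labeled examples are supplied; the agent only receives a reward for reaching the goal and a small penalty for every other step.

```python
import random

N_STATES = 5          # states 0..4 along a corridor; state 4 is the goal
ACTIONS = (-1, +1)    # step left or step right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2  # learning rate, discount, exploration rate


def step(state, action):
    """Move along the corridor; reward reaching the goal, lightly penalize every other step."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    if nxt == N_STATES - 1:
        return nxt, 1.0, True
    return nxt, -0.01, False


def train(episodes=500, seed=0):
    """Tabular Q-learning: learn action values purely from rewards."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit current estimates, sometimes explore.
            if rng.random() < EPSILON:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            nxt, reward, done = step(state, action)
            # Target: immediate reward plus discounted best value of the next state.
            target = reward if done else reward + GAMMA * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += ALPHA * (target - q[(state, action)])
            state = nxt
    return q


q = train()
# Greedy action in each non-goal state after training.
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
```

After training, `policy` should point right (+1) in every non-goal state, a route the agent discovered from rewards and penalties alone.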

Kathryn Hume, vice president of product and strategy at Integrate.ai Inc., a Toronto-based software company that helps enterprises integrate AI into existing business processes, said any type of model that reduces the reliance on labeled training data is important. She mentioned Bayesian parametric models, which assess the probability of an occurrence based on existing data rather than requiring a minimum threshold of prior examples. That requirement is one of the primary limitations of neural networks.
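As a rough illustration of how a Bayesian parametric model can produce an estimate from very little data, the sketch below uses a Beta-Bernoulli model. The Beta(2, 2) prior, the three observed outcomes and the function names are assumptions chosen for the example, not anything Hume or Integrate.ai described.

```python
from fractions import Fraction


def beta_bernoulli_posterior(alpha, beta, outcomes):
    """Conjugate update: a Beta(alpha, beta) prior plus 0/1 outcomes gives a Beta posterior."""
    successes = sum(outcomes)
    failures = len(outcomes) - successes
    return alpha + successes, beta + failures


def posterior_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution, kept exact with Fraction."""
    return Fraction(alpha, alpha + beta)


# Hypothetical data: a weak prior centered on 0.5, then only three observed outcomes.
a, b = beta_bernoulli_posterior(2, 2, [1, 1, 1])
estimate = posterior_mean(a, b)   # Fraction(5, 7), about 0.714
```

With three successes in three tries, the raw frequency would be 1.0; the prior tempers that to 5/7, and the estimate sharpens as more outcomes arrive.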

"We need not rely on just throwing a bunch of information into a pot," she said. "It can move us away from the reliance on labeled training data when we can infer the structure of data," rather than using algorithms like neural networks, which require millions or billions of labeled training examples before they can make predictions.