How to solve deep learning challenges through interoperability

The challenges of training and overseeing advanced neural networks are leading to an implementation bottleneck in deep learning technology.

AI, machine learning and deep learning are terms heard in every industry today, but they're not synonymous. AI aims to get human-level performance out of a computer, and machine learning consists of the methods by which that performance is achieved. Deep learning goes much further than mimicking human performance: it's about performing actions that lie beyond the realm of human possibility.

These actions include high-end facial recognition, advanced language translation, real-time high-resolution vision systems for driverless vehicles and accelerated research in genetics and pharmaceuticals. They are all post-algorithmic: they can't be reduced to discrete steps, and they require deep learning solutions that can't be written up neatly in code.

However, deep learning faces challenges that go beyond those of conventional AI and machine learning techniques, and those challenges are creating an implementation bottleneck.

The problems of deep learning

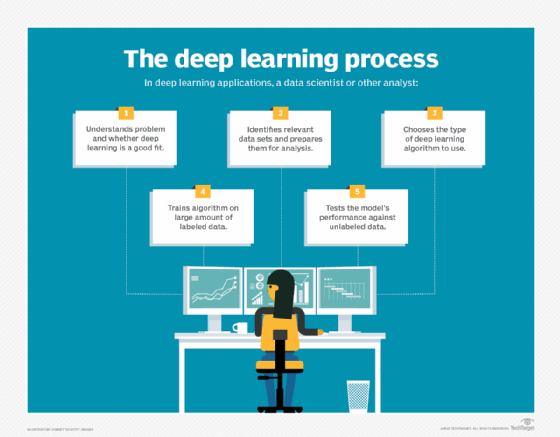

The mechanism that makes deep learning work, the neural network, is loosely modeled on the human brain. It consists of interconnected nodes whose connections adjust themselves to fine-tune outputs as new inputs are presented, much as neurons do in the brain. Over time, the system teaches itself, becoming more accurate and resilient.
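To make the idea of self-adjusting nodes concrete, here is a minimal sketch of a single artificial node (equivalent to logistic regression) learning the logical OR function by gradient descent. It is an illustrative assumption, not how any particular deep learning product works: real networks stack many layers of such nodes and train them with backpropagation, but the core loop of comparing an output with a target and nudging the weights is the same. The names `train_neuron`, `predict` and `OR_SAMPLES` are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], float]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights: List[float], bias: float, inputs: Sequence[float]) -> float:
    """Weighted sum of inputs plus bias, squashed into (0, 1)."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train_neuron(samples: List[Sample], epochs: int = 5000, lr: float = 0.5,
                 seed: int = 0) -> Tuple[List[float], float]:
    rng = random.Random(seed)
    weights = [rng.uniform(-1.0, 1.0) for _ in samples[0][0]]  # random starting point
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            # For a sigmoid output with cross-entropy loss, d(loss)/d(z) = output - target.
            error = predict(weights, bias, inputs) - target
            # Nudge each weight against the gradient, in proportion to its input.
            weights = [w - lr * error * x for w, x in zip(weights, inputs)]
            bias -= lr * error
    return weights, bias


OR_SAMPLES: List[Sample] = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias = train_neuron(OR_SAMPLES)
for inputs, target in OR_SAMPLES:
    print(inputs, "target:", target, "learned:", round(predict(weights, bias, inputs)))
```

After training, rounding the node's output reproduces the OR truth table. Nothing in the code spells out the rule "output 1 if either input is 1"; the rule exists only implicitly in the learned weights, which is a small-scale version of the black-box problem.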

However, this level of advanced modeling creates several deep learning challenges. A major one is that, while a deep learning neural net can solve problems that can't be solved algorithmically, it's not always clear what the network has learned or how it is solving the problem. It's a black-box solution, which makes the model hard to tweak, use and understand.

The issues grow in scope when you consider that neural net systems are largely vendor-specific. No industry standard exists for such systems, and a deep learning system can be based on many different neural net models, from feedforward networks to Markov chains. Similarly, vendors building systems on implementations of these networks have worked independently, each with its own interpretation of best practices.

Because of this, enterprises that want to invest in a deep learning solution are effectively married to both the methodology and the vendor, because neither is likely to be compatible with any other.

Achieving interoperability

For deep learning to grow rapidly in enterprise use, neural net systems need to be interoperable. When the core technology that individual vendors develop converges toward compatibility, the risk of adopting any one system will drop, and enterprise investment in multiple deep learning systems for different applications will become practical and economical.

While such a standard doesn't yet exist, it's being meaningfully pursued. The Open Neural Network Exchange (ONNX), introduced by Facebook and Microsoft in late 2017, is an open ecosystem for deep learning models that makes it easier to switch between frameworks: a model trained in one framework can be exported and then run or optimized with tools from another. That kind of interoperability reduces the risk of enterprise investment in the technology.

As an open source project, ONNX defines an extensible computation graph model that organizes dataflow into graphs of nodes, each with inputs and outputs, along with definitions of built-in operators and standard data types. Though still in its early stages, ONNX is the kind of initiative that helps address deep learning challenges, ultimately making the technology safer for enterprise use.
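The graph idea can be illustrated with a toy, ONNX-inspired sketch. Everything here is hypothetical: the JSON layout, the `export_graph` and `run_graph` functions and the tiny operator table are not the real ONNX API or file format (ONNX itself uses Protocol Buffers and a much larger operator set). The sketch only shows the principle: one "framework" describes a model as a graph of nodes with named inputs and outputs, operators and declared data types, and a second, independent runtime executes that description without knowing anything about the code that produced it.

```python
import json
from typing import Callable, Dict, List

# Hypothetical, ONNX-inspired format for illustration only; NOT the real ONNX API or file format.
STANDARD_TYPES = {"float32", "int64"}  # small set of shared element types

# Shared operator vocabulary: both sides agree on what each op_type means.
OPERATORS: Dict[str, Callable[..., List[float]]] = {
    "Mul": lambda a, b: [x * y for x, y in zip(a, b)],
    "Add": lambda a, b: [x + y for x, y in zip(a, b)],
    "Relu": lambda a: [max(0.0, x) for x in a],
}


def export_graph() -> str:
    """Producer ("framework A"): describe y = relu(x * w + b) as a neutral graph."""
    graph = {
        "inputs": [{"name": "x", "type": "float32"}],
        "initializers": {"w": [2.0, -1.0], "b": [0.5, 0.5]},  # trained weights
        "nodes": [
            {"op_type": "Mul", "inputs": ["x", "w"], "outputs": ["xw"]},
            {"op_type": "Add", "inputs": ["xw", "b"], "outputs": ["z"]},
            {"op_type": "Relu", "inputs": ["z"], "outputs": ["y"]},
        ],
        "outputs": [{"name": "y", "type": "float32"}],
    }
    return json.dumps(graph)


def run_graph(serialized: str, feeds: Dict[str, List[float]]) -> Dict[str, List[float]]:
    """Consumer ("framework B"): execute any graph written in the shared format."""
    graph = json.loads(serialized)
    for tensor in graph["inputs"] + graph["outputs"]:
        if tensor["type"] not in STANDARD_TYPES:
            raise ValueError(f"unsupported data type: {tensor['type']}")
    values: Dict[str, List[float]] = dict(graph["initializers"])
    values.update(feeds)
    # Assumes nodes are listed in dependency (topological) order.
    for node in graph["nodes"]:
        op = OPERATORS[node["op_type"]]
        values[node["outputs"][0]] = op(*(values[name] for name in node["inputs"]))
    return {tensor["name"]: values[tensor["name"]] for tensor in graph["outputs"]}


print(run_graph(export_graph(), {"x": [1.0, 3.0]}))  # → {'y': [2.5, 0.0]}
```

Because the consumer depends only on the shared description, either side could be swapped out without touching the other; that decoupling is what a real interchange standard aims to provide at scale.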

Facing future threats

The fact that neural networks are, in many cases, black boxes is not only a business risk but can also be a physical danger. When a self-driving Uber car struck and killed a woman in Arizona in March 2018, representatives said the cause was a human modification to the braking system, with no fault attributed to the car's AI. But failures of self-driving vehicles that rely on deep learning can't always be explained that easily.

Chipmaker NVIDIA produced an experimental car driven by a neural network that effectively wrote its own instructions: no engineer or programmer told it how to drive. Instead, the car learned its own driving behavior simply by watching a human drive. In this example, no data scientist can fully explain the car's decision-making process, and it's possible for the vehicle to decide to crash into a tree for reasons that make sense only to itself.

Self-driving cars built on neural networks raise a host of questions: How can disastrous performance be anticipated before it occurs? What are the legal implications? Can such systems ever improve without making mistakes, which are an integral part of how they learn, and what will the cost of those mistakes turn out to be?

Interoperability can help answer these questions. If deep learning systems, and in particular the neural nets within them, become interoperable, it becomes possible to build systems whose task is to learn about other systems. If the behavior of the nodes in one deep learning system becomes an object of study for another, then its decision-making process becomes discoverable. Once interoperable, deep learning systems can monitor one another and help keep each other accountable.
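One way to picture a system that learns about another system is a perturbation-based probe: if every model exposed the same prediction interface, an auditing tool could nudge each input and measure how much the output moves, revealing which features actually drive a decision. The sketch below assumes such a shared interface (the hypothetical `InteroperableModel` protocol) and a hypothetical stand-in model, `OpaqueBrakingModel`. Finite-difference sensitivity is only one simple probing technique, not a complete accountability system.

```python
from typing import List, Protocol


class InteroperableModel(Protocol):
    """Hypothetical common interface that every participating system would expose."""

    def predict(self, features: List[float]) -> float: ...


def sensitivity_report(model: InteroperableModel, baseline: List[float],
                       delta: float = 1e-3) -> List[float]:
    """Estimate d(output)/d(feature) at `baseline` using central finite differences."""
    scores = []
    for i in range(len(baseline)):
        up = list(baseline)
        down = list(baseline)
        up[i] += delta
        down[i] -= delta
        scores.append((model.predict(up) - model.predict(down)) / (2 * delta))
    return scores


class OpaqueBrakingModel:
    """Hypothetical stand-in for a third-party model whose internals the auditor cannot read."""

    def predict(self, features: List[float]) -> float:
        speed, distance, rain = features
        # The auditor never sees this line; it only observes inputs and outputs.
        return 0.8 * speed - 1.5 * distance + 0.0 * rain


scores = sensitivity_report(OpaqueBrakingModel(), baseline=[10.0, 20.0, 1.0])
for name, score in zip(["speed", "distance", "rain"], scores):
    print(f"{name}: {score:+.3f}")
```

Here the probe would report that the stand-in model ignores the rain feature entirely, the kind of finding an auditor might flag for review. Real networks are nonlinear, so such scores describe behavior only near the chosen baseline input.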

The allure of deep learning, and the rewards of mastering it and applying it creatively and productively, will prevail. But they shouldn't blind the industry to its dangers or hamper the uptake of wise and universally beneficial initiatives.