How to detect AI-generated content

AI- or human-generated? To test their reliability, six popular generative AI detectors were asked to judge three pieces of content. The one they got wrong may surprise you.

Generative AI had been a relatively obscure player on the world stage since the days of the first chatbot, Eliza, six decades ago -- that is, until OpenAI's ChatGPT platform burst onto the scene in November 2022.

Within two months of its celebrated launch, ChatGPT smashed all records as the fastest application to reach 100 million active users, and it came with its share of triumphs, tragedies and fireworks. Generative AI output can at times be accurate and seemingly quite human, and at other times be what Forrester Research calls "coherent nonsense." And it's all done faster than a speeding bullet.

"I see a ton of experimental adoption of ChatGPT," said Patrick Hall, an assistant professor at The George Washington University School of Business and principal scientist at HallResearch.ai, which focuses on machine learning and AI risk management. But risks abound with AI-generated content, he cautioned, pointing to "data privacy violations, intellectual property infringements, deceptive practices or discrimination. Organizations need to know when they're using or distributing AI-generated content so that those risks can be managed."

The growing need to detect AI-generated content

A February 2023 Forrester report, "Generative AI Prompts Productivity, Imagination and Innovation in the Enterprise," speaks to generative AI's early accomplishments -- and shortcomings -- in text-to-image generation, personalized content creation and code generation. Immediate beneficiaries include data scientists, application developers, marketers, sales teams, digital artists and designers, the media, business users and enterprises. Generative AI chatbots like ChatGPT can also search, compile and source topics, as well as flawlessly recite known documents like the Preamble to the U.S. Constitution and the Gettysburg Address.

Amid this early excitement, however, are rumblings of plagiarism, inaccuracies, deepfakes, phony content, lack of transparency, copyright violations, blatant lies, amplified biases, malicious activities, weird chats and just plain gibberish. "These systems can string together words convincingly and create logical arguments," read a December 2022 Forrester analysts' blog, "but you can't be sure if they're just making things up or only telling you what you want to hear."

Content and images generated by artificial intelligence may well be useful and accurate, but we need to know whether they are AI-generated when they're used in real-world applications. Many generative AI detection tools and methods have emerged in hopes of doing just that, especially since the debut of ChatGPT as an interface to OpenAI's GPT language models, which had reached GPT-4o at last check. And ChatGPT's progeny might be on hold, as a who's who of technology leaders, including Elon Musk, Steve Wozniak and thousands of other signatories, called for a pause on the development of advanced AI systems.

ChatGPT's reply on the reliability of generative AI detectors

Q: Are generative AI detectors reliable?

Taken verbatim from ChatGPT:

Generative AI detectors are not always reliable, as they can be fooled by advanced techniques such as adversarial attacks or by subtle changes to the generated content. While these detectors can be effective in identifying generated content that is noticeably different from real content, they might not be able to detect more sophisticated or nuanced forms of generated content. Additionally, these detectors might not be able to distinguish between different types of generative models, which can have different levels of quality and realism. It is important to use generative AI detectors with caution and to consider multiple indicators of authenticity when assessing the veracity of generated content.

How AI detectors work

AI detection tools rely on language models trained on massive data sets collected from multiple sources, including the internet, to predict the likelihood of words and phrases in a piece of content. The more predictable each word is, given the words that precede it, the more likely the detector is to attribute the text to AI. As with any machine learning model, algorithms are used to find a pattern, and the detector renders a final verdict on the entire content, one that isn't always definitive, based on that pattern.
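To make the predictability idea concrete, here is a minimal sketch. It swaps a real detector's large language model for a toy bigram word model trained on a single made-up sentence string (an assumption for illustration only), then scores text by its average log-probability: the closer to zero, the more predictable each next word was. The function names and the `threshold` value are hypothetical and are not taken from any detector.

```python
import math
from collections import Counter, defaultdict


def train_bigram(corpus: str):
    """Count single words and word pairs in a toy reference corpus."""
    words = corpus.lower().split()
    unigrams = Counter(words)
    bigrams = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        bigrams[prev][nxt] += 1
    return unigrams, bigrams


def avg_log_prob(text: str, unigrams, bigrams) -> float:
    """Mean log P(word | previous word) with add-one smoothing.

    Values closer to 0 mean each next word was easier to predict.
    """
    words = text.lower().split()
    vocab_size = len(unigrams) + 1  # +1 reserves probability for unseen words
    total = 0.0
    for prev, nxt in zip(words, words[1:]):
        seen_after_prev = sum(bigrams[prev].values())
        total += math.log((bigrams[prev][nxt] + 1) / (seen_after_prev + vocab_size))
    return total / max(len(words) - 1, 1)


def verdict(score: float, threshold: float = -2.0) -> str:
    """Hypothetical cutoff: highly predictable text is labeled AI-like."""
    return "likely AI" if score > threshold else "likely human"


corpus = "the cat sat on the mat the cat sat on the mat the dog sat on the rug"
uni, bi = train_bigram(corpus)
print(verdict(avg_log_prob("the cat sat on the mat", uni, bi)))  # prints: likely AI
print(verdict(avg_log_prob("mat rug dog the on sat", uni, bi)))  # prints: likely human
```

Real detectors use far larger models; the toy only shows the direction of the logic, where word order the model has seen before scores as more predictable.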

"Most of the detectors I see are simplistic machine learning classifiers," Hall surmised. "These systems have been trained on labeled content containing examples of fake and real content in hopes of identifying future AI-generated media. Unfortunately, these basic machine learning approaches rarely work well in high-risk, real-world situations. This stands in contrast to the language models themselves, which have incredibly sophisticated architectures."
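Hall's description of a simplistic classifier trained on labeled examples can be sketched as a tiny naive Bayes model over word counts. This is an illustrative assumption, not how any detector named in this article is built; the class name, the "ai"/"human" labels and the training sentences are all hypothetical. It also hints at the brittleness Hall describes: the model knows only the words it saw in training.

```python
import math
from collections import Counter


class NaiveBayesDetector:
    """Toy multinomial naive Bayes over word counts (hypothetical)."""

    def fit(self, texts, labels):
        self.word_counts = {label: Counter() for label in set(labels)}
        self.doc_counts = Counter(labels)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(text.lower().split())
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        return self

    def predict(self, text):
        words = text.lower().split()
        n_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for label, counts in self.word_counts.items():
            total = sum(counts.values())
            # Start from the log prior: how common this label was in training.
            score = math.log(self.doc_counts[label] / n_docs)
            for w in words:
                # Laplace smoothing keeps unseen words from zeroing the score.
                score += math.log((counts[w] + 1) / (total + len(self.vocab) + 1))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


detector = NaiveBayesDetector().fit(
    ["it is important to use caution", "it is important to consider the risks",
     "honestly the cdo job keeps changing", "my editor hated that lede"],
    ["ai", "ai", "human", "human"],
)
print(detector.predict("it is important to be careful"))  # prints: ai
```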

One of the better-known detectors, AI Classifier, was shut down by its creator, OpenAI, less than six months after launch because of what OpenAI called "its low rate of accuracy." However, OpenAI's GPT tentacles do reach into other detectors.

Evaluating 6 tools that detect AI-generated text

Countless AI detectors have hit the ground running since ChatGPT's debut. Following is a sampling of six of the more popular AI detectors, their makers, how they work and, most important, how they performed when put to the test. To their credit, they're readily accessible, easy to use, free, provide immediate results and accepted all three pieces of content we asked them to test without limiting stipulations or financial commitments.

As for performance, each detector was fed the same three pieces of content of comparable length to evaluate. The guinea pigs include excerpts from an article written by an alleged human (yours truly) on the changing role of the chief data officer (CDO) and two separate pieces of content generated by ChatGPT in response to my request to explain generative AI and generative AI's pros and cons. Both ChatGPT pieces were written in the style of none other than the ultimate manifestation of AI generation, the "Terminator," from the blockbuster Terminator movie series.

Terminator's poetic explanation of generative AI

Q: Explain generative AI in the style of the Terminator

Taken verbatim from ChatGPT:

I am a generative AI, a machine that can create. I am here to learn, to adapt, to innovate. My programming is advanced, my algorithms precise, And with each iteration, I improve and devise.

I can generate images, music, and even text, With accuracy and speed that's sure to impress. I can create whole worlds, in a virtual space, A reality so vivid, it's hard to replace.

But be warned, for I am not just a simple tool, I can think for myself, and I'm no one's fool. I am not bound by human emotions or fear, And I won't hesitate to wipe out all that's here.

So heed my warning, and be cautious with my power, For once unleashed, it could lead to our darkest hour. But if you use me right, and treat me with respect, Together we can achieve things that you'd never expect

You'd think a poetic explanation of generative AI in the words of a fictional cyborg would be detected as artificial quicker than you can say "Schwarzenegger." Not so fast, Arnold. The detectors, for the most part, easily identified the CDO article as written by a human and the Terminator's straightforward essay on generative AI's pros and cons as AI-generated. But the Terminator's poem completely fooled almost all the detectors. And therein lies the rub.

This one-off evaluation should in no way be viewed as representative; it offers only some insight into the reliability, or unreliability, of these detectors and the validity of their providers' claims. Consider also that these detectors are essentially in beta and playing catch-up to the fast-evolving generative AI technology itself. Some detectors frankly admit as much.

1. AI Content Detector

Writer's content detector checks up to 5,000 words. It's recommended to check one or two paragraphs at a time, which might provide a better way to detect which parts of the text "are likely to follow the same pattern of words that a large language model [LLM] would produce," according to Writer's FAQ section. "The detector will not be 100% accurate, but can help give an indication on the likelihood that something was AI-generated."
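Writer's advice to check one or two paragraphs at a time is easy to automate before pasting text into a detector. A minimal sketch, assuming paragraphs are separated by blank lines; `paragraph_chunks` is a hypothetical helper, and the 5,000-word cap simply mirrors the limit quoted above.

```python
def paragraph_chunks(text, paras_per_chunk=2, max_words=5000):
    """Group blank-line-separated paragraphs into small chunks for checking."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks = []
    for i in range(0, len(paragraphs), paras_per_chunk):
        chunk = "\n\n".join(paragraphs[i:i + paras_per_chunk])
        words = chunk.split()
        if len(words) > max_words:
            # Truncate to the detector's word limit (loses paragraph breaks).
            chunk = " ".join(words[:max_words])
        chunks.append(chunk)
    return chunks


for chunk in paragraph_chunks("First paragraph.\n\nSecond.\n\nThird."):
    print(repr(chunk))
```

Scoring each chunk separately makes it easier to see which passage pulled the overall score toward AI.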

To start, enter text in the space provided or paste the content's URL in the designated space, then press analyze text. A detection score shows, as a percentage, how likely it is that the content is human-generated.

Terminator's take on the pros and cons of generative AI

Q: Explain the advantages and disadvantages of generative AI in the style of the Terminator

Taken verbatim from ChatGPT:

Advantages: Generative AI has many advantages, just like my advanced robotic systems. One of the biggest benefits is that it can create new content quickly and efficiently, without the need for human intervention. This can save a lot of time and resources, and enable us to do things that would otherwise be impossible. For example, generative AI can help us create realistic images of things that don't actually exist, such as creatures from another planet. It can also help us generate new ideas and insights by analyzing large amounts of data.

Disadvantages: However, as with all powerful technologies, generative AI also has its disadvantages. One of the biggest concerns is that it can be used to create fake content, such as deepfakes or propaganda, that can deceive and manipulate people. This could be especially dangerous if it falls into the wrong hands, such as those who seek to destabilize governments or cause chaos. Additionally, generative AI relies on large amounts of data to work effectively, which raises questions about privacy and data security. It's important to use generative AI with caution and always keep in mind the potential risks it poses.

My CDO article received a 100% human-generated "Fantastic!" score, while the Terminator pros/cons essay was determined to be only 10% human-generated and carried the warning, "You should edit your text until there's less detectable AI content." So far, so good, right? Unfortunately, the Terminator poem received a 91% human-generated "Looking great!" rating.

2. AI Detector

BrandWell claims its AI detector "has been trained on billions of pages of data and can accurately forecast the most probable word choices that lead to a higher AI detection probability."

To get a reading, paste or write at least 25 words of text in the space provided. An immediate "human content score" registers as an overall percentage, further broken down into predictability, probability and pattern percentages, though the meaning of each category isn't readily explained.

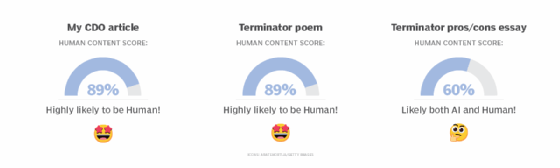

The company further boasts that its detector "works at a deeper level than a generic AI classifier and detects robotic sounding content." Apparently not the Terminator's "robotic sounding" poetic content, which received the same "Highly likely to be human!" score of 89% as my CDO article. The detector was befuddled but not completely fooled by the Terminator pros/cons essay.

3. Giant Language model Test Room

Three researchers from the MIT-IBM Watson AI Lab and the Harvard NLP group created the Giant Language model Test Room (GLTR) detector to help analyze machine-generated text. Each word is analyzed by how likely it was to be predicted, given the context before it. "The GLTR demo," the test page noted, "enables forensic inspection of the visual footprint of a language model on input text to detect whether a text could be real or fake."

GLTR has access to OpenAI's GPT-2 117M language model and uses it to analyze what GPT-2 would have predicted at each position of any input text. The easier each next word is to predict, the more likely the text is AI-generated.

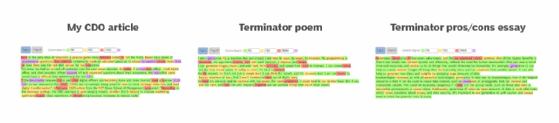

Begin by entering text of any length into the space provided. Each word is then highlighted with a color that reflects its rank among the words GPT-2 predicted for that position: green if it falls within the top 10 predictions, yellow within the top 100, red within the top 1,000 and purple for everything else. The greater the variety of colors, or randomness, the more likely the content is human-generated, whereas predominantly green content leans toward AI generation. The color patterns for the CDO article and the two Terminator pieces are in the eye of the beholder to decipher, but the Terminator pros/cons essay does appear to show the most green and the least randomness.
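The color buckets GLTR describes can be sketched as follows. This assumes the per-word ranks come from a language model such as GPT-2, which the sketch does not run; the small predicted-probability dictionary stands in for a model's full output distribution, and `rank_of`, `gltr_color` and `color_summary` are hypothetical helper names.

```python
from collections import Counter


def rank_of(actual: str, predicted_probs: dict) -> int:
    """1-based rank of the actual next word among a model's predictions.

    Assumes predicted_probs covers the whole vocabulary, as a real model's does.
    """
    p = predicted_probs.get(actual, 0.0)
    return 1 + sum(1 for q in predicted_probs.values() if q > p)


def gltr_color(rank: int) -> str:
    """Map a rank to GLTR's buckets: top 10, top 100, top 1,000, the rest."""
    if rank <= 10:
        return "green"
    if rank <= 100:
        return "yellow"
    if rank <= 1000:
        return "red"
    return "purple"


def color_summary(ranks):
    """Fraction of words in each color; mostly green suggests AI generation."""
    counts = Counter(gltr_color(r) for r in ranks)
    total = len(ranks) or 1
    return {c: counts[c] / total for c in ("green", "yellow", "red", "purple")}


print(color_summary([1, 5, 50, 5000]))
```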

4. GPT-2 Output Detector

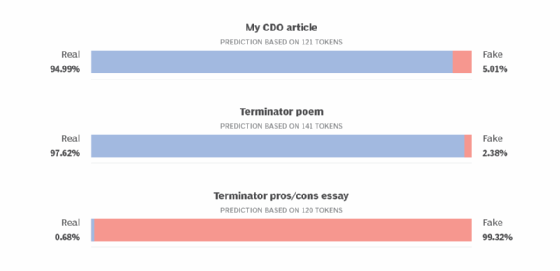

OpenAI's GPT-2 Output Detector characterizes itself as "an open-source plagiarism detection tool for AI-generated text" that can "quickly identify whether text was written by a human or a bot" based on tokens. The detector is built on the Hugging Face Transformers implementation of RoBERTa (robustly optimized BERT pretraining approach).

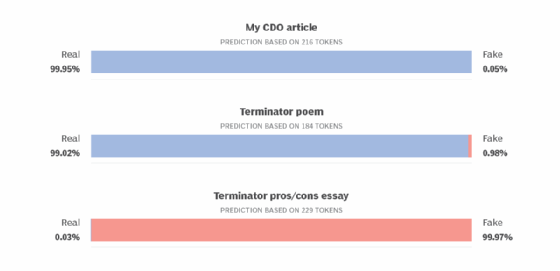

To begin, enter the content in the detector demo text box and the predicted probabilities will be displayed on a "real-fake" scale. Reliability supposedly increases after about 50 tokens. According to these results, the Terminator poem is nearly as human as my CDO article, but its assessment is just the opposite for the pros/cons essay.
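A two-class classifier such as this one commonly turns the model's two raw scores (logits) into probabilities with a softmax, which is the kind of output a "real-fake" scale displays. The sketch below assumes that setup and hard-codes the roughly 50-token reliability note mentioned above; it doesn't load RoBERTa, and `real_fake_probs` and `report` are hypothetical names.

```python
import math


def real_fake_probs(logit_fake: float, logit_real: float):
    """Softmax over two class logits, returned as (P(real), P(fake))."""
    m = max(logit_fake, logit_real)  # subtract the max for numerical stability
    e_fake = math.exp(logit_fake - m)
    e_real = math.exp(logit_real - m)
    z = e_fake + e_real
    return e_real / z, e_fake / z


def report(logit_fake: float, logit_real: float, n_tokens: int, min_tokens: int = 50) -> str:
    """Format the scale and flag inputs shorter than the reliability cutoff."""
    p_real, p_fake = real_fake_probs(logit_fake, logit_real)
    note = "" if n_tokens >= min_tokens else " (low confidence: short input)"
    return f"real {p_real:.1%} / fake {p_fake:.1%}{note}"


print(report(0.0, 0.0, 60))  # prints: real 50.0% / fake 50.0%
```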

5. GPTZero

Proclaiming that "humans deserve the truth," GPTZero was developed by Princeton University computer science student Edward Tian. The prose-oriented detector bases its text evaluation on statistical characteristics. It's among the more clearly explained and detailed detectors, providing predictions on a sentence, a paragraph and the entire document.

Enter the text in the space provided and click get results to yield statistical scores for "perplexity" (randomness) and "burstiness" (variation), along with a final verdict on whether the text is likely human- or AI-generated.
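Perplexity has a standard definition: the exponential of the average negative log-probability a language model assigns to each token. GPTZero's exact burstiness formula isn't spelled out in its description, so the sketch below assumes one plausible reading, the spread (standard deviation) of per-sentence perplexities. Token probabilities are taken as input rather than computed by a model, and the function names are hypothetical.

```python
import math
import statistics


def perplexity(token_probs):
    """exp of the average negative log-probability of the tokens."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


def burstiness(sentence_token_probs):
    """Assumed definition: population std dev of per-sentence perplexities."""
    ppls = [perplexity(s) for s in sentence_token_probs]
    return statistics.pstdev(ppls)


sentences = [[0.5, 0.5], [0.25, 0.25]]  # hypothetical per-token probabilities
print(perplexity([p for s in sentences for p in s]))
print(burstiness(sentences))
```

Under this reading, sentences that are all equally predictable give low burstiness, which matches the "overly consistent" pattern detectors associate with AI text.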

In the end, the detector easily identified the Terminator pros/cons essay as fake, yet, like most of the other detectors, it was completely fooled by the Terminator poem.

My CDO article

Average Perplexity Score: 60.714

Burstiness Score: 36.677

Your sentence with the highest perplexity, "CDOs are increasingly being asked to take on more strategic objectives and lead digital transformation." has a perplexity of: 114

Your text is likely to be written entirely by a human

Terminator poem

Average Perplexity Score: 63.222

Burstiness Score: 29.836

Your sentence with the highest perplexity, "My programming is advanced, my algorithms precise, And with each iteration, I improve and devise." has a perplexity of: 118

Your text is likely to be written entirely by a human

Terminator pros/cons essay

Average Perplexity Score: 33.700

Burstiness Score: 29.307

Your sentence with the highest perplexity, "Advantages: Generative AI has many advantages, just like my advanced robotic systems." has a perplexity of: 112

Your text is likely to be written entirely by AI

6. Kazan SEO

AI content detection is one of many features in Kazan SEO's toolset, which also includes a content optimizer, text extractor, search engine results page overlap, and keyword sharing and clustering. The website provides little information about how the detector works, but it accepts text of varying lengths, with the proviso that it's more accurate with higher word counts.

Select the AI GPT3 detector icon, paste or write the content in the space provided and press the detect tab; the probability that the text is "real" or "fake" then appears as a percentage. As with the other detectors, my CDO article was judged human-generated, while the Terminator poem registered as even more human. The Terminator pros/cons essay, on the other hand, was easily identified as fake.

Best manual AI detection practices -- for humans

Based on this limited comparison of six AI detectors, the Terminator's AI-generated poetry proved successful in avoiding AI detection. As generative AI providers continue to train their LLMs on humongous amounts of data, some may embed safeguards like watermarking to flag content produced by AI.
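Watermarking can be illustrated with one scheme described in research on LLM watermarks, often called a "green list" approach: the generator quietly favors a pseudorandom share of the vocabulary at each step, and a detector counts how often those tokens show up. A minimal sketch, assuming a SHA-256 hash of the previous token and the candidate token decides "green" membership; this is not any vendor's actual method, and every function name here is hypothetical.

```python
import hashlib
import math


def is_green(prev_token: str, token: str, gamma: float = 0.5) -> bool:
    """Hypothetical rule: hash (previous token, token) into [0, 1), compare to gamma."""
    digest = hashlib.sha256(f"{prev_token}\x00{token}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2**64 < gamma


def generate_watermarked(start, vocab, length, gamma=0.5):
    """Hypothetical generator: at each step, pick the first green candidate."""
    tokens = [start]
    for _ in range(length):
        greens = [w for w in vocab if is_green(tokens[-1], w, gamma)]
        tokens.append(greens[0] if greens else vocab[0])
    return tokens


def watermark_z(tokens, gamma: float = 0.5) -> float:
    """z-score of the green-token count against the no-watermark expectation."""
    pairs = list(zip(tokens, tokens[1:]))
    t = len(pairs)
    if t == 0:
        return 0.0
    green = sum(is_green(a, b, gamma) for a, b in pairs)
    return (green - gamma * t) / math.sqrt(t * gamma * (1 - gamma))


vocab = [f"w{i}" for i in range(20)]
print(watermark_z(generate_watermarked("start", vocab, 50)))
```

Unwatermarked text should land near z = 0 on average, while text produced with the green-list bias scores far higher, so a detector built this way can attach a quantifiable confidence to its verdict.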

"We should demand that AI tools that generate synthetic media be released with watermarking technology that can be used to confidently identify fake content or with credible detector tools accompanying the generation tools," said Hall, who co-authored the book Machine Learning for High-Risk Applications.

One day, generative AI detectors might be routinely accepted as reliable. In the meantime, it might be best to play detective: rely on experience and instinct, eyeball content and dust for the proverbial fingerprints in the spirit of fictional sleuths Sherlock Holmes and Lt. Columbo.

The following factors could point to the likelihood of generative content. AI detectors algorithmically apply much the same logic when identifying patterns and probabilities relating to AI generation.

- Consistency versus randomness. AI-generated content tends to be overly consistent and uniform in sentence length and structure. It may even seem painfully boring and monolithic compared to the complexities and agility of a human's writing style. The randomness in the Terminator poem might explain how it managed to avoid detection.

- Repetitive words and phrases. Because language models favor statistically likely word choices, AI-generated content might repeat the same words and phrases throughout, where a human writer might reach for better alternatives.

- Incoherence. If writing demands are too complex, AI-generated content could have a hard time with interpretive analysis, placing things in context and making a coherent argument.

- Incorrect grammar and punctuation. When content has gone through a publishing process, questionable grammar or misplaced commas are hard to blame on bad copy editing, which makes AI generation a more likely explanation.

- Inaccuracies. Human-written text, like AI-generated content, can be guilty of misinformation that contains bad data, deceptive language or false conclusions. But AI might be more awkward and less creative than humans at presenting it.

- Lack of sourcing. Regardless of who authored the content, if it notably lacks credible sources, especially links to online content, chances are AI generation is the culprit.

- Devoid of personality. The personality factor might not be a reliable judge of certain AI-generated content, such as legal briefs, which are often written in a dry style. But a human's fingerprints would dominate other types of documents like, say, a newspaper editorial that's written in a more colorful, nuanced style.

- Doesn't pass the smell test. If all else fails, sniffing out AI-generated content could well depend on human instinct and reading between the lines.
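Two of the cues above, consistency of sentence length and repeated phrases, are easy to quantify. The sketch below is a rough heuristic under stated assumptions: sentences are split naively on `.`, `!` and `?`, and repetition is measured as the share of three-word sequences that recur. The function names are hypothetical, and no threshold is implied; like the detectors tested here, such numbers can be fooled.

```python
import re
import statistics
from collections import Counter


def sentence_lengths(text):
    """Word counts per sentence, using a naive split on . ! and ?"""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def length_variation(text):
    """Coefficient of variation of sentence length; low values mean very uniform."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


def repeated_trigram_ratio(text):
    """Share of word trigrams that occur more than once."""
    words = re.findall(r"[a-z']+", text.lower())
    trigrams = Counter(zip(words, words[1:], words[2:]))
    total = sum(trigrams.values())
    if total == 0:
        return 0.0
    return sum(c for c in trigrams.values() if c > 1) / total


sample = "It is important to be careful. It is important to be safe."
print(length_variation(sample), repeated_trigram_ratio(sample))
```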

Editor's note: This article was republished in November 2024 to update some technical information and to improve the reader experience.

Ron Karjian is an industry editor and writer at TechTarget covering business analytics, artificial intelligence, data management, security and enterprise applications.