How has generative AI affected cybersecurity?

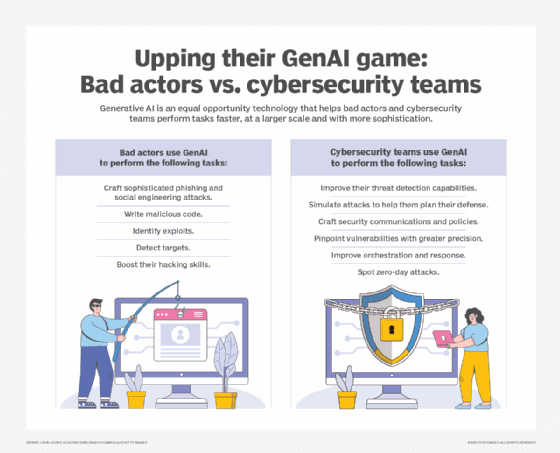

Generative AI helps security teams defend against threats, but it also helps bad actors infiltrate organizations. Learn how each side is tapping into GenAI to gain an edge.

Generative AI is proving to be a game changer in cybersecurity, enabling both bad actors and defenders to operate faster, at a higher level and at a larger scale.

For example, hackers are using GenAI to craft more sophisticated phishing campaigns and develop code that's more likely to evade detection.

On the other side, enterprise security teams are using GenAI to more accurately identify vulnerabilities and boost their abilities to spot zero-day attacks.

"Generative AI is useful for both good actors and the bad actors, and right now we see both sides of the aisle are innovating with GenAI," said Joseph Nwankpa, director of cybersecurity initiatives and an associate professor of information systems and analytics at Miami University's Farmer School of Business.

The intersection of generative AI and cybersecurity

The "Voice of SecOps 5th Edition 2024" report from cybersecurity company Deep Instinct draws on a Sapio Research survey of 500 senior cybersecurity experts from companies with 1,000-plus employees in the U.S. The report found that 97% of security professionals worry their organization will fall victim to an AI-generated cybersecurity incident, and 33% of respondents said they view adversarial AI as a major or critical threat to their organization.

In response, cybersecurity teams are looking to GenAI tools to sharpen their defenses.

Cybersecurity tech company CrowdStrike and ViB Research surveyed 1,000-plus cybersecurity professionals for the "2024 State of AI in Cybersecurity Survey." The survey, conducted in June and July, found that 64% of respondents were either researching GenAI tools or had already purchased them, while 69% of those looking for a tool said they intended to make a purchase in the next 12 months.

Examples of how hackers use GenAI

Some high-profile incidents show how hackers are using GenAI to launch successful attacks.

In one notable incident in early 2024, fraudsters used a deepfake of a multinational firm's CFO requesting funds to convince a finance worker at the company to pay out $25 million.

That's the tip of the iceberg, according to Nwankpa and others, who said bad actors are using GenAI for the following tasks.

Crafting sophisticated phishing and social engineering attacks

The same GenAI tools that help workers draft smooth-flowing text, such as ChatGPT, also help hackers create emails that match the quality of actual corporate communications. With near-flawless language and logos, these messages are more likely to convince targets that they are legitimate and not a phishing attack.

"The text and the images and the overall messages are a lot better because of GenAI," said Ken Frantz, a managing director at assurance and advisory firm BPM.

Hackers can also use GenAI to write phishing emails in languages that are less universally spoken or harder to master than English, experts said. This lets them target a larger portion of the globe than before GenAI became a widely available technology.

And, according to Tony Velleca, CEO at UST subsidiary CyberProof and chief information security officer at UST, hackers are using GenAI to launch social engineering campaigns beyond just phishing emails.

Writing malicious code

Developers everywhere can use GenAI to write computer code, and threat actors are no exception.

They can instruct GenAI with the right prompts to write new malicious code or tweak existing malware so that it's more effective at evading detection or more likely to succeed at achieving its goal, Nwankpa said. They can also use it to more easily and quickly create malware tailored to its target, upping their chances of success.

This, Velleca and others said, essentially equates to a growing volume of zero-day-type attacks.

Identifying exploits

GenAI can help threat actors identify vulnerabilities that they can exploit -- and at a speed and scale they couldn't before.

"Using generative AI, they're able to really analyze a particular system or software so they can tailor their attacks and launch more sophisticated attacks," Nwankpa said.

Detecting targets

Similarly, Frantz said hackers use GenAI for research "on what has value, to understand organizations and to create target lists. Then asking GenAI, 'With this information and based on what I'm trying to access, what is the best way to attack?'"

Frantz acknowledged that LLM tools such as ChatGPT and Claude have guardrails meant to prevent such uses but said malicious groups are finding ways around those protections.

Boosting their hacking skills

Enterprise teams use GenAI to supplement their skills, boosting their expertise in the process. Hackers do the same, Velleca said.

"GenAI enables more people who aren't skilled in attacks to now launch attacks, so you're going to see a wide-scale increase," he explained.

What are some examples of generative AI being used in cybersecurity?

GenAI is also helping enterprise cybersecurity teams up their game. Security leaders said GenAI can boost enterprise security by offering the following capabilities.

Improving threat detection capabilities

One of GenAI's biggest benefits to enterprise security is its ability to aid with threat detection and response, Frantz and others said.

It's especially beneficial because it automates more of the tasks in that process. As Nwankpa noted, the technology "significantly reduces the time it takes to detect a threat."

Simulating attacks to help teams plan their defense

Defense teams can use GenAI to simulate advanced attack scenarios, Nwankpa said. That helps them pinpoint vulnerabilities that might otherwise go unaddressed and think about how to defend against such scenarios should they happen.

Crafting security communications and policies

Enterprise security leaders can use GenAI to write policies and tailor security communications to various audiences, Nwankpa said. This helps cybersecurity officials save time and develop and disseminate more effective communications.

AI-powered coding can exact a toll

The adversarial use of generative AI isn't the only way the technology increases enterprise risk. Enterprise use of GenAI can also pose threats, Nwankpa said.

The use of GenAI to write code is one example. The technology has greatly democratized programming for business users and sped up the process for experts. But GenAI, while evolving rapidly, isn't perfect and can make up results -- known as AI hallucinations -- that could end up in production if a skilled human isn't part of the process, Nwankpa explained. Flaws introduced this way can then be exploited.

The use of GenAI for coding could also erode the foundational skills programmers need to become expert enough to spot problems.

"My fear is, as we continue to move in that direction, we are losing the knowledge base that comes from traditional code writing," he said.

Pinpointing vulnerabilities with greater precision

Teams can use GenAI to identify vulnerabilities and perform vulnerability assessments, Velleca said.

For example, cybersecurity professionals can use GenAI to review code more quickly and precisely than manual efforts or other tools can, boosting workers' efficiency and the organization's security posture.

Teams similarly can use GenAI to configure and reconfigure security software such as firewalls to help eliminate weak spots and strengthen defenses overall, Velleca added, as the technology can identify misconfigurations in addition to vulnerabilities.

Improving orchestration and response

One promising area for GenAI is security orchestration, Frantz said.

He explained that the technology is particularly useful in giving teams in a security operations center step-by-step instructions, written in everyday terms, that workers can follow as they respond to alerts. These instructions reduce manual effort and increase the speed and accuracy of the response, especially for less-experienced teams.

"With the skills shortage in security, and considering that defensive security has to get its response right all the time, GenAI provides a force multiplier from a defense perspective. It can react at machine speed, not human speed, to have a list of things to do and to execute in part on its own," Frantz said. "It all helps the defense side keep up."

Spotting zero-day attacks

GenAI can identify patterns that look like -- but are not identical to -- known attack patterns, making it more capable than other AI-powered security tools at spotting zero-day attacks, said Rebecca Herold, a member of the nonprofit professional association Institute of Electrical and Electronics Engineers (IEEE) and CEO at The Privacy Professor, an information security, privacy, IT and compliance services firm.

"GenAI is much better at relating all the different types of past experiences with each other, so it's able to say, 'It looks like there's something wrong here based on all the other zero days we've seen,'" she said.

That's a much more advanced capability than that of conventional security tools, which search for known attack patterns and malicious code and can't alert teams to a new attack type.
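To make that contrast concrete, here is a minimal Python sketch that compares exact signature matching with a simple similarity check. It is an illustration only and not how any vendor's GenAI product works: word-pair overlap (Jaccard similarity) stands in for the far richer pattern matching a trained model would do. The sample strings, function names and the 0.5 threshold are all hypothetical choices made for this example.

```python
import hashlib

# Hypothetical "known attack" record and two samples to check against it.
KNOWN_BAD = ["fetch remote script decode payload run hidden process"]
VARIANT = "fetch remote script unpack payload run hidden process"
BENIGN = "open quarterly report and print two copies"

# Signature database: exact SHA-256 hashes of known-bad records.
KNOWN_HASHES = {hashlib.sha256(s.encode()).hexdigest() for s in KNOWN_BAD}


def signature_match(sample: str) -> bool:
    """Flag a sample only if it is byte-for-byte identical to a known record."""
    return hashlib.sha256(sample.encode()).hexdigest() in KNOWN_HASHES


def word_pairs(text: str) -> set:
    """Return the set of adjacent word pairs in the text."""
    words = text.lower().split()
    return {(a, b) for a, b in zip(words, words[1:])}


def jaccard(a: set, b: set) -> float:
    """Overlap between two sets: size of intersection over size of union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def similarity_match(sample: str, threshold: float = 0.5) -> bool:
    """Flag a sample that resembles any known record closely enough."""
    pairs = word_pairs(sample)
    return any(jaccard(pairs, word_pairs(k)) >= threshold for k in KNOWN_BAD)


if __name__ == "__main__":
    for name, sample in [("known", KNOWN_BAD[0]), ("variant", VARIANT), ("benign", BENIGN)]:
        print(name, signature_match(sample), similarity_match(sample))
```

In this toy setup, the one-word change in the variant is enough to defeat the exact-hash check, while the similarity check still flags it and leaves the unrelated benign string alone. A real detection system would also have to weigh false positives, which this sketch does not attempt.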

Risks and challenges of using GenAI for cybersecurity

Enterprise security departments generally obtain GenAI capabilities as part of their security software; very few have the resources to build their own AI models.

Although enterprise security departments aren't developing their own GenAI capabilities, they still have work to do to get optimal results from their vendor-supplied GenAI, Herold said.

She said GenAI -- like nearly all AI capabilities in the enterprise -- must be trained and tuned to each organization's unique environment.

That can be a challenge for security teams that might be understaffed and lack the necessary skills to do such work, Herold said.

Moreover, those teams must ensure they don't violate any data privacy regulations or data security laws during that training, she added.

Those teams also must confirm that data used to train the AI is the right quality in the right quantity; otherwise, the AI outputs will be faulty, Herold said.

Finally, security teams must guard against unintended biases and hallucinations when using AI of any kind and be cognizant of the unknowns that come with vendor-supplied AI. "Vendors work in their own black box environment and we don't always have transparency into how the model was trained," Frantz said. "We don't want it to go rogue, but there is potential for it to do that."

Mary K. Pratt is an award-winning freelance journalist with a focus on covering enterprise IT and cybersecurity management.