How data quality shapes machine learning and AI outcomes

Data quality directly influences the success of machine learning models and AI initiatives. But a comprehensive approach requires considering real-world outcomes and data privacy.

"Garbage in, garbage out" is a familiar adage in programming. But it's especially apt in AI and machine learning, where model performance often hinges on the quality and relevance of training data.

AI and ML developers train their models and algorithms on prepared data sets. The outputs those models produce range from detailed analyses that reveal trends and insights to, in the case of tools such as ChatGPT, responses that aim to answer users' questions on a seemingly limitless range of topics.

Business leaders who are considering adopting AI tools should be aware of the abundance of data sets available to serve as inputs, encompassing domains such as healthcare, automotive and autonomous vehicle (AV) manufacturing, and finance and banking. The scope of data sets used to train AI models is broad, and data must be both relevant and sufficient in quality to meet end users' needs -- particularly in the era of generative AI.

"Data quality has been extremely important in the realm of data science, machine learning [and] AI since time immemorial," said Kjell Carlsson, head of data science strategy at enterprise MLOps platform Domino Data Lab. "But now more people are aware of it and more people are discussing it in the context of generative AI."

Model performance depends on data quality and specificity

Although techniques such as feature engineering and ensemble modeling can partially compensate for inadequate or insufficient training data, the quality of input data typically sets an upper limit on a model's potential performance.

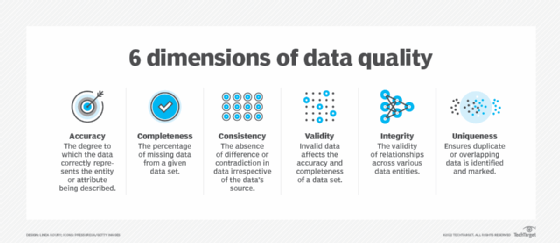

Ensuring data quality is therefore crucial for success in business AI and ML initiatives.

"Obviously, you can make a terrible, terrible model off of high-quality data," Carlsson said. "But the quality of your data limits what you're going to be able to do with your models."
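Basic profiling is one inexpensive way to see what limits a data set might impose before any modeling starts. The sketch below is illustrative only: the function name `profile_records`, the field names and the plausible-range rule are assumptions, and a production pipeline would more likely rely on a dedicated data validation tool. It counts missing values per column, exact duplicate rows and values that fall outside a caller-supplied range.

```python
from typing import Any


def profile_records(
    records: list[dict[str, Any]],
    valid_ranges: dict[str, tuple[float, float]],
) -> dict[str, Any]:
    """Summarize basic data-quality issues in a list of row dictionaries."""
    columns = sorted({key for row in records for key in row})

    # Missing values: the key is absent from a row or its value is None.
    missing = {col: sum(1 for row in records if row.get(col) is None) for col in columns}

    # Exact duplicates: rows whose key/value pairs match an earlier row.
    seen: set[tuple[tuple[str, Any], ...]] = set()
    duplicates = 0
    for row in records:
        fingerprint = tuple(sorted(row.items()))
        if fingerprint in seen:
            duplicates += 1
        else:
            seen.add(fingerprint)

    # Out-of-range values: present, but outside the caller-supplied bounds.
    out_of_range = {
        col: sum(
            1
            for row in records
            if row.get(col) is not None and not low <= row[col] <= high
        )
        for col, (low, high) in valid_ranges.items()
    }

    return {
        "rows": len(records),
        "missing": missing,
        "duplicates": duplicates,
        "out_of_range": out_of_range,
    }


# Hypothetical customer records with missing ages, a duplicate row and an implausible age.
customers = [
    {"id": 1, "age": 34, "monthly_spend": 120.0},
    {"id": 2, "age": None, "monthly_spend": 85.5},
    {"id": 2, "age": None, "monthly_spend": 85.5},
    {"id": 3, "age": 212, "monthly_spend": 40.0},
]
report = profile_records(customers, valid_ranges={"age": (0, 120)})
print(report)
```

Each count points to a different remedy: missing values may call for better collection or imputation, duplicates can leak between training and test splits and overstate accuracy, and implausible values often signal entry errors.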

Companies use AI models for specific reasons, meaning that enterprise models require training with tailored, relevant data sets. Consequently, when evaluating what data to acquire and use, it's important to consider the end system that will consume that data. "Until you figure out what you want to use your data for, how do you know what quality you're trying to achieve?" Carlsson said.

Because data relevance and specificity matter so much, popular but highly general models such as GPT-4 aren't always the best fit for enterprise use cases. A model trained on a massive but nonspecific data set is unlikely to contain a representative sample of the conversations, tasks and data relevant to a particular industry or organizational workflow.

Rather than viewing data as objectively good or bad, consider data quality as a relative characteristic, closely tied to the model's real-world purpose. Even if a data set itself is comprehensive, unique and well structured, achieving the organization's desired outcome might prove impossible if teams can't use it to make the predictions necessary for a planned use case.

As an example, Carlsson recounted his experience on a previous project for an electronic health record platform. Despite having extensive data on how doctors used the platform, his team found that they couldn't predict when a customer would leave the service. The decision to switch services was made by practice managers, who didn't directly use the platform -- meaning that their behavior wasn't tracked.

"So, you can have incredibly high-quality data which is completely useless," Carlsson said. "It was bad-quality data for what we wanted to use it for."
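Carlsson's example suggests a simple sanity check before building a prediction model: confirm that the people whose behavior drives the outcome appear in the data at all. The following sketch assumes a hypothetical event log in which each event carries an `account_id` and the `role` of the user who generated it; the function name and role names are illustrative, not taken from the project he describes.

```python
def role_coverage(events: list[dict[str, str]], account_ids: list[str], role: str) -> float:
    """Share of accounts with at least one tracked event from users in `role`."""
    if not account_ids:
        return 0.0
    tracked_accounts = {e["account_id"] for e in events if e["role"] == role}
    covered = sum(1 for account in account_ids if account in tracked_accounts)
    return covered / len(account_ids)


# Hypothetical usage log: only clinical staff generate tracked events.
events = [
    {"account_id": "clinic-a", "role": "physician", "action": "open_chart"},
    {"account_id": "clinic-a", "role": "nurse", "action": "update_vitals"},
    {"account_id": "clinic-b", "role": "physician", "action": "sign_note"},
]
accounts = ["clinic-a", "clinic-b"]

print(role_coverage(events, accounts, "physician"))         # 1.0
print(role_coverage(events, accounts, "practice_manager"))  # 0.0
```

A coverage of zero for the role that actually makes the switching decision indicates that the log cannot capture that decision, however clean the log itself is.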

Specialized data sets exist for a wide range of industries

Training AI models effectively remains resource-intensive and time-consuming for organizations, but industry-specific data sets themselves have become easy to access.

In the financial sector, sites such as Data.gov and the American Economic Association feature data sets that provide macroeconomic data on employment, economic output, trade and many other related topics within the U.S. Meanwhile, the official International Monetary Fund and World Bank sites have data sets covering global financial markets and institutions.

Specific data sets found in Data.gov's massive catalog include titles such as "Auto Sales" and "Food Price Outlook." These types of data sets -- provided by the U.S. Departments of Transportation and Agriculture, respectively -- are useful for certain business use cases within the financial sector.

Many of these data sets are free for enterprise use. In the same way that ChatGPT was trained on text culled from various websites, articles and online forums, expect enterprises to look online and at data marketplaces for information to get their models up to speed.

Ethics and privacy considerations are part of determining data quality

But as organizations look to incorporate external data sets and models, underlying data collection practices are receiving increasing scrutiny.

"The challenge is, when we talk about AI, are the models generated as a result of information that didn't have consent?" said Daniel Barber, CEO of privacy management platform DataGrail.

ChatGPT creator OpenAI is already facing lawsuits over its use of individuals' data. When evaluating whether to use or collect data from outside the organization, it's essential for organizations to factor in data ethics and privacy considerations in a structured way from the start.

"The first step to ensuring that your business is taking the right approach is establishing an ethical policy around how a business operates using AI," Barber said. This internal ethics policy should be formulated by an AI ethics council and regularly reviewed to ensure it's working as intended. Likewise, the organization should designate a data protection officer to weigh in on decisions to use or acquire data from outside the organization.
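One way to make such a review structured rather than ad hoc is to require a documented intake record for every external data set before anyone uses it. The sketch below is a hypothetical example, not a standard or a policy the sources quoted here prescribe: the `ExternalDatasetReview` fields and the rules in `blocking_issues` are assumptions chosen to mirror the roles described above, the data protection officer and the AI ethics council.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ExternalDatasetReview:
    """Hypothetical intake record for a data set obtained from outside the organization."""

    name: str
    source: str
    license_terms: Optional[str]    # provider's usage terms, if documented
    consent_basis: Optional[str]    # how data subjects agreed to this use, if known
    contains_sensitive_data: bool   # e.g., health or biometric data
    dpo_approved: bool              # sign-off from the data protection officer
    ethics_council_reviewed: bool   # review by the AI ethics council


def blocking_issues(review: ExternalDatasetReview) -> list[str]:
    """Return reasons the data set should not be used yet, under an assumed policy."""
    issues = []
    if not review.license_terms:
        issues.append("license terms not documented")
    if not review.consent_basis:
        issues.append("consent basis not documented")
    if not review.dpo_approved:
        issues.append("data protection officer has not approved")
    # Assumed rule: sensitive data also needs a separate ethics council review.
    if review.contains_sensitive_data and not review.ethics_council_reviewed:
        issues.append("sensitive data requires ethics council review")
    return issues


review = ExternalDatasetReview(
    name="purchased-patient-surveys",
    source="third-party data marketplace",
    license_terms="commercial use permitted",
    consent_basis=None,
    contains_sensitive_data=True,
    dpo_approved=False,
    ethics_council_reviewed=False,
)
print(blocking_issues(review))
```

An empty list would mean the data set has cleared every check in this assumed policy; anything else names the owner who still needs to act.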

When developing ethics policies and planning AI initiatives, incorporate diverse viewpoints. Groups composed of individuals from a wide range of backgrounds and job functions can anticipate potential outcomes that technical teams alone might not consider.

"If your data quality initiatives are often a silo, just trying to do their own thing and work against historic goals, and are very disconnected from what that outcome is, the likelihood that you're going to get to something successful is much lower," Carlsson said.

Ensuring data quality is necessary to avoid real-world ramifications

In certain sectors, the need for reliable data becomes even more apparent when a lack thereof can cause harm to consumers.

For example, quality data is imperative in the automotive industry when developing AV algorithms, because errors can have serious real-world consequences. Companies are continually working to improve AV capabilities, and the data sets available for AV algorithms typically feature data captured from real autonomous vehicles' lidar and camera systems to improve object detection and motion prediction.

In the healthcare industry, AI and ML have been enthusiastically embraced as a way to not just deal with burdensome administrative tasks, but also assist with diagnoses. Quality data sets thus become especially important when training AI to understand health problems well enough to avoid misdiagnoses.

The website HealthData.gov features data sets on the effects of the COVID-19 pandemic within the U.S. Text and tabular records aren't the only types of data relevant to the healthcare sector, either. Thousands upon thousands of chest X-ray images, for example, are also available for analysis.

When evaluating whether and how to use medical data sets, keep in mind that these are often the areas where user privacy and data ethics are most important. Barber pointed out that health-related information and biometric data are among the most sensitive types of data that can be collected about an individual.

"I think most people understand why that information is particularly sensitive to an individual," he said. "And so, how is that information collected, and was consent included in that process? That will be very important for businesses to understand."
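Barber's question about consent can be enforced at the record level as well as the data set level. This sketch assumes a hypothetical consent ledger that maps each data subject to the purposes they agreed to; a record is kept for training only if its subject consented to the `model_training` purpose specifically, so consent to treatment alone does not carry over. The function, ledger format and purpose names are illustrative assumptions.

```python
def filter_by_consent(
    records: list[dict[str, str]],
    consent_ledger: dict[str, set[str]],
    purpose: str = "model_training",
) -> tuple[list[dict[str, str]], list[str]]:
    """Split records into those whose subject consented to `purpose` and excluded subject IDs."""
    usable, excluded = [], []
    for record in records:
        # Subjects missing from the ledger are treated as having given no consent.
        purposes = consent_ledger.get(record["subject_id"], set())
        if purpose in purposes:
            usable.append(record)
        else:
            excluded.append(record["subject_id"])
    return usable, excluded


# Hypothetical ledger: p2 consented to treatment only; p3 has no consent record at all.
consent_ledger = {
    "p1": {"treatment", "model_training"},
    "p2": {"treatment"},
}
records = [
    {"subject_id": "p1", "xray_image": "img-001.png"},
    {"subject_id": "p2", "xray_image": "img-002.png"},
    {"subject_id": "p3", "xray_image": "img-003.png"},
]
usable, excluded = filter_by_consent(records, consent_ledger)
print(len(usable), excluded)  # 1 ['p2', 'p3']
```

Returning the excluded subject IDs, rather than silently dropping those records, gives privacy reviewers a record of what was left out and why.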

Failing to ensure data privacy and security has business consequences

Using data that's later found to violate privacy laws and industry standards could also have significant repercussions for businesses.

And businesses shouldn't dismiss the issue as simply risking a fine down the road. In addition to the financial and reputational consequences of violating security and privacy regulations, businesses could be forced to remove algorithms and software that rely on unlawfully and unethically obtained data.

In May 2023, the Federal Trade Commission (FTC) settled a case against Ring, an Amazon-owned company that sells internet-connected home security cameras, which alleged that Ring violated user privacy by failing to restrict internal access to customers' videos. One employee, for example, viewed thousands of videos from female users' devices surveilling areas such as bathrooms and bedrooms, according to the complaint.

"Ring's disregard for privacy and security exposed consumers to spying and harassment," said Samuel Levine, director of the FTC's Bureau of Consumer Protection, in a press release.

And because Ring used those videos to train algorithms without obtaining users' consent, as described in the complaint, there could be far-reaching consequences for the company. Under the proposed settlement order, currently pending court approval, Ring would be required to delete any data, models and algorithms derived from unlawfully reviewed videos.

If such consequences become the norm, "the actual business risk here is greater than just the compliance component," Barber said. "Rather, the business value of the entire model itself that you may have spent hundreds of hours building could be removed if implemented incorrectly."
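An order to delete data, models and algorithms derived from a given data set is only practical to follow if an organization knows which models depend on which data. A minimal lineage registry illustrates the idea. The class, method and identifier names here are hypothetical, and the sketch assumes that any model fine-tuned or otherwise derived from an affected model is itself affected; it is not a description of how Ring or any other company tracks its models.

```python
from collections import deque
from collections.abc import Iterable
from dataclasses import dataclass, field


@dataclass
class LineageRegistry:
    """Hypothetical record of which data sets and parent models each model came from."""

    # model ID -> IDs of data sets used directly to train it
    datasets: dict[str, set[str]] = field(default_factory=dict)
    # model ID -> IDs of models it was fine-tuned or otherwise derived from
    parents: dict[str, set[str]] = field(default_factory=dict)

    def register(
        self, model_id: str, dataset_ids: Iterable[str], parent_ids: Iterable[str] = ()
    ) -> None:
        self.datasets[model_id] = set(dataset_ids)
        self.parents[model_id] = set(parent_ids)

    def affected_models(self, dataset_id: str) -> set[str]:
        """Return every model that depends on `dataset_id`, directly or through a parent."""
        affected = {m for m, ds in self.datasets.items() if dataset_id in ds}
        queue = deque(affected)
        while queue:
            current = queue.popleft()
            # Breadth-first walk: any model derived from an affected model is affected too.
            for model, parent_ids in self.parents.items():
                if current in parent_ids and model not in affected:
                    affected.add(model)
                    queue.append(model)
        return affected


registry = LineageRegistry()
registry.register("detector-v1", ["customer-videos-2019", "public-benchmark"])
registry.register("detector-v2", ["public-benchmark"], parent_ids=["detector-v1"])
registry.register("classifier-x", ["public-benchmark"])

print(sorted(registry.affected_models("customer-videos-2019")))
# ['detector-v1', 'detector-v2']
```

Note that `detector-v2` is flagged even though it never saw the problem data directly, because it was built on top of `detector-v1`. Without recorded lineage, that kind of indirect dependency is easy to miss.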