GPU analytics speeds up deep learning, other data insights

GPU-based systems have become a popular platform for deep learning applications, and they're now also being used to accelerate analysis of IoT and geospatial data.

Business intelligence and analytics applications have changed significantly over the past three decades. As they have expanded from basic statistical analysis and tabular reports to complex analytics with increasingly rich data visualizations, they have brought more and more insight to business decision-making.

Now, organizations are making growing use of machine learning, natural language processing and other AI technologies to analyze data sets, and advanced analytics systems are shifting from CPUs to GPUs as a result.

The path to today's GPU analytics platforms began with the advent of both the PC and gaming systems in the 1980s, which increased interest in developing ways to improve graphics on computer monitors and television screens.

General-purpose CPUs can handle a wide range of tasks, but they process instructions largely sequentially, even with some parallelism built into them. To display information on a screen, the typical CPU-based method was to process pixels line by line, one pixel at a time. But the people who were creating games and other visual applications wanted a faster way to render graphics.

Rather than drawing pixels one at a time in a line, what if an entire image were built in memory as a matrix of pixels and pushed to the screen, with only the pixels that needed to change redrawn afterward? That's a simplistic way of looking at the basics of GPU processing. But put into practice, the approach greatly improved graphics performance.
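To make the "image as a matrix, redraw only what changed" idea concrete, here is a minimal Python sketch. It assumes a frame is stored as a grid of integer pixel values; the names `changed_pixels` and `apply_update` are hypothetical, and the sketch is a deliberate simplification rather than a description of how GPU hardware actually renders images.

```python
# Toy frame buffer: the whole image lives in memory as a matrix of pixel
# values, and only the cells that differ from the previous frame are redrawn.
Frame = list[list[int]]

def changed_pixels(prev: Frame, curr: Frame) -> list[tuple[int, int]]:
    """Return the (row, col) coordinates whose value differs between frames."""
    if len(prev) != len(curr) or any(len(a) != len(b) for a, b in zip(prev, curr)):
        raise ValueError("frames must have the same dimensions")
    return [
        (r, c)
        for r, (row_prev, row_curr) in enumerate(zip(prev, curr))
        for c, (p, q) in enumerate(zip(row_prev, row_curr))
        if p != q
    ]

def apply_update(screen: Frame, curr: Frame, dirty: list[tuple[int, int]]) -> None:
    """Copy only the changed ("dirty") pixels from the new frame onto the screen."""
    for r, c in dirty:
        screen[r][c] = curr[r][c]

if __name__ == "__main__":
    frame1 = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
    frame2 = [[0, 0, 0], [0, 9, 0], [0, 0, 7]]
    screen = [row[:] for row in frame1]  # what is currently displayed
    dirty = changed_pixels(frame1, frame2)
    apply_update(screen, frame2, dirty)
    print(dirty)             # [(1, 1), (2, 2)]
    print(screen == frame2)  # True
```

Only two of the nine pixels are touched in the update, which is the efficiency the approach is after.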

From gaming to deep learning on GPUs

While there were other early devices, the Nvidia GeForce 256, introduced in 1999, was the one that first popularized the GPU. Today, Nvidia continues to be the most prominent provider of GPUs. For many years, it focused almost exclusively on computer gaming. Then the concept of deep learning began to take hold in data science and analytics teams.

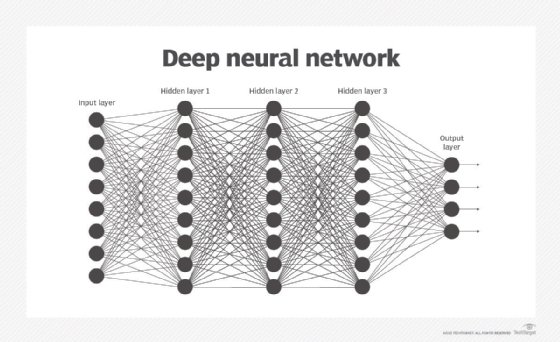

An advanced form of machine learning, deep learning uses neural networks that are loosely modeled on the way the human brain works. In deep learning applications, neural networks contain multiple layers of nodes, with the connections between layers represented as matrices of weights and each node performing calculations as part of an analytical model.
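As a rough illustration of "layers of nodes" in matrix form, the following Python sketch assumes a plain fully connected network: each layer is a weight matrix plus a bias vector, and each node computes a weighted sum of its inputs. The function names and the choice of ReLU as the activation are assumptions for illustration; real deep learning frameworks add much more.

```python
Matrix = list[list[float]]

def dense_layer(x: list[float], weights: Matrix, bias: list[float]) -> list[float]:
    """One layer of nodes: node j outputs sum_i x[i] * weights[i][j] + bias[j].

    weights has one row per input and one column per node, so the whole
    layer is a single vector-matrix product plus a bias.
    """
    if len(weights) != len(x) or any(len(row) != len(bias) for row in weights):
        raise ValueError("weights must be len(x) rows by len(bias) columns")
    return [
        sum(x[i] * weights[i][j] for i in range(len(x))) + bias[j]
        for j in range(len(bias))
    ]

def relu(v: list[float]) -> list[float]:
    """A common activation function: negative values become zero."""
    return [max(0.0, a) for a in v]

def forward(x: list[float], layers: list[tuple[Matrix, list[float]]]) -> list[float]:
    """Feed the input through each (weights, bias) layer in turn,
    applying ReLU between layers but not after the last one."""
    for k, (w, b) in enumerate(layers):
        x = dense_layer(x, w, b)
        if k < len(layers) - 1:
            x = relu(x)
    return x

if __name__ == "__main__":
    layers = [
        ([[1.0, -1.0], [0.5, 1.0]], [0.0, 0.0]),  # 2 inputs -> 2 nodes
        ([[1.0], [1.0]], [0.5]),                  # 2 nodes -> 1 output
    ]
    print(forward([1.0, 2.0], layers))  # [3.5]
```

Because every layer boils down to multiplying by a matrix, speeding up matrix arithmetic speeds up the whole model.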

What some programmers realized is that they could harness the matrix multiplication power of GPUs to speed up the calculations across the layers of a deep learning model, taking advantage of far more parallelism than is possible with conventional CPUs. They first used standard graphics-focused GPUs, and vendors soon created servers with arrays of the devices for even more processing power.
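The parallelism in matrix multiplication comes from the fact that every cell of the result can be computed independently of the others. The Python sketch below makes that explicit by turning each output cell into its own task and handing the tasks to a thread pool, loosely mimicking the way a GPU assigns one lightweight thread per output element. This is an assumption-laden illustration of the decomposition only: Python threads will not deliver GPU-like speedups, and `matmul_cellwise` is a hypothetical name.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

Matrix = list[list[float]]

def matmul_cellwise(a: Matrix, b: Matrix, workers: int = 4) -> Matrix:
    """Multiply a (n x k) by b (k x m), computing each output cell as a separate task."""
    n, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must match")
    m = len(b[0])

    def cell(ij: tuple[int, int]) -> float:
        # Each cell needs only row i of a and column j of b, so no task
        # depends on any other task's result.
        i, j = ij
        return sum(a[i][t] * b[t][j] for t in range(k))

    indices = list(product(range(n), range(m)))  # row-major order
    with ThreadPoolExecutor(max_workers=workers) as pool:
        values = list(pool.map(cell, indices))  # map preserves input order
    # Reassemble the flat list of cell values into n rows of m columns.
    return [values[i * m:(i + 1) * m] for i in range(n)]

if __name__ == "__main__":
    print(matmul_cellwise([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

A GPU applies the same idea with thousands of hardware threads running at once, which is why it handles large matrix products so much faster than a CPU.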

Eventually, the vendors realized the full market potential for GPU analytics technology in deep learning applications. Nvidia, Intel, AMD and others began to design GPUs specifically for deep learning, along with related software. While one could argue that such devices are no longer really graphics processing units, the name hasn't changed. What is changing now is that developers of other types of analytics applications have realized GPUs can help them, too.

For example, GPUs are well suited to processing and analyzing geospatial data, which uses a similar matrix format when it's arranged in raster data models. In addition, the growth of IoT networks means organizations have large numbers of connected devices spread across wide geographic areas, transmitting operational data that's also a good match for GPU-based platforms, often in combination with geospatial analysis.
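One simple way IoT data meets the raster model is to bin device readings into grid cells, producing a matrix that can then be processed cell by cell. The Python sketch below assumes each reading carries a latitude, longitude and numeric value and averages the readings that fall into each cell. The `Reading` type, the `rasterize_mean` function and the flat degree-based grid are hypothetical simplifications; a real system would account for map projections.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Reading:
    lat: float
    lon: float
    value: float

def rasterize_mean(readings: list[Reading], lat_min: float, lon_min: float,
                   cell_deg: float, rows: int, cols: int) -> list[list[Optional[float]]]:
    """Average sensor values per grid cell; cells with no readings are None."""
    sums = [[0.0] * cols for _ in range(rows)]
    counts = [[0] * cols for _ in range(rows)]
    for r in readings:
        row = int((r.lat - lat_min) // cell_deg)
        col = int((r.lon - lon_min) // cell_deg)
        if 0 <= row < rows and 0 <= col < cols:  # ignore readings outside the grid
            sums[row][col] += r.value
            counts[row][col] += 1
    return [
        [sums[i][j] / counts[i][j] if counts[i][j] else None for j in range(cols)]
        for i in range(rows)
    ]

if __name__ == "__main__":
    data = [Reading(40.1, -74.9, 10.0), Reading(40.2, -74.8, 20.0), Reading(40.7, -74.2, 5.0)]
    print(rasterize_mean(data, 40.0, -75.0, 0.5, 2, 2))  # [[15.0, None], [None, 5.0]]
```

Once the readings are in grid form, the per-cell work is the kind of uniform matrix operation that GPUs parallelize well.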

New platforms target broader use of GPUs

A group of startup vendors has begun offering GPU analytics and database systems outside of the deep learning market. Those companies include BlazingDB, Brytlyt, Kinetica, SQream DB and OmniSci, which provides a platform for analytical processing with GPUs both on premises and in the cloud. While OmniSci clearly is addressing geospatial applications, its OmniSciDB platform isn't limited to them.

"Geospatial analysis is only one key area of analytics that can be improved through the use of GPUs," said Todd Mostak, co-founder and CEO of OmniSci, which originally was known as MapD Technologies. GPU-driven analytics is also being applied to help optimize operational performance and customer service "in complex environments," such as telecommunications and utility companies, Mostak added.

Those are two sectors where managing and analyzing all the data collected from sources like cell towers and smart meters is a critical challenge. For a telecom company, combining usage data from existing cell towers with topography data is a key element in planning the optimal placement of new towers.

In the utility industry, sensors and smart meters capture information from the power generation plant through the transmission network and into the homes and offices of customers. As the use of green technology grows, utilities add more generation sites and must track consumption of electricity from multiple providers, so the complexity of the data being captured continues to increase. The oil and gas sector has similar issues, with sensors producing massive amounts of data during exploration, production, transmission and refining.

In those cases and others, data scientists and analysts in companies need a way to rapidly analyze the incoming data. GPUs can help accelerate the analysis process. Deep learning on GPUs remains a core use for the devices, but what has been learned from their deployment in deep learning applications is now being adapted to provide a broader set of GPU analytics capabilities for users.