GPT-3 AI language model sharpens complex text generation

GPT-3 is the latest natural language generation model, but its acquisition by Microsoft leaves developers wondering when, and how, they'll be able to use the model.

The art of a good magic trick is to make the audience believe the illusion is real. The magician didn't bend the laws of physics by making something disappear, but drew our attention elsewhere using sleight of hand. Artificial intelligence uses a similar approach -- if we can create an illusion that looks real, then we can make a machine seem to act just like a human.

One of AI's greatest magic tricks is making machines communicate as clearly and fluidly as humans. It's become easy for machines to have short one- or two-sentence conversations with humans that answer specific questions or handle specific tasks. However, if you ask the machine to explain something complicated without using previously human-generated text, it will start to lose track of the conversation.

It's in this world of complicated natural language generation (NLG) that the magic of GPT-3 emerged to suspend our disbelief about what machines are capable of.

The big data AI magic trick: GPT-3

The third generation Generative Pre-trained Transformer (GPT-3) is a neural network machine learning model that has been trained to generate text in multiple formats while requiring only a small amount of input text. The GPT-3 AI model was trained on an immense amount of data that resulted in more than 175 billion machine learning parameters.

To put things into scale, the largest trained language model before GPT-3 was Microsoft's Turing-NLG model, which had 10 billion parameters. GPT-3, trained largely on digital data, smashes past that number with more than 17 times the number of parameters.

If "generative" in the term GPT-3 refers to generating text, and "pre-trained" relates to the model being trained on tons of input text, what does "transformer" stand for? Transformer architecture is a configuration of a deep learning neural network that arranges the flow of data to accomplish a specific learning task in a specific way. In this case, the transformer architecture uses what's known as encoder-decoder architecture, which enables encoders to process inputs in layers to create higher-level representations and the decoders to take the outputs of these encoders and process them to generate output sequences.

Transformer architectures are a relatively recent innovation in deep learning neural nets. Previous approaches used an architectural form known as recurrent neural networks (RNNs) that used input-output loops of various types to create systems to handle sequences of information needed for speech processing, machine translation and text summarization, or to generate unique image captions. However, RNNs are sequential in nature, meaning they can only effectively learn sequences of certain length. While RNNs might be good at generating a sentence or two that maintains consistency, they fail when asked to do so over longer text sequences.

Transformer architectures avoid these problems because instead of relying on sequences of a specific duration, they use attention mechanisms to generalize sequences they have learned. An earlier pre-trained model using this approach, the Bidirectional Encoder Representations from Transformers (BERT), demonstrated the viability of this method and showed the power neural networks have to generate long strings of text that previously seemed unachievable.

GPT-3 use cases

Now, what can we do with GPT-3? At the most basic level, GPT-3 AI systems can create text for a wide range of purposes, including full length articles, poetry, stories and dialogue. AI researcher Gwern Branwen showcased some GPT-3 creations of AI-generated writing in the style of Maya Angelou, William Shakespeare and Edgar Allan Poe.

Poetry and prose are just the tip of the iceberg of what GPT-3 is capable of. Using just a few snippets of code, GPT-3 can create workable code that can be run without error. Many other practical examples of GPT-3 can include having the system generate Excel functions, recipes, role playing game conversational systems and a breadth of other programs that users can test out.

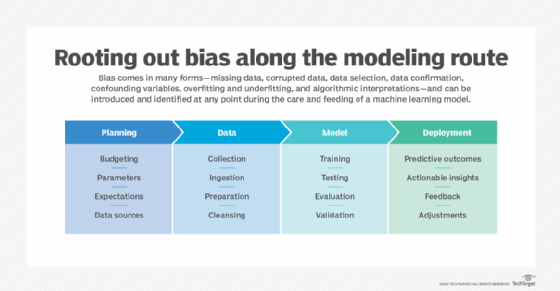

The generated text is of such high quality that people have started a discourse about its eventual use. Those who are wary of GPT-3 worry about its ability to possibly create fake news articles and fool humans in conversational systems. The technology might even fall victim to its predecessor's use of language bias.

Before releasing GPT-3 AI tools to enterprises, OpenAI and Microsoft are testing the technology within their own applications to ensure security, safety and responsible use.

The origins of GPT-3

GPT-3 emerged as a research project by OpenAI. Formed in 2015 by a litany of technology heavyweights, including Elon Musk, Ilya Sutskever, Sam Altman and Greg Brockman, OpenAI was initially conceived as a nonprofit research-focused counterweight to Google's DeepMind. The work of OpenAI ranges from reinforcement learning research to metalearning robots.

As part of its work on very large generative pre-trained networks, the company released GPT-2, which showed unprecedented capabilities. When returning to the model, developers thought that simply throwing more data at the GPT model would show diminished returns, as testing the limits of neural networks often does. However, GPT-3 surprised researchers by performing increasingly better at scale, and moving past simple natural language tasks such as text summarization and task-based activities, to learning how to follow instructions given in the text and performing complex tasks.

Initially wary about releasing the much more powerful GPT-3 AI models into the wild, OpenAI released access to the model in increments to see how it would be used in the real world. A few months later, the laboratory opened the model up for broad use to those who applied for access during the beta period, which ended on October 1, 2020. Use of GPT-3 is now priced on a tiered credit-based system. Small-scale users can try it free for three months, while large-scale production users pay hundreds of dollars per month.

In 2019, OpenAI changed from a 501(c)(3) nonprofit to a for-profit company controlled by its nonprofit entity in something the organization calls a "capped-profit" model. This new model enables the company to raise investor money from outside sources and give its employees a stake in the overall company, something the nonprofit model would not allow.

The Microsoft GPT-3 exclusive license

Since the creation of models such as GPT-3 require a huge amount of capital to finance their large computing, data, storage, bandwidth and skills needs, it's no surprise that OpenAI sought investors. In June 2019, soon after the formal change in company structure, Microsoft invested $1 billion into OpenAI, which the company said it would spend completely within five years. At the time of the investment, it seemed that Microsoft was simply interested in helping to further efforts into pushing the boundaries of AI's capabilities, but it soon became clear that Microsoft was interested in more.

In September 2020, OpenAI announced that Microsoft had an exclusive license on the GPT-3 model. While OpenAI can still make GPT-3 available to the public on a pay-to-access model, Microsoft owns the exclusive use and control of the GPT-3 source code, model and data. This exclusive control means other companies can't acquire that model for their own uses.

What does this mean for users? For the casual GPT-3 user, Microsoft's ownership might not mean much if they access the model for short-term needs. However, in the long term, Microsoft could choose to prevent GPT-3's use by competitors or any other party. Microsoft could further develop GPT-3 into a GPT-4 model that it won't make accessible to others or embed GPT-3 capabilities into its products in ways that competitors can't match.

Future of GPT-3

The big question for data scientists is whether GPT-3 represents a strong move toward realizing the goals of artificial general intelligence or another big data magic trick. GPT-3 can generate impressive text that can solve a wide range of needs for machine-generated content, but as impressive as that is, are these machines capable of understanding the text and nuances, or is the model just a very impressive computer-generated parrot?

Imagine that you could talk to a parrot in the same way as GPT-3, giving it some starter text and having the parrot provide long responses. A parrot might even be able to rattle off original Shakespeare-like text, but does it comprehend what it's saying? Most AI researchers would admit that mimicry, as magical as it may seem, is not the same thing as understanding the text.

There are a wide range of needs for natural language generation, from chatbots to contract writing and code generation. GPT-3 represents the latest state-of-the-art text generation, combining enormous volumes of data with computing to reach spectacular levels of accurate output. Microsoft's exclusive license further hints at the value of this model.

However, there is more to the AI landscape than NLG, and there certainly will be more models developed that can perform similarly. While Microsoft's exclusive license might cause concern regarding future access to advanced NLG technologies, if the past is any indication, we'll see continued investment and research by others that will provide even more innovation in the space of AI.