Designing systems that reduce the environmental impact of AI

Understanding AI's full climate impact means looking past model training to real-world usage, but developers can take tangible steps to improve efficiency and monitor emissions.

AI's capabilities and popularity have skyrocketed over the past year. But developing large, powerful models such as ChatGPT is a resource-intensive process that has a significant environmental impact.

AI affects the climate in complex ways, including through its ability to advance environmental research and optimize power grids. However, in addition to the emissions associated with creating, transporting and disposing of AI hardware, each computation on that hardware also comes with an environmental cost.

The tech sector has an important role to play in addressing the climate crisis, and that includes AI developers. Building more sustainable AI will require a multifaceted approach that encompasses everything from model architecture to underlying infrastructure to how AI is ultimately applied.

Unpacking the factors behind AI's environmental impact

Machine learning models make predictions and decisions using variables called parameters that are adjusted during training. In many cases, adding parameters enables models to capture more complex patterns and relationships in data, incentivizing businesses and researchers to build ever-larger models.

But energy is expended each time a parameter is updated during model training. A 2019 paper estimated that training a big transformer model on GPUs using neural architecture search produced around 313 tons of carbon dioxide emissions, equivalent to the amount of emissions from the electricity that 55 American homes use over the course of a year.

The big transformer model analyzed in that paper had an already sizable 213 million parameters -- and in the years since, language models have only gotten more massive. GPT-3, the original model behind the free version of ChatGPT, is roughly 800 times larger at 175 billion parameters.
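As a quick sanity check on the scale involved, the figures quoted above can be compared with simple arithmetic. The sketch below uses only numbers from the text; the variable names are illustrative.

```python
# Figures quoted in the article text.
NAS_TRANSFORMER_EMISSIONS_TONS = 313      # estimated CO2 from the 2019 paper
EQUIVALENT_HOMES = 55                     # American homes' yearly electricity use
BIG_TRANSFORMER_PARAMS = 213_000_000      # 213 million parameters
GPT3_PARAMS = 175_000_000_000             # 175 billion parameters

# Emissions per home implied by the "55 homes" equivalence.
tons_per_home = NAS_TRANSFORMER_EMISSIONS_TONS / EQUIVALENT_HOMES

# How many times larger GPT-3 is, by parameter count alone.
size_ratio = GPT3_PARAMS / BIG_TRANSFORMER_PARAMS

print(f"Implied emissions per home per year: {tons_per_home:.1f} tons")
print(f"GPT-3 is about {size_ratio:.0f}x the size of the big transformer")
```

The ratio compares parameter counts only. It says nothing directly about relative emissions, which also depend on hardware, training duration and the energy source.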

In addition to consuming power, model training generates substantial heat, driving water consumption via data center cooling. And e-waste can pile up when AI developers churn through hardware upgrades in pursuit of larger and faster models.

AI's environmental impact goes beyond model training

To date, analysis of AI's carbon footprint has focused on model development and training -- typically the most computationally expensive stage of the machine learning lifecycle. However, for large models with millions of daily users, such as ChatGPT or AI-powered Google Search, the cumulative emissions from everyday usage could also be substantial, even if each individual query consumes relatively little energy.
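To see how usage can add up at scale, consider a back-of-the-envelope comparison between one-time training emissions and ongoing usage emissions. Every input below is a hypothetical placeholder chosen for illustration, not a measurement of any real system, and the function name is likewise an assumption.

```python
def days_until_usage_matches_training(
    training_emissions_kg: float,
    emissions_per_query_kg: float,
    queries_per_day: int,
) -> float:
    """Return the number of days of usage whose cumulative emissions
    equal the one-time training emissions."""
    daily_usage_kg = emissions_per_query_kg * queries_per_day
    if daily_usage_kg <= 0:
        raise ValueError("daily usage emissions must be positive")
    return training_emissions_kg / daily_usage_kg


# Hypothetical inputs (assumptions, not real data).
days = days_until_usage_matches_training(
    training_emissions_kg=500_000,   # one-time training cost
    emissions_per_query_kg=0.001,    # one gram of CO2e per query
    queries_per_day=10_000_000,      # ten million queries a day
)
print(f"Usage matches training emissions after {days:.0f} days")
```

With these placeholder values, usage overtakes training in under two months. The point is the shape of the calculation rather than the specific result.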

There's also the question of how AI is used after development. Many tech companies have made public commitments to reduce the emissions associated with their computations and hardware. While doing so is undoubtedly valuable, focusing only on those areas lets companies off the hook for the downstream effects of their applications.

The full picture of AI's environmental impact includes how AI contributes to broader societal trends, such as pushing consumption of products and services in an unsustainable direction. For example, using AI to accelerate oil and gas exploration or to facilitate on-demand delivery with emissions-intensive vehicles could significantly increase carbon emissions.

Designing more efficient and sustainable AI systems

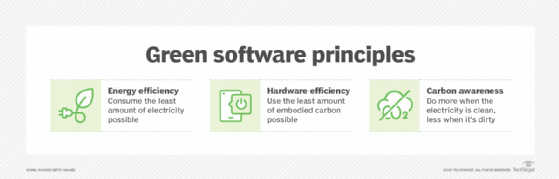

It remains difficult to accurately estimate the environmental impact of AI. Although obtaining accurate sustainability metrics is important, developers of machine learning models and AI-driven software can still make more energy-efficient design choices without knowing specific emissions figures.

For example, eliminating unnecessary calculations in model training can reduce overall emissions. Large, computationally intensive models aren't necessary for all applications; in some use cases, a smaller model might do just as well or even better. It's also important to view sustainability as a worthwhile metric in its own right, rather than pursuing marginal performance improvements at any emissions cost.
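One common way to cut unnecessary training computation is early stopping: halting training once validation loss stops improving. The article does not prescribe this technique; it is offered here as one possible example. The sketch below is minimal and framework-agnostic, the function name and the simulated loss values are assumptions, and in a real training loop the losses would come from evaluating the model after each epoch.

```python
from typing import Iterable


def epochs_to_run(
    val_losses: Iterable[float],
    patience: int = 3,
    min_delta: float = 0.0,
) -> int:
    """Return how many epochs run before early stopping triggers.

    Training stops once the validation loss has failed to improve by more
    than `min_delta` for `patience` consecutive epochs.
    """
    best = float("inf")
    epochs_without_improvement = 0
    epochs_run = 0
    for loss in val_losses:
        epochs_run += 1
        if loss < best - min_delta:
            # New best loss: reset the patience counter.
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # Further epochs would likely be wasted compute.
    return epochs_run


# Simulated validation losses (hypothetical values for illustration).
simulated_losses = [0.90, 0.70, 0.60, 0.58, 0.59, 0.60, 0.61, 0.62, 0.63, 0.64]
used = epochs_to_run(simulated_losses, patience=3)
print(f"Stopped after {used} of {len(simulated_losses)} epochs")
```

In this simulated run, the last three epochs are skipped entirely, saving the energy they would have consumed without giving up the best model found.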

Developers training models using cloud infrastructure can also perform computations at times and in data center regions where more clean energy is available. Checking for unused resources and utilizing servers to their full capacity can cut down on unnecessary energy expenditures as well: If a server is on, it's consuming power, even if it's not running a useful workload.
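As a rough illustration of carbon-aware scheduling, the sketch below picks the region and start time with the lowest forecast grid carbon intensity for a training job. The region names, forecast values and job energy figure are all hypothetical; in practice, the forecast would come from a grid-data provider or a cloud vendor's sustainability reporting.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Slot:
    """A candidate place and time to run a job."""
    region: str
    start_hour_utc: int
    carbon_intensity_g_per_kwh: float  # grams of CO2e per kWh of electricity


def pick_greenest_slot(slots: Sequence[Slot]) -> Slot:
    """Return the slot with the lowest forecast carbon intensity."""
    if not slots:
        raise ValueError("at least one candidate slot is required")
    return min(slots, key=lambda s: s.carbon_intensity_g_per_kwh)


def estimated_emissions_kg(slot: Slot, energy_kwh: float) -> float:
    """Estimate emissions as energy used times grid carbon intensity."""
    return slot.carbon_intensity_g_per_kwh * energy_kwh / 1000.0


# Hypothetical forecast (assumed values, not real grid data).
forecast = [
    Slot("region-a", 2, 420.0),
    Slot("region-a", 14, 310.0),
    Slot("region-b", 2, 95.0),
    Slot("region-b", 14, 180.0),
]
job_energy_kwh = 1200.0  # assumed energy use of the training job

best = pick_greenest_slot(forecast)
print(f"Run in {best.region} at {best.start_hour_utc:02d}:00 UTC")
print(f"Estimated emissions: {estimated_emissions_kg(best, job_energy_kwh):.0f} kg CO2e")
```

A production scheduler would also need to weigh data residency, latency, cost and deadlines. This sketch optimizes for carbon intensity alone.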

Incorporating sustainability monitoring tools can help technical teams better understand the environmental impact of their models and the applications where those models are used. For example, the Python package CodeCarbon and the open source tool Cloud Carbon Footprint offer developers snapshots of a program's or workload's estimated carbon emissions. Similarly, Kepler, an open source tool for Kubernetes initiated by Red Hat and IBM Research, aims to help DevOps and IT teams manage the energy consumption of Kubernetes clusters.
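A minimal sketch of how CodeCarbon might be wrapped around a workload follows. It assumes the `codecarbon` package is installed (`pip install codecarbon`) and uses its offline tracker so that no network lookup is needed. The country code, project name and toy workload are placeholders, and the helper falls back to running untracked if the package is missing.

```python
import math
from typing import Callable, Optional, Tuple


def toy_workload(steps: int = 100_000) -> float:
    """Stand-in for a real training or inference job."""
    return sum(math.sqrt(i) for i in range(1, steps + 1))


def run_with_tracking(
    workload: Callable[[], float],
) -> Tuple[float, Optional[float]]:
    """Run `workload` and return (result, estimated kg CO2e or None)."""
    try:
        from codecarbon import OfflineEmissionsTracker
    except ImportError:
        # CodeCarbon is not installed: run the workload untracked.
        return workload(), None

    tracker = OfflineEmissionsTracker(
        country_iso_code="USA",      # assumed location of the hardware
        project_name="toy-workload", # placeholder project label
        save_to_file=False,          # skip writing emissions.csv
        log_level="error",
    )
    tracker.start()
    try:
        result = workload()
    finally:
        emissions_kg = tracker.stop()  # estimated emissions in kg CO2e
    return result, emissions_kg


result, emissions_kg = run_with_tracking(toy_workload)
if emissions_kg is None:
    print("No emissions estimate available for this run")
else:
    print(f"Estimated emissions: {emissions_kg:.6f} kg CO2e")
```

The reported figure is an estimate based on measured or inferred power draw and regional grid data, so it is best used for comparing runs rather than as an exact accounting.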

How new policy and regulations could affect AI and sustainability

New and impending climate legislation might make sustainable practices more of a necessity than a choice for many companies. But it's still unclear exactly what that will look like, especially when it comes to AI.

Moving forward, climate risk could become a standard component of risk assessments. The EU AI Act, for example, asks companies to report on the impacts of particularly "high-risk" AI applications. The nonprofit organization Climate Change AI proposed explicitly accounting for AI's climate impacts by classifying high-emissions systems as high risk. Such a designation would trigger transparency requirements, similar to existing rules for financial disclosures, under which AI vendors would have to report greenhouse gas emissions data.

Getting the business side on board with sustainable AI

Explaining the business value of sustainability can help get buy-in from leadership, especially in tightly regulated and slow-moving industries such as banking and telecommunications. AI professionals hoping to make their work more sustainable can emphasize the cost savings associated with energy-efficient models, for example, as well as the risks associated with violating sustainability protocols.

Moreover, companies that genuinely prioritize sustainability are likely to find themselves in a better position over the long term. As regulations evolve, taking a sustainability-first approach will set companies up for success compared with simply playing whack-a-mole with each new policy that comes out. Thus, putting in the upfront effort to build more sustainable AI systems could help avoid the need to make costly changes to infrastructure and processes down the line.

Sustainability commitments can also help companies appeal to talent in a still-competitive tech job market. Candidates are increasingly interested in potential employers' approaches to sustainability and environmental goals. As a result, setting a clear sustainability mission -- and taking the real-world action to back it up -- can help companies attract the talent they need.