Deep learning and neural networks gain commercial footing

Deep learning and neural networks are picking up steam in applications like self-driving cars, radiology image processing, supply chain monitoring and cybersecurity threat detection.

The news these days is full of stories about AI. Lately, we're seeing how deepfake techniques create altered and convincing videos, photos or audio of people and how deep learning and neural networks win at the highly complex strategy board game Go.

Despite these types of applications, companies continue to struggle to apply AI to real-world business problems. In addition, neural networks and deep learning technologies -- as opposed to the more tangible, statistics-based machine learning -- are difficult to understand and explain, creating potential bias, compliance and safety issues. Even so, deep learning and neural networks are being deployed and affecting the bottom line of companies.

Applications run wide and deep

Deep learning shines when performing image analysis, but it also works with other multimedia data sources, including videos, audio files and unstructured text. In fact, the technology can find uses almost anywhere in the enterprise.

Tesla, which embeds fairly advanced driver assistance technology in its cars and has other self-driving car initiatives in the works, makes heavy use of deep learning so its cars can accurately understand the world around them. In addition, hospitals use deep learning to process radiology images, e-commerce sites use the technology to locate similar products, customer service systems use it to analyze customer questions and complaints, and digital assistants understand spoken language with the aid of deep learning.

At Intel, deep learning and natural language processing (NLP) are used by the company's sourcing operation to monitor world events that could affect its supply chain and develop recommendations. In the past, Intel employees manually looked through industry news and economic and market data reports, according to Gopalan Oppiliappan, Intel's group head of advanced insights and enterprise analytics. "It was a manual and time-consuming effort to process the data and respond to supply chain events," he said.

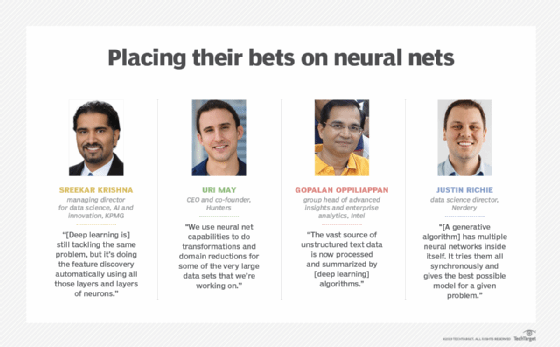

Deep learning allowed Intel to monitor supply chain events in real time. "The vast source of unstructured text data is now processed and summarized by these algorithms," Oppiliappan explained. "And the news related to these events is ranked based on risk." The algorithm also learns from user feedback to fine-tune the risk assessment, he added.
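The ranking-plus-feedback loop Oppiliappan describes can be illustrated with a deliberately simple sketch. This is not Intel's system: the `NewsItem` and `RiskRanker` names, the per-category weighting scheme and all scores are hypothetical, and a plain weight adjustment stands in for whatever learning the real algorithm performs. It assumes an upstream NLP model has already assigned each news item a risk score between 0 and 1.

```python
from dataclasses import dataclass


@dataclass
class NewsItem:
    headline: str
    category: str
    model_risk: float  # 0..1, assumed to come from an upstream NLP model


class RiskRanker:
    """Ranks items by risk and nudges category weights from user feedback."""

    def __init__(self, learning_rate=0.1):
        self.learning_rate = learning_rate
        self.category_weight = {}  # category -> multiplier (default 1.0)

    def score(self, item):
        return item.model_risk * self.category_weight.get(item.category, 1.0)

    def rank(self, items):
        # Highest adjusted risk first.
        return sorted(items, key=self.score, reverse=True)

    def feedback(self, item, was_relevant):
        # Raise the category weight when a user confirms the risk,
        # lower it (never below zero) when the user dismisses it.
        weight = self.category_weight.get(item.category, 1.0)
        step = self.learning_rate if was_relevant else -self.learning_rate
        self.category_weight[item.category] = max(0.0, weight + step)


# Made-up items and scores, for illustration only.
items = [
    NewsItem("Port strike delays shipments", "logistics", 0.60),
    NewsItem("Currency fluctuates after rate decision", "market", 0.58),
    NewsItem("Chip supplier reports factory fire", "supplier", 0.55),
]

ranker = RiskRanker()
before = [item.headline for item in ranker.rank(items)]

# A user flags the supplier story as important twice and dismisses the market story.
ranker.feedback(items[2], was_relevant=True)
ranker.feedback(items[2], was_relevant=True)
ranker.feedback(items[1], was_relevant=False)
after = [item.headline for item in ranker.rank(items)]

print(before)
print(after)
```

After the feedback, the supplier story moves to the top of the list even though its raw model score is the lowest of the three, which is the general effect of letting user feedback fine-tune a risk ranking.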

Intel and other manufacturing companies are also using AI-powered image processing to identify defects in the production process. "And it's not just visual," said Sreekar Krishna, managing director for data science, AI and innovation at KPMG LLP, a professional services firm. "[Manufacturers are] using cameras of different wavelengths, such as infrared, and they use that for discovering anomalies in the wafer."

Intel, Tesla and other companies on the cutting edge are expected to use technologies like deep learning and neural networks, but some very traditional industries, like wineries, are embracing them as well. "Winemakers are using it to look for defects in their bottles," Krishna said. "Previously, the bottles had to be visually checked by people. Now, winemakers are using computer vision to automatically detect the defects."

Companies are just starting to scratch the surface in applying AI to image analysis. "The world around us is pretty visual," Krishna conjectured. "But the proliferation of cameras in our lives is currently very passive -- just recording the images. There's huge, tremendous opportunity to leverage deep learning to move from passive collection to making business sense of the images. It may not be the coolest thing that happens, but it will be a huge opportunity."

Beyond machine learning

Deep learning is also moving into the domain of traditional machine learning algorithms, where the data points are discrete and quantifiable -- and for good reason. It takes time for data scientists to analyze data sets, identify the important features and create the scoring mechanisms that are optimized for the best results. "Even in the best of companies," Krishna noted, "it would take two to three months of experimentation before you can push an algorithm to the production environment."

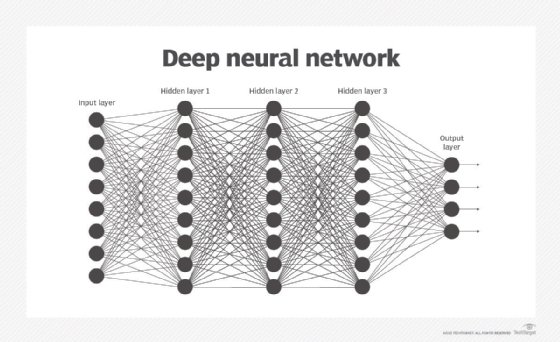

Deep learning takes over some of the work data scientists do when they sift through large numbers of algorithms to produce the best predictive models. "It's still tackling the same problem, but it's doing the feature discovery automatically using all those layers and layers of neurons," Krishna said. "It's replacing some of the older machine learning technologies."

Recommendation algorithms used by e-commerce sites that typically depended on traditional machine learning are now being upgraded with deep learning, resulting in faster deployments and better recommendations and rankings, according to Krishna.

Building a DIY neural network expert system

In cybersecurity, deep learning and neural networks are useful in analyzing large amounts of data at scale. Companies collect vast amounts of data from network sensors, endpoint devices, applications and firewalls. Traditional machine learning spots anomalies and identifies suspicious behavior patterns, but that approach can be problematic because the data is imbalanced, with genuine attacks vastly outnumbered by benign activity, said Uri May, CEO and co-founder of Hunters, a cybersecurity firm based in Israel.
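To see why imbalanced data is a problem, consider a short worked example. The numbers are invented for illustration (10,000 logged events, 10 of them real attacks) and do not come from Hunters. A "detector" that never raises an alert scores almost perfectly on accuracy while catching nothing.

```python
# Hypothetical data set: 1 = attack, 0 = benign.
labels = [1] * 10 + [0] * 9_990


def always_benign(_event):
    """A useless detector that never flags anything."""
    return 0


def accuracy(y_true, y_pred):
    """Fraction of events classified correctly."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred):
    """Fraction of real attacks that were flagged."""
    true_positives = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    actual_positives = sum(y_true)
    return true_positives / actual_positives if actual_positives else 0.0


predictions = [always_benign(event) for event in labels]

print(f"accuracy: {accuracy(labels, predictions):.3f}")  # 0.999
print(f"recall:   {recall(labels, predictions):.3f}")    # 0.000
```

Because benign events dominate, accuracy alone says little. Measures such as recall, which focus on the rare attack class, give a more honest picture.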

"When a breach happens, there are traces everywhere," he explained. "But the signal-to-noise ratio is very low." It takes human ingenuity and a great deal of experience to fully understand what happens in an attack and trace it back to the source -- a situation compounded by a huge shortage in cybersecurity talent.

Hunters, May said, is using the deep domain expertise of its cybersecurity employees to build neural network-powered expert systems. "We're trying to use AI to do more sophisticated and creativity-based tasks," he noted.

When using standard machine learning, data scientists figure out what factors are important and assign weights to them. With deep learning, AI determines the important factors but requires a huge set of correctly labeled training data. "We don't use deep learning," May said, "but we use neural networks. The main differentiator is that deep learning usually requires very little feature engineering." In cybersecurity, by contrast, feature engineering is essential.

Hunters has domain expertise, so it does the feature engineering itself to determine the factors that would help identify an actual attack. Then, neural networks are used to expand on that foundation, adding a layer of analysis.
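The general pattern of hand-built features feeding a small neural network can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not Hunters' implementation: the event fields, the three features, the `TinyNet` class and the handful of labeled examples are all hypothetical.

```python
import math
import random


def engineer_features(events):
    """Hand-picked features an expert might choose (illustrative only)."""
    if not events:
        return [0.0, 0.0, 0.0]
    n = len(events)
    failed = sum(1 for e in events if e["action"] == "login_failed")
    off_hours = sum(1 for e in events if e["hour"] < 6 or e["hour"] >= 22)
    bytes_out = sum(e["bytes_out"] for e in events)
    return [failed / n, off_hours / n, min(bytes_out / 1_000_000, 1.0)]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class TinyNet:
    """One tanh hidden layer and a sigmoid output, trained by plain SGD."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                   for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = sigmoid(sum(w * hi for w, hi in zip(self.w2, h)) + self.b2)
        return h, y

    def predict(self, x):
        return self.forward(x)[1]

    def train(self, data, epochs=500, lr=0.5):
        for _ in range(epochs):
            for x, target in data:
                h, y = self.forward(x)
                d_out = y - target  # cross-entropy gradient at the output
                for j, hj in enumerate(h):
                    d_hidden = d_out * self.w2[j] * (1 - hj * hj)
                    self.w2[j] -= lr * d_out * hj
                    for i, xi in enumerate(x):
                        self.w1[j][i] -= lr * d_hidden * xi
                    self.b1[j] -= lr * d_hidden
                self.b2 -= lr * d_out


# A few made-up labeled feature vectors: 0 = benign, 1 = attack.
labeled = [
    ([0.0, 0.1, 0.05], 0), ([0.05, 0.0, 0.1], 0), ([0.1, 0.2, 0.0], 0),
    ([0.8, 0.9, 0.7], 1), ([0.6, 0.7, 0.9], 1), ([0.9, 0.5, 0.8], 1),
]
net = TinyNet(n_in=3, n_hidden=4)
net.train(labeled)

quiet_host = [
    {"action": "login_ok", "hour": 10, "bytes_out": 20_000},
    {"action": "file_read", "hour": 14, "bytes_out": 20_000},
]
noisy_host = [
    {"action": "login_failed", "hour": 2, "bytes_out": 0},
    {"action": "login_failed", "hour": 2, "bytes_out": 0},
    {"action": "login_failed", "hour": 3, "bytes_out": 0},
    {"action": "upload", "hour": 3, "bytes_out": 900_000},
]
quiet_score = net.predict(engineer_features(quiet_host))
noisy_score = net.predict(engineer_features(noisy_host))
print(f"quiet host: {quiet_score:.2f}, noisy host: {noisy_score:.2f}")
```

The design point is the split of labor: the expert decides what to measure, and the network only has to learn how those few measurements combine, which is why a small number of labeled examples can be enough in a sketch like this.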

"When you look at cybersecurity, there isn't any training data," May explained. "But from our background and expertise, we have a pretty good sense of how attackers operate, what attackers do. Most of the people who work with us have the privilege of being in one of the main services that Israel has for intelligence. That gives us an understanding of how things are operating behind the scenes, and the technology helps us scale that domain expertise."

Even though the amount of labeled training data is low, the total volume of data coming from network logs and other sensors is enormous and can be too large for humans to analyze. "We use neural net capabilities to do transformations and domain reductions for some of the very large data sets that we're working on," May said. "You need the ability to take the data set and look at it in a very narrow and focused perspective so that you can now get threat signals out of it."

Tools of the trade

Deep learning requires huge amounts of data and incurs massive storage and computing costs, said Ramesh Hariharan, co-founder and CTO at AI platform maker LatentView Analytics. "It's more expensive than traditional machine learning," he said. "So it's best to be clear about the use case and expected benefits before you embark on an initiative."

The shortage of experts in deep learning and neural networks can be partly eased by advances in AI tools, including pre-trained models published by Google, Facebook, OpenAI and many other companies, Hariharan said, adding that "[d]eep learning is also available as an API from cloud-based platforms" -- for example, Amazon, Google and Microsoft.

"For a faster approach, new tools such as Google's AutoML have emerged," he said. These tools provide recommendations for the right architecture to adopt for deep learning so that developers don't have to experiment with a lot of different approaches.

"Deep learning is a hard thing to conceptualize," said Justin Richie, data science director at digital services consultancy Nerdery. But a number of companies are working on the problem. IBM released an open source toolkit, AI Explainability 360, which includes algorithms to interpret and explain all major types of machine learning used today as well as some common deep learning systems, including image analysis and neural network classifications.

Beyond the beyond

The next step in the evolution of AI is generative algorithms, according to Richie. "It has multiple neural networks inside itself," he said. "It tries them all synchronously and gives the best possible model for a given problem."

One example of this approach is generative adversarial networks, which can be used to create deepfake videos. Deep neural networks have also learned to play games like Go, previously thought to be too difficult for computers to handle.

Generative algorithms could be used to automatically learn how to translate languages even without the benefit of the known translations of words or entire texts. "We're still at the early stages of this learning," Richie said. "Businesses are just starting to get their heads around it."