Choosing the right chip foundation for AI-optimized hardware

Every enterprise is trying to implement AI and machine learning. But, before AI, before clean data and before platform comparison, enterprises need to find the best hardware to support AI.

Companies need to evaluate hardware before considering how to utilize AI software and products. This hardware evaluation needs to include memory and processing requirements and whether conventional CPUs or more specialized GPUs and AI chips are necessary. Your initial choice in hardware -- and, most importantly, your chip selection -- will branch out and affect your long-term AI strategy.

CPUs

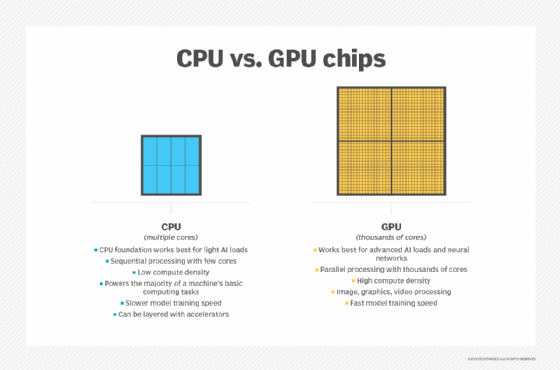

CPUs power the majority of a machine's basic computing tasks. Before specialized processing units, like GPUs and tensor processing units, gained traction in the machine learning field, CPUs did most of the heavy lifting. However, one of the most important factors in choosing AI-optimized hardware is processing speed. A CPU-based machine can take longer than a GPU-based one to train AI models because it has fewer cores and doesn't take advantage of parallel processing the way GPUs do.
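As a rough illustration of the core-count argument, the sketch below assumes an idealized workload that divides evenly across cores, with no memory or communication overhead. The function name and every number in it are hypothetical, chosen only to show the arithmetic and not measured from any real chip.

```python
def ideal_runtime_seconds(total_ops: float, cores: int, ops_per_core_per_sec: float) -> float:
    """Runtime of a perfectly parallel workload (an idealization, not a benchmark)."""
    if cores <= 0 or ops_per_core_per_sec <= 0:
        raise ValueError("cores and ops_per_core_per_sec must be positive")
    return total_ops / (cores * ops_per_core_per_sec)


# Hypothetical workload size and chip figures, for illustration only.
WORK_OPS = 1e12

# A few fast cores (CPU-like) versus many slower cores (GPU-like).
cpu_seconds = ideal_runtime_seconds(WORK_OPS, cores=16, ops_per_core_per_sec=1e9)
gpu_seconds = ideal_runtime_seconds(WORK_OPS, cores=4096, ops_per_core_per_sec=2.5e8)

print(f"CPU-like: {cpu_seconds:.2f} s, GPU-like: {gpu_seconds:.2f} s")
```

Real workloads rarely parallelize this cleanly, so the gap in practice depends on how much of the training job can actually run in parallel.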

Now, developers and vendors are creating multicore CPUs and layering accelerators -- pieces of software that prioritize jobs from specific applications and allocate compute resources accordingly -- atop existing CPUs in order to boost performance, processing speed and memory. But they still generally can't match the processing power of GPUs.

Though the power of one CPU chip can't support advanced AI workloads, Gadi Singer, VP of AI Products Group and general manager of architecture at Intel, said that starting with a CPU chip foundation can be an excellent strategy.

"If you want to do light deep learning or you want to do a mix of deep learning and general purpose, CPU is the best machine to do that," Singer said.

If your machines need to function for a variety of purposes, a CPU foundation that you can accelerate with software can be a flexible tool.

"CPUs are for people who don't have a large IT department. They want to focus on their differentiation and want the software underneath them [to] do the work," Singer said.

However, adding accelerators to your multicore CPUs isn't always optimal for AI. If a company wants to run advanced deep learning and neural networks, or simply has dedicated machines to run AI without the need for general-purpose processing, then a system of GPUs may work in its favor.

GPUs

If a company knows its AI strategy includes advanced neural networks and AI algorithms, a CPU-based system would need numerous accelerators to match the core count and processing speed of a GPU.

The current industry standard is to build your AI system using GPUs. GPUs are optimized to render graphics and images but have the speed and computational power to support AI, machine learning and neural network development. Nvidia, Intel and Arm are some of the primary GPU vendors.

"Although for classic machine learning workloads CPUs are usually enough, in order to run compute-intensive deep learning tasks, companies need to factor in GPUs and advanced AI-specific hardware," said Omri Geller, CEO of acceleration platform Run:AI, based in Tel Aviv, Israel.

Deep learning requires parameter tuning and constant iteration, so training large models on large data sets demands both high processing power and a fast processing rate.
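A back-of-the-envelope sketch shows how tuning and iteration multiply the work. It assumes training cost scales with the number of samples, the number of passes over the data (epochs) and the number of tuning runs. All names and figures are hypothetical placeholders, not measurements.

```python
def total_sample_passes(num_samples: int, epochs: int, tuning_trials: int) -> int:
    """Total samples processed across every epoch of every tuning run."""
    return num_samples * epochs * tuning_trials


def estimated_hours(sample_passes: int, samples_per_second: float) -> float:
    """Wall-clock hours at a given (assumed constant) training throughput."""
    if samples_per_second <= 0:
        raise ValueError("samples_per_second must be positive")
    return sample_passes / samples_per_second / 3600


# Hypothetical project: 1 million samples, 20 epochs, 10 tuning runs.
passes = total_sample_passes(num_samples=1_000_000, epochs=20, tuning_trials=10)

# Hypothetical throughputs for a slower and a faster machine.
slow_hours = estimated_hours(passes, samples_per_second=500)
fast_hours = estimated_hours(passes, samples_per_second=20_000)

print(f"{passes:,} sample passes: {slow_hours:.1f} h vs. {fast_hours:.1f} h")
```

Because the three factors multiply, a modest increase in any one of them can turn a job that fits in an afternoon into one that takes days on slower hardware.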

"GPUs are very effective for training. If you have enough deep learning [models that you're training], use an architecture that [is] designed for that: GPUs," Singer said.

How to choose

If there are roles for both CPUs and GPUs, developers may wonder where each fits as they build their AI-optimized hardware infrastructure.

Both Singer and Geller encourage evaluating your machine learning and AI goals and building your hardware infrastructure to match. If you want a basic AI strategy, choosing CPUs with accelerators and software as needed could be enough to power your machine's general-purpose computing and a light AI workload.

If you want to develop deep learning AI, choosing GPUs is likely to deliver the best results. Because GPUs are fairly new to enterprise AI use cases, they can get pricey. But, if you want to train your own deep learning and AI models, the processing efficiency and speed of GPUs might be worth it.

Despite the variety of hardware options, choosing the best fit for your company comes down to optimizing computational resources, setting realistic goals and recognizing what software you need to support.
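One way to picture what optimizing computational resources can mean for GPUs is to compare fixed per-team quotas with a shared pool. The sketch below is a simplified model under stated assumptions: demand is counted in whole GPUs at a single moment, and the team demands, quotas and function names are all hypothetical.

```python
def static_utilization(demands: list[int], quotas: list[int]) -> float:
    """Share of GPUs busy when each team can use only its own fixed quota."""
    if len(demands) != len(quotas):
        raise ValueError("demands and quotas must have the same length")
    busy = sum(min(demand, quota) for demand, quota in zip(demands, quotas))
    return busy / sum(quotas)


def pooled_utilization(demands: list[int], total_gpus: int) -> float:
    """Share of GPUs busy when all teams draw from one shared pool."""
    return min(sum(demands), total_gpus) / total_gpus


# Hypothetical snapshot: three teams, four GPUs reserved for each.
team_demands = [8, 1, 0]   # GPUs each team could use right now
team_quotas = [4, 4, 4]    # GPUs each team is statically assigned

static_share = static_utilization(team_demands, team_quotas)
pooled_share = pooled_utilization(team_demands, sum(team_quotas))

print(f"static quotas: {static_share:.0%}, shared pool: {pooled_share:.0%}")
```

In this toy snapshot, the busiest team is capped by its quota while other teams' GPUs sit idle, which is the kind of mismatch that drives up cost and training time.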

"Infrastructure usually isn't optimized to share resources efficiently between all the different AI teams, data scientists and engineers working on different deep learning tasks," Geller said. "This means companies often end up spending a lot more money on compute, while training takes even longer because of suboptimal GPU allocation and utilization."