ChatGPT vs. GPT: How are they different?

Although the terms ChatGPT and GPT are both used to talk about generative pre-trained transformers, there are significant technical differences to consider.

ChatGPT and GPT are natural language processing tools introduced by OpenAI, but their technological capabilities and pricing differ. To complicate matters, the term GPT also refers to any product that uses any generative pre-trained transformer -- not just the versions that come from OpenAI.

Here are their chief differences:

- GPT is the underlying technology -- called a language model -- designed to process and generate language. ChatGPT is the web interface -- a chatbot application -- that enables users to interact with a GPT model.

- The U.S. copyright office has decided that GPT is a generic term that anyone can use for chat, integration into coding tools and other use cases. In contrast, ChatGPT is a copyrighted brand OpenAI uses to access its line of large language models using a chat interface.

- One of the cliches used by definitions is that GPT is the engine inside a car and ChatGPT is the car.

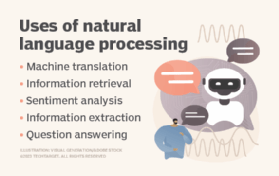

Before discussing the differences between GPT and ChatGPT, it's useful to understand how these natural language processing tools were developed.

History of GPT and ChatGPT

OpenAI revealed the first GPT in 2018. More capable versions followed quickly, including GPT-2 in 2019, GPT-3 in 2020, GPT-4 in 2023 and GPT-4o in 2024. Newer versions are only accessible using an API, while GPT-2 is still open source software.

OpenAI introduced ChatGPT as a consumer-facing service in late 2022. In a month, it attracted more than 50 million users, making it one of the fastest-growing consumer services in history.

"While technological improvements have been advancing steadily for a few years now, OpenAI and the release of ChatGPT specifically made large language models widely available," said Donncha Carroll, a partner at Lotis Blue Consulting who leads the firm's data science center of excellence. "The real genius was providing a simple-to-use interface making the technology accessible to the average person."

This article is part of

What is GenAI? Generative AI explained

The first version of ChatGPT was built on GPT-3.5, although paying subscribers can also gain access to GPT-4 through the same ChatGPT interface. OpenAI said its Chat API can write emails and code, answer questions about documents, create conversational agents, tutor and translate. GPT can do many of these same things, including completing text, writing code, summarizing text and generating new content.

OpenAI claims GPT-4 can answer questions more accurately than prior versions, as measured by scores on tests such as the SAT, LSAT and Uniform Bar Exam. It's also more expensive to use than prior versions.

In the early days, OpenAI reported on the number of features in its GPT models as a proxy metric for capabilities. For example, GPT had 117 million parameters, GPT-2 had up to 1.5 billion parameters and GPT-3 had up to 175 billion parameters. However, bigger isn't always better. In fact, bigger might be slower and more expensive to run. OpenAI decided not to publicly report model feature sizes for GPT-3.5 or GPT-4 models. Also, OpenAI charges more for larger models.

There is a wide range of GPT-based models and services for processing text, transcribing audio (Whisper) and generating images (Dall-E). Initially, ChatGPT only answered typed questions entered into a prompt. However, OpenAI has added ChatGPT support for pictures and video. The API access to both tools opens new opportunities for enterprises to customize and enhance their offerings.

"Enabling API access will enable enterprises to more easily use ChatGPT in their own products and environments," said Lori Witzel, senior product marketing manager at Flexera. In addition, fine-tuning can let models learn more about business-specific domains.

What is the difference between ChatGPT and GPT?

Let's explore how the two terms and their salient differences are sometimes confused.

Terminology differences

The term ChatGPT is often used to describe the process of adding chat capabilities to a product. In its public-facing communications, for example, OpenAI describes a ChatGPT API that powers new services from Instagram, Snap, Quizlet and Instacart.

"The ChatGPT app for Slack combines the knowledge found in Slack with the intelligence of ChatGPT, making AI more accessible in a place we're already working," said Jackie Rocca, product lead at Notion. The app is integrated into the natural workflow and provides instant conversation summaries, research tools and writing assistance directly into Slack.

The generic term GPT is also used at the end of product names to advertise the introduction of new AI capabilities. These might refer to generative pre-trained transformers in a generic sense rather than a specific OpenAI model. Google first developed the transformer technology that underlies GPT but has been less consistent in branding its various implementations, including BERT, Lamda and Palm. Google's first ChatGPT-like implementation, Bard, has been rebranded as Google Gemini and was only pushed to market after the success of ChatGPT.

Likewise, Microsoft has adopted the term copilot to signify the embedding of GPT-powered code completion capabilities into GitHub and task completion into its productivity tools.

Technical differences

OpenAI's characterization of the technical differences between ChatGPT and GPT is also messy. There is no specific ChatGPT model, although OpenAI notes that o3-mini, o1, GPT-4o and GPT-4.5 models power ChatGPT. Its pricing page includes different service categories for GPT-4; Chat, which lists only GPT-3.5 Turbo; InstructGPT; and embedding, image and audio models.

There are technical differences between OpenAI's many models, some of which apply to ChatGPT and some that don't. First, companies can only fine-tune GPT-4, GPT-4o, GPT-4o mini and GPT-3.5 Turbo models. This means developers can't customize the GPT-4.5 models to work more efficiently with their own data. Fine-tuning is the process of submitting queries and responses to a pre-trained model to improve the accuracy of questions likely to be encountered in a particular use case, such as a call center.

Another big difference is the age of the training data. GPT-4.5, GPT-4o, o3-mini and o1 were all trained on data up to September 2023, while GPT-4 was trained on data up to November 2023. Davinci has a knowledge cutoff of September 2021, while GPT-3.5 Turbo has a knowledge cutoff of August 2021. This is important because the newer models might be more capable of understanding and responding to queries about current events.

The last big difference is that OpenAI introduced ChatML, a new API query method for its ChatGPT-capable models. In the older API, an app sends a text string to the API; however, this also allowed hackers to send malformed queries for various kinds of attacks. In the new ChatML, each message contains a header identifying the source of each string and its contents. The contents are only text but could include data such as images, 3D objects or audio in the future.

Pricing differences

There are also some noteworthy pricing differences between the services behind ChatGPT and GPT models. The ChatGPT service has three tiers:

- A free version.

- A ChatGPT Plus subscription plan at $20 per month that improves performance and adds access to more recent models.

- A ChatGPT Pro plan at $200 per month that increases access limits for the latest models and new deep research features.

Currently, the paid services are trained on data that is as old as the free version.

GPT-4o mini costs $0.15 per million tokens input and $0.60 per million tokens output. The o3-mini is $1.10 per million tokens input and $4.40 per million tokens output. In contrast, o1-pro costs $150 per million tokens input and $600 per million tokens output.

Other GPT services include embedding for classifying words for as little as $0.02 per million input and output tokens. Whisper transcription is $0.006 per minute, while pricing for image generation for larger, high-quality images is $0.08 per image for Dall-E 3.

What's the future of GPT?

The ChatGPT and GPT ecosystem of development tools and services continues to evolve, and software vendors are excited about the road ahead.

"We expect GPT and ChatGPT to keep getting smarter, more efficient and have fewer issues as more people embrace AI and the technology learns more," Rocca said.

Recent multimodal innovations to support voice within ChatGPT will make it significantly more accessible, said Tony Jiang, founding principal engineer at Brevian. "This should also reduce user friction and speed up the AI-human interaction. This has the potential to greatly improve the learning experience for everybody, from kindergarteners to experienced professionals," he said. "But other important uses are likely, too, as ways to provide entertainment or even for psychological well-being."

But it's also essential to keep the evolution of these offerings in context.

"It will become ever more seemingly intelligent but still will not actually understand the prompt nor what it's saying," said Aaron Kalb, entrepreneur in residence at Accel.

These models are a bit like a person responding to questions in a social setting without thinking. More competent services might require building more sophisticated models of meaning informed by business-specific data to eliminate inaccuracies.

Editor's note: This article was updated in March 2025 to update model and pricing information and to improve the reader experience.

George Lawton is a journalist based in London. Over the last 30 years, he has written more than 3,000 stories about computers, communications, knowledge management, business, health and other areas that interest him.