Getty Images/iStockphoto

Compare GPUs vs. CPUs for AI and machine learning use cases

Choosing which AI hardware option makes more sense for an AI or machine learning project depends on multiple factors, including speed, memory and cost.

One of the most critical decisions when deploying AI workloads is whether to power them with a graphics processing unit or a central processing unit. The choice has implications not just for workload performance, but also for cost-effectiveness.

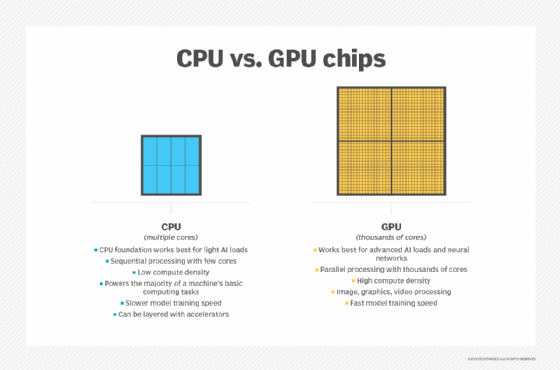

GPUs and CPUs are computer processors -- the hardware that processes and executes the instructions that drive a computer. CPUs are general-purpose processors that mainly use sequential processing, while GPUs are designed for the parallel processing of graphics and other complex tasks. Both GPUs and CPUs can power AI and machine learning (ML) systems.

Understanding the differences between GPUs and CPUs helps organizations decide which processing unit best supports different AI workloads. In addition to considering the core distinctions between these options, organizations must also consider the availability of GPUs vs. CPUs in the cloud, as well as the implications of custom silicon and AI accelerators for deploying AI and ML workloads.

Parallelization in GPUs vs. CPUs

Although GPUs and CPUs are both computer processors, they are different mainly because they are optimized for different types of processing.

A GPU is designed to perform a large number of computations in parallel. This means that rather than executing instructions one by one, a GPU can complete many computations -- typically, thousands -- simultaneously. A GPU can do this because it contains thousands of cores, which are the components within a processor that execute instructions.

In contrast, CPUs are designed mainly for sequential processing. This means that they complete one instruction before moving on to the next one.

However, modern CPUs are typically capable of some level of parallel processing. This is because most of today's CPUs include multiple cores, and each core can process instructions at the same time as other cores. However, a CPU usually contains no more than a few dozen cores, so its ability to support parallelization is much less than that of a GPU with thousands of cores.

Memory in GPUs vs. CPUs

Both GPUs and CPUs have memory that is built into the processor. This differs from system memory, otherwise known as RAM. While both types of processors have built-in memory that enables them to store frequently accessed data, CPUs usually have less of this type of memory.

As a result, it might take a CPU longer to complete tasks that require repeated reference to the same data. This is because the CPU might have to read/write the data from system memory, which takes longer than accessing data stored in the CPU's built-in cache memory.

GPUs vs. CPUs for AI and machine learning

A GPU or a CPU can handle most AI and ML workloads. However, GPUs tend to be faster because AI and ML workloads often require the execution of many instructions in parallel.

Consider model training and inference, which are key to most AI workloads that use a machine learning approach:

Model training

To train a machine learning model, developers typically expose it to a large volume of data and have it parse the data to identify relevant relationships or patterns. If the model can process many different parts of the data set in parallel, rather than having to parse item by item, it will complete the training process much faster.

Inference

Inference, the process of having a trained model make decisions based on new input, is usually faster if the model can interpret and respond to different parts of the prompt simultaneously. This is possible when the model can access hardware that supports parallel processing.

When to choose a CPU instead of a GPU

Although GPUs are generally faster and more efficient than CPUs for AI and ML workloads, there are four instances where a CPU might be preferable:

1. Small data sets

The smaller the amount of data a model needs to process during training and inference, the less benefit a GPU offers. Thus, a GPU might be unnecessary for a scaled-down model that only needs to handle a small range of parameters.

2. Rule-based AI

Not all AI workloads use machine learning. Some instead use a rule-based approach, which means that they process information based on predefined, sequential instructions. Rule-based AI often doesn't benefit from GPUs because it doesn't need to perform large-scale parallel processing.

3. Edge workloads

When deploying AI workloads on edge infrastructure instead of a central data center, it might be cost-prohibitive or impossible to use GPUs because the edge hardware devices might not support them. In contrast, a CPU can run in virtually any type of device.

4. Cost-optimization goals

On average, CPUs cost less than GPUs. Using CPUs for AI might help save money when a project's budget is tight. However, GPUs might be more cost-effective overall if parallel processing enables the project to proceed faster. This is especially true in cases where an organization can repurpose GPUs for other tasks after one project is complete, or where it rents GPUs -- such as by using GPU-enabled cloud server instances -- rather than investing in expensive GPU hardware outright.

3 recent updates in AI hardware options

The guidance above is generally accurate when selecting between GPUs and CPUs for AI and ML workloads. However, additional options to consider include cloud-based hardware, custom silicon and AI accelerators:

1. Cloud-based vs. on-premises GPUs

Traditionally, AI practitioners had to purchase and install GPUs on their own servers if they wanted to use them for AI and ML workloads. Today, cloud service providers offer an increasingly wide selection of cloud server instances that include GPUs.

These options make GPUs even more compelling as a hardware option for AI and machine learning because they provide access to GPU-enabled servers on a pay-as-you-go basis. Thus, even if standard CPUs could support a workload, it might be more straightforward, faster and -- in some cases -- more cost-effective to use cloud-based GPU servers.

2. Custom silicon

Some major cloud service providers have developed custom silicon hardware, such as Graviton processors from Amazon or tensor processing units (TPUs) from Google. These are specialized types of processors -- some of which are CPUs, and others are application-specific integrated circuits, or ASICs -- that, in general, are available only through each cloud provider's platform.

Not all custom silicon is optimized for AI -- although some, like Google TPUs, is. However, cloud servers equipped with these processors typically are less expensive than traditional cloud servers, which use x86-based CPUs from vendors like Intel and AMD. Plus, some custom silicon CPUs have more cores than standard CPUs. For instance, some of Amazon's Graviton processors feature up to 96 cores. Therefore, they can achieve higher levels of parallelization.

Given these factors, AI and ML workloads might run faster and prove more cost-effective when using custom silicon hardware instead of conventional CPUs. In some cases, this factor might tip the scales in favor of using a CPU instead of a GPU.

3. AI accelerators

A growing variety of AI accelerators is becoming available. Unlike generic GPUs -- which were designed originally for rendering video, and often still target video rendering as a top use case -- AI accelerators are optimized for AI and machine learning alone. Moreover, many focus on specific types of AI and ML use cases or priorities, like minimizing latency or supporting operations on edge infrastructure.

AI accelerators include, but are not limited to, AI-centric custom silicon hardware from cloud service providers, like TPUs. GPUs might also be considered a type of AI accelerator. However, most other AI accelerators are categorized as either ASICs or field-programmable gate arrays, both of which are specialized processors.

To support a specialized AI use case beyond generic model training or inference, an AI accelerator tailored for that use case might offer a faster, more cost-effective option than either a CPU or a generic GPU.

Chris Tozzi is a freelance writer, research adviser, and professor of IT and society. He has previously worked as a journalist and Linux systems administrator.