ÐндÑей ЯланÑкий -

Bias in machine learning examples: Policing, banking, COVID-19

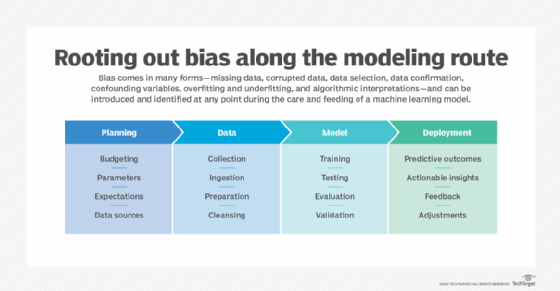

Human bias, missing data, data selection, data confirmation, hidden variables and unexpected crises can contribute to distorted machine learning models, outcomes and insights.

Relying on tainted, inherently biased data to make critical business decisions and formulate strategies is tantamount to building a house of cards. Yet, recognizing and neutralizing bias in machine learning data sets is easier said than done because bias can come in many forms and in various degrees.

Among the more common bias in machine learning examples, human bias can be introduced during the data collection, prepping and cleansing phases, as well as the model building, testing and deployment phases. And there's no shortage of examples.

Some U.S. cities have adopted predictive policing systems to optimize their use of resources. A recent study by New York University's AI Now Institute focused on the use of such systems in Chicago, New Orleans and Maricopa County, Ariz. The report found that biased police practices are reflected in biased training data.

"[N]umerous jurisdictions suffer under ongoing and pervasive police practices replete with unlawful, unethical and biased conduct," the report observed. "This conduct does not just influence the data used to build and maintain predictive systems; it supports a wider culture of suspect police practices and ongoing data manipulation."

A troubling aspect is the feedback loop that has been created. Since police behavior is mirrored in the training data, the predictive systems anticipate that more crime will occur in the very neighborhoods that have been disproportionally targeted in the first place, regardless of the crime rate.

Data bias often results in discrimination -- a huge ethical issue. At the same time, organizations of all types across various industries need to make distinctions between groups of people -- for example, who are the best and worst customers, who is likely or unlikely to pay bills on time, or who is likely or unlikely to commit a crime.

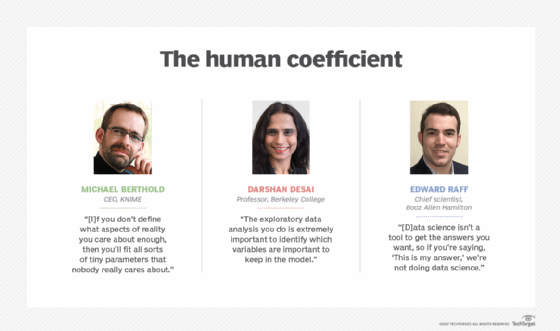

"It's extremely hard to make sure that you have nothing discriminatory in there anymore," said Michael Berthold, CEO of data science platform provider KNIME. "If I take out where you come from, how much you earn, where you live, your education [level] and I don't know what else about you, there's nothing left that allows me to discriminate between you and someone else."

Missing critical data

Machine learning models are commonly used in cybersecurity systems to identify anomalous behavior, mislead crooks, do threat modeling and more. Since bad actors must continually innovate to avoid detection, they're constantly changing their tactics.

Banks set transaction thresholds for account activity so account holders can be notified of a sizable fund transfer in case the transaction is fraudulent. Knowing this, a group of hackers successfully stole a total of $1 billion by taking subdollar amounts from millions of account holders, according to Ronald Coifman, Phillips professor of mathematics and computer science at Yale University. The transactions went unnoticed because they were too subtle for the existing cybersecurity systems to detect.

The elephant in the room is false positives. Bank customers don't mind receiving alerts about sizable transactions, even if they initiated the transactions themselves. But, if every transaction resulted in an automatic alert, no matter how small, then customers might develop alert fatigue, and a bank's cybersecurity team may drown in excess noise. Taking all that into account, the bank implemented a new system that uses different algorithms -- at least one of which combines linear algebra with inferential geometry -- to better detect and respond to smaller types of transactions.

"Data might be missing due to methodological errors," said Edward Raff, chief scientist at Booz Allen Hamilton. "You're trying to build a representative model, but there's something you forgot to take into account that you weren't aware of. So, when you collect the data, you didn't have any procedure or plan to collect from some subpopulation."

One way to avoid the problem of missing data is to problem-solve collaboratively with people who have different backgrounds. As a result, each expert may tend to have a different perspective on the problem and suggest variables that the others might not consider.

Bias in selecting data

Medical and pharmaceutical researchers are desperately trying to identify approved drugs that can be used to combat COVID-19 symptoms by searching the growing body of research papers, according to KNIME's Berthold. "The problem that you have … the publications you have are mostly positive. Nobody publishes terrible results," he explained. Researchers, therefore, can't factor in the results of many drug testing failures.

Data selection figures prominently among bias in machine learning examples. It occurs when certain individuals, groups or data are selected in a way that fails to achieve proper randomization. "It's easy to fall into traps in going for what's easy or extreme," Raff said. "So, you're selecting on availability, which potentially leaves out a lot of things that you really care about."

Confirmation bias

In today's digital world, more business professionals are using data to prove or disprove something. But, instead of forming a hypothesis and testing it like data scientists are trained to do, it's human nature to cherry-pick data that aligns with the individual's point of view. Marketing and political research are obvious examples.

Confirmation bias also seeps into data sets in the form of human behavior. Individual U.S. citizens, for instance, are aligning with one of two COVID-19 tribal behaviors. One group willingly complies with the face mask mandate, while the other rebels against it. Individuals in either group can "prove" the correctness of their position using news stories and research that advances the individual's point of view. Since the face mask issue has been politicized, that issue is also making its way into data sets.

Data scientists can minimize the likelihood of confirmation bias in machine learning examples by being aware of its possibility and working with others to solve it. Some business leaders, however, sometimes reject what the data shows because they want the data to support whatever point they're trying to make. In some cases, data scientists have to choose between losing their jobs or torturing the data into saying whatever an executive wants it to say.

"That can happen unfortunately," Raff noted. "[D]ata science isn't a tool to get the answers you want, so if you're saying, 'This is my answer,' we're not doing data science."

Confounding variables

Researchers Alessandro Sette and Shane Crotty wrote in Nature Reviews that "[p]reexisting CD4 T cell memory could … influence [COVID-19] vaccination outcomes." And they suggested that "preexisting T cell memory could also act as a confounding factor. … For example, if subjects with preexisting reactivity were assorted unevenly in different vaccine dose groups, this might lead to erroneous conclusions."

A confounding or hidden variable in machine learning algorithms can negatively impact the accuracy of predictive analytics because it influences the dependent variable.

"Having [a] whole correlation matrix before the predictive modeling is very important," Berkeley College professor Darshan Desai explained. "The exploratory data analysis you do is extremely important to identify which variables are important to keep in the model, which are the ones that are highly correlated with one another and causing more issues in the model than adding additional insight."

A confounding variable, Raff added, can be one of the more difficult bias in machine learning examples to fully resolve because data scientists and others don't necessarily know what the external factor is. "That's why it's important to have not just data scientists, but domain experts on the problem as well," he reasoned.

Overfitting models

The past is not necessarily indicative of the future, yet predictive models use historical data to predict future events. For the last few months, some researchers have been trying to predict COVID-19 impacts in one location based on research conducted elsewhere in the world.

"Generalization," KNIME's Berthold explained, "means I'm interested in modeling a certain aspect of reality, and I want to use that model to make predictions about new data points. And, if you don't define what aspects of reality you care about enough, then you'll fit all sorts of tiny parameters that nobody really cares about. If you have all the other problems under control, [overfitting is] fairly easy to control because, when you train models, you have a totally independent sample you use for testing."

The independence of validation test data can sometimes be questionable. A recent study, for example, developed a risk score for critical COVID-19 illness based on a total population of 2,300 individuals. The training data represented 1,590 patients with lab-confirmed COVID-19 diagnoses who were hospitalized in one of 575 hospitals between Nov. 21, 2019, and Jan. 31, 2020. The test data represented 710 individuals from four sources, three of which had follow-up through Feb. 28, 2020.

A summary of the report, published by Johns Hopkins Bloomberg School of Public Health, noted: "The data for development and validation cohorts were from China, so the applicability of the model to populations outside of China is unknown. It is unclear whether the authors corrected for overfitting."

One way to recognize overfitting is when a model is demonstrating a high level of accuracy -- 90%, for example -- based on the training data, but its accuracy drops significantly -- say, to 55% or 60% -- when tested with the validation data.