AI regulation: What businesses need to know in 2026

Numerous local, regional and national AI regulations can help businesses govern AI, foster innovation and mitigate potential risks -- as long as their differences are understood.

AI is quickly proving to be one of the most disruptive and powerful technologies of the 21st century. AI agents, systems and platforms now enable businesses to apply vast amounts of historical and real-time data to make precise decisions, find relationships and opportunities, spot anomalies and create dynamic content on demand. AI can bolster enterprise security, improve business efficiency, drive revenue and vastly enhance customer experiences.

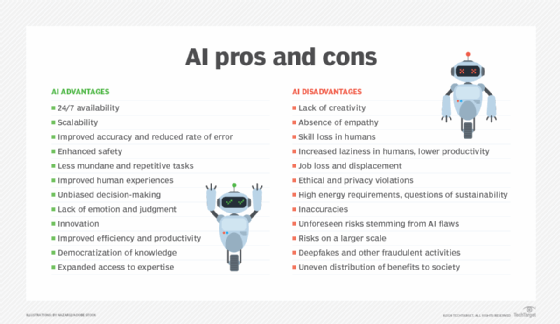

But there are downsides to these exciting capabilities. AI can hallucinate and exhibit bias. It can perform unexpected actions and be easily used for a wide range of malicious purposes. AI's faults can expose a business and its brand to compliance and legislative violations. Poor AI behaviors are often costly to executives, the organization and ultimately the customers or users. Just consider the life-threatening implications of a medical AI tool making improper diagnoses, recommending incorrect procedures or failing to note critical drug interactions.

With the rapid evolution in AI capabilities, there's a growing need for regulations that govern AI. Regulations can foster ongoing AI innovation while managing and mitigating potential risks.

Why AI regulation is necessary

The regulation of artificial intelligence establishes policies and laws intended to govern the creation and use of AI systems. While many industry verticals, such as the healthcare or financial industries, sponsor and support the creation of standards or governance principles, the broad adoption and powerful capabilities of AI demand regulation by the public sector, namely government bodies.

AI regulation can involve numerous areas of business operations, including the following:

- AI's technical implications in supporting IT infrastructure, such as data centers.

- Economic aspects of AI, like energy use and costs for regions where AI data centers are located.

- Legal aspects of AI as it pertains to business risks, data security and privacy, as well as preventing the creation and use of AI for illegal purposes.

- Behavior and performance of AI systems, such as their accuracy and explainability.

- Ethical use of AI, such as mitigating bias in machine learning algorithms.

- Human oversight and control of AI.

- Limits on AI, such as restricting AI superintelligence (an AI that greatly exceeds human capabilities).

AI regulations can be created, implemented and enforced at most public sector levels, including state/province, federal/national or regional levels such as the EU, Organization for Economic Co-operation and Development (OECD) or African Union. Governing bodies are racing to understand and stay ahead of the ever-accelerating development of AI. In the U.S., federal agencies introduced 59 AI-related regulations in 2024 -- more than twice the number introduced in 2023, according to Stanford University's 2025 AI Index Report. Further, the Business Software Alliance (BSA) reported that U.S. states considered almost 700 legislative proposals covering a range of issues related to AI in 2024, compared to just 191 bills in 2023.

AI regulation advantages and challenges

Ideally, the purpose of AI regulation is to foster the development of AI and its supporting technologies while establishing legal frameworks and safeguarding the rights, freedoms and safety of users. AI regulation can promote the following:

- Ethical AI. Users can weaponize AI by using it to create deepfakes or launch cunning cyberattacks. Regulation can create a framework for ethical AI development and use, preventing potentially dangerous or illegal practices.

- Data privacy and security. AI can access enormous amounts of data. This data often contains sensitive and personally identifiable information, such as patient health records or background information about loan applicants. Regulation can ensure data is secure, used in acceptable ways and safeguarded from inappropriate disclosure.

- Responsibility and its limitations. Regulations can clarify who is responsible or liable when AI systems make mistakes or cause undesirable outcomes, such as improper medical diagnoses leading to patient harm. Regulation can also establish responsibility limitations based on an organization's documented adherence to best practices and prevailing regulations.

- Fairness and transparency. AI is only as good as the algorithms and data it uses. Regulation can ensure AI developers take steps to detect and mitigate bias to create fair and non-discriminatory outcomes. Regulation can also help promote transparency and explainability in machine learning models and AI systems, thereby garnering trust in AI decision-making that can lead to broader adoption, user satisfaction and investor confidence.

- Human in the loop. As trust in AI grows and human intervention in AI decisions wanes, users might have no recourse if, for instance, an AI outcome results in an unfairly biased decision. Human-in-the-loop requirements can ensure human oversight and support are always available as part of AI system operation.

Despite the potential benefits of AI regulation, business leaders and government policymakers must also carefully consider the challenges facing AI regulation, including the following:

- Costs of regulation. Regulations can impose costs on businesses, such as record-keeping, monitoring and auditing. The more complex and comprehensive the regulations, the more costly it is to ensure compliance. Larger enterprises can potentially absorb these costs more easily than smaller businesses and startups, putting new businesses at a financial disadvantage in AI development.

- Limited innovation. Regulation rarely strikes an ideal balance and can often be too loose or too restrictive. Overly restrictive AI regulations can stifle AI system innovation through excessive liabilities or exceedingly costly monitoring and documentation demands.

- Inapplicable or ineffective regulation. Lawmakers aren't AI developers or business leaders. Consequently, lawmakers typically have a limited understanding of AI technologies and supporting infrastructure. Dependence on input from special interest groups, like industry lobbyists, can result in inadequate regulation.

- Regulatory obsolescence. It can take years for a government to debate, create, refine and ratify regulations, and years more to implement those regulations and impose compliance deadlines for businesses. Given the incredible pace of AI development and adoption, the capabilities of tomorrow's AI systems might far outpace the intended purpose of AI regulations by the time they come into force.

- Inflexible rules. Regulations are typically rigid and often impose a single government solution on a set of complex and diverse problems. Consequently, regulations might not apply uniformly to different AI designs, industry verticals or AI use cases. This can create burdens for some organizations while possibly allowing regulatory loopholes for others.

U.S. AI regulations

AI regulation in the U.S. is currently fragmented, with no overarching or comprehensive federal legislation to guide or limit AI development. U.S. AI regulation in 2026 encompasses a mix of executive orders (EO), existing laws, policies from individual federal agencies and state-level legislation.

President Biden, for example, signed EO 14110 for "Safe, Secure and Trustworthy Development and Use of Artificial Intelligence" in October 2023. The order was intended to address various AI issues, such as standards for critical infrastructure, AI-enhanced cybersecurity and federally funded biological synthesis projects. In effect, it seemed that federal regulation was coming. President Trump, however, rescinded the Biden executive order in January 2025, signaling the current administration's preference for deregulating AI and favoring innovation over guardrails.

At the federal agency level, agency leaders have issued guidance and position statements on AI. For example, the U.S. Department of Justice, Federal Trade Commission and other agencies issued a joint statement in 2023 asserting that current legal frameworks, such as those for consumer protection and civil rights, apply to AI systems and will be vigorously enforced.

State and local legislatures are taking varied measures to meet the regulatory challenges of AI, but the resulting patchwork of laws can be difficult to navigate. Some states adopt broad and comprehensive laws, while others attempt to target specific AI issues. Examples of state and local AI regulation include the following:

- The New York City Bias Audit Law (Local Law 144), which was enacted in November 2021 and finally went into effect in July 2023, prohibits companies hiring in NYC from using automated tools to hire or promote employees unless the tools have been audited for bias.

- Utah's S.B. 149 Artificial Intelligence Policy Act, which was signed into law in March 2024 and went into effect two months later, establishes liability for companies that fail to disclose their use of generative AI in consumer interactions, and for the use of generative AI in the commission of a criminal offense.

- Tennessee's Ensuring Likeness Voice and Image Security (ELVIS) Act, which was signed in March 2024, regulates audio and video deepfakes and voice cloning to safeguard artists' rights.

- California's Senate Bill 53, the Transparency in Frontier Artificial Intelligence Act (TFAIA), which was signed in September 2025, enhances online safety by requiring guardrails on the development of AI models.

Global AI regulations

From an international perspective, many countries are considering, enacting and implementing AI regulations primarily pertaining to AI safety, responsible AI and legal liabilities. Several examples of AI regulations outside the U.S. include the following:

- The EU's AI Act, approved by the European Parliament in March 2024 and in force since August 2024, provides sweeping, though complex, legislation to ensure AI technologies are used in a responsible and fair manner across the EU. Most of the AI Act's provisions are scheduled to apply from August 2026.

- The U.K., Switzerland and Australia, much like the U.S., don't possess comprehensive AI legislation. Instead, they rely on their established assortment of legal requirements, standards and guidelines applied to AI. The U.K.'s relevant guidance includes the Data Protection Act 2018. Switzerland's goal is to add AI transparency rules into existing data protection laws and update local laws. Australia's existing laws, such as the Privacy Act 1988, Corporations Act 2001 and Online Safety Act 2021, apply to AI use.

- China has been extremely active in AI regulation and is creating sweeping AI regulatory frameworks that include the Provisions on the Management of Algorithmic Recommendations in Internet Information Services, the position paper "Ethical Norms for New Generation AI" and governance legislation called Measures for the Management of Generative AI Services.

Other nations are developing policies and considering the creation of AI legislation, including Finland, Germany, Brazil, Colombia, Israel and New Zealand.

Trends in AI regulation for 2026 and beyond

AI regulation will pose challenges for global organizations in 2026 and beyond. Regulation is expected to drive responsible AI initiatives, platforms and processes, but competing national demands and conflicting interests around AI will create enormous friction.

Rising AI regulation and enforcement

Gartner predicted that by 2026, half of national governments will enforce the use of responsible AI through new regulations, updated policies, and data security and privacy measures. Emerging regulations will require AI developers to prioritize AI ethics, transparency, explainability and data privacy in their AI systems. Gartner also predicted that AI governance will become a mandated element of all sovereign AI laws and regulations worldwide by 2027.

Regulatory fragmentation at every level

Gartner predicted 35% of countries will be locked into region-specific AI platforms using proprietary contextual data by 2027, resulting in a serious fragmentation of the AI landscape due to political and technical issues. Organizations will need to localize their AI systems and adapt to the pressures of strong regional regulations, prevailing languages and local culture, Gartner reported.

Enormous compliance challenges

Although AI regulation is still in its infancy, it's clear that many states, provinces, regions, nations and nation-state collectives will ultimately develop and implement AI legislation -- even those that refrain from AI-specific legislation will update current laws to include AI. This fierce fragmentation might force multinational companies to meet dozens of specific compliance and auditing requirements or risk hefty fines and legal sanctions.

Balancing regulatory benefits and risks

As local, national and regional governments scramble to enact AI regulations into 2026 and beyond, the biggest problem for businesses will be weighing the benefits and potential opportunities of regulation against the possible costs and challenges that regulation imposes. But failure to comply is not an option. Violating AI regulations will expose businesses to scrutiny by regulators, legislators, customers and the broader public, leading to serious financial, legal and brand reputation risks.

Regulatory adherence and the competitive advantage

Organizations that can demonstrate and document compliance with emerging AI regulations can use adherence as a competitive differentiator in the market. According to Gartner, 75% of AI platforms will incorporate strong AI governance and TRiSM (trust, risk and security management) capabilities by 2027.

Best practices for meeting AI regulations

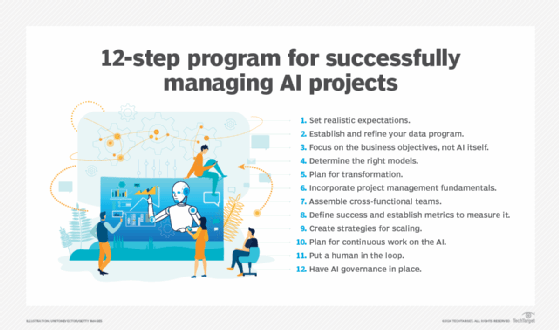

AI regulation is here, and more regulation is forthcoming as AI continues to expand its capabilities, enter new industries and affect everyday life. Businesses can ease the challenges of tomorrow's AI regulations by embracing the following best practices today:

1. Take the lead in AI governance

Business leaders can ease the integration of new AI regulations by establishing a strong governance posture now. Create clear AI policies that detail the ethical and responsible use of AI. Establish data security and privacy guidelines in relation to AI use. Understand where and how AI is used across the organization by taking inventory of AI agents, systems and platforms. Build an AI governance group that includes regulatory, data science, technology and business leaders within the organization.
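As one way to picture the inventory step, the following minimal Python sketch records each AI system with an owner, purpose and risk tier, then flags the entries a governance group might review first. The field names, risk tiers, sample systems and review rule are all illustrative assumptions, not requirements drawn from any regulation.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class RiskTier(Enum):
    # Hypothetical internal tiers; not taken from any specific regulation.
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class AISystem:
    name: str
    owner: str                  # accountable business owner
    purpose: str
    risk_tier: RiskTier
    uses_personal_data: bool
    jurisdictions: List[str] = field(default_factory=list)


def needs_governance_review(system: AISystem) -> bool:
    """Assumed rule: review high-risk systems and anything touching personal data."""
    return system.risk_tier is RiskTier.HIGH or system.uses_personal_data


# Made-up inventory entries for illustration only.
inventory = [
    AISystem("resume-screener", "HR", "rank job applicants",
             RiskTier.HIGH, True, ["US-NY"]),
    AISystem("log-anomaly-detector", "IT", "spot unusual server activity",
             RiskTier.LOW, False, ["US"]),
    AISystem("support-chatbot", "Customer Care", "answer product questions",
             RiskTier.MEDIUM, True, ["US", "EU"]),
]

review_queue = [s.name for s in inventory if needs_governance_review(s)]
print(review_queue)  # ['resume-screener', 'support-chatbot']
```

In practice, an inventory like this would likely live in a shared register or governance tool rather than in code, but the same fields give the governance group a common starting point.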

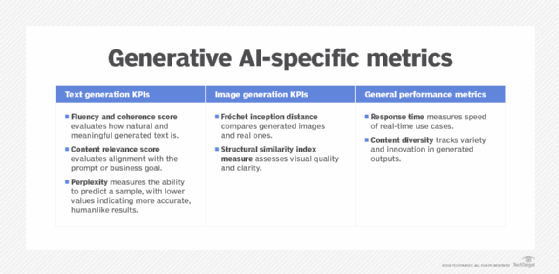

2. Focus on AI integrity

The goals are to manage data, build trust and demonstrate integrity. Start with comprehensive data controls such as data minimization, end-to-end data encryption and data anonymization technologies to ensure sensitive information is safeguarded. Ensure that machine learning models and AI systems preserve human-in-the-loop operation and remain fully transparent and explainable -- the cornerstones of many key AI regulations. Establish and refine methodologies for testing and evaluating AI systems on key regulatory issues, including bias, fairness, accuracy and performance.
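To make the testing step more concrete, here is a small Python sketch of one common fairness check: comparing the rate of favorable outcomes across groups. The sample data, group labels and the 0.8 review threshold are assumptions for illustration. Actual audit methods and thresholds depend on the applicable regulation and should be confirmed with legal and data science teams.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the fraction of favorable outcomes for each group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the AI system produced a favorable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so there is no disparity to measure.
        return {g: 1.0 for g in rates}
    return {g: r / best for g, r in rates.items()}


# Made-up outcomes for illustration only.
records = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 25 + [("group_b", False)] * 75
)

rates = selection_rates(records)
ratios = impact_ratios(rates)

REVIEW_THRESHOLD = 0.8  # assumed internal threshold, not a legal standard
flagged = [g for g, ratio in ratios.items() if ratio < REVIEW_THRESHOLD]
print(flagged)  # ['group_b']
```

A flagged group is a prompt for human review of the model and its training data, not proof of discrimination on its own.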

3. Watch for regulations

Global companies will need to understand and comply with many different AI regulations. Participate in AI forums such as the CNAS AI Governance Forum. Follow the development, ratification and enforcement of new and changing regulations and carefully consider how to adjust current regulatory preparations to new AI regulations.

4. Prepare for AI compliance audits

Regulations often include audit requirements to demonstrate compliance. Keep careful documentation of AI processes, workflows and controls. Understand how that documentation maps into audits. Undertake routine test audits to validate preparedness and build confidence in prevailing audit processes. Review audit guides and recommendations, such as the Checklist for AI Auditing from the European Data Protection Board, as well as tools like Complyance that can help audit AI systems.
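A routine test audit can start with a simple check that every documented control has supporting evidence and a recent review. The Python sketch below shows one possible shape for that check. The control IDs, file names, dates and the one-year review window are hypothetical and would need to match an organization's own control framework.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, List, Optional


@dataclass
class Control:
    control_id: str
    description: str
    evidence: List[str] = field(default_factory=list)  # document names or links
    last_reviewed: Optional[date] = None


def audit_gaps(controls: List[Control], today: date,
               max_age_days: int = 365) -> Dict[str, str]:
    """Return {control_id: reason} for controls that are not audit-ready."""
    gaps = {}
    for c in controls:
        if not c.evidence:
            gaps[c.control_id] = "no evidence on file"
        elif c.last_reviewed is None:
            gaps[c.control_id] = "never reviewed"
        elif (today - c.last_reviewed).days > max_age_days:
            gaps[c.control_id] = "review is stale"
    return gaps


# Made-up controls for illustration only.
controls = [
    Control("GOV-01", "AI use policy approved",
            ["ai-policy-v3.pdf"], date(2025, 11, 3)),
    Control("DATA-02", "Training data anonymized"),
    Control("TEST-03", "Bias test results archived",
            ["bias-report-2024.xlsx"], date(2024, 6, 1)),
]

gaps = audit_gaps(controls, today=date(2026, 1, 15))
print(gaps)  # {'DATA-02': 'no evidence on file', 'TEST-03': 'review is stale'}
```

Running a check like this on a schedule gives teams an early list of documentation gaps to close before a formal audit.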

5. Involve the workforce in AI compliance

Invest in regular employee education programs on AI regulations, compliance requirements, ethical and responsible AI use, AI best practices and AI incident response protocols.

Stephen J. Bigelow, senior technology editor at TechTarget, has more than 30 years of technical writing experience in the PC and technology industry.