AI in call centers amplifies customer voice

Speech analytics use cases involving customer contact centers show how AI technology can make sense out of messy human language, helping businesses along the way.

Nearly all call centers are looking to improve customer experience, and some are starting to use AI toward that goal, both to identify common problems and to give call center agents more feedback.

"[Speech analytics] can be useful in finding out what frustrates or pleases customers," said Bill Meisel, president of TMA Associates, a natural language technology consultancy.

AI in call centers is built on speech analytics that assesses the emotional quality of customer calls and clusters conversations into similar groups. By looking at examples of each group, managers can understand common characteristics. For example, product managers might be able to identify problems with a new offering more quickly by associating customer frustration with a particular subject.
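The grouping step can be illustrated with a minimal sketch. It assumes calls have already been transcribed, and it swaps in a very simple technique (bag-of-words cosine similarity with a greedy threshold) for whatever clustering a commercial product actually uses. The stopword list, the `group_calls` name and the 0.3 threshold are illustrative assumptions, not taken from any vendor.

```python
import math
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list for the illustration.
STOPWORDS = {"the", "a", "is", "my", "i", "to", "and", "it", "on", "for", "was", "of"}


def vectorize(text):
    """Turn a transcript into a bag-of-words count vector."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(w for w in words if w not in STOPWORDS)


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def group_calls(transcripts, threshold=0.3):
    """Greedily assign each call to the first group whose seed call is similar enough.

    Returns a list of groups, each a list of transcript indices.
    """
    vectors = [vectorize(t) for t in transcripts]
    groups = []
    for i, vec in enumerate(vectors):
        for group in groups:
            if cosine(vec, vectors[group[0]]) >= threshold:
                group.append(i)
                break
        else:
            groups.append([i])  # no similar group found, so start a new one
    return groups


calls = [
    "The new app keeps crashing when I log in",
    "I was charged twice on my bill this month",
    "App crashing again after the new update",
    "Why was my bill charged twice",
]
print(group_calls(calls))
```

A manager reading a few transcripts from each resulting group would see one cluster about app crashes and another about double billing, which is the kind of pattern the article describes.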

Bill Meisel

Companies are also using speech analytics to help human agents identify and work on specific speaking skills that can improve customer interactions. For example, Maryland-based speech coaching software vendor VoiceVibes Inc. worked with the National Science Foundation to create software to measure the qualities correlated with building rapport with listeners.

This is subtly different from emotional analytics. This type of speech analysis can help call centers distinguish between how their agents feel during a call and how they are perceived by callers, said Debra Cancro, founder and CEO of VoiceVibes.

To develop the tool, VoiceVibes worked with a panel of expert listeners to score speakers, and then trained neural networks on this set of labeled data to automate the process of scoring new calls. Over time, the neural networks can help identify factors in call quality that may be hard for humans to gauge.

"We measure about 70 features related to pace or pitch, along with other features learned by deep learning directly," Cancro said. "When we make the models, we don't know what makes some speakers better in all cases."

Shining a light on more calls

ABC Financial Services, a payment processor for the health and fitness industry, is one early adopter of speech analytics tools, using a service from CallMiner Inc., a Florida-based software company that provides customer engagement analytics technology. The technology enables ABC to assess every agent call rather than just a few, as it did in the past.

"We now have the complete journey for every interaction," said Renisenb McGehee, BI analyst at ABC Financial. "Through the use of speech analytics, we have been able to identify and remove objections that prevent our agents from providing the level of service that our company expects."

Using AI in call centers has improved the ability of ABC Financial's analytics team to give the call center team thoughtful recommendations for improving existing processes and consistently meeting call quality expectations, McGehee said.

ABC Financial also uses speech analytics to capture intelligence -- data related to customers' emotional tone or the sentiment associated with their words -- from all customer interactions and make it available to other departments within the organization through dashboards and custom reports.

"This helps us to understand how the information can be useful for our entire company, as well as the health clubs it services," McGehee said.

Different roles for AI

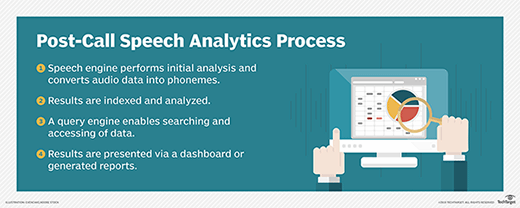

AI has been introduced to speech analytics in a couple of different ways. It's used to analyze the emotional characteristics and content of call agents' interactions and to convert calls into transcripts. These transcripts can be analyzed for compliance, efficiency and quality control, said Mckay Bird, chief marketing officer at TCN.

"Based on the specific content of the call, agent managers can take the necessary steps to correct the issues through targeted agent coaching or direct customer intervention," said Bird.

Advanced speech recognition tools are built on deep learning neural networks, which improve their recognition of human language over time. Call transcript analysis uses semi-supervised machine learning and weighted rules logic to automatically classify and score calls.
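The weighted rules part of transcript analysis can be sketched as follows. This is only an illustration under stated assumptions: the phrases, categories, weights and the `min_score` cutoff are invented for the example, and the semi-supervised learning step that would help tune such rules is not shown.

```python
# Hypothetical rule table: (phrase to look for, category, weight).
RULES = [
    ("cancel my account", "cancellation", 3.0),
    ("cancel", "cancellation", 1.0),
    ("refund", "billing", 2.0),
    ("charged twice", "billing", 3.0),
    ("thank you", "positive", 1.0),
]


def score_call(transcript, rules=RULES):
    """Sum the weights of every rule phrase found in the transcript, per category."""
    text = transcript.lower()
    scores = {}
    for phrase, category, weight in rules:
        hits = text.count(phrase)
        if hits:
            scores[category] = scores.get(category, 0.0) + weight * hits
    return scores


def classify_call(transcript, rules=RULES, min_score=2.0):
    """Return the highest-scoring category, or 'unclassified' if evidence is weak."""
    scores = score_call(transcript, rules)
    if not scores:
        return "unclassified"
    category, best = max(scores.items(), key=lambda kv: kv[1])
    return category if best >= min_score else "unclassified"


print(classify_call("I want to cancel my account unless I get a refund"))
```

Overlapping phrases such as "cancel" and "cancel my account" both contribute here, so a more specific phrase effectively raises the score. A production rule set would need to decide whether that stacking is desirable.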

These tools, when used together, produce sentiment and emotion scores by pairing spoken words with characteristics known to be associated with certain feelings -- speaking rate, stress inflection and volume. This helps call center managers understand where customers are demonstrating frustration or if agents are mishandling a call.
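One way to picture the pairing of words with vocal characteristics is the rough sketch below. Everything in it is an assumption made for illustration: the word list, the baseline speaking rate and volume, the scaling factors and the 0.6/0.2/0.2 weights are hypothetical, and real products learn such relationships from data rather than hard-coding them.

```python
from dataclasses import dataclass

# Hypothetical lexicon of words treated as signs of frustration.
NEGATIVE_WORDS = {"ridiculous", "unacceptable", "angry", "frustrated", "cancel"}


@dataclass
class Utterance:
    text: str                 # transcribed words
    words_per_minute: float   # speaking rate measured from the audio
    volume_db: float          # average loudness measured from the audio


def frustration_score(utt, baseline_wpm=150.0, baseline_db=60.0):
    """Blend a lexical cue with two acoustic cues into a score between 0 and 1."""
    words = utt.text.lower().split()
    negative = sum(w.strip(".,!?") in NEGATIVE_WORDS for w in words)
    lexical = negative / max(len(words), 1)

    # Only speech that is faster or louder than the baseline raises the score.
    rate = max(0.0, (utt.words_per_minute - baseline_wpm) / baseline_wpm)
    loud = max(0.0, (utt.volume_db - baseline_db) / baseline_db)

    score = (
        0.6 * min(1.0, lexical * 5)
        + 0.2 * min(1.0, rate * 2)
        + 0.2 * min(1.0, loud * 5)
    )
    return round(score, 3)


calm = Utterance("thanks that works", 140, 58)
upset = Utterance("This is ridiculous, I am frustrated!", 195, 69)
print(frustration_score(calm), frustration_score(upset))
```

The point of the design is that neither signal is used alone: the same words spoken faster and louder than the caller's baseline score higher than they would in a calm voice.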

Automated scoring can be used to predict outcomes, such as the likelihood of a sale or customer cancellation. Natural language processing can be used to automatically identify topics within groups of conversations and can help to improve the development of call classification rules.

Start with a measurable outcome

"The biggest challenge to a speech analytics program is defining what you want to achieve with it," said Jeff Gallino, founder and CTO of CallMiner.

Without clear objectives or goals, a speech analytics program can get off track.

Many companies are going from monitoring 1% to 2% of calls to monitoring 100%, which can be overwhelming without clearly defined policies and processes for using the information. Dedicating the appropriate budget to execute a plan and enlisting the organization to embrace change are critical to success when implementing AI in call centers.

One common -- though long-term -- objective companies have when starting speech analytics projects is to reduce overall call volume, said TMA Associates' Meisel. The data can form a strong foundation for further automating the customer service process in the future.

"Speech analytics applied to call center records allow understanding [as to] why customers are contacting a company and provide the core data needed to create natural language chatbots and digital assistants," he said.