5 AI technologies in business that are making a big impact

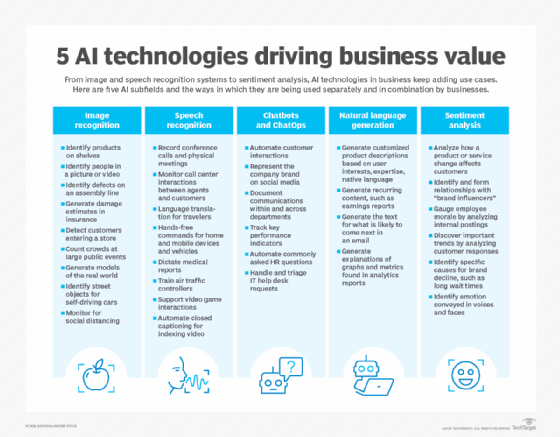

Learn how image recognition, speech recognition, chatbots, natural language generation and sentiment analysis are changing how businesses operate.

AI technologies are working themselves into virtually every aspect of business. Important AI technologies include image recognition, speech recognition, chatbots, natural language generation and sentiment analysis.

To be clear, each of these types of AI technologies represents a broad category, which often includes dozens or even hundreds of underlying components. These components are, in turn, often recombined into even more sophisticated applications to deliver value to businesses.

For example, an in-store robot might use image recognition, video and speech recognition technology on the job. The image recognition software would enable it to check the placement, price and quantity of stock on shelves; video would help it avoid any obstacles in its path, as well as identify its location in the store; and the speech recognition component would allow it to listen to and direct (and entertain) customers.

To pull off such feats, these AI technologies in business often combine a variety of algorithms and techniques tailored to specific parts of the overall task. They include symbolic processing, statistical analysis, neural networks and many more.

Here is an in-depth review of five AI technologies that have evolved over time to dramatically change how businesses are processing, analyzing and generating data.

1. Image recognition

Businesses use image recognition for automatic inspection on factory lines, damage estimates in insurance, object identification within images, people counting, manufacturing process control, event detection (such as customers entering a store) and generating models of the real world.

AI technologies must find a way to describe the world using numbers that can be processed by various types of AI algorithms. In the case of vision, researchers figured out how to break a picture into a grid of pixels, such that each pixel could be represented as a number. In the early days, one number was used to describe the brightness of each pixel. Later, people discovered they could use three or more numbers to describe the brightness of different colors in each pixel.
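
As a minimal sketch of the pixel-grid idea described above, the following Python snippet stores a tiny image first as one brightness number per pixel and then as three color numbers per pixel. The image values and the helper names `to_grayscale` and `mean_brightness` are made up for illustration, and averaging the three channels is only one simple way to collapse color into brightness.

```python
# A 2x3 grayscale image: one brightness number per pixel (0 = black, 255 = white).
grayscale = [
    [0, 128, 255],
    [64, 192, 32],
]

# A 2x3 color image: three numbers (red, green, blue) per pixel.
color = [
    [(255, 0, 0), (0, 255, 0), (0, 0, 255)],
    [(255, 255, 255), (0, 0, 0), (128, 128, 128)],
]


def to_grayscale(rgb_image):
    """Collapse each (r, g, b) pixel to one brightness value by simple averaging."""
    return [[sum(pixel) // 3 for pixel in row] for row in rgb_image]


def mean_brightness(gray_image):
    """Average brightness over the whole grid."""
    values = [value for row in gray_image for value in row]
    return sum(values) / len(values)


if __name__ == "__main__":
    print(to_grayscale(color))
    print(mean_brightness(grayscale))
```

Once an image is a grid of numbers like this, any numeric algorithm can operate on it, which is what makes the later techniques possible.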

In the 1960s, researchers began exploring how to use image recognition to identify characters in digital documents, using primitive forms of optical character recognition (OCR) software. Other researchers began exploring techniques to interpret scenes based on images in an effort to reconstruct 3D worlds from 2D pictures. Over the years, the techniques became part of the toolkit of the machine vision industry.

Later, researchers discovered that image recognition could be organized as a hierarchical process to make it easier to interpret ever more complex phenomena. For example, black and white pixels might be recognized as lines and squiggles, which are, in turn, recognized as parts of letters and digits. It's much easier to train the algorithms to learn to interpret characters based on the patterns of these squiggles, rather than the brightness of each pixel. Similarly, it is easier to determine whether an image contains a cat based on whether the image contains two eyes and the appropriately shaped ears, rather than by the raw brightness of colors in each pixel.
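
The hierarchy described above can be illustrated with a hand-written toy in Python. The first level turns raw brightness values into edge markers, and the second level looks for a pattern in those markers instead of in the pixels. The function names, the threshold of 100 and the sample image are all assumptions chosen for this sketch; real systems learn such features from data instead of hard-coding them.

```python
def vertical_edges(gray_image, threshold=100):
    """Level 1: mark spots where brightness jumps sharply between horizontal neighbors."""
    edges = []
    for row in gray_image:
        edges.append([
            1 if abs(row[x + 1] - row[x]) >= threshold else 0
            for x in range(len(row) - 1)
        ])
    return edges


def has_vertical_line(edge_map):
    """Level 2: an edge that appears in the same column of every row suggests a vertical stroke."""
    columns = zip(*edge_map)
    return any(all(column) for column in columns)


# Dark region on the left, bright region on the right.
image = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
]

if __name__ == "__main__":
    edge_map = vertical_edges(image)
    print(edge_map)
    print(has_vertical_line(edge_map))
```

The second function never looks at brightness values at all, which mirrors the point that higher levels work from simpler features found by lower levels.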

This mode of processing promised to advance the field of image recognition. However, it was not until about 2012 with the development of AlexNet -- an eight-layer convolutional neural network designed to support image recognition -- that researchers figured out how to scale up this process to recognize thousands of different kinds of objects.

The beauty of deep learning techniques like AlexNet was that the model could automatically learn to do a wide variety of image recognition tasks in ways that did not require humans to programmatically specify each step. This capability fueled the use of deep learning in other types of applications as well, as researchers figured out how to apply neural networks to different types of problems.

Nowadays, image recognition is used to identify products on shelves, individuals in a picture or video, defects on the assembly line and objects on streets for self-driving cars. In the wake of COVID-19, applications are being developed to monitor compliance with mask and social distancing rules.

One key insight for enterprise leaders is that it is often possible to create more value by using multiple types of image recognition in an application. For example, intelligent document processing and document intelligence combine a set of AI technologies, including natural language processing and machine learning, to capture and classify data from formats that are hard to read. Combined with OCR, intelligent document processing can analyze the visual layout of a document to determine which section represents a product, invoice amount or the terms of a sale and feed that information to other business apps.

It's worth noting that most image recognition applications in business are highly context-sensitive. Vendors and researchers often tout new image recognition improvements, citing software that beats human experts, for example, in identifying tumors in humans. But in practice, the AI only works on these radiological images with a certain set of equipment and only if the images are caught at just the right angle, whereas humans are adept at interpreting a large variety of images captured from many different angles.

Researchers are also finding bias creeping into some implementations of these image recognition applications. To mitigate bias, experts recommend training these applications on data that is representative of the specific kinds of images that will be processed.

2. Speech recognition

A variety of algorithms are needed to convert human speech into text and ready it for digital processing. Although speech recognition systems are getting better, even the best speech recognition systems today are still somewhat error prone, so some verification is required in safety-critical applications such as healthcare data capture.

The first speech recognition systems for identifying single digits were developed in 1952 by researchers at Bell Labs. By 1962, IBM pushed the envelope with its Shoebox machine that could understand 16 words. By the mid-1980s, researchers began using statistical techniques, such as hidden Markov models, to develop applications that could understand 20,000 words, but with pauses between words. The first consumer dictation product, Dragon Dictate, was released in 1990 to automatically "type" spoken text. Then AT&T rolled out a speech recognition application that could route calls without a human operator. These early systems either had small vocabularies suited for a particular context or required extensive training by an individual voice.

Researchers found ways to apply deep neural networks to speech recognition starting in 2010. One of the key drivers for this growth was the need to find better ways to represent the vocal characteristics of different kinds of speakers. This required teasing out better ways of transforming raw audio spectral data into the distinct sounds, called phonemes, that humans are used to listening for (e.g., "c" in the word "car").

Researchers are also combining the basic speech recognition results with better context to distinguish between homonyms (bear/bare). A variety of core speech-to-text services are now provided by cloud services that developers then bake into various enterprise workflows.

Basic speech recognition capabilities are built into modern smartphones and computers via cloud services such as Microsoft Cortana, Google Now and Apple Siri. Amazon has used speech recognition to launch a new way of connecting with the internet outside of smartphones with its Alexa voice service. These services typically do the heavy lifting in the cloud. More recently, Google has raised the bar by developing more efficient algorithms that can run the speech recognition application natively on its Pixel phones.

The use of speech recognition technology in business is increasing. Several vendors are also starting to develop applications for automatically recording conference calls and physical meetings for compliance purposes or to better document the decision-making process. Automated speech recognition can also help to monitor call center activity to ensure workers are following correct procedures so that managers do not have to listen to each call. Speech recognition applications are also being used to automate verbal translations for travelers. Other business applications of this AI technology include home automation, video game interaction and automated closed captioning for indexing videos.

3. Chatbots

Conversational AI techniques allow applications to interact with humans in a natural way. The first chatbot, Eliza, was developed at the MIT Artificial Intelligence Laboratory from 1964 to 1966. The earliest chatbots were limited in terms of vocabulary and the types of interactions they could allow. These applications used a decision tree that proceeded down various paths based on queries or a user's answer to a question. In the 1980s and 1990s, these techniques were expanded to automated telephone applications in which interaction was controlled via touch-tone responses or simple vocabularies using interactive voice response (IVR) technology.
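
A decision-tree chatbot of the kind described above can be sketched in a few lines of Python. The tree contents, node names and the `walk` helper are hypothetical examples written for this illustration, not a reconstruction of any historical system.

```python
# Each node has a prompt and a mapping from expected answers to the next node.
TREE = {
    "start": {
        "prompt": "Do you need help with billing or shipping?",
        "branches": {"billing": "billing", "shipping": "shipping"},
    },
    "billing": {
        "prompt": "Is this about a refund or an invoice?",
        "branches": {"refund": "refund", "invoice": "invoice"},
    },
    "shipping": {"prompt": "Please have your tracking number ready.", "branches": {}},
    "refund": {"prompt": "Refunds take five business days.", "branches": {}},
    "invoice": {"prompt": "Invoices are emailed monthly.", "branches": {}},
}

NOT_UNDERSTOOD = "Sorry, I did not understand that."


def walk(tree, answers, node="start"):
    """Follow the user's answers down the tree and return the bot's side of the chat."""
    transcript = [tree[node]["prompt"]]
    for answer in answers:
        next_node = tree[node]["branches"].get(answer.strip().lower())
        if next_node is None:
            # The limited vocabulary shows up here: anything unexpected is a dead end.
            transcript.append(NOT_UNDERSTOOD)
            break
        node = next_node
        transcript.append(tree[node]["prompt"])
    return transcript


if __name__ == "__main__":
    for line in walk(TREE, ["Billing", "refund"]):
        print(line)
```

Any answer outside the listed branches ends the conversation, which shows why such systems felt rigid compared with later approaches.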

More recently, chatbot applications have exploded for two reasons. Better natural language processing technologies have improved how chatbots interpret and respond to text queries. Better integration with other services has also made it easier for businesses to set up chatbots that can respond to frequently asked questions, take orders or customize responses to a given user. One of the key insights of recent chatbot applications has been the development of application programming frameworks for representing a user's intent and the appropriate response.
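
To make the idea of representing intents and responses concrete, here is a simplified Python sketch. It uses plain keyword overlap to pick an intent, which is a stand-in assumption; production frameworks typically rely on trained language models. The intent names, keywords and responses are invented for the example.

```python
import re

# Each intent pairs trigger keywords with the response the bot should give.
INTENTS = {
    "order_status": {
        "keywords": {"order", "track", "tracking", "delivery"},
        "response": "I can look up your order. What is the order number?",
    },
    "store_hours": {
        "keywords": {"hours", "open", "close"},
        "response": "We are open 9 a.m. to 6 p.m., Monday through Saturday.",
    },
}

FALLBACK = "I am not sure I understood. Could you rephrase that?"


def detect_intent(message):
    """Return the intent whose keywords overlap most with the message, or None."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    best_name, best_score = None, 0
    for name, intent in INTENTS.items():
        score = len(words & intent["keywords"])
        if score > best_score:
            best_name, best_score = name, score
    return best_name


def respond(message):
    """Map a message to its intent's response, falling back when nothing matches."""
    name = detect_intent(message)
    return INTENTS[name]["response"] if name else FALLBACK


if __name__ == "__main__":
    print(respond("Where is my order? I need tracking info"))
    print(respond("Tell me a joke"))
```

Separating the intent table from the matching logic means a business can add new intents without touching the code that interprets messages.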

Externally facing chatbots can help automate many aspects of customer interactions. They also allow brands to reach out across various social media channels such as Facebook in a more engaging way.

The application development and deployment side of businesses has been seeing an explosion in the use of ChatOps technology that combines chatbots with operational tools for automatically provisioning applications and infrastructure and generating reports. ChatOps integrations enable teams to document their processes, which makes it easier to respond to recurring problems, or identify how a particular process was carried out in the past.

ChatOps tools are starting to find their way into other aspects of the business as a way to help document various communications within or across departments, particularly as more companies adopt messaging applications like Slack and Microsoft Teams. A finance department could use ChatOps to generate and track the status of important business indicators. A sales team could use ChatOps to gather data about key accounts.

Chatbots can also help to facilitate other types of internal interactions. For example, employees might query HR chatbots to ask questions about their benefits status or request time off. Chatbots are also being used by businesses to automate the interactions with IT systems management to handle simple problems or automatically triage more complicated problems.

4. Natural language generation

As the volume of data grows, it can be difficult to prioritize the right information for employees or customers. Natural language generation (NLG) applications can help find, organize and summarize the most appropriate insight for a given user.

There are various flavors of this AI technology depending on the business use case. Natural language generation is starting to be added as a front end to business intelligence and analytics applications as part of a new category of applications that Gartner calls augmented analytics. These technologies combine the ability to interpret plain text queries and generate appropriate summaries of an analysis in plain English. For example, USAA built an NLG application to improve the answers it gives to business users about how different insurance products were selling.

Another flavor of this AI technology is being used to improve the way that product information is presented to users. In these types of applications, the natural language generation engine can customize a description of a product based on a user's preferences. For example, a more technical user might be presented with a deep dive on the technical properties of a product like a new phone headset, while a fashion-conscious buyer would be given an aesthetic description of how it looks and feels. NLG can also help improve the way that content is translated to new markets.
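
The simplest form of this kind of customization is template-based generation, sketched below in Python. The product record, the audience labels and the `describe` function are hypothetical, and commercial NLG engines are considerably more sophisticated than filling in templates.

```python
# Hypothetical product data for a phone headset.
PRODUCT = {
    "name": "Example Headset",
    "battery_hours": 8,
    "bluetooth": "5.3",
    "color": "matte black",
    "weight_grams": 5,
}

# One template per audience; each picks the facts that audience cares about.
TEMPLATES = {
    "technical": (
        "{name} offers {battery_hours} hours of battery life "
        "and Bluetooth {bluetooth}."
    ),
    "style": "{name} comes in {color} and weighs just {weight_grams} grams.",
}


def describe(product, audience):
    """Generate a description tailored to the audience, defaulting to technical."""
    template = TEMPLATES.get(audience, TEMPLATES["technical"])
    return template.format(**product)


if __name__ == "__main__":
    print(describe(PRODUCT, "technical"))
    print(describe(PRODUCT, "style"))
```

The same underlying data produces different text for different readers, which is the core idea behind the personalized descriptions discussed above.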

Trulia is using NLG to automatically generate descriptions of neighborhoods for real estate listings. NLG is also being used to generate basic news articles for the Associated Press. Esquire Singapore even crafted a special issue filled with AI-generated stories. However, it is still early days for this AI technology, and experts caution businesses that new types of quality control are required for mission-critical applications, such as healthcare.

Still, businesses are likely to benefit from a variety of recent improvements in natural language processing frameworks. New natural language processing metrics are emerging to help enterprises assess the utility of a given framework and improve their implementations of NLG applications.

5. Sentiment analysis

People often express varying types and intensities of emotion when writing about events, brands, politicians and other things. The field of sentiment analysis began in the 1950s with marketers analyzing the tone of written paper documents. But this was a very manual process. Now, though, virtually everyone leaves a digital trail of sentiments in their writings on social media, in blogs, news article comments, reviews, support forums and correspondence with companies.

A variety of AI technologies, including natural language processing, machine learning and statistics, are used to analyze the emotional tone of these digital trails. Such tools are useful for tracking how changes to a product or service affect customers without having to ask people directly. They are also helpful in keeping tabs on competitors' products and campaigns.
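
One of the simplest statistical approaches to scoring emotional tone is a word lexicon, sketched below in Python. The word lists and function names are small illustrative assumptions; real tools use much larger lexicons or trained models and handle negation, sarcasm and context, which this sketch does not.

```python
import re

# Tiny illustrative lexicons; real ones contain thousands of weighted terms.
POSITIVE = {"great", "love", "fast", "helpful", "excellent"}
NEGATIVE = {"slow", "broken", "terrible", "hate", "poor"}


def sentiment_score(text):
    """Count positive words minus negative words in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    return sum((word in POSITIVE) - (word in NEGATIVE) for word in words)


def label(text):
    """Turn the numeric score into a coarse sentiment label."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    reviews = [
        "I love the new app, support was great",
        "Checkout is slow and the search is broken",
        "It arrived on Tuesday",
    ]
    for review in reviews:
        print(label(review), "-", review)
```

Running a scorer like this over reviews before and after a product change gives a rough view of how customer tone shifted without surveying anyone.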

Another use case for sentiment analysis identifies so-called brand influencers, enabling companies to form a stronger relationship with individuals who might be able to give better advice on how to improve a service or offering.

Sentiment analytics can also help identify important trends about the things customers or prospects may be interested in so that businesses can improve their current offerings or create new ones to meet these demands. Businesses can also use sentiment analysis to identify specific reasons their brand might be suffering, such as long wait times, poor quality or a poorly conceived feature.

Internally, companies also use this AI technology to help understand and improve employee morale and well-being. In this use case, sentiment analysis examines employees' internal postings to help understand issues of importance or how management changes may be affecting them.

More sophisticated applications of sentiment analysis use AI to make sense of the emotion conveyed in voices and faces. The analysis can help identify changes in mood during support calls or assess customer perception of new products on a store shelf. Netflix has even experimented with using facial expression sentiment analysis to improve movie trailers. However, some researchers caution that these applications of sentiment analytics can suffer from issues of reliability, specificity and generalizability.