What is unsupervised learning?

Unsupervised learning is a type of machine learning (ML) technique that uses artificial intelligence (AI) algorithms to identify patterns in data sets that are neither classified nor labeled. Unsupervised learning models don't need supervision or preexisting categories during training, which makes them well suited to discovering patterns, groupings and differences in unstructured data. It's used for processes such as customer segmentation, exploratory data analysis, dimensionality reduction and image recognition.

Unsupervised learning algorithms can group the data points contained within data sets, and sort them into categories they discover themselves, without requiring any external guidance to perform that task. In other words, unsupervised learning enables a system to identify patterns within data sets on its own.

In unsupervised learning, an AI system groups unsorted information according to similarities and differences even though no categories are provided.

AI systems capable of unsupervised learning are often associated with generative learning models, although they might also use a retrieval-based approach, which is most often associated with supervised learning. Chatbots, self-driving cars, facial recognition programs, expert systems and robots are among the systems that use supervised or unsupervised learning approaches. Unsupervised learning is also known as unsupervised machine learning.

How unsupervised learning works

Unsupervised learning involves the following key steps:

1. Data input.

Unsupervised learning starts when ML engineers or data scientists feed data sets to machine learning algorithms to train a model. The training data sets contain no labels or categories; each piece of data passed through the algorithms during training is an unlabeled input object, or sample.

2. Pattern identification.

The objective of unsupervised learning is to have the algorithms identify patterns within the training data sets and categorize the input objects based on the patterns they find. The algorithms analyze the underlying structure of the data sets by extracting useful information, or features, from them. They then produce outputs by looking for relationships among the samples, or input objects.

For example, unsupervised learning algorithms might be given data sets containing images of animals. The algorithms might group the animals into those with fur, those with scales and those with feathers. They then split the images into increasingly specific subgroups as they learn to identify distinctions within each group. The algorithms do this by uncovering and identifying patterns. In unsupervised learning, pattern recognition happens without the system having been fed data that teaches it to distinguish specific categories.

3. Clustering and association.

Unsupervised learning tasks can be categorized into clustering and association tasks. The focus of clustering is to explore and group objects into clusters based on their traits and similarities, while association uncovers relationships and patterns between items within a data set.

These learning methods are commonly applied in market basket analysis to reveal product relationships and enhance cross-selling and recommendation strategies. For example, features such as Amazon's "Customers who bought this item also bought" and Spotify's "Discover Weekly" playlist recommendations use these kinds of techniques to personalize user experiences based on consumption habits.
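Association methods are often scored with two quantities: support (the fraction of transactions that contain an itemset) and confidence (how often the right-hand item appears when the left-hand item does). The Python sketch below computes both for single-item rules over a small, made-up set of shopping baskets. The transactions, the function names and the 0.4 and 0.7 thresholds are illustrative assumptions; production systems typically use a dedicated algorithm such as Apriori or FP-Growth to scale to larger itemsets.

```python
from itertools import combinations

# Hypothetical toy baskets; each set is one customer's purchase.
transactions = [
    {"bread", "milk"},
    {"bread", "butter", "milk"},
    {"beer", "chips"},
    {"bread", "butter"},
    {"milk", "butter", "bread"},
]

def count(itemset, transactions):
    """Number of transactions that contain every item in itemset."""
    return sum(1 for t in transactions if itemset <= t)

def pair_rules(transactions, min_support=0.4, min_confidence=0.7):
    """Return (lhs, rhs, support, confidence) for single-item rules lhs -> rhs."""
    n = len(transactions)
    items = sorted(set().union(*transactions))
    rules = []
    for a, b in combinations(items, 2):
        pair_count = count({a, b}, transactions)
        support = pair_count / n
        if support < min_support:
            continue
        # A rule is directional, so test a -> b and b -> a separately.
        for lhs, rhs in ((a, b), (b, a)):
            confidence = pair_count / count({lhs}, transactions)
            if confidence >= min_confidence:
                rules.append((lhs, rhs, support, confidence))
    return rules

for lhs, rhs, s, c in pair_rules(transactions):
    print(f"{lhs} -> {rhs}  support={s:.2f}  confidence={c:.2f}")
```

With these baskets, bread -> butter passes with confidence 0.75, while butter -> milk (confidence about 0.67) is filtered out even though the pair meets the support threshold.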

4. Evaluation.

Evaluation in unsupervised learning typically involves assessing the quality or usefulness of the discovered patterns or structures. For example, an ML engineer might look at how meaningful the clusters are or how well the dimensionality reduction aligns with known data properties.
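One common way to judge how meaningful clusters are is the silhouette coefficient. For each point, a is its mean distance to the other members of its own cluster and b is its mean distance to the members of the nearest other cluster, giving s = (b - a) / max(a, b). Scores near 1 indicate tight, well-separated clusters, and negative scores suggest misassigned points. The following is a minimal pure-Python sketch of that definition; the function name and sample points are illustrative, and it assumes at least two clusters.

```python
import math

def silhouette_score(points, labels):
    """Mean silhouette coefficient over all points (needs at least two clusters)."""
    scores = []
    for i, p in enumerate(points):
        # a: mean distance to the other points in the same cluster.
        same = [math.dist(p, q) for j, q in enumerate(points)
                if labels[j] == labels[i] and j != i]
        if not same:
            scores.append(0.0)  # convention: a singleton cluster scores 0
            continue
        a = sum(same) / len(same)
        # b: smallest mean distance to the points of any other cluster.
        b = min(
            sum(math.dist(p, q) for j, q in enumerate(points) if labels[j] == other)
            / labels.count(other)
            for other in set(labels) if other != labels[i]
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)

points = [(0, 0), (0, 1), (10, 0), (10, 1)]
print(silhouette_score(points, [0, 0, 1, 1]))  # two well-separated groups
print(silhouette_score(points, [0, 1, 0, 1]))  # groups that cut across the data
```

With these points, the first labeling scores about 0.90 and the second about -0.45, matching the intuition that the second labeling puts distant points in the same cluster.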

5. Application.

Once the unsupervised learning process is complete, the discovered patterns and insights can be used for various applications, including news categorization, targeting customers with distinct marketing strategies and contextual image classification.

Unsupervised vs. supervised learning vs. semi-supervised learning

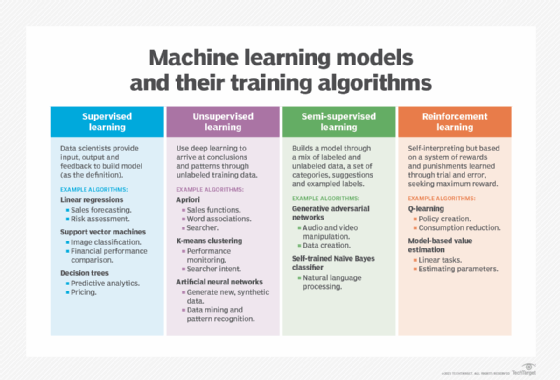

Data science and ML models are typically built with one of three common approaches: unsupervised learning, supervised learning and semi-supervised learning. The following are some key features and differences between these approaches:

- Supervised learning is an ML technique similar to unsupervised learning, but in supervised learning, data scientists feed algorithms labeled training data and define the variables they want the algorithm to assess. Unlike in unsupervised learning, both the input data and the output variables are specified in the training data. Using the animal example, data scientists would feed the algorithm photos of animals and label each photo in the training data to indicate whether it contains an animal and to which category that animal belongs.

- Supervised learning models are trained until they can detect patterns and relationships between the input data and the output labels. Classification and regression are the most common supervised tasks; they're handled by algorithms such as decision trees and are widely used in predictive modeling.

- In short, supervised learning uses labeled data sets to train algorithms to identify and sort data based on the provided labels, whereas unsupervised learning must discover structure in unlabeled data.

- Unsupervised learning is less predictable than supervised learning. While an unsupervised learning AI system might, for example, figure out on its own how to sort cats from dogs, it could also add unforeseen and undesired categories to deal with unusual breeds, creating clutter instead of order.

- ML engineers or data scientists can opt to use a combination of labeled and unlabeled data to train their algorithms. This in-between option is appropriately called semi-supervised learning. In semi-supervised ML, an algorithm is taught through a hybrid of labeled and unlabeled data. This process begins from a set of human suggestions and categories and then uses unsupervised learning to help inform the supervised learning process.

- Semi-supervised learning gives the algorithm some freedom to define labels for the unlabeled data while still being guided by the human-provided labels.
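One simple way to combine a few human-provided labels with unlabeled data is self-training, in which the model repeatedly assigns pseudo-labels to the unlabeled points it is most confident about and then treats them as labeled. The Python sketch below is a hypothetical, deliberately minimal version that uses distance to the nearest labeled point as the confidence measure, so labels spread outward through dense regions of the data. The function name, the cat and dog labels and the coordinates are illustrative assumptions, not a standard API.

```python
import math

def self_train_nearest(labeled, unlabeled):
    """Hypothetical self-training loop over 2-D points.

    labeled: list of (point, label) pairs supplied by a human.
    unlabeled: list of points without labels.
    Each round pseudo-labels the unlabeled point closest to any labeled point,
    copying that neighbor's label, then adds it to the labeled set.
    """
    labeled = list(labeled)
    pool = list(unlabeled)
    while pool:
        best_point, best_label, best_dist = None, None, math.inf
        for u in pool:
            for p, label in labeled:
                d = math.dist(u, p)
                if d < best_dist:
                    best_point, best_label, best_dist = u, label, d
        labeled.append((best_point, best_label))
        pool.remove(best_point)
    return labeled

labeled = [((0, 0), "cat"), ((6, 0), "dog")]
unlabeled = [(1, 0), (2, 0), (3, 0), (4, 0)]
for point, label in self_train_nearest(labeled, unlabeled):
    print(point, label)
```

Here (4, 0) ends up labeled "cat" because the label spreads step by step along the chain of nearby points, whereas simply copying the label of the nearest originally labeled point would give "dog". Whether that propagation is desirable depends on the data, and early pseudo-labeling mistakes can compound.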

Another ML technique is reinforcement learning, which is based on rewarding desired behaviors and punishing undesired ones. In this process, developers create a method of assigning positive values to the desired actions and negative values to undesired behaviors.

Clustering and other types of unsupervised learning

Unsupervised learning is often focused on clustering. Clustering groups similar objects or data points together and places dissimilar ones in other clusters.

ML engineers and data scientists can use different algorithms for clustering, with the algorithms themselves falling into the following categories based on how they work:

- Exclusive clustering. This form of grouping data specifies that a data point can exist in only one cluster and is also known as hard clustering. A common type of exclusive clustering is k-means clustering, which partitions data into k clusters by assigning each data point to the cluster whose mean (centroid) is closest to it.

- Overlapping clustering. This form of grouping data enables data points to belong to multiple clusters with different levels of membership. An example of overlapping clustering is the soft, or fuzzy, k-means algorithm, also known as fuzzy c-means.

- Hierarchical clustering. This form of grouping data is classified as either agglomerative or divisive. Agglomerative clustering starts with each data point as its own cluster and repeatedly merges the most similar clusters, while divisive clustering starts with all data points in a single cluster and repeatedly splits it based on the differences between data points.

- Probabilistic clustering. This form of grouping data points is based on the probability that each point belongs to a particular distribution. A soft clustering technique known as the Gaussian mixture model is commonly used here to represent subpopulations within an overall population.
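The exclusive k-means approach described above is usually implemented with Lloyd's algorithm, which alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points until nothing changes. Below is a minimal Python sketch of that loop, not a production implementation: it uses plain Euclidean distance, seeds the centroids with randomly chosen data points, and the sample data is made up. Libraries such as scikit-learn add smarter initialization (k-means++) and multiple restarts.

```python
import math
import random

def kmeans(points, k, iterations=100, seed=0):
    """Cluster points into k groups with Lloyd's algorithm (plain k-means)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen data points
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins the cluster with the nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                  for p in points]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                new_centroids.append(
                    tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster in place
        if new_centroids == centroids:  # converged: assignments will not change
            break
        centroids = new_centroids
    return labels, centroids

points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
labels, centroids = kmeans(points, k=2)
print(labels, centroids)
```

Because the initial centroids are random, different seeds can produce different cluster numbering or, on less clearly separated data, different clusters; running several seeds and keeping the result with the smallest within-cluster distances is a common remedy.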

Benefits of unsupervised learning

The benefits and applications of unsupervised learning include the following:

- Handles complex tasks. Unsupervised learning can be more useful than supervised learning when the input data is complex and unstructured, which makes it well suited to complex tasks such as grouping large data sets into clusters.

- No need to interpret labels. ML engineers and data scientists oversee passing data sets through algorithms for training, but they don't need to create or interpret a label for each data point.

- Derives meaning from raw data sets. AI tools can evaluate raw data more quickly than a person can.

- Identifies underlying patterns in unstructured data sets. Unsupervised learning can be used to identify common factors between large amounts of different data points.

- Works in real time. Unsupervised learning can work with real-time data to identify patterns.

- Less costly than supervised learning. Unsupervised learning doesn't require the manual work associated with labeling data that supervised learning requires.

- Similar to human intelligence. Unsupervised learning shares some similarities with human intelligence, as both involve gradual learning and pattern recognition before deriving results or insights.

Challenges of unsupervised learning

Although organizations value the beneficial features of unsupervised learning, there are some disadvantages, which include the following:

- Results can be unpredictable. It can be difficult to check the accuracy of the unsupervised learning outputs, as there are no labeled data sets to verify the results.

- Longer overall training times. Unsupervised learning models need a large training set to produce outcomes, and learning from raw data can be time-consuming.

- Lack of insight. Without labels to guide the search, identifying the hidden patterns in large unclassified data sets can make the training process more difficult.

- Hard to interpret. The patterns or clusters identified might be hard to interpret or understand, as there are no predefined labels or categories.

- Risk of overfitting. The unsupervised learning model might identify patterns that are specific to the training data, but it might not generalize well to new and unseen data.

- Overestimates similarities. Clustering has an additional disadvantage: cluster analysis can overestimate the similarities among input objects. This can obscure individual data points that are important for some use cases, such as customer segmentation, where the objective is to understand individual customers and their unique buying habits.

Best practices for unsupervised learning

Key best practices for unsupervised learning include the following:

- Understanding data. Gaining a thorough knowledge of the data used for unsupervised learning is crucial for organizations. This includes understanding the data's properties, distribution and potential patterns.

- Feature engineering. Due to its critical role in unsupervised learning, feature engineering should be prioritized. Relevant feature selection and extraction can have a significant effect on the performance of dimensionality reduction and clustering methods.

- Data preprocessing. Data should be thoroughly preprocessed to handle outliers and missing values and to standardize features. Unsupervised learning outputs can be more accurate and dependable when the data is clean and well preprocessed.

- Evaluation metrics. To evaluate the effectiveness of unsupervised learning models, suitable assessment metrics should be used. Insights can be gained from metrics including reconstruction error for dimensionality reduction and silhouette score for clustering.

- Testing and validation. Thorough testing and validation should be conducted on the unsupervised model to ensure it generalizes well. Testing should include evaluating the model's performance on new data.

- Model maintenance. Data scientists should maintain and update unsupervised learning models regularly, particularly when working with dynamic data. Ongoing revisions and monitoring are required to maintain the accuracy and usefulness of the model.

- Documentation and interpretability. The entire unsupervised learning process should be documented, including data preprocessing, model selection and parameter tuning. Data scientists should aim for a clear interpretation of the patterns and clusters to enhance understanding and decision-making.
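The preprocessing practice above matters especially for distance-based methods such as k-means, because a feature measured in large units (annual spending in dollars, say) would otherwise dominate one measured in small units. The Python sketch below fills missing values, represented as None by assumption, with the column mean and then standardizes each column to zero mean and unit variance. It does not handle outliers, which usually need a domain-specific decision such as clipping or removal; the sample records are made up.

```python
import statistics

def preprocess(rows):
    """Mean-impute missing values (None), then z-score standardize each column."""
    cleaned_columns = []
    for column in zip(*rows):
        present = [v for v in column if v is not None]
        mean = statistics.fmean(present)
        filled = [mean if v is None else v for v in column]
        std = statistics.pstdev(filled)
        # A constant column has zero spread; map it to 0.0 instead of dividing by 0.
        cleaned_columns.append([(v - mean) / std if std else 0.0 for v in filled])
    # Transpose back from columns to rows.
    return [list(row) for row in zip(*cleaned_columns)]

# Hypothetical customer records: [age, annual_spend], with one missing value.
rows = [[25.0, 1200.0], [35.0, None], [45.0, 3600.0]]
print(preprocess(rows))
```

Both columns come out on the same scale (roughly -1.22, 0 and 1.22), so neither dominates a Euclidean distance computed on the result.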

Examples and use cases

Exploratory analysis and dimensionality reduction are two of the most common uses for unsupervised learning.

Exploratory analysis, which uses algorithms to detect patterns that were previously unknown, has a range of real-world enterprise applications. For example, businesses can use exploratory analysis as a starting point for their customer segmentation efforts.

Dimensionality reduction is used for data visualization and for enhancing the performance of ML algorithms. Algorithms such as principal component analysis (PCA) and autoencoders reduce the number of variables or features (dimensions) within the data sets so that attention can go to the features relevant to a given objective. Some experts explain this by saying that dimensionality reduction removes noisy data. ML engineers often use latent variable model-based algorithms to do this work. For example, an organization can use dimensionality reduction to make blurry images easier to read by suppressing background noise.
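As a concrete sketch of the PCA idea above, the Python code below finds the first principal component of a small two-feature data set (the direction along which the data varies most) by running power iteration on the covariance matrix, then projects each row onto it so that two features become one. It also computes the reconstruction error mentioned earlier among the best practices. This is an illustrative, unoptimized implementation that assumes a single dominant component exists; the data is made up, and real projects would normally use a linear algebra library's eigendecomposition or SVD.

```python
import math

def first_principal_component(data, iterations=100):
    """Return (mean, unit vector) for the direction of maximum variance."""
    n, d = len(data), len(data[0])
    mean = [sum(row[j] for row in data) / n for j in range(d)]
    centered = [[row[j] - mean[j] for j in range(d)] for row in data]
    # Sample covariance matrix of the centered data.
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    v = [1.0] * d  # starting guess; must not be orthogonal to the top component
    for _ in range(iterations):
        # Power iteration: repeatedly multiply by cov and renormalize.
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return mean, v

def project(data, mean, component):
    """Reduce each row to a single coordinate along the component."""
    return [sum((x - m) * c for x, m, c in zip(row, mean, component)) for row in data]

def reconstruction_error(data, mean, component):
    """Mean squared distance between each row and its 1-D reconstruction."""
    total = 0.0
    for row, t in zip(data, project(data, mean, component)):
        rebuilt = [m + t * c for m, c in zip(mean, component)]
        total += sum((x - r) ** 2 for x, r in zip(row, rebuilt))
    return total / len(data)

data = [[1.0, 2.1], [2.0, 3.9], [3.0, 6.2], [4.0, 7.8], [5.0, 10.1]]
mean, pc1 = first_principal_component(data)
print(pc1)                       # roughly proportional to (1, 2)
print(project(data, mean, pc1))  # one number per row instead of two
print(reconstruction_error(data, mean, pc1))
```

Each two-feature row becomes a single coordinate, and the small reconstruction error indicates that little information was lost because the points lie close to a line.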

Additionally, organizations can use unsupervised learning for the following applications:

- Clustering anomaly detection. This technique uses clustering to detect outliers, data points that don't fit well into any group, without labeling the data.

- Association rule mining. Unsupervised learning identifies co-occurrence patterns in large data sets and how items affect each other. This application is often used to detect suspicious activity, disease symptoms and customer shopping habits.

- Cybersecurity. Cybersecurity software trained with unsupervised learning can help detect when a cyberattack might occur as well as where and how.

- Customer segmentation. Marketing groups personalize their advertising strategies based on which categories their customers fit into.

- Medical imaging. Healthcare organizations use the unsupervised ML features in radiology and pathology devices to help detect and diagnose diseases in patients.

- Prognostic validity. Often used in healthcare, this application groups patients with similar health issues and predicts how these patients will do over time.

- Recommendation engines. Organizations gather data about people's browsing, shopping and viewing habits to provide them with personalized content.

- Image compression. Unsupervised learning can reduce the size of images by identifying and removing redundant information. This helps with storage optimization and transmission of visual data.

- Computer vision. Unsupervised learning algorithms are applied to visual tasks, including object recognition, as they can help identify and categorize objects in images without needing labeled data sets as examples.
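The clustering-based anomaly detection idea listed above can be sketched by scoring each point by its distance to the nearest cluster centroid and flagging points whose score is unusually high. The Python sketch below assumes the centroids already came from a clustering step such as k-means, and it uses a simple cutoff of the mean score plus two standard deviations. Both the cutoff rule and the sample data are illustrative assumptions; real systems tune the threshold to their tolerance for false alarms.

```python
import math
import statistics

def flag_outliers(points, centroids, z=2.0):
    """Return points that lie unusually far from every cluster centroid."""
    # Anomaly score: distance from each point to its nearest centroid.
    scores = [min(math.dist(p, c) for c in centroids) for p in points]
    cutoff = statistics.fmean(scores) + z * statistics.pstdev(scores)
    return [p for p, s in zip(points, scores) if s > cutoff]

# Hypothetical centroids from an earlier clustering run, plus observations.
centroids = [(0, 0), (10, 10)]
points = [(0, 1), (1, 0), (0, -1), (-1, 0),
          (10, 11), (11, 10), (10, 9), (9, 10),
          (5, 5)]
print(flag_outliers(points, centroids))
```

Here only (5, 5), which sits between the two clusters, is flagged. Because the cutoff is relative to the spread of the scores, a data set in which every point fits its cluster equally well flags nothing.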

Future of unsupervised learning technology

Unsupervised learning technology is experiencing substantial growth. According to a report by Allied Market Research, the global unsupervised learning market, which was valued at $4.2 billion in 2022, is projected to reach $86.1 billion by 2032.

This growth is driven by the increasing availability of diverse data sets and advancements in AI and ML techniques. Despite challenges such as limited interpretability, rising demand for anomaly detection and cybersecurity is expected to create significant opportunities for expansion of the unsupervised learning market.
