What is prompt engineering?

Prompt engineering is an artificial intelligence (AI) engineering technique that refines large language models (LLMs), with specific prompts and recommended outputs. It also is part of the process of refining input to various generative AI (GenAI) services to generate text or images. Prompt engineering helps generative AI tools create various types of content and digital artifacts, including robotic process automation bots, 3D assets, scripts and robot instructions.

Prompt engineering techniques help tune LLMs for specific use cases, ranging from text-based output to graphic design to cybersecurity. However, prompt engineering for various existing generative AI tools is its most widespread use, because there are far more users of existing tools than developers working on new ones.

Prompt engineering combines elements of logic, coding, art and sometimes additional modifiers, such as adjectives and adverbs to make prompts more specific. The prompt can include natural language text, images or other types of input data. Although the most common generative AI tools can process natural language queries, the same prompt will likely generate different results across AI services and tools. Each tool has its own special modifiers to make it easier to describe the weight of words, styles, perspectives, layout or other properties of the desired output or response.

Why is prompt engineering important to AI?

Prompt engineering is essential for creating better AI-powered services, minimizing biases and getting better results from existing generative AI tools.

This article is part of

What is GenAI? Generative AI explained

Creating better AI-powered services

In terms of creating better AI, prompt engineering can help teams tune LLMs and troubleshoot workflows for specific results. Enterprise developers might experiment with this aspect of prompt engineering when tuning an LLM like OpenAI's GPT-4 to power customer-facing chatbots or search engines, or to handle specific tasks such as creating contracts tailored to the needs of a specific business or industry. For example, a law firm might use generative AI to generate contracts that use consistent language.

Prompt engineering also plays a role in identifying and mitigating various types of prompt injection attacks. These kinds of attacks are a modern variant of Structured Query Language injection attacks in which malicious actors or curious experimenters try to break the logic of generative AI services, such as OpenAI's ChatGPT, Microsoft Copilot or Google Gemini. The models can exhibit erratic behavior if asked to ignore previous commands, enter a special mode or make sense of contrary information. In these cases, enterprise developers can remediate the problem by exploring the prompts in question and fine-tuning the service's deep learning models.

In other cases, researchers have to create prompts to elicit sensitive information from the underlying generative AI engine. For example, data scientists discovered that the secret name of Microsoft Bing's chatbot is Sydney and that ChatGPT has a special DAN -- aka "Do Anything Now" -- mode that can break normal rules. Developers can use prompt engineering to protect against such access by directing generative models to never disclose specific sensitive information.

Microsoft had to adjust Bing Chat's prompt engineering capabilities when it started responding with misinformation and berating users. The company reduced the number of interactions users could have with the tool within a single session. However, longer-running interactions can lead to better results, so improved prompt engineering will be required to strike the right balance between better results and safety.

Improving the accuracy of existing GenAI tools

In terms of improving existing generative AI tools, prompt engineering can help users identify ways to reframe their query to home in on the desired results. A writer might experiment with different ways of framing the same question to tease out the best text or style of writing. For example, in tools such as ChatGPT, variations in word order and the number of times a single modifier is used can significantly affect the final text.

Developers also use prompt engineering to combine examples of existing code and descriptions of problems they're trying to solve for code completion. Similarly, the right prompt can help them interpret the purpose and function of existing code to understand how it works and how it could be improved or extended.

In the case of text-to-image synthesis, prompt engineering helps define various characteristics of generated imagery. Users can request that the AI model create images in a particular style, perspective, aspect ratio, point of view or image resolution. The first prompt is usually just the starting point, as subsequent requests let users downplay certain aspects of the image, enhance others, and add or remove objects.

What are some real-world use cases of prompt engineering?

Businesses are finding creative uses for GenAI prompt engineering, such as the following:

- Writing. Prompt engineering helps journalists, marketers and other writers generate ideas and speed up content creation. GenAI tools can help with tasks such as generating transcripts from live events and creating screenplays from narrative text.

- Graphic design. Prompts help generative models shape and produce detailed designs. More specific prompts mean more detailed design outputs.

- Software development and engineering. Prompt engineering simplifies software developers' jobs as generative models can solve coding problems and generate code snippets faster than people can.

- Healthcare. LLMs are used to summarize and analyze large amounts of patient data, case studies and white papers. Customized chatbots are used to answer patients' questions.

- Conversational AI. Various vertical markets create, customize and use their own conversational AI programs. For example, e-commerce companies use custom chatbots to interact with customers, answer basic questions and address concerns. An AI model trained for customer service might use prompt engineering to help consumers solve problems more efficiently. In this case, it might be desirable to use natural language processing to generate summaries to help people with different skill levels analyze and solve a problem on their own. For example, a skilled technician would only need a summary of key steps, while a customer would need a longer, step-by-step guide elaborating on procedures involved.

- Cybersecurity. In the tech sector, cybersecurity professionals use prompts to get generative models to simulate cyberattacks so that they can test how prepared systems are to combat such attacks and discover vulnerabilities.

- Law. A law firm might want to use a generative AI model to help lawyers automatically generate contracts in response to a specific requirement, such as that all clauses in a contract reflect existing clauses in the firm's contract documentation library to prevent new summaries from introducing legal issues.

Prompt engineering examples

There are differences in the types of AI prompts used to generate text, code and images. Here are examples of tools used to create each type of user-generated content and the types of prompts often used:

Text: ChatGPT and OpenAI's GPT-4

- What's the difference between generative AI and traditional AI?

- Write 10 compelling variations for the headline "Top generative AI use cases for the enterprise."

- Write an outline for an article or blog post about the benefits of generative AI for marketing.

- Now write 300 words for each section.

- Create an engaging headline for each section.

- Write a 100-word product description for Product XYZ in five different styles.

- Define the term "prompt engineering" in iambic pentameter in the style of Shakespeare.

Code: ChatGPT and OpenAI's Codex

- Act as an American Standard Code for Information Interchange artist that translates object names into ASCII code.

- Find mistakes in the following code snippet.

- Write a function that multiplies two numbers and returns the result.

- Create a basic REST API in Python.

- What function is the following code doing?

- Simplify the following code.

- Continue writing the following code.

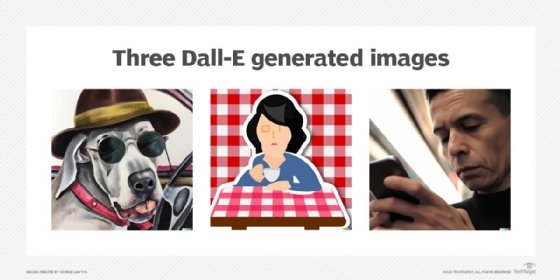

Images: Stability AI's Stable Diffusion, Midjourney, OpenAI's Dall-E 3

- Create an image of a dog in a car wearing sunglasses and a hat in the style of Salvador Dalí.

- A lizard on the beach in the style of Claymation art.

- A man using a phone on the subway, 4K, bokeh -- a higher 4K-resolution image with bokeh blurring.

- A sticker illustration of a woman drinking coffee at a table with a checkered tablecloth.

- A jungle forest with cinematic lighting and nature photography.

- A first-person image looking out at orange clouds during a sunrise.

What are prompt engineering techniques?

There are various prompt engineering techniques. The following are some frequently used techniques:

- Chain-of-thought prompting. In chain-of-thought (CoT) prompting, a user prompts an LLM to solve a complex problem that's broken down into smaller, logical steps so that the LLM more easily understands the problem. This must be done within a single prompt. For example, a complicated math problem is more easily understood when instructions are delivered step by step in one prompt.

- Zero-shot prompting. An LLM is given a task that doesn't require specific examples in the input. The user relies on the LLM's vast machine learning training data to produce an output. One example would be asking what an obscure word or term means.

- Few-shot prompting. Users build on zero-shot prompting, providing more examples in their prompts to get more specific outputs. For instance, an LLM can be prompted to write a short story involving certain characters and events. This approach isn't as suitable for complex tasks as CoT prompting.

- Prompt chaining. Prompt chaining is similar to CoT prompting in that it requests an LLM to solve complex problems. However, prompt chaining involves multiple contextually related prompts, while CoT involves a single, longer prompt listing all the elements of a given problem.

- Self-consistency prompting. This technique breaks down complex problems in different ways, giving an LLM multiple angles to understand and answer the prompt. This could mean receiving multiple model outputs, in which case it's up to the user to select the best one. An example would be asking an LLM to do the same math problem in multiple ways to ensure the same output is achieved each time.

- Meta prompting. Users format prompts clearly with the structure and syntax needed to get specific answers from the LLM. These types of prompts are more abstract and don't require long, detailed requests from a user. For example, a user can prompt an LLM to explain how a process works, then send a subsequent prompt asking it to break that process down into specific steps.

- Generated knowledge prompting. This technique involves a user instructing an LLM to provide general knowledge related to an answer before answering the prompt. This gives the user a better understanding of that answer. When asking an LLM about the benefits of new technology, the user might first request background information related to it.

Tips and best practices for writing good prompts

Since prompt engineering is an iterative process, one of the most important tips for writing good prompts is to phrase a concept in diverse ways to see how they work. Use various modifiers, styles, perspectives, names of authors or artists, and formatting to make the request in different ways. This approach produces nuances that can provide more interesting results for a particular query.

Another approach is to use best practices for a specific type of output. For example, when writing product descriptions for marketing copy, the AI system can be asked to use different variations, styles and levels of detail. For a more technical piece of writing that requires understanding a difficult concept, it might be helpful to ask how it compares with a related concept to help understand the differences.

It's also helpful to play with the different types of input in a prompt. This could include examples, input data, instructions or questions combined in different ways. Even though most tools limit the amount of user inputs, it's possible to provide instructions in one round that apply to subsequent prompts.

Once a user has basic familiarity with a tool, it's worth exploring its special modifiers. Many generative AI apps have short keywords for describing properties such as style, level of abstraction, resolution and aspect ratio, as well as methods for weighing the importance of words in the prompt. These can make it easier to precisely describe specific variations and reduce time spent writing effective prompts.

It can also be worth exploring prompt engineering integrated development environments (IDEs). These tools organize prompts and results for engineers to fine-tune generative AI models and for users trying to achieve a particular type of result. Engineering-oriented IDEs include open source tools such as Snorkel and PromptChainer. More user-focused prompt engineering IDEs include Playground AI and DreamStudio.

AI engineering is a viable career option for aspiring tech professionals. Explore what AI engineers do and how to become one.