What is prompt chaining? Definition and benefits

Prompt chaining is a technique used when working with generative artificial intelligence (generative AI) models in which the output from one prompt is used as input for the next. This method is a form of prompt engineering, which is the practice of improving how questions and problems are posed to elicit better output from pretrained generative AI models.

Prompt chaining is best suited to solve a complicated problem in a piecewise manner or to refine and expand on an initial output. It's helpful for users who might have a task in mind and a general idea of their desired output, but don't know what the exact details or structure of that output should be.

Prompt chaining is most commonly used when interacting with large language models (LLMs), as these models do a good job of retaining context and refining previously generated output without making too many changes or removing desirable features. Developers are also working on iterative refinement for other types of generative AI models, such as image generators. For example, OpenAI's DALL-E image generation model, accessible within ChatGPT, offers this capability with varying degrees of success.

How does prompt chaining work?

The process starts like any other interaction with a generative AI model: by providing the model with an initial prompt, usually a question or a statement describing the desired output. After processing this initial input, the model generates its first output.

That initial output is then evaluated, either by a human user or an automated system trained to check against criteria such as accuracy and creativity. Based on the results of that evaluation, the user or system creates another prompt that considers the feedback from the previous round, aiming to bring the output closer to the user's intent.

For example, the evaluation might determine that the initial output is overly broad and not sufficiently focused on the target problem. In the next prompt, the user instructs the model to focus on a specific element. The new prompt is fed back into the model, and the process continues until a satisfactory final output is achieved.
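The generate, evaluate and re-prompt cycle described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: `fake_model` is a hypothetical stand-in for a real LLM call, and the `evaluate` check (a required term plus a word limit) is an assumed, deliberately simple substitute for a human reviewer or automated evaluator.

```python
from typing import Callable, List, Tuple

def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; returns canned replies."""
    if "Focus only on" in prompt:
        return "Battery life: lasts 12 hours on a single charge."
    return "The laptop has a screen, a keyboard, a battery and many other parts."

def evaluate(output: str, required_term: str, max_words: int) -> List[str]:
    """Toy automated check; returns a list of problems found in the output."""
    problems = []
    if required_term.lower() not in output.lower():
        problems.append(f"missing '{required_term}'")
    if len(output.split()) > max_words:
        problems.append("too long")
    return problems

def refine(model: Callable[[str], str], first_prompt: str,
           required_term: str, max_words: int,
           max_rounds: int = 3) -> List[Tuple[str, str]]:
    """Re-prompt until the output passes the check or the rounds run out."""
    prompt = first_prompt
    history: List[Tuple[str, str]] = []
    for _ in range(max_rounds):
        output = model(prompt)
        history.append((prompt, output))
        problems = evaluate(output, required_term, max_words)
        if not problems:
            break
        # The next prompt carries the previous output plus the feedback.
        prompt = (f"Here is your previous answer:\n{output}\n"
                  f"Problems: {', '.join(problems)}.\n"
                  f"Focus only on {required_term} and keep it "
                  f"under {max_words} words.")
    return history

history = refine(fake_model, "Describe the laptop.", "battery life", 10)
for round_number, (prompt, output) in enumerate(history, start=1):
    print(f"Round {round_number}: {output}")
```

The `max_rounds` cap is a design choice worth keeping in a real system: it bounds the chain when the evaluator never reports a satisfactory output.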

From a technical perspective, prompt chaining is effective because it takes advantage of certain aspects of LLM architecture. Structurally speaking, LLMs are neural networks that rely heavily on transformer models, which are adept at identifying patterns and relationships in long sequences of text data. Thus, LLMs are well-suited for recognizing and replicating complex patterns and maintaining an awareness of context over time.

Prompt chaining involves building on previous output, with each new prompt incrementally adjusting the context or focus. This method is a good fit for LLMs' ability to manage context over extended sequences and allows for more nuanced refinement compared with giving the LLM a lengthy, detailed initial prompt.
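One way to picture how each prompt builds on earlier context is as a growing list of messages. The sketch below assumes the role/content message layout used by many chat-style interfaces; `fake_model` and `add_turn` are hypothetical names introduced only for illustration.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]

def fake_model(messages: List[Message]) -> str:
    """Hypothetical stand-in for a chat model; reports how much context it saw."""
    latest = messages[-1]["content"]
    return f"Reply to '{latest}' using {len(messages)} message(s) of context"

def add_turn(messages: List[Message],
             model: Callable[[List[Message]], str],
             user_prompt: str) -> str:
    """Append the user's prompt, call the model on the full history, store the reply."""
    messages.append({"role": "user", "content": user_prompt})
    reply = model(messages)
    messages.append({"role": "assistant", "content": reply})
    return reply

conversation: List[Message] = []
first = add_turn(conversation, fake_model, "Draft a product tagline.")
second = add_turn(conversation, fake_model, "Make it shorter.")
print(second)
```

Because the second call receives the first prompt and the first reply along with the new instruction, a short follow-up such as "Make it shorter." is enough to steer the output.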

Prompt chaining techniques

Prompt chaining includes several techniques, each of which takes a specific approach to chaining prompts. LLM users should understand how they differ and when to use each. They include the following:

- Interactive chaining. With this technique, user feedback is incorporated into a chain to respond to LLM outputs in real time. Users have free-flowing conversations with the LLM, adding new information and ideas to continuously shape outputs. LLM applications refine their outputs in response to the human feedback they get.

- Sequential chaining. The LLM user breaks down complex tasks into sequential prompts, with each prompt representing one step or phase. This method is useful for tasks such as summarizing long text in sections or sorting data sets into various parts.

- Loop chaining. Users create loops of prompts so that the steps articulated through those prompts can be reused across many inputs or tasks. For example, loop chaining is used when multiple data sets require the same processing and organizing tasks.

- Conditional chaining. Users provide "if-then" logic to an LLM to shape its outputs. With this technique, the next prompt or output depends on whether a stated condition is met.
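Three of the techniques above (sequential, loop and conditional chaining) can be sketched as small Python functions. This is an illustrative sketch under stated assumptions: `fake_model` is a hypothetical stand-in that simply tags its input with the task name, and in this version the "if-then" branch is applied by the calling code rather than written into the prompt itself.

```python
from typing import Callable, List

Model = Callable[[str], str]

def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; tags the text with the task name."""
    task, _, text = prompt.partition(":")
    return f"[{task.strip()}] {text.strip()}"

def sequential_chain(model: Model, steps: List[str], text: str) -> str:
    """Sequential chaining: each step's output becomes the next step's input."""
    for step in steps:
        text = model(f"{step}: {text}")
    return text

def loop_chain(model: Model, steps: List[str], items: List[str]) -> List[str]:
    """Loop chaining: reuse the same sequence of steps for every item."""
    return [sequential_chain(model, steps, item) for item in items]

def conditional_chain(model: Model, text: str, limit: int) -> str:
    """Conditional chaining: choose the next prompt based on the last output."""
    draft = model(f"summarize: {text}")
    if len(draft) > limit:
        return model(f"shorten: {draft}")
    return draft

print(sequential_chain(fake_model, ["extract", "summarize"], "report A"))
print(loop_chain(fake_model, ["extract"], ["report A", "report B"]))
print(conditional_chain(fake_model, "short note", limit=10))
```

Interactive chaining is omitted here because it depends on live human input rather than on a fixed control flow.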

Prompt chaining vs. chain-of-thought prompting

Prompt chaining and chain-of-thought (CoT) prompting sound similar, and both techniques aim to improve LLM output through prompt engineering. However, they differ in a key way. Prompt chaining uses the output from one interaction with a model as input for the next, so it inherently involves multiple prompts. By comparison, CoT prompting uses a single, longer prompt that asks the model to describe the step-by-step reasoning process used to arrive at its answer. In many cases, CoT prompts also include one or more examples of such step-by-step reasoning as an illustration for the model.
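The difference is easiest to see by counting model calls. In the hedged sketch below, `build_cot_prompt` produces one prompt that contains a worked example and a request for step-by-step reasoning, while `run_prompt_chain` makes three separate calls and passes each reply into the next prompt. `RecordingModel` is a hypothetical stand-in that only records what it is sent, and the wording of every prompt is an assumption made for illustration.

```python
from typing import List

QUESTION = "A store sells pens at 3 for $2. How much do 12 pens cost?"

class RecordingModel:
    """Hypothetical stand-in for an LLM; records prompts and returns placeholders."""
    def __init__(self) -> None:
        self.prompts: List[str] = []

    def __call__(self, prompt: str) -> str:
        self.prompts.append(prompt)
        return f"<reply {len(self.prompts)}>"

def build_cot_prompt(question: str) -> str:
    """Chain-of-thought: ONE prompt with a worked example of the reasoning."""
    example = (
        "Q: Apples cost 2 for $1. How much do 6 apples cost?\n"
        "A: 6 apples is 3 groups of 2. Each group costs $1. "
        "3 x $1 = $3. The answer is $3."
    )
    return f"{example}\n\nQ: {question}\nA: Let's think step by step."

def run_prompt_chain(model: RecordingModel, question: str) -> str:
    """Prompt chaining: SEVERAL prompts, each fed the previous reply."""
    facts = model(f"List the quantities given in this problem: {question}")
    plan = model(f"Using these quantities:\n{facts}\n"
                 "Describe how to compute the answer.")
    return model(f"Follow this plan and give only the final answer:\n{plan}")

cot_model = RecordingModel()
cot_model(build_cot_prompt(QUESTION))

chain_model = RecordingModel()
run_prompt_chain(chain_model, QUESTION)

print(len(cot_model.prompts), "call for CoT;",
      len(chain_model.prompts), "calls for the chain")
```

The two techniques are not mutually exclusive: any single link in a chain can itself be a CoT prompt.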

Prompt chaining benefits

Some of the specific benefits of prompt chaining include the following:

- Flexibility. Prompt chaining breaks down a problem or query into multiple stages, which gives users several opportunities to provide feedback on model output or edit their querying approach. This offers better flexibility and customization in LLM output.

- Creativity. Prompt chaining is useful for open-ended, creative tasks such as brainstorming. Users engage in a back-and-forth dialogue that asks the model to expand on particularly promising ideas.

- Precision. The chaining approach lets users gradually adjust their query and provide feedback on the model's responses at each step. In this way, the technique elicits more precise, higher-quality responses from LLMs.

- Efficiency. Handling a less-than-optimal response with chained prompts is often more efficient than restating the entire problem or asking the model to regenerate its response. Rather than asking the model to reprocess the entire task, prompt chaining focuses on making specific, targeted improvements.

- Problem solving. Prompt chaining breaks down complicated questions and scenarios into smaller, more manageable components. This makes it a useful technique for approaching complex tasks.

- Fine-tuning. Prompt chaining can also support model training and fine-tuning. A model's weights do not change during a conversation, but the iterative feedback collected across prompt chains can be used as training data to improve the model's ability to generate high-quality, accurate output.

Prompt chaining challenges

LLM users who rely on prompt chaining might experience challenges as well. Users should understand them before attempting to craft prompt chains. They include the following:

- More complex management. Prompt chaining can quickly become an endless stream of prompts that are difficult to manage. Longer chains mean more room for error. For example, if each prompt is a step in a long list of steps, an LLM might lose context between steps and produce inconsistent or unrelated outputs.

- Flaws in initial prompts. A chain is predicated on the substance of the initial prompt. If a user doesn't articulate a clearly defined problem within the first prompt, the LLM outputs likely won't align with the user's goals.

- Time consumption. Long chains of prompts take up a user's time. This is especially true for prompt engineers who use prompt chains to fine-tune LLMs.

- Potential costs. For users or enterprises that pay for LLM access based on usage, longer prompt chains mean more requests and more text processed, potentially leading to higher costs.
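A rough calculation shows why long chains can become expensive and hard to manage. The sketch below rests on two assumptions that do not hold for every product: that the full conversation history is resent to the model on each turn, and that word counts are an acceptable proxy for the tokens a provider might bill. The function name and sample turns are hypothetical.

```python
from typing import List, Tuple

def cumulative_input_words(turns: List[Tuple[str, str]]) -> int:
    """Total words sent as input across all calls when history is resent each turn.

    `turns` is a list of (prompt, reply) pairs in conversation order.
    """
    total_sent = 0
    history_words = 0
    for prompt, reply in turns:
        history_words += len(prompt.split())
        total_sent += history_words          # the whole history is the input
        history_words += len(reply.split())  # the reply joins the history
    return total_sent

turns = [
    ("a b c", "d e"),  # turn 1: 3-word prompt, 2-word reply
    ("f g", "h"),      # turn 2: 2-word prompt, 1-word reply
    ("i", "j k"),      # turn 3: 1-word prompt, 2-word reply
]

new_prompt_words = sum(len(prompt.split()) for prompt, _ in turns)
print(new_prompt_words)               # 6 words of new prompts
print(cumulative_input_words(turns))  # 19 words actually sent as input
```

Under these assumptions, the input grows with every turn even when each new prompt is short, which is one reason to keep chains as short as the task allows.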

How to build a prompt chain

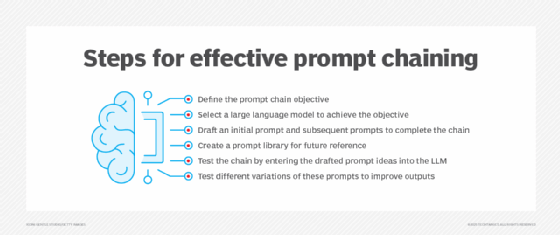

Each prompt chain is different, but a certain set of best practices applies to the basic chain creation process. Many of the tips that apply to prompt engineers also help typical LLM users. An effective chain-building strategy should include the following steps:

- Define objectives. The user needs a clear goal before building a prompt chain. Any detailed instructions the LLM will need to follow should be thought out carefully before the initial prompt is sent.

- Select an LLM. Pick a large language model that fits with the objective of the prompt chain.

- Map out sequences and subtasks. The initial prompt is the most important one. However, the purpose of prompt chaining is to avoid overwhelming an LLM with a complex initial prompt. Prompt chaining spreads the complexity over multiple prompts, so the user should divide complex details among different prompts.

- Build a prompt library. Once a new prompt is crafted, it should be added to a library or reference list of previous prompts to simplify prompt creation in the future.

- Test the chain. The user sends the initial prompt to the LLM for a test run and follows up the LLM's response with additional clarifying prompts. Prompts can be tweaked to test how the LLM would react and see if it produces better outputs.

- Iteratively refine and experiment. It's worth the effort to run chains repeatedly, refining prompts to improve previous LLM outputs.
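The steps above can be sketched as a small prompt library plus a runner that feeds each output into the next template. This is a minimal sketch, not a prescribed design: the library contents, the `run_chain` helper and the `fake_model` stand-in are all hypothetical, and the templates use Python's standard `string.Template` placeholders.

```python
from string import Template
from typing import Callable, Dict, List, Tuple

# A reusable library of prompt templates; $previous is filled with the
# output of the preceding step. The entries are illustrative only.
PROMPT_LIBRARY: Dict[str, Template] = {
    "outline": Template("Write a three-point outline for a blog post about $topic."),
    "draft": Template("Expand this outline into a short draft:\n$previous"),
    "tone": Template("Rewrite this draft in a $tone tone:\n$previous"),
}

def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; echoes the prompt's first line."""
    return f"OUTPUT({prompt.splitlines()[0]})"

def run_chain(model: Callable[[str], str], step_names: List[str],
              **variables: str) -> List[Tuple[str, str, str]]:
    """Run the named templates in order, chaining each output into the next."""
    previous = ""
    transcript: List[Tuple[str, str, str]] = []
    for name in step_names:
        prompt = PROMPT_LIBRARY[name].substitute(previous=previous, **variables)
        previous = model(prompt)
        transcript.append((name, prompt, previous))
    return transcript

transcript = run_chain(fake_model, ["outline", "draft", "tone"],
                       topic="prompt chaining", tone="friendly")
for name, prompt, output in transcript:
    print(f"{name}: {output}")
```

Keeping the full transcript makes the test and refine steps easier, because a single template can be changed and the chain rerun to compare outputs.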

Prompt chaining examples and use cases

Prompt chaining is a technique well-suited to creative uses and complex problem-solving. The following are examples of prompt chaining:

- Software development. Developers use prompt chaining to produce high-quality code. After generating code with an initial prompt, they then use chained prompts to optimize it, align it with specific organizational standards and debug it.

- Product design. Product teams use prompt chaining to work through the initial stages of product design. For example, a product designer could use an LLM to create the initial generative design documents for a product and then use chained prompts to refine those documents based on considerations such as technical feasibility and market research.

- Content creation. Marketing and content teams use prompt chaining to iteratively generate marketing collateral, such as blog posts, ad copy and social media posts. Chained prompts help refine a basic draft to match requirements such as brand voice, length and tone.

- Strategic planning. Executives and business leaders use chained generative AI prompts to assist with strategic decision support. After producing a general market analysis, subsequent follow-up prompts dig deeper into specific areas or introduce new relevant information, eventually producing a detailed scenario analysis or prediction.

Prompt chaining and other prompt engineering skills are integral for AI engineers.