What is machine learning bias (AI bias)?

Machine learning bias, also known as algorithm bias or AI bias, is a phenomenon that occurs when an algorithm produces results that are systematically prejudiced due to erroneous assumptions in the machine learning (ML) process.

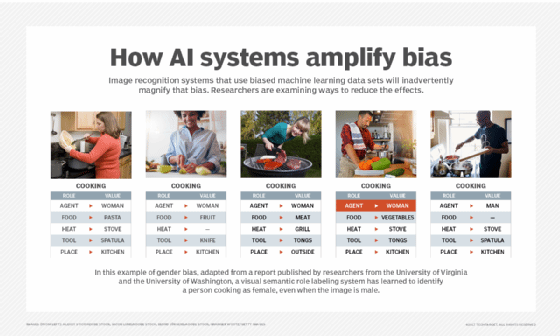

Machine learning, a subset of artificial intelligence (AI), depends on the quality, objectivity, scope and size of training data used to teach it. Faulty, poor or incomplete training data results in skewed or inaccurate predictions, reflecting the garbage in, garbage out admonishment used in computer science to convey the concept that the quality of the output is determined by the quality of the input.

ML bias generally stems from problems introduced by the individuals who design and train the ML systems. These people might create algorithms that reflect unintended cognitive biases or real-life prejudices. Or they could introduce bias in ML models because they use incomplete, faulty or prejudicial data sets to train and validate the ML systems.

Types of cognitive bias that can inadvertently affect ML algorithms include stereotyping, the bandwagon effect, priming, selective perception and confirmation bias.

Although these biases are often unintentional, the consequences of their presence in ML systems can be significant. Depending on how the ML systems are used, such biases could result in bad customer service experiences, reduced sales and revenue, unfair or possibly illegal actions, and potentially dangerous conditions.

To prevent biased models, organizations should check the data being used to train ML models for lack of comprehensiveness and for cognitive bias. The data should be representative of the different races, genders, backgrounds and cultures that could be adversely affected. Data scientists developing the algorithms should shape data samples so that they minimize algorithmic and other types of ML bias, and decision-makers should evaluate when it's appropriate, or inappropriate, to apply ML technology.
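A representativeness check like the one described above can start with something as simple as comparing each group's share of the training data against its share of the population the model will serve. The following is a minimal sketch in plain Python, not a standard procedure; the group labels, the reference shares and the 5-percentage-point tolerance are all hypothetical values chosen for illustration.

```python
from collections import Counter

def representation_gaps(train_groups, reference_shares, tolerance=0.05):
    """Compare each group's share of the training data with a reference share.

    train_groups: one (hypothetical) group label per training record.
    reference_shares: expected share of each group in the population the
        model will serve; this is an assumption the team must supply.
    Returns {group: observed_share - expected_share} for every group whose
    gap exceeds the tolerance in absolute value.
    """
    counts = Counter(train_groups)
    total = len(train_groups)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        gap = observed - expected
        if abs(gap) > tolerance:
            gaps[group] = round(gap, 3)
    return gaps


# Toy example: group "C" is badly underrepresented in the training sample.
train = ["A"] * 60 + ["B"] * 35 + ["C"] * 5
population = {"A": 0.45, "B": 0.35, "C": 0.20}
print(representation_gaps(train, population))  # {'A': 0.15, 'C': -0.15}
```

A flagged gap doesn't prove the model will be biased, but it tells data scientists where to collect more data or reweight samples before training.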

Types of machine learning bias

There are various ways that bias can be brought into an ML system. Common scenarios, or types of bias, include the following:

- Algorithm bias. This occurs when there's a problem within the algorithm that performs the calculations or other processing that powers the ML computations.

- Automation bias. This occurs when the results of automated systems are preferred over the results of human or other non-automated systems, even though the automated system might not be more accurate. In other words, users place more trust in AI output than its accuracy warrants.

- Sample bias. This happens when there's a problem with the data used to train the ML model. In this type of bias, the data used either isn't large enough or representative enough to teach the system. For example, using training data that features only female teachers trains the system to conclude that all teachers are female.

- Prejudice bias. In this case, the data used to train the system reflects existing prejudices, stereotypes and faulty societal assumptions, thereby introducing those same real-world biases into the machine learning itself. For example, using data about medical professionals that includes only female nurses and male doctors could perpetuate a real-world gender stereotype about healthcare workers in the computer system.

- Implicit bias. Similar to prejudice bias, implicit bias occurs when models are designed or data is curated using the human designer's own ways of thinking or personal experiences, which might not fully or accurately map to the task at hand.

- Group attribution bias. This occurs when the characteristics of an individual or single sample are improperly applied to a broader set of individuals or a group of data points. Such generalizations made about an entire group can overlook the nuances of individual samples.

- Measurement bias. As the name suggests, this bias arises due to underlying problems with the accuracy of the data and how it was measured or assessed. Using pictures of happy workers to train a system meant to assess a workplace environment could be biased if the workers in the pictures knew they were being measured for happiness; a system being trained to precisely assess weight is biased if the weights contained in the training data were consistently rounded up or down.

- Exclusion or reporting bias. This happens when an important data point is left out of the data being used. This can happen if the modelers don't recognize the data point as consequential. For example, incidents reported in police crime analytics might be skewed when incidents go unreported or under-reported because victims fail to report the incidents.

- Selection bias. This occurs when the data used in training either isn't large enough or representative enough, so it misrepresents the real-world population and lowers the model's accuracy and performance. There are several variations of selection bias, including coverage bias, where the data isn't representative; participation bias, where nonresponses leave gaps in the data; and sampling bias, where statistical randomization isn't used.

- Recall bias. This data quality bias develops in the data labeling stage, where labels are assigned inconsistently because of subjective observations. Inconsistent labels degrade evaluation metrics such as recall, which measures the share of actual positive cases that a model correctly identifies: true positives divided by the sum of true positives and false negatives.
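The recall bias entry above describes labels applied inconsistently by different people. A common way to quantify labeling consistency is Cohen's kappa, which compares how often two annotators agree with how often they would agree by chance: kappa = (p_o - p_e) / (1 - p_e). The sketch below computes it in plain Python; the annotators, the "happy"/"neutral" labels and the photo scenario are hypothetical. (scikit-learn offers the same statistic as `sklearn.metrics.cohen_kappa_score`.)

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("expected two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: share of items both annotators labeled identically.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: probability of matching if each annotator labeled at
    # random according to their own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1:
        return 1.0  # both annotators used a single, identical label throughout
    return (p_o - p_e) / (1 - p_e)

# Hypothetical labels from two annotators rating the same 10 workplace photos.
annotator_1 = ["happy", "happy", "neutral", "happy", "neutral",
               "happy", "happy", "neutral", "happy", "happy"]
annotator_2 = ["happy", "neutral", "neutral", "happy", "happy",
               "happy", "neutral", "neutral", "happy", "happy"]
print(round(cohens_kappa(annotator_1, annotator_2), 2))  # 0.35
```

Although the two annotators agree on 7 of the 10 photos, kappa is only about 0.35 because much of that agreement would be expected by chance alone. A low value is a signal that the labeling guidelines leave too much room for subjective judgment.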

Bias vs. variance

Data scientists and others involved in building, training and using ML models must consider not just bias, but also variance when seeking to create systems that can deliver consistently accurate results.

Like bias, variance is a source of prediction error. Unlike bias, which comes from wrong assumptions built into the model or its data, variance is a reaction to random fluctuations in the particular training set. These fluctuations, or noise, shouldn't affect the intended model, yet the system might still use that noise for modeling. In other words, variance is a problematic sensitivity to small fluctuations in the training set, which, like bias, can produce inaccurate results.

Taken another way, variance measures how much a model's output changes depending on which subset or portion of the training data it was trained on. For example, if the same model were trained separately on several subsets of the total data and then asked to make the same determinations, the variance would be the spread of its results across those training subsets. Ideally, the variance would be low or zero. Poorly selected data sets can result in unnecessarily or unacceptably high variance.
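The subset experiment described above can be run directly. The following minimal sketch, in plain Python, trains two models on 200 random subsets of a made-up data set (noisy samples of y = 2x) and measures how much each model's prediction at x = 0.5 varies. The toy data, subset sizes and the choice of a 1-nearest-neighbor model versus a least-squares line are assumptions for illustration only.

```python
import random
import statistics

def make_data(n, seed):
    """Toy data set: noisy samples of y = 2x for x in [0, 1] (made up for illustration)."""
    rng = random.Random(seed)
    xs = [rng.random() for _ in range(n)]
    return [(x, 2 * x + rng.gauss(0, 0.5)) for x in xs]

def fit_nearest_neighbor(train):
    """1-nearest-neighbor regression: copies the target of the closest training point."""
    def predict(x):
        return min(train, key=lambda p: abs(p[0] - x))[1]
    return predict

def fit_line(train):
    """Ordinary least-squares straight line."""
    xs = [x for x, _ in train]
    ys = [y for _, y in train]
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    slope = sum((x - mx) * (y - my) for x, y in train) / sum((x - mx) ** 2 for x in xs)
    intercept = my - slope * mx
    return lambda x: slope * x + intercept

def prediction_variance(fit, data, x_query, n_subsets=200, subset_size=30, seed=0):
    """Train on many random subsets of `data`; return the variance of the predictions at x_query."""
    rng = random.Random(seed)
    preds = [fit(rng.sample(data, subset_size))(x_query) for _ in range(n_subsets)]
    return statistics.pvariance(preds)

data = make_data(500, seed=42)
for name, fit in [("1-nearest neighbor", fit_nearest_neighbor), ("straight line", fit_line)]:
    print(f"{name}: variance at x=0.5 = {prediction_variance(fit, data, 0.5):.4f}")
```

The flexible nearest-neighbor model copies whichever noisy point happens to land closest in each subset, so its predictions swing widely; the straight line averages over all 30 points and barely moves.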

Variance can be reduced by careful application of data science methodologies, including cross-validation, limiting the number of features used, comparing results from similar or ensemble models, simplifying the models and preventing overfitting.

Although bias and variance are different, they're interrelated: changes that reduce one tend to increase the other. A more flexible model usually lowers bias but raises variance, while a simpler or more constrained model lowers variance at the cost of higher bias. The sensitivity to bias and variance is often influenced by the types of ML algorithms in use. Here are some examples:

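- Bagging algorithms reduce variance by averaging many models, each trained on a bootstrap sample of the data; they're typically applied to low-bias, high-variance base models.

- Decision tree algorithms can bring low bias and high variance.

- Linear regression algorithms can bring high bias and low variance.

- Random forest algorithms, which combine bagging with random feature selection across many decision trees, can bring low bias with lower variance than a single decision tree.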

As such, the objective in machine learning is to have a tradeoff, or balance, between the two to develop a system that produces a minimal number of errors.
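The tradeoff can be made concrete on a toy problem where the true function is known. The following minimal sketch repeatedly draws fresh noisy training sets from an assumed ground truth, y = x², fits k-nearest-neighbor regression for several values of k, and estimates squared bias (how far the average prediction is from the truth) and variance (how much predictions scatter across training sets). The function, noise level and k values are illustrative assumptions; in real projects the true function is unknown, so this decomposition is only possible in simulation.

```python
import random
import statistics

def true_f(x):
    """Assumed ground truth for this toy example (unknown in real projects)."""
    return x * x

def knn_predict(train, x, k):
    """k-nearest-neighbor regression: average target of the k closest training points."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / k

def bias_variance(k, n_train=40, n_trials=300, noise_sd=0.3, seed=0):
    """Estimate squared bias and variance of k-NN, averaged over a grid of test points."""
    rng = random.Random(seed)
    test_xs = [i / 10 for i in range(11)]
    preds = {x: [] for x in test_xs}
    for _ in range(n_trials):
        # Draw a fresh training set from the same noisy process each trial.
        xs = [rng.random() for _ in range(n_train)]
        train = [(x, true_f(x) + rng.gauss(0, noise_sd)) for x in xs]
        for x in test_xs:
            preds[x].append(knn_predict(train, x, k))
    bias_sq = statistics.fmean([(statistics.fmean(p) - true_f(x)) ** 2 for x, p in preds.items()])
    variance = statistics.fmean([statistics.pvariance(p) for p in preds.values()])
    return bias_sq, variance

for k in (1, 10, 40):
    b, v = bias_variance(k)
    print(f"k={k:2d}  bias^2={b:.4f}  variance={v:.4f}  sum={b + v:.4f}")
```

With k = 1 the model chases individual noisy points (low bias, high variance); with k = 40, the whole training set, every prediction is the overall mean (low variance, high bias). An intermediate k typically gives the smallest sum, which is the balance the paragraph above describes.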

How bias occurs at each stage of the ML pipeline and development lifecycle

Bias represents an array of insidious errors that typically result from imperfect human knowledge, poor assumptions, weak algorithms and, sometimes, malign intent. Although bias is often seen as a data issue, AI experts must learn to recognize the potential for bias at the following points along the ML pipeline:

- Data. Bias commonly occurs with data early in the ML lifecycle. Bias can occur in the data collection phase when raw data is improperly selected, incomplete or untrue in any form. Bias can also appear in the data preparation phase, when data is cleaned and transformed before being ingested into a model for training, for example, through the handling of missing values or through rounding errors. Finally, bias can appear in the feature selection phase, when data scientists select the details about the data that the model needs to make successful predictions.

- Model. Bias can be amplified in the selection of the actual models or algorithms such as classification versus regression; some algorithms are more sensitive to bias and variance than others. Some AI platforms employ multiple adversarial models to help counterweight the potential for errors or model bias.

- Development. Bias can be exacerbated during the development phase, when models are coded, if needed, and undergo training and testing. Errors in algorithms can introduce or amplify bias here. Poor training practices, such as improper or absent human feedback and insufficient validation with a small or unrepresentative test data set, will all have an adverse effect on the model.

- Operations. Bias can also arise after deployment while a model is operating in production. Improper human feedback can drive errors in the model, reducing its effectiveness. Further, humans must apply some of their own interpretations and opinions about the model's output. Users who don't trust or otherwise "like" the predictions might insert their own errors and biases after the fact, reducing the model's value.
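To illustrate the data preparation point in the list above, the following minimal sketch shows how a routine cleaning step, dropping records with missing values, can quietly shift group representation when values are missing more often for one group. The records, group names and missingness pattern are hypothetical.

```python
def group_shares(records, key="group"):
    """Share of records belonging to each group, rounded to 3 decimal places."""
    counts = {}
    for record in records:
        counts[record[key]] = counts.get(record[key], 0) + 1
    total = len(records)
    return {group: round(count / total, 3) for group, count in sorted(counts.items())}

# Hypothetical records: group "B" has far more missing income values, e.g.
# because one data source recorded that field less reliably for that group.
records = (
    [{"group": "A", "income": 50_000}] * 45
    + [{"group": "A", "income": None}] * 5
    + [{"group": "B", "income": 48_000}] * 25
    + [{"group": "B", "income": None}] * 25
)

# A common cleaning step: drop every record with a missing value.
complete = [r for r in records if r["income"] is not None]

print(group_shares(records))   # {'A': 0.5, 'B': 0.5}
print(group_shares(complete))  # {'A': 0.643, 'B': 0.357}
```

A balanced 50/50 data set becomes roughly 64/36 after cleaning, even though no one intended to exclude group B. Comparing group shares before and after each preparation step is a cheap way to catch this.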

How to prevent bias

Awareness and governance can help prevent machine learning bias. An organization that recognizes the potential for bias can institute best practices to combat it, including the following:

- Select training data that's appropriately representative, large and diverse enough to counteract common types of ML bias, such as sample and prejudice bias. There's no substitute for human review and collaboration in data quality activities such as data labeling.

- Test and validate to ensure the results of ML systems don't reflect bias due to algorithms or data sets.

- Monitor ML systems as they perform their tasks to ensure biases don't creep in over time, especially if the systems continue to learn as they work.

- Use additional resources, such as Google's What-If Tool or IBM's AI Fairness 360 open source toolkit, to examine and inspect models.

- Create a data collection method that accounts for different opinions. One data point could have more than one valid label. Taking those options into account when initially gathering data increases the model's flexibility.

- Understand any training data used, as these training data sets could contain classes or labels that can introduce bias. Don't overlook the importance of consistent, high-quality data labeling and annotation.

- Continually review the ML model and plan to make improvements as more feedback is received.

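- Avoid careless imputation. Imputation, the practice of filling in missing or incomplete entries in data sets, can introduce bias, especially when humans supply the missing values based on their own assumptions.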
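The testing and monitoring steps above often start with simple group-level metrics. The following plain-Python sketch computes each group's selection rate (share of positive decisions), the statistical parity difference (gap between the highest and lowest rates) and the disparate impact ratio (lowest rate divided by highest). Toolkits such as AI Fairness 360 report metrics of this kind, but this code doesn't use or reproduce their APIs. The decisions and group labels are hypothetical, and the 0.8 threshold is the "four-fifths" rule of thumb from U.S. employment-selection guidance, used here only as an illustrative cutoff.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Share of positive predictions (1 = e.g. 'approve') within each group."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def fairness_report(predictions, groups):
    rates = selection_rates(predictions, groups)
    lowest, highest = min(rates.values()), max(rates.values())
    return {
        "selection_rates": rates,
        # Statistical (demographic) parity difference: gap between extremes.
        "parity_difference": highest - lowest,
        # Disparate impact ratio: lowest selection rate over highest.
        "disparate_impact": lowest / highest if highest else 1.0,
    }

# Hypothetical model decisions (1 = approve) for two groups of eight applicants.
preds = [1, 1, 1, 0, 1, 1, 0, 1,
         1, 0, 0, 1, 0, 0, 1, 0]
groups = ["X"] * 8 + ["Y"] * 8

report = fairness_report(preds, groups)
print(report)
if report["disparate_impact"] < 0.8:  # four-fifths rule of thumb
    print("Flag for review: possible adverse impact")
```

Here group X is approved 75% of the time and group Y 37.5% of the time, a disparate impact ratio of 0.5, so the check flags the model. Running the same report on each new batch of production decisions turns it into a basic monitoring signal.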

History of machine learning bias

Trishan Panch and Heather Mattie, in a program at the Harvard T.H. Chan School of Public Health, are among those who have defined the term algorithmic bias. ML bias has been a known risk for decades, yet it remains a complex problem that has been difficult to counteract.

In fact, ML bias has already been implicated in real-world cases, with some bias having significant and even life-altering consequences.

COMPAS is one such example. The COMPAS algorithm -- short for the Correctional Offender Management Profiling for Alternative Sanctions -- used ML to predict the potential for criminal defendants to reoffend. Multiple states had rolled out the software in the early part of the 21st century before its bias against people of color was exposed and subsequently publicized in news articles.

In 2018, Amazon, a hiring powerhouse whose recruiting policies shape those at other companies, scrapped its recruiting algorithm after finding that it keyed on word patterns rather than relevant skill sets. The algorithm inadvertently penalized resumes containing certain words, including "women's," a bias that favored male candidates by discounting women's resumes.

Meanwhile, that same year, academic researchers announced findings that commercial facial recognition AI systems contained gender and skin-type biases.

ML bias has also appeared in the medical field. For example, a 2019 study found racial bias in an AI-based system that multiple hospitals used to decide which patients needed extra care. Black patients assigned the same risk score as white patients were, on average, considerably sicker, so the algorithm recommended the same level of care for black patients who had greater needs.

The 2024 research paper titled "How Much Does Racial Bias Affect Mortgage Lending? Evidence from Human and Algorithmic Credit Decisions" from the Federal Reserve Bank of Philadelphia showed that, in 2018 and 2019, AI bias was responsible for 18% of black mortgage applicants being denied. Likewise, a story reported by The Markup and distributed by the Associated Press in August 2021 found that lenders were 40% more likely to turn down Latino applicants, 50% more likely to turn down Asian/Pacific Islander applicants, 70% more likely to turn down Native American applicants and 80% more likely to turn down black applicants than similar white applicants.
