large language model operations (LLMOps)

What is large language model operations (LLMOps)?

Large language model operations (LLMOps) is a methodology for managing, deploying, monitoring and maintaining LLMs in production environments.

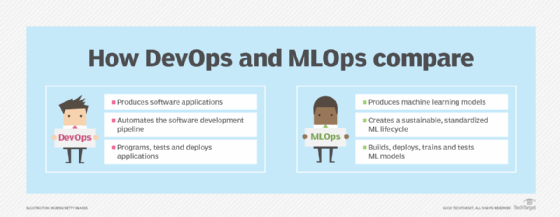

LLMOps narrows the focus of the machine learning operations (MLOps) framework -- itself an extension of DevOps -- to address the unique challenges associated with LLMs, such as OpenAI's GPT series, Google's Gemini and Anthropic's Claude. It has gained prominence and popularity since early 2023, when businesses increasingly began to explore generative artificial intelligence (AI) deployments.

The main goal of LLMOps is to ensure that LLMs are reliable, efficient and scalable when integrated into real-world applications. The approach offers a number of benefits, including the following:

- Flexibility. With its focus on enabling models to handle varying workloads and integrate with various applications, LLMOps helps make LLM deployments more scalable and adaptable.

- Automation. Like MLOps and DevOps, LLMOps heavily emphasizes automated workflows and continuous integration/continuous delivery (CI/CD) pipelines, reducing the need for manual intervention and speeding up development cycles.

- Collaboration. Adopting an LLMOps approach standardizes tools and practices across the organization and ensures that best practices and knowledge are shared among relevant teams, such as data scientists, AI engineers and software developers.

- Performance. LLMOps implements continuous retraining and user feedback loops, with the goal of maintaining and improving model performance over time.

- Security and ethics. The cyclical nature of LLMOps ensures that security tests and ethics reviews occur regularly over time, protecting against cybersecurity threats and promoting responsible AI practices.

What are the stages of the LLMOps lifecycle?

To an extent, the LLMOps lifecycle overlaps with similar methodologies such as MLOps and DevOps, but there are several differences related to LLMs' unique characteristics. Moreover, the content of each stage varies depending on whether the LLM is built from scratch or fine-tuned from a pretrained model.

Data collection and preparation

This stage of LLMOps involves sourcing, cleaning and annotating data for model training. Building an LLM from scratch requires gathering large volumes of text data from diverse sources, such as articles, books and internet forums. Fine-tuning an existing foundation model is simpler, focusing on collecting a well-curated, domain-specific data set relevant to the task at hand, rather than a massive amount of more general data.

In both cases, the next step is preparing the data for model training. This involves standard data cleaning tasks -- such as removing duplicates and noise, and handling missing data -- as well as labeling data to improve its utility for specific tasks, such as sentiment analysis. Depending on the task's scope, this stage can also include augmenting the data set with synthetic data.

Given the extent and nature of LLMs' training data, teams should also take care to comply with relevant data privacy laws and regulations when gathering training data. For example, personally identifiable information should be removed to comply with laws such as the General Data Protection Regulation, and copyrighted works should be avoided to minimize potential intellectual property concerns.

Model training or fine-tuning

The next step is to choose a model -- whether an algorithmic architecture or a pretrained foundation model -- and train or fine-tune it on the data gathered in the first stage.

Training an LLM from scratch is complex and computationally intensive. Teams must design an appropriate model architecture and train the LLM on an enormous, diverse corpus of text data to enable it to learn general language patterns. The LLM is then optimized by tuning specific hyperparameters, such as learning rate and batch size, to achieve the best performance.

Fine-tuning an existing LLM is simpler, but still technically challenging and resource-intensive. The first step is to choose a pretrained model that fits the task, considering factors such as model size, speed and accuracy. Then, machine learning teams train the selected pretrained model on their task-specific data set to adapt it to that task. As when training an LLM from scratch, this process involves tuning hyperparameters. But when fine-tuning, teams must balance adjusting the weights to improve performance on the fine-tuning task without compromising the benefits of the model's pretrained knowledge.

Model testing and validation

This stage of the LLMOps lifecycle is similar for both types of models, although a fine-tuned LLM is more likely to have better performance in early tests compared with a model built from scratch, since the foundation model will have been tested during pretraining.

For both types, this stage involves evaluating the trained model's performance on a different, previously unseen data set to assess how it handles new data. This is measured through standard machine learning metrics -- such as accuracy, precision and F1 score -- and applying cross-validation and other techniques to improve the model's ability to generalize to new data.

This step should also include a bias and security assessment. Although foundation models have typically already undergone such testing, teams fine-tuning an existing model should still not overlook this step: The new data used for fine-tuning can introduce new biases and security vulnerabilities not present in the original pretrained LLM.

Deployment

The deployment stage of LLMOps is also similar for both pretrained and built-from-scratch models. As in DevOps more generally, this involves preparing necessary hardware and software environments, and setting up monitoring and logging systems to track performance and identify issues post-deployment.

Compared with other software -- including most other AI models -- LLMs require larger amounts of high-powered infrastructure, typically graphics processing units (GPUs) and tensor processing units (TPUs). This is especially true for organizations building and hosting their own LLMs, but even hosting a fine-tuned model or LLM-powered application requires significant compute. In addition, developers will usually need to create application programming interfaces (APIs) to integrate the trained or fine-tuned model into end applications.

Optimization and maintenance

The LLMOps lifecycle doesn't end after a model has been deployed. Teams must continuously monitor the deployed model's performance in production to detect model drift, which can degrade accuracy, as well as other issues such as latency and integration problems.

As in DevOps and MLOps, this process involves using monitoring and observability software to track the model's performance and detect bugs and anomalies. It can also include loops where user feedback is used to iteratively improve the model, as well as version control to manage different model versions to allow for rollbacks if needed.

For LLMs, continuous improvement also involves various optimization techniques. These include using methods such as quantization and pruning to compress models, and load balancing to distribute workloads more efficiently during high-traffic periods.

MLOps vs. LLMOps: What's the difference?

MLOps and LLMOps share a common foundation and goal -- managing machine learning models in real-world settings -- but they differ in scope. LLMOps focuses on one specific type of model, while MLOps is a broader framework designed to encompass ML models of any size or purpose, such as predictive analytics systems or recommendation engines.

MLOps applies DevOps principles to machine learning, emphasizing CI/CD, rapid iteration and ongoing monitoring. The overall goal is to simplify and automate the ML model lifecycle through a combination of team practices and tools.

Because MLOps was designed to ensure that machine learning models are consistently tested, versioned and deployed in a reliable and scalable way, it can be applied to LLMs, a subcategory of machine learning models. Amid expanding LLM use, however, the term LLMOps has emerged to account for LLMs' differences from other ML models, including the following:

- Development process. Less complex ML models are usually developed in house, whereas LLMs are often provided as pretrained models from AI startups and large tech companies. This shifts the focus of LLMOps to fine-tuning and customization, which require different tools and workflows.

- Visibility and interpretability. Developers have little control over the architecture and training process of pretrained LLMs, especially proprietary ones. Even open source LLMs typically only offer access to the model's code, not its training data. This lack of access to the model's internal workings and training data, as well as reliance on external AI providers' APIs, complicates troubleshooting and performance optimization.

- Ethics, security and compliance considerations. Although ethics and security are concerns for any machine learning project, LLMs present unique challenges due to their complexity and widespread use. Some biases and vulnerabilities might appear only in response to specific prompts, making them difficult to detect. Enterprise LLM deployments also raise concerns about data provenance, user privacy and regulatory compliance, requiring sophisticated data governance strategies.

- Operational and infrastructure requirements. LLMs are resource-intensive, requiring substantial compute power, specialized hardware such as GPUs or TPUs, and distributed computing techniques. Many other types of machine learning models, while still usually more resource-intensive than non-ML software, are comparatively lightweight.

- Scale and complexity. LLMs' size and complexity require teams to pay close attention to resource allocation, scaling and cost management -- particularly when serving LLMs in real-time applications, where they can suffer from high latency. Mitigating this problem can require advanced optimization techniques, such as model quantization, distillation and pruning.