What is image-to-image translation?

Image-to-image translation is a generative artificial intelligence (AI) technique that translates a source image into a target image while preserving certain visual properties of the original image. This technology uses machine learning and deep learning techniques such as generative adversarial networks (GANs); conditional adversarial networks, or cGANs; and convolutional neural networks (CNNs) to learn complex mapping functions between input and output images.

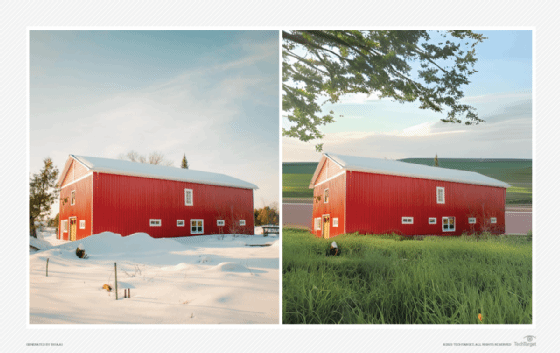

Image-to-image translation allows images to be converted from one form to another while retaining essential features. The goal is to learn a mapping between the two domains and then generate realistic images in whatever style a designer chooses. This approach enables tasks such as style transfer, colorization and super-resolution, a technique that improves the resolution of an image.

The image-to-image technology encompasses a diverse set of applications in art, image engagement, data augmentation and computer vision, also known as machine vision. For instance, image-to-image translation allows photographers to change a daytime photo to a nighttime one, convert a satellite image into a map and enhance medical images to enable more accurate diagnoses.

How does image-to-image translation work?

Image processing systems using image-to-image translation require the following basic steps:

- Define image domains. The process begins by defining the image domains, which represent the types of input and output images the system will handle. These domains can include diverse categories such as style transfer, super-resolution and semantic segmentation.

- Train the system. A data set containing paired examples of input and target images -- sometimes called ground truth target images -- is used to train the system so that it can learn the mapping that's required between the two domains.

- Combine the generator and discriminator. Once trained, a GAN is used to combine generator and discriminator networks. The generator network takes in an input image from the source domain and generates an output image that belongs to the target domain. Meanwhile, the discriminator network learns to distinguish real images in the target domain as well as synthesized images produced by the generator. A loss function metric is used to measure the difference between the generated output and ground truth target image.

A critical aspect of image-to-image translation is ensuring the model generalizes well in response to previously unseen or unsupervised scenarios. Cycle consistency and unsupervised learning help to ensure that if an image is translated from one domain to another and then back, it returns to its original form. Deep learning architectures, such as U-Net and CNNs, are also commonly used because they can capture complex spatial relationships in images. In the training process, batch normalization and optimization algorithms are used to stabilize and expedite convergence.

This article is part of

What is GenAI? Generative AI explained

Supervised vs. unsupervised image-to-image translation

The two main approaches to image-to-image translation are supervised and unsupervised learning.

Supervised learning

Supervised methods rely on paired training data, where each input image has a corresponding target image. Using this approach, the generated image system learns the direct mapping that's required between the two domains. However, obtaining paired data can be challenging and time-consuming, especially when dealing with complex image transformation.

Unsupervised learning

Unsupervised methods tackle the image-to-image translation problem without paired training examples. One prominent unsupervised approach is CycleGAN, which introduces the concept of cycle consistency. This involves two mappings: from the source domain to the target domain and vice versa. CycleGAN ensures the target domain is similar to the original source image.

For more information on generative AI-related terms, read the following articles:

AI models for image translation

Image-to-image translation and generative AI in general are touted for being cost-effective, but they're also criticized for lacking creativity. It's essential to research the various AI models that have been developed to handle image-to-image translation tasks, as each comes with its own unique benefits and drawbacks. Research groups such as Gartner also urge users and generative AI developers to look for trust and transparency when choosing and designing models.

Some of the most popular models include the following:

- StarGAN. This is a scalable, single-model image translation approach, designed to perform image translation for multiple domains. Unlike traditional methods that require building separate models for each pair of image domains, StarGAN consolidates the translation process into a unified framework. This model introduces a novel architecture that can effectively learn mappings between different image domains, enabling versatile and efficient image translation.

- CycleGAN. This is an unsupervised image-to-image translation model that has gained significant attention in the research community. It addresses the challenge of training data with unpaired images by using the concept of cycle consistency. By incorporating cycle consistency loss, which ensures the translated image can be mapped back to the original source image, CycleGAN achieves remarkable results in various image transformations without the need for paired examples.

- Pix2Pix GAN. This GAN is a conditional generative model that learns a mapping from an input image and a noise vector to the output image instead of from random noise. This conditional approach enables more controlled and precise translations. The model uses a U-Net architecture, which combines an encoder and decoder network to capture detailed pixel-to-pixel features and enable high-quality image generation.

- Unsupervised image-to-image translation (UNIT). The UNIT model focuses on unsupervised image translation and aims to learn mapping between different image domains without a paired training set of data. UNIT uses a U-Net autoencoder-like architecture and introduces a novel loss function that encourages the preservation of content representations during translation. This approach enables the model to generate visually appealing and semantically consistent images across different domains.

Image-to-image translation is a popular generative AI technology. Learn the eight biggest generative AI ethical concerns.