What is cognitive bias?

Cognitive bias is a systematic thought process caused by the tendency of the human brain to simplify information processing through a filter of personal experience and preferences. The filtering process is a coping mechanism that enables the brain to prioritize and process large amounts of information quickly. While the mechanism is effective, its limitations can cause errors in thought.

Amos Tversky and Daniel Kahneman introduced the concept of cognitive bias in 1972. Cognitive biases arise from the mental shortcuts that help people navigate daily life. These shortcuts are useful, but they can also cause irrational interpretations and judgments.

Cognitive biases can stem from limitations in memory and attention, as well as other mental mistakes. They often operate unconsciously, so individuals can be affected without realizing it. The mental shortcuts the brain uses to filter and process large amounts of information quickly are called heuristics.

The problem for humans isn't necessarily the judgments or conclusions that can result in suboptimal outcomes, but rather the flawed, weak or incomplete processes by which those judgments or conclusions are reached. For example, the decision to flee an approaching predator is usually a good choice. However, the impulsive choice to jump into a raging river as a means of escape could result in a poor outcome for the person involved.

It isn't possible to completely eliminate the brain's predisposition to taking shortcuts, but understanding that biases exist can be useful when making decisions.

Stereotypes are a common example of cognitive bias. Also referred to as implicit bias, a stereotype is the belief or supposition that one gender, age, racial or social group is better or worse than another at certain tasks or abilities. Stereotypes are incorrect and undesirable, but they can linger in the minds of bosses, life partners and general society, causing negative interactions and outcomes for those being stereotyped.

Key causes of cognitive bias

Recognizing and mitigating cognitive bias is difficult, because it comes from a variety of causes:

- Cognitive ability. People can only know, recall and process a finite amount of information within a limited amount of time. This restricts their ability to consider all of the issues and details involved with a decision. As a result, some relevant details are forgotten, never known at all or not fully considered, which leads to bias.

- Emotions. Human emotion causes bias. For example, love of a spouse might blunt a response to physical or emotional abuse, where such abuse would never be tolerated from a stranger. It's impossible for people to make all decisions objectively without emotion.

- Personal motivations. People often make decisions based on what motivates them. They reach a decision based on achieving a goal even when that decision might be wrong or unhealthy. For example, a person on a diet might sacrifice necessary nutrition to reduce calories and lose weight.

- Societal pressure. Social conventions, such as values, behaviors and goals, can influence people's decisions -- even when they're in conflict with a person's own preferences or beliefs. For example, a person might opt to marry because of family and social pressure rather than a personal belief in the institution.

- Time pressure. The mental shortcuts involved in heuristics and biases are a byproduct of evolution that let people process information quickly with limited mental effort. It's a manifestation of the fight-or-flight response. The goal isn't to make the best decision with all of the salient facts, but rather to reach an adequate decision and react as fast as possible.

- Effects of aging. Although age isn't a guarantee of cognitive bias, the tendency for aging people to overlook, undervalue or simply ignore new and changing information can lead to cognitive bias. Consider the aging parent who avoids new technologies because they lose the mental flexibility to adopt and use something new, preferring to use familiar alternatives even though they might not always provide optimal outcomes.

Cognitive bias vs. logical fallacy

Cognition relates to the ways in which the brain normally operates. Logic refers to more abstract reasoning and decision-making. This creates two different levels of potential flaws in human thinking. These flaws can occur separately or together.

- A cognitive bias occurs when a person's judgment is impaired because of natural human tendencies or predispositions that are hard to recognize.

- A logical fallacy or error occurs when a person's judgment is impaired because of mistakes in reasoning or flaws in an underlying argument.

Suppose a politician gives a speech advocating investments in a new technology. A voter doesn't like that politician and cites allegations of misconduct. This example contains a cognitive bias: disliking the politician because of unproven allegations. It also contains a logical fallacy: rejecting the politician's suggestion because of those allegations. The latter is known as an ad hominem fallacy. Even if the allegations against the politician are proven true, that wouldn't necessarily negate the societal value of more government support for the new technology.

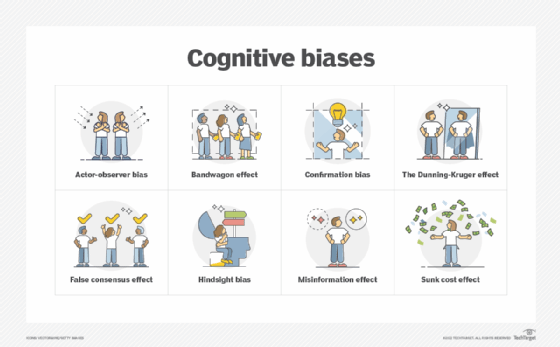

Types of cognitive bias

A continually evolving list of common cognitive biases has been identified over the last six decades of research on human judgment and decision-making in cognitive psychology, social psychology and behavioral economics. They include the following:

- Actor-observer bias. This is the tendency for an individual to credit their own situation to external causes while ascribing other people's behaviors to internal causes.

- Affect heuristic. This is the tendency for a person's mood or emotional state, known as their affect, to influence decisions. For example, an angry person might say something hurtful that they would never say when calm.

- Algorithm bias. This occurs when a computer algorithm systematically favors or discriminates against certain groups or individuals based on factors such as race, gender or socioeconomic status.

- Anchoring bias. This is the tendency for the brain to rely too much on the first piece of information it receives when making decisions.

- Attentional bias. This is the tendency for an individual to pay attention to a single object or idea while ignoring others.

- Availability bias. This is the tendency for the brain to conclude that a known instance is more representative of the whole than is actually the case.

- Availability heuristic. This is the tendency to rely on information that comes to mind quickly when making decisions about the future.

- The Baader-Meinhof phenomenon. Also known as the frequency illusion, this is the sense of seeing new patterns everywhere once we become aware of them.

- Bandwagon effect. This is the tendency for the brain to conclude that something must be desirable because other people desire it.

- Bias blind spot. This is the tendency for the brain to recognize another's bias but not its own.

- Clustering illusion. This is the tendency for the brain to want to see a pattern in what is a random sequence of numbers or events.

- Confirmation bias. Sometimes called belief bias, this is the tendency for the brain to value new information that supports existing ideas over information that contradicts them.

- The Dunning-Kruger effect. This is the tendency for an individual with limited knowledge or competence in a given field to overestimate their own skills in that field.

- Exclusion bias. This occurs when certain data points are left out of a study or analysis. The result is a skewed or incomplete representation of the population, which can affect the accuracy of the results.

- False consensus effect. This is the tendency for an individual to overestimate how much other people agree with them.

- Framing effect. This is the tendency for the brain to arrive at different conclusions when reviewing the same information, depending on how the information is presented.

- Functional fixedness. This is the tendency to see objects as only being used in one specific way.

- Groupthink. This is the tendency for the brain to value consensus over independent judgment.

- Halo effect. This is the tendency for a person's impression in one area to influence an opinion in another area.

- Hindsight bias. This is the tendency to interpret past events as more predictable than they actually were.

- Hyperbolic discounting. This is the tendency to prefer an immediate payoff over a later one that might be bigger or better in some way.

- Misinformation effect. This is the tendency for information that appears after an event to interfere with the memory of an original event.

- Negativity bias. This is the tendency for the brain to subconsciously place more significance on negative events than positive ones.

- Prejudice bias. This is the tendency to rely on preconceived opinions, attitudes or viewpoints when interpreting or acting on information, leading to unfair or discriminatory treatment based on irrelevant characteristics such as race, gender or age.

- Proximity bias. This is the tendency to give preferential treatment to people who are physically close. For example, a local worker might be considered for a raise before a remote worker because they're in the immediate vicinity of their supervisor.

- Recency bias. This is the tendency for the brain to subconsciously place more value on the last information it received about a topic.

- Representativeness heuristic. This is the tendency for a person to evaluate a situation and make decisions based on another similar situational model already in mind. It looks similar, so the conclusions are also similar even when the surrounding facts might be different.

- Sample bias. This occurs when the sample selected for a study doesn't represent the entire population, leading to results that aren't generalizable.

- Self-serving bias. This is the tendency for an individual to blame external forces when bad events happen but give themselves credit when good events happen.

- Sunk cost effect. Also called the sunk cost fallacy, this is the tendency for the brain to continue investing in something that clearly isn't working to avoid failure.

- Survivorship bias. This is the tendency for the brain to focus on positive outcomes while overlooking negative ones. A related phenomenon is the ostrich effect, in which people metaphorically bury their heads in the sand to avoid bad news.
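Some of the biases in this list can be expressed numerically. As an illustration of hyperbolic discounting, the sketch below uses a commonly cited one-parameter model in which the perceived value of a payoff is the amount divided by (1 + k × delay). The model, the rate `k = 0.01` per day and the dollar amounts are assumptions chosen for illustration; they don't come from this article.

```python
def hyperbolic_value(amount: float, delay_days: float, k: float = 0.01) -> float:
    """Perceived present value under the assumed model: amount / (1 + k * delay)."""
    return amount / (1.0 + k * delay_days)


def preferred(smaller_sooner, larger_later, k: float = 0.01) -> str:
    """Return 'sooner' or 'later', whichever option has the higher perceived value."""
    s_amount, s_delay = smaller_sooner
    l_amount, l_delay = larger_later
    if hyperbolic_value(s_amount, s_delay, k) >= hyperbolic_value(l_amount, l_delay, k):
        return "sooner"
    return "later"


# Choice made today: 100 now vs. 110 in 30 days.
near = preferred((100, 0), (110, 30))
# The same choice pushed a year out: 100 in 365 days vs. 110 in 395 days.
far = preferred((100, 365), (110, 395))
print(near, far)
```

Under these assumed numbers, the smaller immediate payoff wins when it's available today, but the larger payoff wins when both options are a year away. The 30-day gap is identical in both cases, which is why the immediate preference is considered a bias and not a consistent valuation of time.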

Signs and effects of bias

Because cognitive bias is often an unconscious process, it's easier for an individual to recognize bias in other people than in themselves. However, the following behaviors can be signs of bias:

- Attributing one's own successes to oneself while attributing other people's successes to luck.

- Relying on early or initial information and failing to adapt or change one's thinking based on new information.

- Assuming more knowledge of a topic than one actually has.

- Insisting on blaming outside factors instead of oneself.

- Paying attention only to what confirms one's opinions.

- Assuming everyone shares one's own opinions.

Individuals should try to avoid these behaviors, because each one affects, at a basic level, how a person interprets the world around them. Even an individual who is objective, logical and able to evaluate their surroundings accurately should be wary of adopting new unconscious biases.

Impact of cognitive bias on software development and data analytics

Cognitive biases can affect individuals by changing their world views or influencing their work. For example, in technical fields, cognitive biases can affect software data analytics and machine learning (ML) models.

Being aware of how human bias can obscure analytics is an important first step toward preventing it from happening. While data analytics tools can help business executives make data-driven decisions, it's still up to humans to select what data should be analyzed. This is why it's important for business managers to understand that cognitive biases that occur when selecting data can cause digital tools used in predictive analytics and prescriptive analytics to generate false results.

If a data analyst used predictive modeling without first examining data for bias, they could end up with unexpected results. These biases could also influence developer actions. Some examples of cognitive bias that can inadvertently affect algorithms are stereotyping, the bandwagon effect, priming, selective perception and confirmation bias.

Cognitive bias can also lead to machine learning bias, which is a phenomenon that occurs when an algorithm produces systemically prejudiced results based on assumptions made in the ML process. Machine learning bias often originates from poor or incomplete data that results in inaccurate predictions. Individuals who design and train ML systems can also introduce bias, because these algorithms can reflect the unintended cognitive biases of the developers. Some example types of biases that affect ML include prejudice bias, algorithm bias, sample bias, measurement bias and exclusion bias.

Consequently, ML projects demand careful attention to data quality methodologies and data sources, such as public, open source and government repositories, to identify potential data bias and take decisive steps to mitigate bias in ML model training.

Tips for overcoming and preventing bias

To limit cognitive bias and the overconfidence it can cause, organizations should check that the data used to train ML models is comprehensive.

Data scientists developing ML algorithms should shape data samples in a way that minimizes algorithmic and other types of ML bias. Decision-makers should evaluate when it's appropriate to apply ML technology. Data associated with people should be representative of different races, genders, backgrounds and cultures.
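One way to check representativeness is to compare each group's share of the training sample with the share expected in the population the model will serve. The following is a minimal sketch of that check in plain Python. The function name `representation_gaps`, the 5% tolerance and the example data are hypothetical, and choosing a suitable reference population is a judgment call the code doesn't make.

```python
from collections import Counter


def representation_gaps(records, attribute, reference_shares, tolerance=0.05):
    """Compare each group's share of `records` with an expected share.

    Returns {group: (observed_share, expected_share)} for every group whose
    observed share differs from the expected share by more than `tolerance`.
    """
    counts = Counter(r[attribute] for r in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if abs(observed - expected) > tolerance:
            gaps[group] = (round(observed, 3), expected)
    return gaps


# Hypothetical training sample: 80 records from group "a", 20 from group "b".
sample = [{"group": "a"}] * 80 + [{"group": "b"}] * 20
# Hypothetical reference population: an even split.
reference = {"a": 0.5, "b": 0.5}

print(representation_gaps(sample, "group", reference))
```

An empty result means every listed group is within the tolerance. It doesn't show that the data is free of bias, only that this one imbalance wasn't detected.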

Awareness, good governance and the application of critical thinking are ways to prevent ML bias. This means the following actions should be taken:

- Appropriate training data should be selected.

- Systems should be tested and validated to ensure the results don't reflect bias.

- Systems should be monitored as they perform their tasks, especially if they continue to learn or refine training over time.

- Additional tools and resources should be used, such as Google's What-If Tool or IBM's AI Fairness 360 open source toolkit.
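As a rough illustration of the testing and validation step, the sketch below compares how often a model produces a positive prediction for each group and reports the ratio of the lowest rate to the highest. It's written in plain Python and doesn't use the API of either toolkit named above. The function names and sample predictions are hypothetical.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (1) within each group."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical model outputs for ten people in two groups.
preds = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]
groups = ["a", "a", "a", "a", "a", "b", "b", "b", "b", "b"]

rates = selection_rates(preds, groups)
ratio = disparate_impact_ratio(rates)
print(rates, ratio)
```

A ratio well below 1.0 suggests the model treats the groups differently and is worth investigating. A commonly cited rule of thumb flags ratios under 0.8, but the right threshold depends on the task, and a single metric can't establish that a system is fair.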

Organizations can prevent bias using both automated and human action. However, they might not be able to remove it all. In addition, not all bias is inherently damaging. For example, algorithmic bias can include the process an algorithm uses to determine how to sort and organize data. It's important to ensure that the type of bias isn't inherently harmful and makes sense for the task at hand.
