What is Bayes' theorem? How is it used in machine learning?

Bayes' theorem is a mathematical formula used in probability theory to calculate conditional probability, i.e., the revised likelihood of an outcome occurring given the knowledge of a related condition or previous outcome. It enables the updating of predictions as new data becomes available, finding posterior probability by incorporating prior probability.

Sometimes called Bayes' rule or Bayes' law, the theorem is named after Thomas Bayes, an 18th-century English mathematician and Presbyterian minister. Bayes documented the theorem in his paper "An Essay Towards Solving a Problem in the Doctrine of Chances," published posthumously in 1763.

Bayes' work also laid the foundation for Bayesian statistics, an approach to statistics that treats probability as a degree of belief that is revised as evidence accumulates. Bayesian statistics is closely related to the subjectivist approach to epistemology, which emphasizes the role of probability in empirical learning, and has been influential within the disciplines of probability and machine learning (ML).

Bayes' theorem's real-world applications are diverse. For example, it could help an anthropologist determine the likelihood of someone enjoying soccer given that they grew up in England; assist a scientist in calculating the probability of a patient having a specific disease given a diagnostic test's accuracy rate; or enable an analyst to estimate the likelihood of a financial downfall in a hypothetical future scenario.

Understanding conditional probability and Bayes' theorem

Bayes' theorem hinges on the principles of conditional probability. To illustrate, consider a simple card game where winning requires picking a queen from a full deck of 52 cards. The probability of picking a queen from the deck is calculated by dividing the number of queens (4) by the total number of cards (52). Thus, the probability of winning by picking a queen is approximately 7.69%.

Now, imagine picking a card and placing it face down. The dealer then says that the chosen card is a face card. This new condition influences the probability of winning. To calculate this conditional probability, use the equation P(A|B) = P(A ∩ B) / P(B), where P represents probability, | represents "given that," A represents the event of interest and B represents the known condition.

Here, the probability of A (picking a queen) given B (the card is a face card) equals the probability of the card being both a queen and a face card (4/52) divided by the probability of the card being a face card (12/52). This simplifies to approximately 33.33%, as there are 4 queens among the 12 face cards.

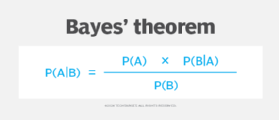
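The card game calculation can also be checked by counting. The following is a minimal Python sketch, not a definitive implementation; the names `DECK`, `probability`, `is_queen` and `is_face` are illustrative assumptions, and face cards are taken to be jacks, queens and kings, matching the count of 12 used above.

```python
from fractions import Fraction
from itertools import product

RANKS = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
SUITS = ["clubs", "diamonds", "hearts", "spades"]
DECK = list(product(RANKS, SUITS))  # 52 (rank, suit) pairs


def probability(event, cards=DECK):
    """Fraction of cards for which event(card) is true."""
    return Fraction(sum(1 for card in cards if event(card)), len(cards))


def is_queen(card):
    return card[0] == "Q"


def is_face(card):
    # Assumption: face cards are jacks, queens and kings.
    return card[0] in {"J", "Q", "K"}


p_face = probability(is_face)                                    # P(B) = 12/52
p_queen_and_face = probability(lambda c: is_queen(c) and is_face(c))  # P(A ∩ B) = 4/52

# Definition of conditional probability: P(A|B) = P(A ∩ B) / P(B)
p_queen_given_face = p_queen_and_face / p_face
print(p_queen_given_face)  # 1/3
```

Using `Fraction` keeps the arithmetic exact, so the result is 1/3 rather than a rounded decimal.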

Bayes' theorem extends this concept to situations where direct probabilities are unknown. It helps calculate conditional probability in complex scenarios by using inverse probabilities, which are often easier to determine. The theorem is expressed as follows:

P(A|B) = (P(A) * P(B|A)) / P(B)

Here's how it applies to the card game example:

- A is the event of drawing a queen card.

- B is the event of drawing a face card.

- P(A) is the probability of drawing a queen (7.69%).

- P(B) is the probability of drawing a face card (23.08%).

- P(A|B) is the probability of drawing a queen given that the chosen card is a face card.

- P(B|A) is the probability of drawing a face card given that the chosen card is a queen (100%).

Inputting these numbers into Bayes' theorem results in the following:

P(A|B) = (7.69% * 100%) / 23.08% ≈ 33.33%
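The same substitution can be written as a short Python sketch. The function name `bayes` and its parameter names are illustrative assumptions rather than part of any library; exact fractions are used instead of the rounded percentages.

```python
from fractions import Fraction


def bayes(prior, likelihood, evidence):
    """Return P(A|B) = P(A) * P(B|A) / P(B)."""
    if evidence == 0:
        raise ValueError("P(B) must be nonzero")
    return prior * likelihood / evidence


p_queen = Fraction(4, 52)          # P(A)
p_face_given_queen = Fraction(1)   # P(B|A): every queen is a face card
p_face = Fraction(12, 52)          # P(B)

posterior = bayes(p_queen, p_face_given_queen, p_face)
print(posterior, float(posterior))  # 1/3, about 0.3333
```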

While this particular calculation could have been simplified with basic conditional probability, real-world scenarios are often more complex. For instance, in medical diagnostics, patient statistics are often estimated across thousands of cases. Similarly, determining how many people in England are soccer fans would involve numerous variables. Bayes' theorem provides a structured method for deriving conditional probabilities in these types of multifaceted real-world circumstances.
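As a rough illustration of the diagnostic case, the sketch below applies Bayes' theorem to a test result. All numbers are hypothetical and chosen only for illustration: a 1% prevalence, a 95% chance that the test is positive when the disease is present, and a 5% chance that it is positive when the disease is absent. The denominator P(positive) is not given directly, so the sketch expands it with the law of total probability. The function name `posterior_positive` is an assumption.

```python
def posterior_positive(prevalence, sensitivity, false_positive_rate):
    """P(disease | positive test) via Bayes' theorem.

    P(positive) is expanded with the law of total probability:
    P(positive) = P(pos | disease) * P(disease) + P(pos | healthy) * P(healthy)
    """
    p_positive = sensitivity * prevalence + false_positive_rate * (1 - prevalence)
    return sensitivity * prevalence / p_positive


# Hypothetical inputs, not real clinical data.
p = posterior_positive(prevalence=0.01, sensitivity=0.95, false_positive_rate=0.05)
print(f"{p:.1%}")  # 16.1%
```

Under these assumed numbers, a positive result raises the probability of disease from 1% to about 16%, because false positives among the many healthy patients outnumber the true positives.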

Deriving the formula for Bayes' theorem

We know that Bayes' theorem is P(A|B) = (P(A) * P(B|A)) / P(B). But how did we get there?

To derive the formula, start with the definition of conditional probability:

P(A|B) = P(A ∩ B) / P(B)

Solve for P(A ∩ B) by multiplying both sides by P(B):

P(A ∩ B) = P(A|B) * P(B)

Similarly, the conditional probability P(B|A) is defined as follows:

P(B|A) = P(B ∩ A) / P(A)

Because P(B ∩ A) is the same as P(A ∩ B), we can write:

P(A ∩ B) = P(A) * P(B|A)

Now, we have two expressions for P(A ∩ B), which we can therefore set equal to each other:

P(B) * P(A|B) = P(A) * P(B|A)

To find the conditional probability P(A|B), divide both sides by P(B):

P(A|B) = (P(A) * P(B|A)) / P(B)

You have now derived Bayes' theorem. To solve for the probability of B given A instead, divide both sides of the same equality by P(A):

P(B|A) = (P(A|B) * P(B)) / P(A)
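Each step of the derivation can be checked numerically. The sketch below uses a different pair of events from the same 52-card deck, chosen here purely for illustration: A is drawing a heart and B is drawing a face card, so three cards (the jack, queen and king of hearts) belong to both events.

```python
from fractions import Fraction

p_a = Fraction(13, 52)       # P(A): the card is a heart
p_b = Fraction(12, 52)       # P(B): the card is a face card
p_a_and_b = Fraction(3, 52)  # P(A ∩ B): jack, queen or king of hearts

# Definitions of conditional probability.
p_a_given_b = p_a_and_b / p_b
p_b_given_a = p_a_and_b / p_a

# Both factorizations give the same joint probability.
assert p_a_given_b * p_b == p_a_and_b
assert p_b_given_a * p_a == p_a_and_b

# Bayes' theorem in both directions.
assert p_a_given_b == p_a * p_b_given_a / p_b
assert p_b_given_a == p_a_given_b * p_b / p_a

print(p_a_given_b, p_b_given_a)  # 1/4 3/13
```

The two conditional probabilities differ (1/4 versus 3/13), which is why the direction of conditioning matters when applying the formula.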

Real-world applications of Bayes' theorem

Bayes' theorem has diverse applications across various industries. The following are some example use cases:

- Business. Bayes' theorem can help businesses ascertain the probability of events given prior information. For example, it could be used to estimate supply chain losses due to a worker shortage, the effect of changes in currency exchange rates on product exports, or revenue increases from a new product or marketing plan.

- Finance. Bayes' theorem can come in handy for many financial evaluations. For instance, lenders can evaluate the risk a potential borrower might pose based on prior information. It could also be used to hypothesize future financial situations, such as a company's potential stock market losses given situations like a stock market crash or major world event.

- Insurance. Similar to financial evaluations, Bayes' theorem is useful in insurance for calculating risk probabilities, such as the likelihood of natural disasters, in light of known information. For example, insurers can estimate flood probabilities by considering factors such as property location, time of year and past weather patterns.

- AI and machine learning. In ML, Bayes' theorem underpins algorithms that help models form relationships between input data and predictive output. This can lead to more accurate models that adapt better to new and changing data.

- Medicine. Bayes' theorem is applicable in many medical contexts. For example, the theorem can help estimate how likely a patient is to have a condition given a test result, the test's accuracy and other health factors. It is also used in pharmaceutical drug testing and assessing disease risk levels.

- Theory and statistics. In research, Bayes' theorem helps create more accurate and realistic hypotheses and predictions. Therefore, it aids statisticians, anthropologists and other researchers in data-driven analyses and forecasts.

How is Bayes' theorem used in machine learning?

In ML, Bayes' theorem supports classification and decision-making by revising predictions based on learned data. It helps ML systems establish relationships between data and output, enabling revised predictions that can lead to more accurate decisions and actions, even with uncertain or incomplete data. In general, though, a model's estimates tend to become more accurate as more data is collected.
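One way to picture this kind of revision is a running estimate of a success rate that is updated after each batch of observations. The sketch below is an illustration under stated assumptions, not a method described above: it assumes a Beta prior over the unknown rate, for which the Bayesian update reduces to adding the observed counts to the prior's two parameters. The batch counts are hypothetical, and `update_beta` and `beta_mean` are illustrative names.

```python
def update_beta(alpha, beta, successes, failures):
    """Posterior Beta parameters after observing binary outcomes."""
    return alpha + successes, beta + failures


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


alpha, beta = 1.0, 1.0  # Assumption: uniform prior over the success rate
batches = [(3, 1), (6, 4), (30, 10)]  # Hypothetical (successes, failures) per batch

means = []
for successes, failures in batches:
    # The posterior from one batch becomes the prior for the next.
    alpha, beta = update_beta(alpha, beta, successes, failures)
    means.append(beta_mean(alpha, beta))
    print(f"Beta({alpha:.0f}, {beta:.0f}) -> estimated rate {means[-1]:.3f}")
```

Each batch shifts the estimate, and later batches move it less per observation because the accumulated counts carry more weight.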

The Bayesian approach in ML assigns a probability distribution to all elements, including model parameters and variables. It is often used in probabilistic models and provides a foundation for multiple ML algorithms and techniques, including the following:

- Naïve Bayes classifier. This common ML algorithm is used for classification tasks. It relies on Bayes' theorem to make classifications based on given information and assumes that different features are conditionally independent given the class.

- Bayes optimal classifier. This is a theoretical model that finds the most probable prediction by averaging over all possible models, weighted by their posterior probabilities given the training data.

- Bayesian optimization. This sequential design strategy searches for optimal outcomes based on prior knowledge. It is particularly useful for objective functions that are complex or noisy.

- Bayesian networks. Sometimes referred to as Bayesian belief networks, Bayesian networks are probabilistic graphical models that depict relationships among variables via conditional dependencies.

- Bayesian linear regression. This conditional modeling technique finds posterior probability through a linear regression model, where the mean of one variable is described by the linear combination of other variables.

- Bayesian neural networks. An extension of traditional neural networks, these models represent weights as probability distributions rather than fixed values. Incorporating this uncertainty through posterior distributions helps control overfitting and indicates how much confidence to place in a model's predictions.

- Bayesian model averaging. This approach averages predictions from different models to make predictions about new observations, with each considered model weighted by its model probability.
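To make the first item in the list concrete, the following is a minimal from-scratch sketch of a Naïve Bayes text classifier. It is a toy illustration under stated assumptions: the training messages are invented, the word-count model with add-one smoothing is one common variant among several, and the function names `train` and `predict` are hypothetical. Production work would more typically rely on an established library.

```python
import math
from collections import Counter, defaultdict


def train(docs):
    """Collect the counts needed for prediction from (label, words) pairs."""
    label_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for label, words in docs:
        label_counts[label] += 1
        word_counts[label].update(words)
        vocab.update(words)
    return label_counts, word_counts, vocab


def predict(model, words):
    """Return the label with the highest posterior score for the given words."""
    label_counts, word_counts, vocab = model
    total_docs = sum(label_counts.values())
    scores = {}
    for label, n_docs in label_counts.items():
        total_words = sum(word_counts[label].values())
        # log P(class) + sum of log P(word | class). The sum reflects the
        # "naive" assumption that words are independent given the class.
        score = math.log(n_docs / total_docs)
        for word in words:
            if word not in vocab:
                continue  # Ignore words never seen in training
            # Add-one smoothing avoids zero probabilities.
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


# Invented toy data, for illustration only.
training = [
    ("spam", ["win", "money", "now"]),
    ("spam", ["free", "money", "offer"]),
    ("ham", ["meeting", "at", "noon"]),
    ("ham", ["lunch", "offer", "at", "noon"]),
]
model = train(training)
print(predict(model, ["free", "money"]))           # spam
print(predict(model, ["meeting", "at", "noon"]))   # ham
```

The shared denominator P(B) is omitted because it is the same for every class and does not change which class scores highest. Log probabilities are summed instead of multiplying raw probabilities to avoid numerical underflow on longer messages.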

Bayesian ML's ability to improve prediction accuracy using data makes it useful for many ML tasks, such as fraud detection, spam filtering, medical diagnostics, weather predictions, forensic analysis, robot decision-making and more.

Bayes' theorem advantages and disadvantages

Bayes' theorem has significant benefits for calculating conditional probability in complex scenarios, but it also presents some challenges due to its complexity and reliance on prior probabilities.

Advantages of Bayes' theorem include the following:

- Combines information in an accessible and interpretable way.

- Improves the accuracy of predictions and hypotheses.

- Accounts for unknowns and uncertainties in the data.

- Produces more realistic and reliable predictions.

- Allows for data adjustments, increasing flexibility.

Disadvantages or challenges associated with Bayes' theorem include the following:

- Requires a prior probability, which can sometimes be subjective or difficult to determine.

- Focuses narrowly on finding posterior probability given prior probability.

- Is computationally complex, which can result in high compute costs, especially in ML use cases with large volumes of data and numerous parameters.
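The dependence on the prior can be seen in a short sketch. The numbers below are hypothetical: the same evidence, a positive result from a test with an assumed 95% true positive rate and 5% false positive rate, is combined with three different priors. The function name `posterior` is an illustrative assumption.

```python
def posterior(prior, true_positive_rate, false_positive_rate):
    """P(condition | positive result) for a given prior P(condition)."""
    evidence = true_positive_rate * prior + false_positive_rate * (1 - prior)
    return true_positive_rate * prior / evidence


# Same evidence, three hypothetical priors.
results = {prior: posterior(prior, 0.95, 0.05) for prior in (0.001, 0.01, 0.1)}
for prior, value in results.items():
    print(f"prior={prior:<5} posterior={value:.3f}")
```

With identical evidence, the posterior ranges from roughly 2% to roughly 68% across these priors, which is why a subjective or poorly estimated prior can dominate the conclusion.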