How will the GDPR and AI clash affect enterprise applications?

Compliance rules for GDPR and AI implementation may not work together seamlessly. Experts say AI's automated decisions on customer data are risky and need to be explainable.

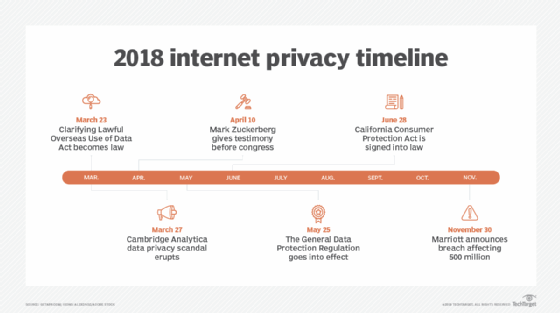

Six months into GDPR compliance, the fines have been a fraction of the allowable amount for major breaches of the law. However, with the first multimillion-dollar fine hitting Google in 2019 and the California Consumer Privacy Act (CCPA) going into effect in 2020, the question remains: Will companies with AI on their to-do list be affected by complications stemming from the privacy laws?

In this Q&A, Stuart Battersby, chief technology officer at Chatterbox Labs, and Gartner senior analyst Lauren Maffeo dive headfirst into some of the biggest questions surrounding GDPR and AI implementation.

Editor's note: The following has been edited for clarity and brevity.

Will complying with GDPR complicate the unrestricted use of data for powering and researching AI algorithms?

Lauren Maffeo: GDPR will change big data as we know it. Specifically, it will impact data profiling -- the collection of personal data and subsequent use of that data to discern information about the data subject.

GDPR limits businesses' ability to gain key data about EU [European Union] citizens, such as age, location and socioeconomic status. Under GDPR, businesses that want to use these details must inform users of their intent to collect data, how they plan to do it and how they intend to use it. They must also give each person the right to explicitly object to or opt out of profiling, and they can't subject people to automated profiling. All of this will impact the data sets used to train AI products.

Stuart Battersby: Yes, it will complicate things. AI, generally, is a two-phase process. There is the training in which the algorithms learn certain patterns across a whole cohort or data set. The next step is prediction, where some decision is made on each data point -- each customer transaction or customer application, for example.

Both sides of the process have concerns, but one of the main concerns for GDPR is where automated decisions are made on the individual.
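The two phases Battersby describes can be sketched in a few lines of Python. This is a toy illustration only: the loan scenario, the `income` and `repaid` fields, and the midpoint "model" are assumptions made for the example, not anything described in the interview.

```python
from statistics import mean


def train(cohort):
    """Training phase: learn one pattern from the whole data set.

    The 'model' here is just the midpoint between the average income of
    applicants who repaid and those who defaulted (a toy stand-in for
    real learning).
    """
    repaid = [row["income"] for row in cohort if row["repaid"]]
    defaulted = [row["income"] for row in cohort if not row["repaid"]]
    return {"income_threshold": (mean(repaid) + mean(defaulted)) / 2}


def predict(model, applicant):
    """Prediction phase: an automated decision about one individual."""
    return applicant["income"] >= model["income_threshold"]


# Hypothetical historical data used for training.
cohort = [
    {"income": 52000, "repaid": True},
    {"income": 48000, "repaid": True},
    {"income": 21000, "repaid": False},
    {"income": 19000, "repaid": False},
]

model = train(cohort)                            # learned across the cohort
decision = predict(model, {"income": 30000})     # made about one person
```

The call to `predict` is the step that touches a single customer, which is where the concern about automated decisions on the individual arises.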

In the context of GDPR and AI, do companies have a legal obligation to limit personal data usage?

Battersby: The critical bit in the context of AI is that the companies have legal obligations around automated processing of data. If a decision is made about a customer in an automated manner, then this must be explained to the end customer.

Maffeo: Yes, any business that has personally identifiable information on EU citizens must comply with GDPR, regardless of where that business is located.

What are GDPR's implications on machine learning algorithms and AI, which require large amounts of data?

Battersby: There is a real concern here that black box AI and machine learning systems that offer no explanations and require the long-term storage of large customer data sets will simply not be permitted under the GDPR. There has been a heavy focus on [producing] highly accurate systems at the cost of explaining the output of these systems to both businesses and customers.

How can businesses that deploy AI organize, analyze and make their data compliant with data protection laws such as CCPA and GDPR?

Maffeo: Under GDPR, businesses are responsible for confirming whether collecting personal data from users is essential and whether they need to get consent from those users. The general best practice regarding the use of AI data is to start with the acceptable legal standard. The challenge is that we don't know what an acceptable legal standard looks like in relation to GDPR. Until the first case goes to court, compliance confusion will abound.

Battersby: Transparency is key. It's critical for end customers to know why decisions were made about them. The more detailed and interpretable this is, the better.

What is the likelihood of industry-wide non-compliance with GDPR?

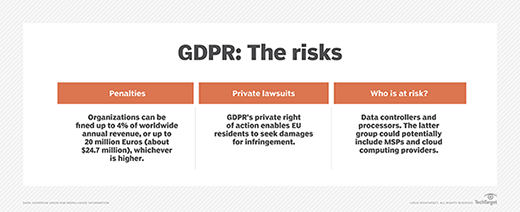

Maffeo: A GetApp survey of 190 U.S.-based small business owners found that 50% didn't know what GDPR is. That's a problem considering that businesses found guilty of non-compliance can be fined up to 20 million euros [roughly $22,647,000 USD] or 4% of global turnover.
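As a rough worked example of that ceiling: for the most serious infringements, GDPR sets the maximum at 20 million euros or 4% of total worldwide annual turnover, whichever is higher. The function name and the turnover figures below are hypothetical, and the result is only the upper bound, not a prediction of any actual penalty.

```python
def max_gdpr_fine_eur(annual_global_turnover_eur):
    """Upper bound of the higher GDPR fine tier, in euros.

    The ceiling is the greater of a flat 20 million euros and 4% of
    worldwide annual turnover. Regulators may impose far less.
    """
    flat_cap = 20_000_000
    turnover_cap = annual_global_turnover_eur * 4 / 100
    return max(flat_cap, turnover_cap)


# Hypothetical companies: one with 100 million euros in turnover,
# one with 1 billion euros.
small_company_cap = max_gdpr_fine_eur(100_000_000)
large_company_cap = max_gdpr_fine_eur(1_000_000_000)
```

For the smaller company the flat 20 million euro figure applies, because 4% of its turnover is only 4 million euros. For the larger one the 4% figure dominates.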

How does explainable AI help companies with GDPR compliance?

Battersby: Explainable AI is quite a general term and not all of it will help. A lot of research into explainable AI is trying to explain the algorithm or the model. They answer questions such as, 'Across our customer base, what did the algorithm learn from the data?' or 'Within our deep learning environment, what do the learned layers in the neural network represent?'

This is on the training side of things. For GDPR, this doesn't solve the [potential] problem because the requirement is to tell individuals why an automated decision was made about them. [In a system that flags hate speech, for example,] what is needed is an explanation of why that individual piece of content was flagged for removal, not a general explanation of what hate speech is across the whole user base.
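The difference between explaining the model and explaining one decision can be sketched with a simple linear scoring model. This is a minimal illustration under stated assumptions: the feature names, weights and loan scenario are invented for the example, and per-feature contributions are only one basic way to explain a linear model, not the approach of any vendor mentioned here.

```python
# Hypothetical learned weights. Inspecting these is a model-level
# (global) explanation: what the algorithm learned across all customers.
WEIGHTS = {"missed_payments": -2.0, "years_at_address": 0.5, "income_in_10k": 1.0}
BIAS = -3.0


def score(features):
    """Linear score for one applicant; zero or above means approval."""
    return BIAS + sum(WEIGHTS[name] * value for name, value in features.items())


def explain_decision(features):
    """Decision-level (local) explanation for one individual.

    Each feature's contribution is its weight times this person's value,
    ranked by how strongly it pushed the score up or down.
    """
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return {"approved": score(features) >= 0, "reasons": ranked}


# One hypothetical applicant.
applicant = {"missed_payments": 3, "years_at_address": 2, "income_in_10k": 4}
explanation = explain_decision(applicant)
```

Here the weights describe the model as a whole, while `explanation["reasons"]` says which of this applicant's own details drove the outcome, which is the kind of answer an individual would need.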

Does the recent American AI Initiative executive order by U.S. President Donald Trump have any impact on the relationship between GDPR and AI?

Maffeo: GDPR legislation protects people in the European Union and European Economic Area [EEA]. So, inability to collect data on EU citizens could theoretically hinder [U.S.] AI advancement. That said, GDPR does not cover people living in the U.S., which means that U.S. citizens don't have the same entitlements to personal data privacy as EU/EEA citizens.

The executive order pledges to 'enhance access to high-quality and fully traceable Federal data, models, and computing resources to increase the value of such resources for AI R&D, while maintaining safety, security, privacy, and confidentiality protections consistent with applicable laws and policies.'

But unlike in the EU under GDPR, there are few applicable laws and policies protecting the safety, security, privacy and confidentiality of U.S. citizens' personal data. This limited legal precedent is good for building large data sets to train AI, but falls short on protecting people's individual data.